Transcription

Lecture 2: Image Classification pipeline

Fei-Fei Li & Justin Johnson & Serena Yeung

April 5, 2018

Administrative: Piazza

For questions about midterm, poster session, projects, etc., use Piazza!

SCPD students: Use your @stanford.edu address to register for Piazza; contact scpd-customerservice@stanford.edu for help.

Administrative: Assignment 1

Out yesterday, due 4/18 11:59pm

- K-Nearest Neighbor
- Linear classifiers: SVM, Softmax
- Two-layer neural network
- Image features

Administrative: Friday Discussion Sections

Fridays 12:30pm - 1:20pm in Skilling Auditorium

Hands-on tutorials, with more practical detail than main lecture

Check the course website. This Friday: Python / numpy / Google Cloud setup

Administrative: Python / Numpy

Administrative: Google Cloud

We will be using Google Cloud in this class

We will be distributing coupons to all enrolled students

See our tutorial here for walking through Google Cloud setup: http://cs231n.github.io/gce-tutorial/

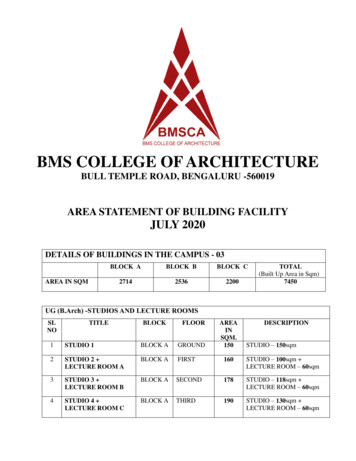

Image Classification: A core task in Computer Vision

(assume given set of discrete labels) {dog, cat, truck, plane, ...}

Label for the example image: cat

This image by Nikita is licensed under CC-BY 2.0

The Problem: Semantic Gap

What the computer sees: an image is just a big grid of numbers between [0, 255], e.g. 800 x 600 x 3 (3 channels RGB)

This image by Nikita is licensed under CC-BY 2.0
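A minimal sketch of "a big grid of numbers", assuming NumPy and a randomly generated stand-in for a decoded photo (the array contents are made up; only the 800 x 600 x 3 shape and the [0, 255] range come from the slide):

```python
import numpy as np

# Hypothetical stand-in for a decoded RGB photo: 600 rows, 800 columns, 3 channels.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(600, 800, 3), dtype=np.uint8)

print(image.shape)        # (height, width, channels)
print(image.dtype)        # 8-bit unsigned integers cover exactly [0, 255]
print(image.size)         # total count of numbers the classifier has to work with
print(image[0, 0])        # the three channel values of the top-left pixel
```

Nothing in this array says "cat"; the label has to be inferred from roughly 1.4 million raw intensity values.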

Challenges: Viewpoint variation

All pixels change when the camera moves!

This image by Nikita is licensed under CC-BY 2.0

Challenges: Illumination

This image is CC0 1.0 public domain

Challenges: Deformation

This image by Umberto Salvagnin is licensed under CC-BY 2.0

This image by sare bear is licensed under CC-BY 2.0

This image by Tom Thai is licensed under CC-BY 2.0

Challenges: Occlusion

This image is CC0 1.0 public domain

This image by jonsson is licensed under CC-BY 2.0

Challenges: Background Clutter

This image is CC0 1.0 public domain

Challenges: Intraclass variation

This image is CC0 1.0 public domain

An image classifier

Unlike e.g. sorting a list of numbers, no obvious way to hard-code the algorithm for recognizing a cat, or other classes.

Attempts have been made

Find edges, find corners, then ...?

John Canny, “A Computational Approach to Edge Detection”, IEEE TPAMI 1986

Machine Learning: Data-Driven Approach

1. Collect a dataset of images and labels
2. Use Machine Learning to train a classifier
3. Evaluate the classifier on new images

Example training set

First classifier: Nearest Neighbor

- Train: memorize all data and labels
- Predict: predict the label of the most similar training image

Example Dataset: CIFAR10

- 10 classes
- 50,000 training images
- 10,000 testing images

Test images and nearest neighbors

Alex Krizhevsky, “Learning Multiple Layers of Features from Tiny Images”, Technical Report, 2009.

Distance Metric to compare images

L1 distance: $d_1(I_1, I_2) = \sum_p |I_1^p - I_2^p|$ (take pixel-wise absolute differences, then add)
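As a small worked example of the L1 distance, here is a sketch assuming NumPy and two made-up 2x2 single-channel images (the pixel values and the helper name `l1_distance` are illustrative, not taken from the slide):

```python
import numpy as np

def l1_distance(a, b):
    """Sum of pixel-wise absolute differences between two same-shaped images."""
    # Cast to a signed type first: subtracting uint8 arrays directly would wrap around.
    return int(np.abs(a.astype(np.int64) - b.astype(np.int64)).sum())

# Hypothetical images, chosen only to make the arithmetic easy to follow.
test_img = np.array([[56, 32], [90, 23]], dtype=np.uint8)
train_img = np.array([[10, 20], [8, 128]], dtype=np.uint8)

# |56-10| + |32-20| + |90-8| + |23-128| = 46 + 12 + 82 + 105
print(l1_distance(test_img, train_img))  # 245
```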

Nearest Neighbor classifier

- Train: memorize training data
- Predict, for each test image: find closest train image, predict label of nearest image
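The two steps above can be sketched as follows. This is a minimal version assuming NumPy, L1 distance, and images already flattened into rows; the class and method names are illustrative choices rather than the code shown on the original slide.

```python
import numpy as np

class NearestNeighbor:
    def train(self, X, y):
        # X: (N, D) array with one flattened image per row, y: (N,) labels.
        # "Training" only memorizes the data.
        self.X_train = np.asarray(X, dtype=np.float64)
        self.y_train = np.asarray(y)

    def predict(self, X):
        X = np.asarray(X, dtype=np.float64)
        preds = np.empty(X.shape[0], dtype=self.y_train.dtype)
        for i, x in enumerate(X):
            # L1 distance from this test row to every stored training row.
            dists = np.abs(self.X_train - x).sum(axis=1)
            preds[i] = self.y_train[np.argmin(dists)]
        return preds

# Toy data (made up): three training points with three distinct labels.
X_train = np.array([[0, 0], [10, 10], [0, 10]])
y_train = np.array([0, 1, 2])
clf = NearestNeighbor()
clf.train(X_train, y_train)
pred = clf.predict(np.array([[1, 1], [9, 8], [2, 9]]))
print(pred)  # [0 1 2]
```

Each prediction scans all N stored examples, so the cost per test image grows linearly with the size of the training set.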

Nearest Neighbor classifier

Q: With N examples, how fast are training and prediction?

A: Train O(1), predict O(N)

This is bad: we want classifiers that are fast at prediction; slow for training is ok

What does this look like?

K-Nearest Neighbors

Instead of copying label from nearest neighbor, take majority vote from K closest points

K = 1, K = 3, K = 5
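A minimal sketch of the majority vote, assuming NumPy, L2 distance, and a made-up 2-D toy set in which one point of class 1 sits inside a cluster of class 0. The function name `knn_predict` is hypothetical.

```python
import numpy as np
from collections import Counter

def knn_predict(X_train, y_train, x, k):
    """Label of query x by majority vote among its k nearest training points."""
    dists = np.sqrt(((X_train - x) ** 2).sum(axis=1))   # L2 distance to every point
    nearest = np.argsort(dists, kind="stable")[:k]      # indices of the k closest
    votes = Counter(y_train[nearest].tolist())
    return votes.most_common(1)[0][0]

# Toy data: three class-0 points around the query, one stray class-1 point very close to it.
X_train = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [5.0, 5.0], [0.4, 0.4]])
y_train = np.array([0, 0, 0, 1, 1])
query = np.array([0.5, 0.5])

print(knn_predict(X_train, y_train, query, k=1))  # 1: copies the stray neighbor
print(knn_predict(X_train, y_train, query, k=3))  # 0: the vote outweighs it
```

With K = 1 the single stray point decides the label; with K = 3 it is outvoted, which is the smoothing effect the decision-boundary pictures illustrate.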

What does this look like?

K-Nearest Neighbors: Distance Metric

L1 (Manhattan) distance: $d_1(I_1, I_2) = \sum_p |I_1^p - I_2^p|$

L2 (Euclidean) distance: $d_2(I_1, I_2) = \sqrt{\sum_p (I_1^p - I_2^p)^2}$
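One practical difference between the two metrics is that L1 depends on the choice of coordinate axes while L2 does not. The sketch below, assuming NumPy and an arbitrary 45-degree rotation chosen for illustration, checks this on a single vector:

```python
import numpy as np

v = np.array([1.0, 0.0])

# Rotate the coordinate frame by 45 degrees (an arbitrary illustrative angle).
theta = np.pi / 4
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
w = R @ v

l1_before, l1_after = np.abs(v).sum(), np.abs(w).sum()
l2_before, l2_after = np.sqrt((v ** 2).sum()), np.sqrt((w ** 2).sum())

print(l1_before, l1_after)  # L1 length changes under rotation
print(l2_before, l2_after)  # L2 length is unchanged (up to rounding)
```

This suggests L1 may be the more natural choice when individual coordinates carry meaning of their own, and L2 when they do not; which one works better remains something to check empirically.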

K-Nearest Neighbors: Distance Metric

L1 (Manhattan) distance, K = 1

L2 (Euclidean) distance, K = 1

K-Nearest Neighbors: Demo os/knn/

Hyperparameters

What is the best value of k to use? What is the best distance to use?

These are hyperparameters: choices about the algorithm that we set rather than learn

Very problem-dependent. Must try them all out and see what works best.

Setting Hyperparameters

- Idea #1: Choose hyperparameters that work best on the data. BAD: K = 1 always works perfectly on training data. (Your Dataset)
- Idea #2: Split data into train and test, choose hyperparameters that work best on test data. BAD: No idea how algorithm will perform on new data. (train | test)
- Idea #3: Split data into train, val, and test; choose hyperparameters on val and evaluate on test. Better! (train | validation | test)
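Idea #3 can be sketched as follows. This is an illustration under stated assumptions: NumPy, a synthetic two-class 2-D dataset standing in for real images, a 60/20/20 split, and a hypothetical helper `knn_accuracy` that handles binary labels with odd k only.

```python
import numpy as np

def knn_accuracy(X_tr, y_tr, X_ev, y_ev, k):
    """Accuracy of k-NN (L2 distance, binary labels 0/1, odd k) on an evaluation set."""
    # Pairwise distances, shape (num_eval, num_train).
    d = np.sqrt(((X_ev[:, None, :] - X_tr[None, :, :]) ** 2).sum(axis=2))
    nearest = np.argsort(d, axis=1)[:, :k]
    votes = y_tr[nearest]                           # labels of the k nearest neighbors
    pred = (votes.mean(axis=1) > 0.5).astype(int)   # majority vote for 0/1 labels
    return float((pred == y_ev).mean())

# Synthetic data: two Gaussian blobs, shuffled.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (100, 2)), rng.normal(4, 1, (100, 2))])
y = np.repeat([0, 1], 100)
perm = rng.permutation(200)
X, y = X[perm], y[perm]

# train | validation | test
X_train, y_train = X[:120], y[:120]
X_val, y_val = X[120:160], y[120:160]
X_test, y_test = X[160:], y[160:]

# Choose k on the validation set only.
candidates = [1, 3, 5, 7]
val_acc = {k: knn_accuracy(X_train, y_train, X_val, y_val, k) for k in candidates}
best_k = max(val_acc, key=val_acc.get)

# Touch the test set exactly once, with the chosen k.
test_acc = knn_accuracy(X_train, y_train, X_test, y_test, best_k)
print(best_k, test_acc)
```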

Setting Hyperparameters

Idea #4: Cross-Validation: Split data into folds, try each fold as validation and average the results

[Figure: Your Dataset split as fold 1 | fold 2 | fold 3 | fold 4 | fold 5 | test, shown three times]

Useful for small datasets, but not used too frequently in deep learning
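A minimal sketch of Idea #4, assuming NumPy, 5 folds, and the same kind of synthetic two-class data as a stand-in for a real dataset. The held-out test split is omitted here for brevity, and `knn_accuracy` is a hypothetical helper for binary labels with odd k.

```python
import numpy as np

def knn_accuracy(X_tr, y_tr, X_ev, y_ev, k):
    """Accuracy of k-NN (L2 distance, binary labels 0/1, odd k) on an evaluation set."""
    d = np.sqrt(((X_ev[:, None, :] - X_tr[None, :, :]) ** 2).sum(axis=2))
    nearest = np.argsort(d, axis=1)[:, :k]
    pred = (y_tr[nearest].mean(axis=1) > 0.5).astype(int)
    return float((pred == y_ev).mean())

# Synthetic data standing in for the non-test part of the dataset.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (75, 2)), rng.normal(3, 1, (75, 2))])
y = np.repeat([0, 1], 75)
perm = rng.permutation(len(X))
X, y = X[perm], y[perm]

num_folds = 5
folds = np.array_split(np.arange(len(X)), num_folds)

candidates = [1, 3, 5, 7]
cv_mean, cv_std = {}, {}
for k in candidates:
    accs = []
    for i in range(num_folds):
        val_idx = folds[i]  # fold i plays the role of the validation set
        train_idx = np.concatenate([folds[j] for j in range(num_folds) if j != i])
        accs.append(knn_accuracy(X[train_idx], y[train_idx], X[val_idx], y[val_idx], k))
    cv_mean[k] = float(np.mean(accs))   # average over the folds
    cv_std[k] = float(np.std(accs))     # spread across folds

best_k = max(cv_mean, key=cv_mean.get)
print(cv_mean, best_k)
```

Every candidate k costs `num_folds` full evaluations, which is one reason this is less common when a single training run is expensive.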

Setting Hyperparameters

Example of 5-fold cross-validation for the value of k. Each point: single outcome. The line goes through the mean, bars indicate standard deviation.

(Seems that k ≈ 7 works best for this data)

k-Nearest Neighbor on images never used.

- Very slow at test time
- Distance metrics on pixels are not informative

[Figure: Original, Boxed, Shifted, Tinted] (all 3 images have same L2 distance to the one on the left)

Original image is CC0 public domain

k-Nearest Neighbor on images never used.

- Curse of dimensionality

Dimensions = 1: Points = 4; Dimensions = 2: Points = 4^2; Dimensions = 3: Points = 4^3
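The growth in the figure can be reproduced with a few lines of arithmetic. The sketch below assumes 4 sample points per axis, as in the figure, and extends the count to a 3072-dimensional input (the size of a flattened 32x32x3 image) purely as an illustration:

```python
points_per_axis = 4

# Number of points needed to cover the space at the same density in d dimensions.
counts = {d: points_per_axis ** d for d in (1, 2, 3)}
for d, n in counts.items():
    print(f"Dimensions = {d}: Points = {n}")

# Illustrative extension: a flattened 32x32x3 image has 3072 dimensions.
dim = 32 * 32 * 3
num_digits = len(str(points_per_axis ** dim))
print(f"Dimensions = {dim}: the point count has {num_digits} decimal digits")
```

No realistic training set comes close to covering pixel space that densely, which is why nearest neighbors on raw pixels tend to be far away.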

K-Nearest Neighbors: Summary

- In image classification we start with a training set of images and labels, and must predict labels on the test set
- The K-Nearest Neighbors classifier predicts labels based on nearest training examples
- Distance metric and K are hyperparameters
- Choose hyperparameters using the validation set; only run on the test set once at the very end!

Linear Classification

Neural Network

Linear classifiers

This image is CC0 1.0 public domain

- "Two young girls are playing with lego toy."
- "Boy is doing backflip on wakeboard."
- "Man in black shirt is playing guitar."
- "Construction worker in orange safety vest is working on road."

Karpathy and Fei-Fei, “Deep Visual-Semantic Alignments for Generating Image Descriptions”, CVPR 2015. Figures copyright IEEE, 2015. Reproduced for educational purposes.

Recall CIFAR10

- 50,000 training images, each image is 32x32x3
- 10,000 test images

Parametric Approach

- Input: image x, an array of 32x32x3 numbers (3072 numbers total)
- f(x,W)
- Output: 10 numbers giving class scores
- W: parameters or weights

Parametric Approach: Linear Classifier

f(x,W) = Wx

- Image x: array of 32x32x3 numbers (3072 numbers total), shape 3072x1
- W: parameters or weights, shape 10x3072
- f(x,W): 10 numbers giving class scores, shape 10x1

Parametric Approach: Linear Classifier

f(x,W) = Wx + b

- Image x: array of 32x32x3 numbers (3072 numbers total), shape 3072x1
- W: parameters or weights, shape 10x3072
- b: shape 10x1
- f(x,W): 10 numbers giving class scores, shape 10x1
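The shapes above can be checked with a short sketch. It assumes NumPy, a random stand-in image, and randomly initialized (untrained) weights, so the resulting scores are meaningless; only the dimensions matter here. The slide's 3072x1 and 10x1 column vectors are represented as 1-D arrays.

```python
import numpy as np

rng = np.random.default_rng(0)
num_classes, dim = 10, 32 * 32 * 3

# Untrained parameters, for shape-checking only.
W = rng.normal(0.0, 0.01, size=(num_classes, dim))   # 10 x 3072
b = np.zeros(num_classes)                            # 10

# Hypothetical stand-in for one CIFAR-10 image.
image = rng.integers(0, 256, size=(32, 32, 3)).astype(np.float64)
x = image.reshape(-1)                                # stretch into 3072 numbers

scores = W @ x + b                                   # f(x, W) = Wx + b
predicted_class = int(np.argmax(scores))             # highest score wins

print(x.shape, W.shape, scores.shape, predicted_class)
```

Unlike the nearest-neighbor classifier, prediction here is a single matrix-vector product whose cost does not depend on the number of training images.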

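Example with an image with 4 pixels, and 3 classes (cat/dog/ship)

Input image (2x2 pixels): 56, 231 in the first row; 24, 2 in the second row. Stretch pixels into column:

$$
\underbrace{\begin{bmatrix} 0.2 & -0.5 & 0.1 & 2.0 \\ 1.5 & 1.3 & 2.1 & 0.0 \\ 0 & 0.25 & 0.2 & -0.3 \end{bmatrix}}_{W}
\underbrace{\begin{bmatrix} 56 \\ 231 \\ 24 \\ 2 \end{bmatrix}}_{x}
+ \underbrace{\begin{bmatrix} 1.1 \\ 3.2 \\ -1.2 \end{bmatrix}}_{b}
= \begin{bmatrix} -96.8 \\ 437.9 \\ 60.75 \end{bmatrix}
$$

Cat score: -96.8. Dog score: 437.9. Ship score: 60.75.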
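The arithmetic of this example can be checked directly. The sketch assumes NumPy and takes W, b, and the pixel values from the slide. The first two rows reproduce the cat and dog scores; for the ship row, Wx alone is 61.95 and adding the bias of -1.2 gives 60.75.

```python
import numpy as np

# Values taken from the 4-pixel, 3-class example.
W = np.array([[0.2, -0.5, 0.1,  2.0],    # cat row
              [1.5,  1.3, 2.1,  0.0],    # dog row
              [0.0, 0.25, 0.2, -0.3]])   # ship row
b = np.array([1.1, 3.2, -1.2])

image = np.array([[56.0, 231.0],
                  [24.0,   2.0]])
x = image.reshape(-1)          # stretch pixels into a column: [56, 231, 24, 2]

product = W @ x                # Wx, before the bias
scores = product + b           # f(x, W) = Wx + b

for name, s in zip(["cat", "dog", "ship"], scores):
    print(f"{name} score: {s:.2f}")
```

The dog score is the largest, so this particular W would label the input image as a dog.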

Example with an image with 4 pixels, and 3 classes (cat/dog/ship)

Algebraic Viewpoint: f(x,W) = Wx

Example with an image with 4 pixels, and 3 classes (cat/dog/ship)

Input image

Algebraic Viewpoint: f(x,W) = Wx

b = (1.1, 3.2, -1.2); scores = (-96.8, 437.9, 60.75)

Interpreting a Linear Classifier

Interpreting a Linear Classifier: Visual Viewpoint

Interpreting a Linear Classifier: Geometric Viewpoint

f(x,W) = Wx + b

Array of 32x32x3 numbers (3072 numbers total)

Plot created using Wolfram Cloud. Cat image by Nikita is licensed under CC-BY 2.0

Hard cases for a linear classifier

- Class 1: First and third quadrants. Class 2: Second and fourth quadrants
- Class 1: 1 <= L2 norm <= 2. Class 2: Everything else
- Class 1: Three modes. Class 2: Everything else

Linear Classifier: Three Viewpoints

- Algebraic Viewpoint: f(x,W) = Wx
- Visual Viewpoint: One template per class
- Geometric Viewpoint: Hyperplanes cutting up space

So far: Defined a (linear) score function f(x,W) = Wx + b

Example class scores for 3 images for some W: How can we tell whether this W is good or bad?

[Figure: table of 10 class scores per image; the one column that survives reads 3.42, 4.64, 2.65, 5.1, 2.64, 5.55, -4.34, -1.5, -4.79, 6.14]

Cat image by Nikita is licensed under CC-BY 2.0. Car image is CC0 1.0 public domain. Frog image is in the public domain.

f(x,W) = Wx + b

Coming up:

- Loss function (quantifying what it means to have a “good” W)
- Optimization (start with random W and find a W that minimizes the loss)
- ConvNets! (tweak the functional form of f)
