
(IJACSA) International Journal of Advanced Computer Science and Applications, Vol. 10, No. 11, 2019

A Generic Approach for Weight Assignment to the Decision Making Parameters

Md. Zahid Hasan¹, Shakhawat Hossain², Mohammad Shorif Uddin³, Mohammad Shahidul Islam⁴
¹,³Department of Computer Science and Engineering, Jahangirnagar University, Dhaka, Bangladesh
²Department of CSE, International Islamic University Chittagong, Chattogram, Bangladesh
⁴Institute of Information Technology, Jahangirnagar University, Dhaka, Bangladesh

Abstract—Weight assignment to the decision parameters is a crucial factor in the decision-making process. Any imprecision in assigning weights to the decision attributes may render the whole decision-making process useless and ultimately mislead the decision-makers in their search for an optimal solution. Therefore, the attribute weight allocation process should be flawless and rational; it should not simply assign random values to the attributes without a proper analysis of each attribute's impact on the decision-making process. Unfortunately, there is no sophisticated mathematical framework for analyzing an attribute's impact on the decision-making process, and thus weight allocation is accomplished based on human judgment. To fill this gap, this paper proposes a weight assignment framework that analyzes the impact of an attribute on the decision-making process and, based on that analysis, assigns each attribute a justified numerical value. The proposed framework analyzes historical data to assess the importance of an attribute, organizes the decision problem in a hierarchical structure, and uses different mathematical formulas to derive explicit weights at different levels. Weights of mid- and higher-level attributes are calculated from the weights of the root-level attributes. The proposed methodology has been validated with diverse data.
In addition, the paper presents some potential applications of the proposed weight allocation scheme.

Keywords—Multiple attribute decision problem; average term frequency; cosine similarity; weight setup for multiple attributes; decision making

I. INTRODUCTION

In decision-making approaches, the decision-makers need to select the optimal alternative from a set of predefined alternatives based on some decision parameters, called attributes. An attribute's weight states the relative importance of that attribute and numerically describes its impact on the decision-making process. A precise decision-making process depends largely on its attributes' weights. Decision attributes can be organized in hierarchical layers: the root-layer attributes of a decision problem expose the basic decision parameters, whereas the intermediate-layer attributes depend significantly on the root-layer attributes.

However, not all attributes in a decision solution are equally important. To identify the importance of an attribute relative to the other attributes in a decision problem, a weight is assigned to each attribute. For example, suppose a music school decides to appoint a music teacher, and the school committee sets two basic quality-measurement attributes: knowledge of a musical instrument (KMI) and knowledge of geography (KG). One candidate may score 120 marks out of 200 (40 in KMI and 80 in KG), while another obtains 100 marks (70 in KMI and 30 in KG). If the two attributes are given equal importance, the first candidate will be selected, which is by no means an optimal decision. It is therefore important to assign a specific weight to each attribute in a decision-making process. However, there is no mathematical approach that helps decision-makers allocate weights to the decision parameters.
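The music-teacher example can be made concrete in a few lines of Python. This is a minimal sketch: the paper gives no weights for this example, so the values 0.9 (KMI) and 0.1 (KG) below are hypothetical and chosen only to show that a weighting which favours instrument knowledge reverses the equal-weight choice.

```python
def weighted_score(marks, weights):
    """Weighted sum of one candidate's marks over the decision attributes."""
    return sum(weights[attr] * mark for attr, mark in marks.items())


def best_candidate(candidates, weights):
    """Name of the candidate with the highest weighted score."""
    return max(candidates, key=lambda name: weighted_score(candidates[name], weights))


candidates = {
    "Candidate 1": {"KMI": 40, "KG": 80},
    "Candidate 2": {"KMI": 70, "KG": 30},
}

equal_weights = {"KMI": 1.0, "KG": 1.0}   # plain totals: 120 vs 100 marks
music_weights = {"KMI": 0.9, "KG": 0.1}   # hypothetical weights: about 44 vs 66

print(best_candidate(candidates, equal_weights))   # Candidate 1
print(best_candidate(candidates, music_weights))   # Candidate 2
```

Only the weights change between the two calls; the marks are exactly those of the example.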
Decision-makers very often depend on domain experts to determine the attributes' weights manually, which introduces uncertainty into the decision-making process and consequently leads to a non-optimal decision. In real-life scenarios, instead of consulting domain experts, a decision-maker often simply assigns an identical weight to every decision-making attribute, which in turn leads the decision-making framework to a poor solution.

This paper proposes a straightforward formula for allocating weights to the decision attributes. It uses Term Frequency to allocate weights to the root-level attributes and Cosine Similarity to generate weights for the intermediate-level attributes. Both approaches analyze historical data to derive the weights of the decision attributes.

The paper is organized as follows: Section II reviews the existing weight allocation methodologies. Based on this review, a new generic weight assignment methodology is proposed in Section III. Section IV presents a numerical experiment with the proposed methodology. The system is validated in Section V by presenting its results in different critical situations. Section VI concludes the paper.

II. RELATED WORKS

In multiple attribute decision-making problems, the relative importance of each attribute, as allocated by an expert, has a great impact on the evaluation of every alternative [1]. The multiple attribute decision-making (MADM) method offers a practical and efficient way to rank all the alternatives based on non-relative and inconsistent attributes [2]. For that reason, it is especially important to find a logical and sensible weight allocation scheme. In real life, imperfect and inexact information, together with the influence of individual preferences, increases the indecision and difficulty in
512 | Page www.ijacsa.thesai.org

weight calculation and distribution in the decision-making problem [3].

Decision scientists have proposed various methodologies for obtaining the attributes' weights of decision-making problems. The existing methods can be broadly divided into three groups: subjective, objective, and integrated approaches [4]. In the subjective approach, the decision-makers set the attributes' weights using their preference knowledge [5]. AHP [6] and the Delphi method [7] are the standard methods for determining subjective weights based on the preferences of decision-makers. The ranked and point allocation methods proposed by Doyle et al. [8] and the rank order distribution method proposed by Roberts and Goodwin [9] are also subjective weighting approaches.

In the objective approach, the decision-makers set the attribute weights using applicable facts, rational implications, and viewpoints. The entropy-based method [10], the TOPSIS method [11], and the mathematical-programming-based method [12] follow the objective approach to allocate weights to the decision parameters. In the integrated approach, the decision-makers' preferences and the evidence and facts of the specific problem are considered simultaneously to obtain the attribute weights. Cook and Kress proposed a preference aggregation model [13], which is an integrated approach to allocating weights to the decision attributes. Fan et al. [14], Horsky and Rao [15], and Pekelman and Sen [16] also constructed optimization-based models.

However, it is crucial to select suitable attribute weights in decision-making situations, since different values of the attribute weights may result in different ranking orders of the alternatives. Nevertheless, in most MADM scenarios, the preference information about the attributes provided by decision-makers is typically not adequate for crisp numerical data, because things are uncertain, fuzzy, and possibly influenced by the subjectivity of the decision-makers, or because the knowledge and data about the problem domain are insufficient during the decision-making process.

Based on the above analysis, many factors affect weight allocation, and the importance of an attribute should be reflected by both the objective data and the subjective preferences of the decision-makers. However, the existing MADM literature offers no better method for fusing subjective and objective weights. Motivated by the need for a weight allocation approach that is both uniform and effective, this paper proposes the current generic scheme.

III. METHODOLOGY

A. Problem Statement

Decision-makers use different methodologies to address different types of decision problems, and these methodologies calculate the alternatives' scores in different ways. However, almost all the available advanced decision-making approaches set specific weights to distinguish the decision-making parameters according to their relative importance in the decision-making process. Based on the decision-making process and decision approach, the attribute weight setup process can be categorized into the following classes.

1) Rule-based decision-making processes set a random weight for each of their attributes. Some rule-based approaches, such as the Evidential Reasoning (ER) approach, use those random values to calculate the final result. On the other hand, some advanced decision-making approaches update the initially set random weights to enhance decision-making accuracy. For example, the RIMER approach updates the activation weight to produce an accurate decision result.

2) Decision-making approaches such as deep learning methodologies use random values as their initial activation weights. For example, ANN, RNN, CNN, or GAN use random values to initialize their attributes' weights. These approaches later update the weights to reach the maximum accuracy in any type of decision-making process.

So, in terms of decision attribute weight generation, the problems of the existing decision-making approaches can be listed as:

a) Rule-based decision-making approaches use random values to update their decision attributes' weights, which leads them to troublesome results.

b) The weight updating processes used in decision-making approaches are not mature enough; as a consequence, decision-making approaches very often fail to reveal decision results with enough confidence.

c) Deep learning approaches take a long time to update their activation weights, since updating the attribute weights requires multiple iterations.

The above discussion makes it clear that decision approaches need to follow an appropriate weight setup process in order to generate an accurate decision result under adverse circumstances such as risk or uncertainty.

B. Problem Structuring

Based on their type and nature, decision problems can be classified into two basic classes:
- Single-layer decision problems; and
- Multilayer decision problems.

In single-layer decision problems, decision solutions are made with the direct involvement of the decision attributes. Only the basic decision parameters, which have a direct impact on the decision problem solution, make up the single-layer decision architecture. This type of problem can be represented using the architecture shown in Fig. 1.

[Fig. 1. Single Layer Decision Problem. X: alternative; X1, X2, X3: decision attributes.]
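One way to hold single-layer and multilayer decision problems in code is a small tree, sketched below under our own assumptions: the `Attribute` class and the helper names are hypothetical, not from the paper. Note that the paper's "root-level" (basic) attributes are the leaves of this tree in the usual data-structure sense, and the multilayer instance only loosely follows Fig. 2; its child lists are assumed.

```python
from dataclasses import dataclass, field


@dataclass
class Attribute:
    """A node in the decision hierarchy (hypothetical helper, not from the paper)."""
    name: str
    children: list["Attribute"] = field(default_factory=list)


def basic_attributes(node: Attribute) -> list[str]:
    """Names of the basic (root-level) attributes, i.e. the nodes with no children."""
    if not node.children:
        return [node.name]
    names: list[str] = []
    for child in node.children:
        names.extend(basic_attributes(child))
    return names


def layer_count(node: Attribute) -> int:
    """Number of attribute layers below the decision alternative."""
    if not node.children:
        return 0
    return 1 + max(layer_count(child) for child in node.children)


# Fig. 1: alternative X is decided directly by X1, X2 and X3.
single = Attribute("X", [Attribute("X1"), Attribute("X2"), Attribute("X3")])

# Two-layer problem loosely following Fig. 2 (the child lists are assumed).
multi = Attribute("X", [
    Attribute("X1", [Attribute("X11"), Attribute("X12")]),
    Attribute("X2", [Attribute("X21"), Attribute("X22")]),
    Attribute("X3", [Attribute("X31"), Attribute("X32")]),
])

print(layer_count(single), basic_attributes(single))
print(layer_count(multi), basic_attributes(multi))
```

In this representation, weights for the leaves come from the data, while every inner node below the alternative is an intermediate attribute whose weight must be derived from its children.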

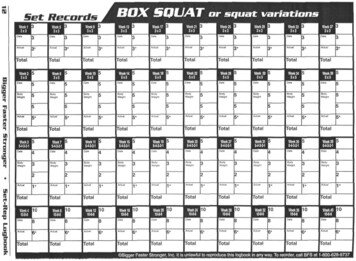

[Fig. 2. Multi Layer Decision Problem. Levels: decision alternative (X); intermediate decision attributes (X1, X2, X3); basic decision attributes (e.g., X11, X12, X31, X32).]

On the other hand, multi-level decision problems depend on one or more intermediate levels to make the final decision rather than depending only on the basic attributes. These types of decision problems can be expressed by an Architectural Theory Diagram (ATD) or a hierarchical structure (see Fig. 2).

So, weight generation for decision attributes is necessarily associated either with the root level alone or with both the root and intermediate levels of the decision problem architecture.

C. Weight Generation for Root-layer Attributes

Weights for the basic attributes of a decision problem can be generated by analyzing historical data. This paper proposes Term Frequency (TF) as the data analysis tool for assigning weights to the decision attributes. Term frequency is a well-established technique for revealing the importance of a term for a specific purpose: it assigns weight to an attribute based on the number of times the attribute appears in a dataset. The term frequency of a term t can be represented mathematically as

$$TF(t) = \frac{n_t}{N} \tag{1}$$

where n_t is the number of times t appears in the dataset (for numerical data, the number of samples that contain t) and N is the total number of terms (or samples) in the dataset.

However, the weight of an attribute is often difficult to estimate because different datasets yield different TFs. In that case, this paper proposes a normalized term frequency to expose the importance of an attribute. The normalization is accomplished by averaging the term frequencies measured from different datasets. The average term frequency of an attribute can be represented as

$$\overline{TF}(t) = \frac{1}{m}\sum_{j=1}^{m} TF_j(t) \tag{2}$$

Here, \overline{TF}(t) refers to the average term frequency, TF_j(t) is the term frequency of t in the j-th dataset, and m is the number of datasets. The average TF of an attribute denotes the actual weight of that attribute:

$$w_i = \overline{TF}(x_i), \qquad i = 1, 2, \ldots, n \tag{3}$$

Here, x_i denotes the i-th attribute and n is the total number of attributes. So, the average TF is considered the final weight of the root-level attributes.

D. Different Cases of Weight Generation using Term Frequency

Weight generation by analyzing historical data can be categorized into two basic classes based on the data types.

1) Weight calculation from textual data: In the case of textual datasets, the weight of an attribute is assigned based on the number of times the attribute appears in each document. The weights of the attribute in the different datasets are calculated in this way, and their average is then taken. For example, Table I shows the weight calculation process for two different documents. The term frequency of "Dhaka" for each of these documents can be calculated as

$$TF_{d_1}(\text{Dhaka}) = \frac{\text{count of "Dhaka" in } d_1}{\text{total terms in } d_1}, \qquad TF_{d_2}(\text{Dhaka}) = \frac{\text{count of "Dhaka" in } d_2}{\text{total terms in } d_2}$$

where d1 and d2 are two separate documents. The average TF, i.e., the actual weight of "Dhaka", is then calculated using equation (3).

TABLE I. CALCULATION OF TERM FREQUENCY FROM TEXT
Columns: Term and Term Count for Document 1 (d1) and Document 2 (d2). Legible entries: population (1), Bangladesh (3), country (7).

TABLE II. WEIGHT CALCULATION FROM DIFFERENT TEXTUAL DATASETS

| Attribute | Dataset 1 (334 patients): Term Count | Dataset 1: Term Frequency | Dataset 2 (203 patients): Term Count | Dataset 2: Term Frequency | Average TF |
|---|---|---|---|---|---|
| Hunger | 304 | 0.91 | 165 | 0.81 | 0.86 |
| Peeing more often | 278 | 0.83 | 170 | 0.83 | 0.83 |
| Dry Mouth | 311 | 0.93 | 173 | 0.85 | 0.89 |
| Blurred Vision | 90 | 0.26 | 70 | 0.34 | 0.3 |
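A minimal Python sketch of equations (1) to (3) for textual data follows. Because the contents of Table I are not recoverable, the two documents are invented for illustration; the sketch also assumes whitespace tokenization, case-insensitive matching, and that the denominator of equation (1) is the total number of terms in the document.

```python
from collections import Counter


def term_frequency(term, document):
    """Equation (1): occurrences of term divided by the number of terms in document."""
    tokens = document.lower().split()          # assumed: whitespace tokenization
    if not tokens:
        return 0.0
    return Counter(tokens)[term.lower()] / len(tokens)


def average_term_frequency(term, documents):
    """Equations (2)-(3): mean TF of term over several documents (datasets)."""
    return sum(term_frequency(term, doc) for doc in documents) / len(documents)


# Hypothetical documents; the real contents of Table I are not recoverable.
d1 = "Dhaka is the capital of Bangladesh and Dhaka is crowded"
d2 = "the population of Dhaka keeps growing every year"

print(term_frequency("Dhaka", d1))                 # 2 of 10 terms
print(term_frequency("Dhaka", d2))                 # 1 of 8 terms
print(average_term_frequency("Dhaka", [d1, d2]))   # mean of the two TFs
```

Averaging per-document TFs, rather than pooling all tokens, gives each dataset equal say regardless of its length, which matches the normalization described above.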

2) Calculation of weight from numerical data: To generate the weight of an attribute from a dataset, the ratio of the number of data samples containing that attribute to the total number of samples is calculated. Finally, the average of the attribute's weights calculated from the different datasets is taken. For example, consider two Type-I diabetes patient datasets containing 334 and 203 diabetes patients, respectively. Table II lists four attributes related to Type-I diabetes (hunger, peeing more often, dry mouth, and blurred vision) together with the number of affected patients. The term frequency of each symptom is calculated using equation (1). After the term frequencies for both datasets have been calculated, the average term frequency is calculated using equation (3).

E. Weight Generation for Intermediate-layer Attributes

Decision-makers very often need to consider intermediate-level attributes to calculate the efficiency of a particular attribute in order to rank the decision attributes. Attributes at the intermediate level are not always directly present in the datasets; however, their derivatives mostly determine their significance in decision-making tasks. Weights for intermediate-level attributes can be generated using Cosine Similarity, which measures the similarity between two non-zero vectors in a vector space model [17]. Suppose α and β are two non-zero vectors in a vector space model. The cosine similarity between α and β can be expressed as

$$\cos(\alpha, \beta) = \frac{\alpha \cdot \beta}{\lVert \alpha \rVert \, \lVert \beta \rVert} = \frac{\sum_{i=1}^{k} \alpha_i \beta_i}{\sqrt{\sum_{i=1}^{k} \alpha_i^2}\,\sqrt{\sum_{i=1}^{k} \beta_i^2}} \tag{4}$$

To generate weights for the intermediate-level attributes, one non-zero vector, α, is constructed from the numeric values assigned to the basic attributes of the decision problem, which are always 1. The other vector, β, is constructed from the term frequencies of those attributes. The weight of an intermediate attribute is therefore

$$W = \cos(\alpha, \beta) \tag{5}$$

For example, suppose an intermediate-level attribute I has two derivatives whose TFs are calculated as 0.9 and 0.87, respectively. The vectors for I are then constructed as α = [1, 1] and β = [0.9, 0.87].

Axiom-1: Every entry of the non-zero vector α used in the vector space model to generate the weight of an intermediate-level attribute in the architectural theory diagram of the decision process is always 1. For example, in equation (4), each element of α is 1, which can be represented as α = [1, 1, ..., 1].

In the weight generation process explained in the earlier sections of this paper, one vector, α, contains a 1 for each attribute, while the other, β, contains the term frequencies of the attributes. For example, to determine the weight of skin condition in the decision problem of Fig. 3, vector α is constructed as α = [1, 1]. Here, the weight of skin condition is calculated from the term frequencies of purpura and edema in the historical datasets. To determine the importance of skin condition in the CKD diagnosis process, skin condition is decomposed into its symptoms, edema and purpura (see Fig. 3). These two symptoms construct one vector, and the frequencies of edema and purpura in the dataset construct the other. Each value of α is always set to 1 so that edema and purpura are each counted exactly once in determining skin condition. If the value for edema were set to 0, skin condition would be determined without considering edema; if it were set to 2, edema would effectively be counted twice (see Fig. 4).

From these three scenarios, it becomes clear that α should be [1, 1] to determine skin condition correctly, avoiding scenarios 2 and 3.

Axiom-2: The weight w of an attribute in a decision-making process is always positive, w > 0; that is, w is neither 0 nor negative. If w = 0, the attribute has no impact on the decision-making process. The weight of an attribute can also never be negative, since a negative weight would give the attribute an adverse impact on the decision process.

[Fig. 3. Hierarchical Structure of CKD Diagnosis Problem. Nodes include CKD, Skin Condition (Edema, Purpura), Histological Condition, Anemia, and Increased BP.]

[Fig. 4. Different Scenarios of Vector Construction Process.]
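The sketch below implements equation (4) and applies it as in equation (5), with α filled with ones per Axiom-1 and the positivity requirement of Axiom-2 checked explicitly. The function names are hypothetical; the call reproduces the example above, where the two derivatives of I have TFs 0.9 and 0.87.

```python
import math


def cosine_similarity(alpha, beta):
    """Equation (4): (alpha . beta) / (|alpha| * |beta|) for non-zero vectors."""
    if len(alpha) != len(beta):
        raise ValueError("alpha and beta must have the same length")
    norm_alpha = math.sqrt(sum(a * a for a in alpha))
    norm_beta = math.sqrt(sum(b * b for b in beta))
    if norm_alpha == 0 or norm_beta == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    dot = sum(a * b for a, b in zip(alpha, beta))
    return dot / (norm_alpha * norm_beta)


def intermediate_weight(child_tfs):
    """Equation (5): weight of an intermediate attribute from its children's TFs."""
    alpha = [1.0] * len(child_tfs)   # Axiom-1: every entry of alpha is 1
    weight = cosine_similarity(alpha, child_tfs)
    if weight <= 0:                  # Axiom-2: a usable weight must be positive
        raise ValueError("weight must be positive")
    return weight


w_I = intermediate_weight([0.9, 0.87])
print(round(w_I, 4))   # 0.9999
```

With α fixed to ones, the result depends only on how evenly the children's TFs are spread: equal TFs give 1 (up to floating-point rounding), and non-negative TFs can never give less than 1/√k for k children.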

IV. NUMERICAL EXPERIMENT

To conduct a numerical experiment, multiple datasets were collected from four different hospitals in Bangladesh. Data from more than 4,000 CKD (Chronic Kidney Disease) patients were taken into consideration in this experiment.

In order to assign weights to the CKD diagnosis attributes, i.e., the symptoms of CKD, the presence of each symptom in the datasets is carefully analyzed. To make the analysis convenient, the CKD diagnosis problem is organized in a hierarchical structure. The decision attributes are structured in a two-layered architecture based on the nature of the attributes and their impact on the diagnosis process (see Fig. 5).

[Fig. 5. Expression of Decision Problem in Hierarchical Structure.]

To make the experiment transparent and adaptable, its inputs and outputs are expressed as follows:

X: Chronic Kidney Disease
X1: General Condition
X2: Gastrointestinal Condition
X3: Skin Condition
X4: Hematologic Condition
X11: Pain on the side or mid to lower back
X12: Fatigue
X13: Mental Depression
X14: Headaches
X21: Vomiting
X22: Loss of Body Weight
X23: Change in Taste
X31: Edema
X32: Purpura
X41: Blood in Urine
X42: High Blood Pressure (HBP)
X43: Loss of Appetite
X44: Protein in Urine

A. Weight Calculation for Root-Layer Attributes by using Average Term Frequency

Term frequency measures how many patients experience a particular symptom relative to the total number of patients in a specific dataset. The weight of a symptom (attribute) is determined by how often the symptom is present in a dataset. The following algorithm describes the computation of the TF for the root-layer attributes.

Algorithm-1: TF calculation
1. Start
2. Define N as the total number of patients in the dataset and n_i as the number of patients experiencing symptom X_i
3. Compute TF(X_i) = n_i / N
4. End

Tables III–VI show the weights of different symptoms based on the TF calculation from different datasets. The TF of a symptom is calculated using equation (1), and the weight is calculated using equation (3). Algorithm-2 describes the implementation of equation (3) to generate weights for the root-level attributes.

Algorithm-2: Weight Calculation for Root-layer attributes
Step 1: Start
Step 2: Define m as the number of datasets and TF_j(X_i) as the TF of X_i in dataset j; initialize sum = 0 and j = 1
Step 3: Set sum = sum + TF_j(X_i)
Step 4: Increase j by 1; go to Step 3 until j > m
Step 5: Compute w_i = sum / m; print w_i
Step 6: End

For example, for the dataset shown in Table III, the TF of X41 is calculated as 984/990 ≈ 0.99, where 990 is the total number of patients and 984 is the number of patients experiencing the symptom "Blood in Urine". Therefore, the TF of attribute X41 is 0.99 for dataset 1. The average TF of X41 gives its actual weight, 0.97, as shown in Table VII.
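Algorithms 1 and 2 reduce to a ratio and a running average. The sketch below (function names are hypothetical) applies them to the diabetes counts of Table II, since that is the only table whose counts survive in full for every dataset.

```python
def tf(affected, total):
    """Algorithm-1: share of patients in one dataset who show the symptom."""
    if total <= 0:
        raise ValueError("total must be positive")
    return affected / total


def root_weight(counts):
    """Algorithm-2: average TF over m datasets; counts = [(affected, total), ...]."""
    running_sum = 0.0
    for affected, total in counts:      # Steps 3-4: add TF_j, move to dataset j + 1
        running_sum += tf(affected, total)
    return running_sum / len(counts)    # Step 5: w = sum / m


# Patient counts from Table II (datasets of 334 and 203 patients).
table_ii = {
    "Hunger": [(304, 334), (165, 203)],
    "Peeing more often": [(278, 334), (170, 203)],
    "Dry Mouth": [(311, 334), (173, 203)],
    "Blurred Vision": [(90, 334), (70, 203)],
}

weights = {name: round(root_weight(c), 2) for name, c in table_ii.items()}
print(weights)
```

This reproduces the Average TF column of Table II except for blurred vision, where the sketch gives 0.31 instead of 0.3: the table appears to truncate each per-dataset TF to two decimals before averaging (90/334 is listed as 0.26), whereas the sketch averages the unrounded ratios.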

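TABLE III. CKD DATASET FROM BANGLADESH MEDICAL COLLEGE (990 PATIENTS)

| Attribute | X11 | X12 | X13 | X14 | X21 | X22 | X23 | X31 | X32 | X41 | X42 | X43 | X44 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Attribute's Density | 770 | 970 | 550 | 223 | 406 | 601 | 333 | 379 | 421 | 984 | 980 | 801 | 705 |
| Term Frequency | … | … | … | … | … | … | … | … | … | 0.99 | 0.98 | 0.8 | 0.71 |

TABLE IV. CKD DATASET FROM SHAHEED SUHRAWARDY MEDICAL COLLEGE (1013 PATIENTS)

| Attribute | X11 | X12 | X13 | X14 | X21 | X22 | X23 | X31 | X32 | X41 | X42 | X43 | X44 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Attribute's Density | … | … | … | … | … | … | … | … | … | … | … | … | … |
| Term Frequency | … | … | … | … | … | … | … | … | … | … | 0.98 | 0.89 | 0.68 |

TABLE V. CKD DATASET FROM BANGABANDHU MEDICAL COLLEGE (997 PATIENTS)

| Attribute | X11 | X12 | X13 | X14 | X21 | X22 | X23 | X31 | X32 | X41 | X42 | X43 | X44 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Attribute's Density | 699 | 965 | 507 | 245 | 423 | 629 | 400 | 405 | 467 | 981 | 987 | 790 | 695 |
| Term Frequency | … | … | … | … | … | … | … | … | … | … | … | 0.79 | 0.69 |

TABLE VI. CKD DATASET FROM DHAKA MEDICAL COLLEGE (1104 PATIENTS)

| Attribute | X11 | X12 | X13 | X14 | X21 | X22 | X23 | X31 | X32 | X41 | X42 | X43 | X44 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Attribute's Density | … | … | … | … | … | … | … | … | … | … | … | … | … |
| Term Frequency | … | … | … | … | … | … | … | … | … | … | … | 0.77 | 0.67 |

TABLE VII. ROOT-LEVEL ATTRIBUTES' WEIGHT

| Attribute | X11 | X12 | X13 | X14 | X21 | X22 | X23 | X31 | X32 | X41 | X42 | X43 | X44 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Attributes' Weight | … | … | … | … | … | … | … | … | … | 0.97 | 0.97 | 0.81 | 0.69 |

TABLE VIII. WEIGHT CALCULATION OF INTERMEDIATE-LAYER ATTRIBUTES

| Intermediate-level Attribute | Root-Level Attributes' Weights | Attribute's Weight |
|---|---|---|
| X1 | … | 0.92 |
| X2 | … | 0.97 |
| X3 | … | 0.35 |
| X4 | 0.97, 0.97, 0.81, 0.69 | 0.99 |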
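As a sanity check, two reported figures can be recomputed from values that are still legible: the average of X43's term frequencies across Tables III–VI (equation (2)), and the cosine-similarity weight of X4 from its four root-level weights in Table VIII (equations (4) and (5)). The sketch assumes those legible values are correctly aligned with X43 and with X41–X44.

```python
import math
from statistics import mean

# Legible TF values of X43 in Tables III, IV, V and VI (one per hospital).
tf_x43 = [0.8, 0.89, 0.79, 0.77]
weight_x43 = mean(tf_x43)            # equation (2): average over the four datasets

# Root-level weights of X41, X42, X43 and X44 as listed for X4 in Table VIII.
beta_x4 = [0.97, 0.97, 0.81, 0.69]
alpha_x4 = [1.0] * len(beta_x4)      # Axiom-1
dot = sum(a * b for a, b in zip(alpha_x4, beta_x4))
weight_x4 = dot / (math.hypot(*alpha_x4) * math.hypot(*beta_x4))   # equations (4)-(5)

print(round(weight_x43, 2), round(weight_x4, 2))   # 0.81 0.99
```

Both values agree with the 0.81 in Table VII and the 0.99 in Table VIII. `math.hypot` with more than two arguments requires Python 3.8 or later.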

B. Weight Calculation for Intermediate-Layer Attributes by using Cosine Similarity

The attribute weights of the intermediate layer are determined by cosine similarity, which measures the cosine of the angle between two non-zero vectors. The intermediate-layer attribute weights are calculated by Algorithm-3, and the results are shown in Table VIII. In Table VIII, the two non-zero vectors α and β are defined as described in Section III-E.

Algorithm-3: Weight Calculation for Intermediate-layer attributes
Step 1: Start
Step 2: Define W
Step 3: Set weight …; compute …; increase … by 1; compute …; end for
Step 4: Set …; end for
Step 5: …; end for
Step 6: …
Step 7: Print W
Step 8: End

V. RESULT AND DISCUSSION

The weight assignment process has been validated through numerical experiments in which the weights generated by the proposed system are compared with benchmark results. The benchmark weights were calculated by a group of domain experts who analyzed a large amount of historical data. The weights produced by the proposed framework are found to be very close to the benchmark results.

To demonstrate that the proposed system performs better than the existing weight allocation approaches, several selected weight allocation methods have been tested. To analyze the performance of an artificial neural network (ANN), the same input attributes and the four datasets of the previous problem have been used. In the ANN, each input arriving at a neural unit is multiplied by a random weight of the corresponding node, and the outputs of all nodes are then summed. The final output is obtained by passing the current result through the sigmoid activation function and checking it against the threshold. To analyze the CKD problem, we consider a total of 12 attributes and feed the attribute values to a feedforward neural network with one hidden layer. The backpropagation algorithm sets the weights after 10 successful iterations.

On the other hand, some popular rule-based approaches, such as RIMER, use random values as the attributes' initial weights, which are later updated based on thresholds. Domain experts set up these thresholds manually to make the weight updating process operational. To compare the different weight allocation processes, the CKD diagnosis problem described earlier in this paper has been used to generate weights with the RIMER approach.

Two other prominent weight allocation methods, AHP and TOPSIS, have also been used to generate weights for the attributes of the same decision problem.

Table IX describes the performance of the different weight assignment approaches. From the table, it is clear that the TOPSIS and AHP methods produce results that differ considerably from those of the other three methods. The ANN, RIMER, and proposed methodologies provide similar weights for almost all decision attributes. The optimal weight allocation framework can therefore be selected by comparing the results of the different methods against the benchmark results (see Fig. 6).

TABLE IX. VISUALIZATION OF WEIGHT GENERATION
X3: 0.39, 0.54, 0.6, 0.56
X4: 0.12, 0.15, 0.2, 0.25, 0.23
X5: 0.4, 0.33, 0.45, 0.65, 0.42

[Fig. 6. Comparative Result of NN, RIMER, Benchmark Result with Proposed Framework. Relative weight (y-axis) of attributes X1–X12 (x-axis).]
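Because the steps of Algorithm-3 are only partly legible, the following is a sketch of one plausible reading, not the authors' listing: for every intermediate attribute, collect the average TFs of its root-level children into β and apply equation (5) with an all-ones α. The function names are hypothetical, and the example uses only the two hospital datasets whose densities survive (Tables III and V), with their density columns assumed to run X11 to X44 in order, so the outputs are illustrative and will not exactly match Table VIII.

```python
import math


def average_tf(counts):
    """Average TF over datasets; counts = [(affected_patients, total_patients), ...]."""
    return sum(affected / total for affected, total in counts) / len(counts)


def cosine_with_ones(beta):
    """Cosine similarity between an all-ones alpha (Axiom-1) and beta."""
    norm_beta = math.sqrt(sum(b * b for b in beta))
    return sum(beta) / (math.sqrt(len(beta)) * norm_beta)


def intermediate_weights(hierarchy, counts):
    """hierarchy maps an intermediate attribute to its root-level children."""
    W = {}
    for parent, children in hierarchy.items():
        beta = [average_tf(counts[child]) for child in children]
        W[parent] = cosine_with_ones(beta)
    return W


# Densities from Table III (990 patients) and Table V (997 patients).
counts = {
    "X31": [(379, 990), (405, 997)],
    "X32": [(421, 990), (467, 997)],
    "X41": [(984, 990), (981, 997)],
    "X42": [(980, 990), (987, 997)],
    "X43": [(801, 990), (790, 997)],
    "X44": [(705, 990), (695, 997)],
}
hierarchy = {"X3": ["X31", "X32"], "X4": ["X41", "X42", "X43", "X44"]}

W = intermediate_weights(hierarchy, counts)
for name, weight in W.items():
    print(name, round(weight, 3))
```

Keeping the hierarchy as a plain mapping means the same loop serves any number of intermediate attributes and children.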

Fig. 6 shows that the weights produced by the proposed allocation method are very close to the benchmark results. Therefore, it can be strongly claimed that the proposed weight assignment approach provides the optimum weights for the decision parameters.

VI. CONCLUSION

Weight assignment is an important task in decision-making approaches. The solution of any decision problem depends largely on its attributes' weights. Unfortunately, there is no sophisticated framework for assigning weights to the decision parameters, so decision-makers need to depend on human sensing factors to assign weights to the attributes, which leads them to non-optimal solutions. This paper proposes a weight setup method for multiple attribute decision problems in which the weights are calculated from historical data without any involvement of domain experts. The decision problem is represented in a hierarchical structure in which the attribute layers are divided into intermediate and root layers. Weights for the root-layer attributes are first determined by the term frequency in each individual dataset, and the average term frequency over all datasets is then computed to obtain the final weights. Cosine similarity, which measures the similarity between two non-zero vectors, is applied to compute the weights of the intermediate-layer attributes. This can efficiently avoid unreasonable assessment values caused by the experts' lack of knowledge or partial experience. Additionally, the numerical experiment provided in this paper validates the effectiveness of the proposed methodology, which can help decision-makers assign accurate weights to the decision attributes.

Funding: This research received no external funding.

Conflicts of Interest: The authors declare no conflict of interest.

REFERENCES

[1] W. Ho, X. Xu, and P. K. Dey, "Multi-criteria decision making approaches for supplier evaluation and selection: a literature review," European Journal of Operational Research, vol. 202, no. 1, pp. 16–24, 2010.
[2] T. Yang, Y. Kuo, D. Parker, and K. H. Chen, "A Multiple Attribute Group Decision Making Approach for Solving Problems with the Assessment of Preference Relations," Mathematical Problems in Engineering, vol. 2015, 10 pages.
[3] H. Jiao and S. Wang, "Multi-attribute decision making with dynamic weight allocation," Intelligent Decision Technologies, vol. 8, 2014.
[4] …vadskas, A. S. Vanaki, H. R. Firoozfar, M. Lari, and Z. Turskis, "A new evaluation model for corporate financial performance using integrated CCSD and FCM-ARAS approach," Economic Research-Ekonomska Istraživanja, vol. 32, no. 1, pp. 1088–1113, 2019.
[5] Q. Zhang and H. Xiu, "An Approach to Determining Attribute Weights Based on Integrating Preference Information on Attributes with Decision Matrix," Computational Intelligence and Neuroscience, vol. 2018, 8 pages, 2018.
[6] R. J. Ormerod and W. Ulrich, "Operational research and ethics: a literature review," European Journal o…
