Transcription

1Logram: Efficient Log Parsing Using n-GramDictionariesHetong Dai, Student Member, IEEE, Heng Li, Member, IEEE, Che-Shao Chen, Student Member, IEEE,Weiyi Shang, Member, IEEE, Tse-Hsun (Peter) Chen, Member, IEEE,Abstract—Software systems usually record important runtime information in their logs. Logs help practitioners understand systemruntime behaviors and diagnose field failures. As logs are usually very large in size, automated log analysis is needed to assistpractitioners in their software operation and maintenance efforts. Typically, the first step of automated log analysis is log parsing, i.e.,converting unstructured raw logs into structured data. However, log parsing is challenging, because logs are produced by statictemplates in the source code (i.e., logging statements) yet the templates are usually inaccessible when parsing logs. Prior workproposed automated log parsing approaches that have achieved high accuracy. However, as the volume of logs grows rapidly in the eraof cloud computing, efficiency becomes a major concern in log parsing. In this work, we propose an automated log parsing approach,Logram, which leverages n-gram dictionaries to achieve efficient log parsing. We evaluated Logram on 16 public log datasets andcompared Logram with five state-of-the-art log parsing approaches. We found that Logram achieves a higher parsing accuracy than thebest existing approaches (i.e., at least 10% higher, on average) and also outperforms these approaches in efficiency (i.e., 1.8 to 5.1times faster than the second-fastest approaches in terms of end-to-end parsing time). Furthermore, we deployed Logram on Spark andwe found that Logram scales out efficiently with the number of Spark nodes (e.g., with near-linear scalability for some logs) withoutsacrificing parsing accuracy. In addition, we demonstrated that Logram can support effective online parsing of logs, achieving similarparsing results and efficiency to the offline mode.Index Terms—Log parsing, Log analysis, N-gramF1I NTRODUCTIONModern software systems usually record valuable runtimeinformation (e.g., important events and variable values) inlogs. Logs play an important role for practitioners to understand the runtime behaviors of software systems and to diagnose system failures [1], [2]. However, since logs are oftenvery large in size (e.g., tens or hundreds of gigabytes) [3], [4],prior research has proposed automated approaches to analyze logs. These automated approaches help practitionerswith various software maintenance and operation activities,such as anomaly detection [5], [6], [7], [8], [9], failure diagnosis [10], [11], performance diagnosis and improvement [12],[13], and system comprehension [10], [14]. Recently, the fastemerging AIOps (Artificial Intelligence for IT Operations)solutions also depend heavily on automated analysis ofoperation logs [15], [16], [17], [18], [19].Logs are generated by logging statements in the sourcecode. As shown in Figure 1, a logging statement is composed of log level (i.e., info), static text (i.e., “Found block” and“locally”), and dynamic variables (i.e., “ blockId”). Duringsystem runtime, the logging statement would generate rawlog messages, which is a line of unstructured text thatcontains the static text and the values for the dynamicvariables (e.g., “rdd 42 20”) that are specified in the logging Department of Computer Science and Software Engineering, ConcordiaUniversity, Montreal, Canada.E-mail: (he da, c chesha, shang, peterc)@encs.concordia.ca School of Computing, Queen’s University, Kingston, Canada.E-mail: hengli@cs.queensu.caLoggingstatementlogInfo("Found block blockId locally")(From: spark/storage/BlockManager.scala)17/06/09 20:11:11 INFO storage.BlockManager:Raw log(Unstructured) Found block rdd 42 20 locallyParsed log(Structured)Timestamp: 17/06/09 20:11:11; Level: INFOLogger: storage.BlockManagerStatic template: Found block * locallyDynamic variable(s): rdd 42 20Fig. 1. An illustrative example of parsing an unstructured log messageinto a structured format.statement. The log message also contains information suchas the timestamp (e.g., “17/06/09 20:11:11”) of when theevent happened. In other words, logging statements definethe templates for the log messages that are generated atruntime. Automated log analysis usually has difficultiesanalyzing and processing the unstructured logs due totheir dynamic nature [5], [10]. Instead, a log parsing stepis needed to convert the unstructured logs into a structured format before the analysis. The goal of log parsingis to extract the static template, dynamic variables, and theheader information (i.e., timestamp, log level, and loggername) from a raw log message to a structured format. Suchstructured information is then used as input for automatedlog analysis. He et al. [20] found that the results of logparsing are critical to the success of log analysis tasks.In practice, practitioners usually write ad hoc log parsingscripts that depend heavily on specially-designed regularexpressions [21], [22], [23] . As modern software systemsAuthorized licensed use limited to: Concordia University Library. Downloaded on October 05,2020 at 12:55:18 UTC from IEEE Xplore. Restrictions apply.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TSE.2020.3007554, IEEETransactions on Software Engineering2usually contain large numbers of log templates which areconstantly evolving [24], [25], [26], practitioners need toinvest a significant amount of efforts to develop and maintain such regular expressions. In order to ease the painof developing and maintaining ad hoc log parsing scripts,prior work proposed various approaches for automated logparsing [21]. For example, Drain [22] uses a fixed-depthtree to parse logs. Each layer of the tree defines a rule forgrouping log messages (e.g., log message length, precedingtokens, and token similarity). At the end, log messages withthe same templates are clustered into the same groups. Zhuet al. [21] proposed a benchmark and thoroughly comparedprior approaches for automated log parsing.Despite the existence of prior log parsers, as the size oflogs grows rapidly [1], [2], [27] and the need for low-latencylog analysis increases [19], [28], efficiency becomes an important concern for log parsing. In this work, we proposeLogram, an automated log parsing approach that leveragesn-gram dictionaries to achieve efficient log parsing. In short,Logram uses dictionaries to store the frequencies of n-gramsin logs and leverage the n-gram dictionaries to extract thestatic templates and dynamic variables in logs. Our intuitionis that frequent n-grams are more likely to represent thestatic templates while rare n-grams are more likely to be dynamic variables. The n-gram dictionaries can be constructedand queried efficiently, i.e., with a complexity of O(n) andO(1), respectively.We evaluated Logram on 16 log datasets [21] and compared Logram with five state-of-the-art log parsing approaches. We found that Logram achieves a higher accuracycompared with the best existing approaches (i.e., at least10% higher on average), and that Logram outperforms thesebest existing approaches in efficiency, achieving a parsingspeed that is 1.8 to 5.1 times faster than the second-fastestapproaches. Furthermore, as the n-gram dictionaries canbe constructed in parallel and aggregated efficiently, wedemonstrated that Logram can achieve high scalability whendeployed on a multi-core environment (e.g., a Spark cluster),without sacrificing any parsing accuracy. Finally, we demonstrated that Logram can support effective online parsing,i.e., by updating the n-gram dictionaries continuously whennew logs are added in a streaming manner.In summary, the main contributions1 of our work include: We present the detailed design of an innovativeapproach, Logram, for automated log parsing. Logramleverages n-gram dictionaries to achieve accurateand efficient log parsing.We compare the performance of Logram with otherstate-of-the-art log parsing approaches, based onan evaluation on 16 log datasets. The results showthat Logram outperforms other state-of-the-art approaches in efficiency and achieves better accuracythan existing approaches.We deployed Logram on Spark and we show thatLogram scales out efficiently as the number of Sparknodes increases (e.g., with near-linear scalability forsome logs), without sacrificing paring accuracy.1. The source code of our tool and the data used in our study areshared at https://github.com/BlueLionLogram/Logram We demonstrate that Logram can effectively supportonline parsing, achieving similar parsing results andefficiency compared to the offline mode.Our highly accurate, highly efficient, and highly scalableLogram can benefit future research and practices that relyon automated log parsing for log analysis on large logdata. In addition, practitioners can leverage Logram in alog streaming environment to enable effective online logparsing for real-time log analysis.Paper organization. The paper is organized as follows.Section 2 introduces the background of log parsing and ngrams. Section 3 surveys prior work related to log parsing.Section 4 presents a detailed description of our Logramapproach. Section 5 shows the results of evaluating Logramon 16 log datasets. Section 6 discusses the effectiveness ofLogram for online log parsing. Section 7 discusses the threatsto the validity of our findings. Finally, Section 8 concludesthe paper.2BACKGROUNDIn this section, we introduce the background of log parsingand n-grams that are used in our log parsing approach.2.1Log ParsingIn general, the goal of log parsing is to extract the static template, dynamic variables, and the header information (i.e.,timestamp, level, and logger) from a raw log message. Whilethe header information usually follows a fixed format thatis easy to parse, extracting the templates and the dynamicvariables is much more challenging, because 1) the statictemplates (i.e., logging statements) that generate logs areusually inaccessible [21], and 2) logs usually contain a largevocabulary of words [23]. Table 1 shows four simplifiedlog messages with their header information removed. Thesefour log messages are actually produced from two statictemplates (i.e., “Found block * locally” and “Droppingblock * from memory”). These log messages also containdynamic variables (i.e., “rdd 42 20” and “rdd 42 22”) thatvary across different log messages produced by the sametemplate. Log parsing aims to separate the static templatesand the dynamic variables from such log messages.Traditionally, practitioners rely on ad hoc regular expressions to parse the logs that they are interested in. Forexample, two regular expressions (e.g., “Found block [a-z09 ] locally” and “Dropping block [a-z0-9 ] from memory”)could be used to parse the log messages shown in Table 1.Log processing & management tools (e.g., Splunk2 and ELKstack3 ) also enable users to define their own regular expressions to parse log data. However, modern software systemsusually contain large numbers (e.g., tens of thousands) oflog templates which are constantly evolving [21], [24], [25],[26], [29]. Thus, practitioners need to invest a significantamount of efforts to develop and maintain such ad hoc regular expressions. Therefore, recent work perform literaturereviews and study various approaches to automate the logparsing process [21], [30]. In this work, we propose an automated log parsing approach that is highly accurate, highlyefficient, highly scalable, and supports online parsing.2. https://www.splunk.com3. https://www.elastic.co0098-5589 (c) 2020 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications standards/publications/rights/index.html for more information.Authorized licensed use limited to: Concordia University Library. Downloaded on October 05,2020 at 12:55:18 UTC from IEEE Xplore. Restrictions apply.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TSE.2020.3007554, IEEETransactions on Software Engineering3TABLE 1Simplified log messages for illustration purposes.1.2.3.4.Found block rdd 42Found block rdd 42Dropping block rddDropping block rdd20 locally22 locally42 20 from memory42 22 from memoryn-gramsAn n-gram is a subsequence of length n from an itemsequence (e.g., text [31], speech [32], source code [33], orgenome sequences [34]). Taking the word sequence in thesentence: “The cow jumps over the moon” as an example,there are five 2-grams (i.e., bigrams): “The cow”, “cow jumps”,“jumps over”, “over the”, and “the moon”, and four 3-grams(i.e., trigrams): “The cow jumps”, “cow jumps over”, “jumpsover the”, and “over the moon”. n-grams have been successfully used to model natural language [31], [35], [36], [37] andsource code [38], [39], [40]. However, there exists no workthat leverages n-grams to model log data. In this work, wepropose Logram that leverages n-grams to parse log data inan efficient manner. Our intuition is that frequent n-gramsare more likely to be static text while rare n-grams are morelikely to be dynamic variables.Logram extracts n-grams from the log data and storethe frequencies of each n-gram in dictionaries (i.e., n-gramdictionaries). Finding all the n-grams in a sequence (for alimited n value) can be achieved efficiently by a single passof the sequence (i.e., with a linear complexity) [41]. Forexample, to get the 2-grams and 3-grams in the sentence“The cow jumps over the moon”, an algorithm can move oneword forward each time and get a 2-gram and a 3-gramstarting from that word each time. Besides, the nature ofthe n-gram dictionaries enables one to construct the dictionaries in parallel (e.g., by building separate dictionariesfor different parts of logs in parallel and then aggregatingthe dictionaries). Furthermore, the n-gram dictionaries canbe updated online when more logs are added (e.g., in logstreaming scenarios). As a result, as shown in our experimental results, Logram is highly efficient, highly scalable,and supports online parsing.2.23R ELATED W ORKIn this section, we discuss prior work that proposed logparsing techniques and prior work that leveraged log parsing techniques in various software engineering tasks (e.g.,anomaly detection).3.1Prior Work on Log ParsingIn general, existing log parsing approaches could begrouped under three categories: rule-based, source code-based,and data mining-based approaches.Rule-based log parsing. Traditionally, practitioners andresearchers hand-craft heuristic rules (e.g., in the formsof regular expressions) to parse log data [42], [43], [44].Modern log processing & management tools usually provide support for users to specify customized rules to parsetheir log data [45], [46], [47]. Rule-based approaches requiresubstantial human effort to design the rules and maintainthe rules as log formats evolve [24]. Using standardizedlogging formats [48], [49], [50] can ease the pain of manuallydesigning log parsing rules. However, such standardizedlog formats have never been widely used in practice [23].Source code-based log parsing. A log event is uniquelyassociated with a logging statement in the source code (seeSection 2.1). Thus, prior studies proposed automated logparsing approaches that rely on the logging statements inthe source code to derive log templates [5], [51]. Such approaches first use static program analysis to extract the logtemplates (i.e., from logging statements) in the source code.Based on the log templates, these approaches automaticallycompose regular expressions to match log messages thatare associated with each of the extracted log templates.Following studies [52], [53] applied [5] on production logs(e.g., Google’s production logs) and achieved a very highaccuracy. However, source code is often not available forlog parsing tasks, for example, when the log messages areproduced by closed-source software or third-party libraries;not to mention the efforts for performing static analysisto extract log templates using different logging libraries ordifferent programming languages.Data mining-based log parsing. Other automated log parsing approaches do not require the source code, but instead, leverage various data mining techniques. SLCT [54],LogCluster [55], and LFA [55] proposed approaches thatautomatically parse log messages by mining the frequenttokens in the log messages. These approaches count tokenfrequencies and use a predefined threshold to identify thestatic components of log messages. The intuition is that ifa log event occurs many times, then the static componentswill occur many times, whereas the unique values of thedynamic components will occur fewer times. Prior workalso formulated log parsing as a clustering problem andused various approaches to measure the similarity/distancebetween two log messages (e.g., LKE [8], LogSig [56], LogMine [57], SHISO [58], and LenMa [59]). For example, LKE [8]clusters log messages into event groups based on the editdistance, weighted by token positions, between each pair oflog messages.AEL [23] used heuristics based on domain knowledgeto recognize dynamic components (e.g., tokens followingthe “ ” symbol) in log messages, then group log messagesinto the same event group if they have the same staticand dynamic components. Spell [60] parses log messagesbased on a longest common subsequence algorithm, builton the observation that the longest common subsequence oftwo log messages are likely to be the static components.IPLoM [61] iteratively partitions log messages into finergroups, firstly by the number of tokens, then by the positionof tokens, and lastly by the association between token pairs.Drain [22] uses a fixed-depth tree to represent the hierarchical relationship between log messages. Each layer of thetree defines a rule for grouping log messages (e.g., log message length, preceding tokens, and token similarity). Zhuet al. [21] evaluated the performance of such data miningbased parsers and they found that Drain [22] achieved thebest performance in terms of accuracy and efficiency. Our ngram-based log parser achieves a much faster parsing speedand a better parsing accuracy compared to Drain.0098-5589 (c) 2020 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications standards/publications/rights/index.html for more information.Authorized licensed use limited to: Concordia University Library. Downloaded on October 05,2020 at 12:55:18 UTC from IEEE Xplore. Restrictions apply.

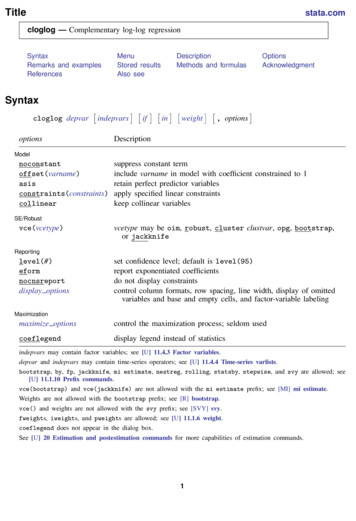

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TSE.2020.3007554, IEEETransactions on Software Engineering417/06/09 20:10:46 INFO rdd.HadoopRDD: Input split: hdfs://hostname/2kSOSP.log:21876 729217/06/09 20:10:46 INFO rdd.HadoopRDD: Input split: hdfs://hostname/2kSOSP.log:14584 729217/06/09 20:10:46 INFO rdd.HadoopRDD: Input split: hdfs://hostname/2kSOSP.log:0 729217/06/09 20:10:46 INFO rdd.HadoopRDD: Input split: hdfs://hostname/2kSOSP.log:7292 729217/06/09 20:10:46 INFO rdd.HadoopRDD: Input split: hdfs://hostname/2kSOSP.log:29168 729217/06/09 20:11:11 INFO storage.BlockManager: Found block rdd 42 20 locally17/06/09 20:11:11 INFO storage.BlockManager: Found block rdd 42 22 locally17/06/09 20:11:11 INFO storage.BlockManager: Found block rdd 42 23 locally17/06/09 20:11:11 INFO storage.BlockManager: Found block rdd 42 24 locally ! ! "" "Input split: hdfs://hostname/2kSOSP.log:21876 7292Input split: hdfs://hostname/2kSOSP.log:14584 7292Input split: hdfs://hostname/2kSOSP.log:0 7292Input split: hdfs://hostname/2kSOSP.log:7292 7292Input split: hdfs://hostname/2kSOSP.log:29168 7292Found block rdd 42 20 locallyFound block rdd 42 22 locallyFound block rdd 42 23 locallyFound block rdd 42 24 locally ! # ! # !&3-gramsInput- split:- hdfs://hostname/2kSOSP.log:21876 7292split:- hdfs://hostname/2kSOSP.log:21876 7292- Inputhdfs://hostname/2kSOSP.log:21876 7292- Input- split:.split:- hdfs://hostname/2kSOSP.log:29168 7292- Foundhdfs://hostname/2kSOSP.log:29168 7292- Found- blockFound- block- rdd 42 20block- rdd 42 20- locallyrdd 42 20- locally- Foundlocally- Found- block.2-gramsInput- split:split:- hdfs://hostname/2kSOSP.log:21876 7292hdfs://hostname/2kSOSP.log:21876 7292- Input.hdfs://hostname/2kSOSP.log:29168 7292- FoundFound- blockblock- rdd 42 20rdd 42 20- locallylocally- Found.# appearance11111111131# appearance5111141141Fig. 2. A running example of generating n-gram dictionary, which will be later used for parsing each log messages as shown in Figure 3.In addition, prior study performed a systematic literature review on automated log parsing techniques [30].They investigated the advantages and limitations of 17 logparsing techniques in terms of seven aspects, such as efficiency and required parameter tuning effort, which can helppractitioners choose the right log parsers for their specificscenarios and provide insights for future research directions.3.2Applications of Log ParsingLog parsing is usually a prerequisite for various log analysistasks, such as anomaly detection [5], [6], [7], [8], [9], failurediagnosis [10], [11], performance diagnosis and improvement [12], [13], and system comprehension [10], [14]. Forexample, Fu et al. [8] first parse the raw log messages toextract log events. Based on the extracted event sequences,they then learn a Finite State Automaton (FSA) to representthe normal work flow, which is in turn used to detectanomalies in new log files. Prior work [20] shows thatthe accuracy of log parsing is critical to the success of loganalysis tasks. Besides, as the size of log files grows fast [1],[2], [27], a highly efficient log parser is important to ensurethat the log analysis tasks can be performed in a timelymanner. In this work, we propose a log parsing approachthat is not only accurate but also efficient, which can benefitfuture log analysis research and practices.4A PPROACHIn this section, we present our automated log parsing approach that is designed using n-gram dictionaries.4.1Overview of LogramOur approach consists of two steps: 1) generating n-gramdictionaries and 2) parsing log messages using n-gramdictionaries. In particular, the first step generates n-gramsfrom log messages and calculate the number of appearancesof each n-gram. In the second step, each log message istransformed into n-grams. By checking the number of appearance of each n-gram, we can automatically parse thelog message into static text and dynamic variables. Figure 2and 3 show the overview of our approach with a runningexample.4.24.2.1Generating an n-gram dictionaryPre-processing logsIn this step, we extract a list of tokens (i.e., separatedwords) from each log message. First of all, we extract thecontent of a log message by using a pre-defined regularexpression. For example, a log message often starts withthe time stamp , the log level, and the logger name. Sincethese parts of logs often follow a common format in thesame software system (specified by the configuration oflogging libraries), we can directly parse and obtain theseinformation. For example, a log message from the runningexample in Figure 2, i.e., “17/06/09 20:11:11 INFO storage.BlockManager: Found block rdd 42 24 locally”, “17/06/0920:11:11” is automatically identified as time stamp, “INFO”is identified as the log level and “Storage.BlockManager:” isidentified as the logger name; while the content of the logis “Found block rdd 42 24 locally”. After getting the contentof each log message, we split the log message into tokens.The log message is split with white-space characters (e.g.,space and tab). Finally, there exist common formats for somespecial dynamic information in logs, such as IP address andemail address.In order to have a unbiased comparison with otherexisting log parsers in the LogPai benchmark (cf. Section 5),we leverage the openly defined regular expressions thatare available from the LogPai benchmark to identify suchdynamic information.4.2.2Generating an n-gram dictionaryWe use the log tokens extracted from each log messageto create an n-gram dictionary. Naively, for a log messagewith m tokens, one may create an n-gram where n m.However, when m has the same value as n, the phraseswith n-grams are exactly all log messages. Such a dictionaryis not useful since almost all log messages have tokens thatare generated from dynamic variables. On the other hand,a small value of n may increase the chance that the textgenerated by a dynamic variable has multiple appearances.A prior study [62] finds that the repetitiveness of an ngram in logs starts to become stable when n 3. Therefore, in our approach, we generate the dictionary using0098-5589 (c) 2020 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications standards/publications/rights/index.html for more information.Authorized licensed use limited to: Concordia University Library. Downloaded on October 05,2020 at 12:55:18 UTC from IEEE Xplore. Restrictions apply.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TSE.2020.3007554, IEEETransactions on Software Engineering5Input split: hdfs://hostname/2kSOSP.log:29168 7292Found block rdd 42 20 locally ! " ! # ! "Found block rdd 42 22 locally !" # " 3-gramssplit:- hdfs://hostname/2kSOSP.log:29168 7292- Foundhdfs://hostname/2kSOSP.log:29168 7292- Found- blockFound- block- rdd 42 20block- rdd 42 20- locallyrdd 42 20- locally- Foundlocally- Found- block & ! ! # # # block- rdd 42 20rdd 42 20- locally % ! " & # & % ! " ! " ! # ! " % ! # ! ! & % ! # appearance111113 # % ! # 2-gramshdfs://hostname/2kSOSP.log:29168 7292- FoundFound- blockblock- rdd 42 20rdd 42 20- locallylocally- Found# appearance14114 ! ! " & # & % ! " Fig. 3. A running example of parsing one log message using the dictionary built from Figure 2.phrases with two or three words (i.e., 2-grams and 3-grams).Naively, one may generate the dictionary by processingevery single log message independently. However, such anaive approach has two limitations: 1) some log events mayspan across multiple lines and 2) the beginning and theending tokens of a log message may not reside in the samenumber of n-grams like other tokens (c.f. our parsing step“Identifying dynamically and statically generated tokens”in Section 4.3.2). For example, the first token of each logmessage cannot be put in a 2-gram nor 3-gram and thesecond token of each log line cannot be put in a 3-gram.This limitation may lead to potential bias of the tokens beingconsidered as dynamic variables. In order to ensure that allthe tokens are considered equally when generating the ngram dictionary for Logram, at the beginning and the endingtokens of a log message, we also include the end of the priorlog message and the beginning of the following log message,respectively, to create n-grams. For example, if our highestn in the n-gram is 3, we would check two more tokens atthe end of the prior log message and the beginning of thefollowing log message. In addition, we calculate the numberof occurrences of each n-gram in our dictionary.As shown in a running example in Figure 2, a dictionaryfrom the nine lines of logs is generated consisting of 3-gramsand 2-grams. Only one 3-grams, “locally- Found- block”,in the example have multiple appearance. Three 2-grams,“Found- block”, “Input- split:” and “locally- Found”, havefour to five appearances. In particular, there exists n-grams,such as the 3-gram “locally- Found- block”, that are generated by combining the end and beginning of two logmessages. Without such combination, tokens like “input”,“Found” and “locally” will have lower appearance in thedictionary.4.3Parsing log messages using an n-gram dictionaryIn this step of our approach, we parse log messages usingthe dictionary that is generated from the last step.4.3.1 Identifying n-grams that may contain dynamic variablesSimilar to the last step, each log message is transformed inton-grams. For each n-gram from the log message, we checkits number of appearances in the dictionary. If the numberof occurrence of a n-gram is smaller than a automaticallydetermined threshold (see Section 4.3.3), we consider thatthe n-gram may contain a token that is generated fromdynamic variables. In order to scope down to identifythe dynamically generated tokens, after collecting all lowappearing n-grams, we transform each of these n-gramsinto n 1-grams, and check the number of appearance ofeach n 1-gram. We recursively apply this step until wehave a list of low-appearing 2-grams, where each of themmay contain one or two tokens generated from dynamicvariables. For our running example shown in Figure 3, wefirst transform the log message into two 3-grams, whileboth only have one appearance in the dictionary. Hence,both 3-grams from the running example in Figure 3 maycontain dynamic variables. Afterwards, we transform the3-grams into three 2-grams. One of the 2-grams (“Found block”) has four appearances; while the other two 2-grams(“block- rdd 42 20” and “rdd 42 20- locally”) only haveone appearance. Therefore, we keep the two 2-grams toidentify the dynamic variables.4.3.2kensIdentifying dynamically and statically generated to-From the last step,

In this section, we introduce the background of log parsing and n-grams that are used in our log parsing approach. 2.1 Log Parsing In general, the goal of log parsing is to extract the static tem-plate, dynamic variables, and the header information (i.e., timestamp, level, and logger) from a raw log message. While