Transcription

Basic issues on Parsing 1IntroductionParsing issuesParsing context free grammar (CFG)Robust parsingStatistical parsingNLP parsing general1

Basic issues on Parsing 2Parsing goals Syntactic structure Semantic structureSyntax/semantic interaction Only syntax Only semantics Performing in sequence Performing in parallelNLP parsing general2

Basic issues on Parsing 3Parsing as searching in a searchspace Characterizing the states (if possible) enumerate them Define the initial state (s) Define (if possible) final statesor the condition to reach one ofthemNLP parsing general3

Basic issues on Parsing3Ambiguity in parsingA sentence is structurally ambiguos ifthe grammar assigns it more than apossible parse.Common kinds of structuralambiguity include:- PP-attachment- Coordination ambiguityNLP parsing general4

Basic issues on Parsing5“I was on the hill that has a telescopewhen I saw a man.”“I saw a man who was on a hill andwho had a telescope.”“I saw a man who was on the hillthat has a telescope on it.”“Using a telescope, I saw a man whowas on a hill.”.“I was on the hill when I used thetelescope to see a man.”I saw the man on the hill with the telescopeMeNLP parsing generalSeeA manThe telescopeThe hillTaken from Loper5

Basic issues on Parsing4Factors in parsing Grammar expressivity Coverage Involved Knowledge Sources Parsing strategy Parsing direction Ambiguity management (in)determinism Parsing engineeringNLP parsing general6

Basic issues on Parsing 6Parsers today Context free grammars (extended or not) Tabular Charts Others Unification-basedStatisticalDependency parsingRobust parsing (shallow, fragmental, chunkers,spotters)NLP parsing general7

Basic issues on Parsing7Parsing strategiesParsing can be viewed as a search problemTwo common architectural approaches for thissearch areTop-down: Starting with the root S andgrowing trees down to the input wordsBottom-up: Starting with the words andgrowing trees uptoward the root S.NLP parsing general8

Basic issues on ParsingCFG grammarNon terminalsentence NP VPNP det nNP nVP viVP vt NPtheNLP parsing generalcat8Terminaldet then catn fishv eatseatsfish9

Basic issues on ParsingTop-downFirst stepSentenceNPVPSecond stepSentenceSentenceNP VPNPVPdet n vinviNLP parsing general9SentenceSentenceNPVPNPVPdet n vt NP n vt NP10

Basic issues on ParsingBottom-upFirst stepSecond stepThird stepFourth stepNLP parsing general10detnvtnthecateats ecateatsfishSentenceNPVP11

Basic issues on Parsing11Parsing strategy Top Down Guided by goalsStarts with a goal (or set of goals) to be built.Tries to solve one of the pending goalsIf more than one production can be applied: serach problem Pending goals can be reordered Several search criteria (including heuristics) can beapplied The process ends when all the goals have been reachedNLP parsing general12

Basic issues on Parsing12Parsing strategy Bottom up Data driven Starts from the sequence of words to beparsed (facts) Proceeds bottom up Several search criteria (including heuristics)can be applied The process ends when the list of factscontains the initial symbol of the grammarNLP parsing general13

Basic issues on Parsing 13Problems of top down strategy Left recursivity Many productions expanding the samenon terminal Useless work Search basically guided by the grammar Repeated work Problems of backtracking algorithmsNLP parsing general14

Basic issues on Parsing 14Problems of bottom up parsing empty (optional) categories Useless work (locally possiblebut globally impossible) Inefficient when there is a highlexical ambiguity Repeated workNLP parsing general15

Transition Networks 1Finite state automata- Transition Network (TN) States associated to the positions in the sentence Arcs (transitions) Labeled with part of speech (POS) An arc can be traversed if the current word has the samePOS as the arc. Non determinism More than one initial state Current word with more than 1 POS More than one arc for the same POSNLP parsing general16

Transition Networks 2adjdet q1q0ndetq2vq4q5nvnpnq6adjq3thedetNLP parsing generalcat eats fishnvn17

Transition Networks 3Transition networks limitations Only regular grammarsOnly recognitionNon-determinism - backtrackingNo separation between grammar andparser grammar - syntactic model description parser - controlNLP parsing general18

Transition Networks 4Recursive transition networks (RTN) Colection of TNs labeled with a name Arcs Labeled as in TN with POS Terminal labels Labeled with RTN identifiers Non terminal labels Final states in RTN produce comingback to the target state of the arcproducing the callRTN are weakly equivalent to CFGNLP parsing general19

Transition Networks 52NPSentenceVP31NPndet12PP3nadjnpNLP parsing general20

Transition Networks 6Vtrans1VPNP23VintPrepPPNLP parsing general1NP2321

Transition Networks 7Recursive transition networks (RTN) Limitations Transitions depend only on thecategories CFGOnly recognizingIn fact fixed top-down strategyNLP parsing general22

Transition Networks 8 Woods (1970)Aumented transition networks (ATN) RTN withoperations attached to arcs and use of registersOperations ConditionsFilter transitions betweenstatesActionsBuilding intermediate andoutput structures.InitializationsATN allow expressing contextual constraintsNLP parsing general23

Transition Networks 9FeaturesNumber: Singular, PluralPerson: 1st, 2nd, 3rdDefault: emptyDefault: 3rdRols: Subject6:Proper5:Pronoun1:detf8: Sendg4:Nounh7:pp2: Jump3: AdjectiveNLP parsing generalTaken from Winograd, 198324

Transition Networks 10Inicializations, Conditions and ActionsNP-1: fDeterminergA: Set Number to the number of *NP-4: gNounhC: Number is empty or number is the numberof *A: Set Number to the number of *Set Subject to *NP-5: fPronounhA: Set Number to the number of *Set Person to the Person of *Set Subject to *NP-6: fProperhA: Set Number to the number of *Set Subject to *NLP parsing general25

Transition Networks 11Aumented Transition networks limitations Fixed top-down strategy Redundancy in backtracking operations Problems of notational expressivity: Very difficult to transportNLP parsing general26

Basic issues on Parsing 11Unified mechanism of parser description Sikkel, 1997 Parser (schema): Given a sentence, an inicial set of items is build Given a grammar, a set of rules can be used for gettingadditional items Parser (algorithm):Parsing schema data structures control structures( communication structures)NLP parsing general27

Charts1 A Chart is a directed graph built dynamicallyalong parsingExtension of WFSTNodes correspond to the start and end of thesentence and to the positions between words.Active arcs (goals or hypothesis) and inactive arcs(facts) Notation active arcs: dotted rules inactive arcs : category10theNLP parsing general2cat43eatsfish28

Charts2CFG grammarNon terminalsentence NP VPNP det nNP nVP viVP vt NPtheNLP parsing generalcatTerminaldet then catn fishv eatseatsfish29

Charts3program chart{ inicialize the chart with H;inicialize the agenda with items which can be deduced withoutantecedents;while not empty (agenda){extract current item from agenda and put it on the chart;foreach item which could be deduced with one step includingcurrent item{if item not in agenda and not in chartthen add item to agenda}}}NLP parsing general30

Charts4 A concrete chart algorithm should: define the structure of agenda and itsscheduling criteria define order of performing deductive stepsDscan Dcompl - Combination ruleDpred - Top-down ruleBottom-up ruleBUstrategyNLP parsing generalTDstrategy31

Charts5Combination ruleWhen an active arc of the Chart reaches a node j and from thisnode starts aninactive arc labeled with the category the active arc was waitingfor, both arcs are combined for building a new arc (active ornot) starting in the start node of the active arc and ending inthe ending node of the inactive arc.BA α BβijkA αB βNLP parsing general32

Charts6Top-down ruleWhen an active arc of the Chart reaches a node j, for all theproductions of the grammar expanding the category the active arcis waiting for a new active arc is built starting and ending in jcorresponding to the dotted rule with dot in the initial position.B γB γB ηB ϕ.B ηiNLP parsing generalB ϕA α Bβj33

Charts7Bottom-up ruleWhen an inactive arc of the Chart starts in a node i, for eachProducction of the grammar owning as first copnstituent of theright side the category of the inactive arc a new active arc is builtstarting and ending in i corresponding to the dotted rule with dotin the initial positionB AγB AηB AγB AηB Aϕ.B AϕiNLP parsing generalAj34

Charts8[sentence NP VP ][sentence NP VP ][sentence NP VP][NP n ][VP vi][sentence NP VP][VP vt NP][NP det n][NP det n][NP det n ][NP n] [det][n]the0NLP parsing general[NP det n][VP vi ][NP n][vt] [vi]cat1[VP vt NP]eats2[n]fish3435

Charts9 Problems The size of the chart grows with thesize of the grammar making thealgorithm difficult to scale up. A lot of useless active and inactive arcsare built. Inpractice,lackingappropriateknowledge, a fixed bottom-up strategy,eventually corrected with top-downpredictions, is usedNLP parsing general36

Charts10- Ambiguity combined with the repeated parsing ofsub-trees are a difficulty for parsing algorithms.Those algorithms use simple backtrackingmechanisms.- There parsing algorithms that use dynamicprogramming techniques, such as a table of partialparsers to efficiently parse ambiguous sentences.-The CKY, Earley and Chart-Parsing algorithms all usedynamic-programming to solve repeated parsing ofsubtrees problem.NLP parsing general37

Robust Parsing1 Conventional methods of parsingare insufficient for dealing withnon restricted texts Adequate segmentation DisambiguationWhich are the units toparse CoverageWhich is the most likelyparse What to do Not parsing all Not parsing in depthNLP parsing generalParsing beyond the lexicalcoveragefragmentalparsingshallowparsing38

Robust Parsing2Problems when parsing non restrictedcorpus Adaptation of a grammar to a corpus orsublanguage Selection of the correct (!?) parsebetween the ones allowed by thegrammar Production of good parses for entriesoutside the coverage of the grammar(Robustness) NLP parsing general39

Robust Parsing2- Partial parsing and chunking are methodsfor identitying shallow syntactic constituentsin a text.- High accuracy partial parsing can beachieved either trough rule-based ormachine learning-based methods.NLP parsing general40

Robust Parsing3Partial parsersphrasal parsers chunkers, spotters Church,1988coocurrence parsers Church,Hanks,1989, Brent,1993fragmental parsers Fidditch, Hindle,1994, MITFP, Abney,1991constraint-based parsers Voutilainen,1995NLP parsing general41

Robust Parsing4 Chunking detection of phrases nominal, verbal,adjetival, adverbial basic (withoutrecursion) Finite state techniques Performance of a cascade oftransductors Hidden Markov ModelsAbney, 1996Argamon et al, 1998Cardie, Pierce, 1998Church, 1988Ramshaw, Marcus,1995Skut, Brants, 1998 Machine LearningNLP parsing general42

Robust Parsing5Definition of chunkWith linguistic basis: AbneyOnly pragmatic: Contiguous sequences of related tokens– Not confusing with terms e.g. Base NPApproaches to chunkingLook for (include) informationRemove information e.g. ChinkNLP parsing general43

Robust Parsing6Representing chunksLabels e.g. tags BEGIN, INSIDE, OUTSIDETreesChunk parserLooking for non overlapped chunks forreaching a maximal coverageNLP parsing general44

Robust Parsing7Frecuently regular expressions over sequences of POStagsaglomerative (chunk rules) vs divisive (chink rules)- Rules for fusion of adjacent chunks- Rules for splitting a chunk in smaller componentsNLP parsing general45

Statistical Parsing1Using statistical models for- Guiding parsing- Get the most likely parse- Ambiguity resolution (pp-attachment)- Grammatical induction from corporaGoal: Parsing of non restricted texts with a reasonable levelof accuracy ( 90%) and efficiency.Requirements:Corpora tagged (with POS): Brown, LOB, Clic-TalpCorpora analyzed: Penn treebank, Susanne, AncoraNLP parsing general46

Statistical Parsing2- Lexical approaches- Context free: unigram- Context dependent: N-gram, HMM- Syntactic approaches- Statistical context free grammar (SCFG)- Hibrid approaches- Stochastic lexicalized Tags- Computing the most likeky (most probable) parseNLP parsing general47

Statistical Parsing3Probabilistic grammars assign a probability to a sentenceor string of words.Usually they capture more general syntactic informationthan the N-gram grammars.In a probabilistic context-free grammar (PCFG):- Associate a probability to each rule- Associate a probability to each lexical entryEach PCFG rule is treated as if it were conditionallyindependent; thus the probability of a sentence iscomputed by multiplying the probabilities of each rule inthe parse of the sentence.NLP parsing general48

Statistical Parsing4NLP parsing general49

Statistical Parsing5NLP parsing general50

Statistical Parsing6NLP parsing general51

Statistical Parsing7NLP parsing general52

Statistical Parsing7Pros and cons of SCFGSome idea of the probability of a parse but not very goodCFG cannot be learned without negative examples, SCFGcan SCFGs provide a language model for a languageIn practice SCFG provide a worse language model than a3-gramP([N [N toy] [N [N coffee] [N grinder]]]) P ([N [N [N cat] [N food]] [N tin]])P (NP Pro) is in Subj position than in Obj position.NLP parsing general53

Statistical Parsing8- Robust- Posibility of combining SCFG with 3-grams- SCFG assign a lot of probability mass toshort sentences (a small tree is more probablethan a big one)- Parameter estimation (probabilities)- Problem of sparseness- VolumeNLP parsing general54

Statistical Parsing9- Association to the rule. Information aboutthe point of application of the rule in thederivation tree is lost.- Low frequency constructions are penalized- Probability of a derivation. Contextualindependence is assumed- Posibility of relax conditional independence:- Sensitivity to structure- LexicalizationNLP parsing general55

Statistical Parsing10Sensitivity to structureNode expansion depends of its position in the treePronoun LexicalSubject91%9%Object34%66%Enrichment of a node with information of its ancestors SNP is different of VPNPPronouns as arguments of ditransitive verbs I gave Charlie the bookI gave the book to CharlieI gave you the book? I gave the book to youNLP parsing general56

Statistical Parsing11(Head) Lexicalizationput takes both an NP and a VP Sue put [ the book ]NP [ on the table ]PP * Sue put [ the book ]NP * Sue put [ on the table ]PPlike usually takes an NP and not a PP Sue likes [ the book ]NP * Sue likes [ on the table ]PPNLP parsing general57

Statistical Parsing12Local TreeComeTakeThinkWantVP- V9.5%2.6%4.6%5.7%VP- V NP1.1%32.1%0.2%13.9%VP- V PP34.5%3.1%7.1%0.3%VP- V SBAR6.6%0.3%73.0%0.2%VP- V S2.2%1.3%4.8%70.8%VP- V NP S0.1%5.7%0.0%0.3%VP- V PRT NP0.3%5.8%0.0%0.0%VP- V PRT PP6.1%1.5%0.2%0.0%NLP parsing general58

Statistical Parsing13NLP parsing general59

Statistical Parsing14NLP parsing general60

Statistical Parsing15NLP parsing general61

Statistical Parsing15Two modelsConditional/Discriminative model :The probability of a parse tree is directly estimatedP t s, G con P t s, G 1tProbabilities are conditioned on a concrete sentence.No sentence distribution probabilities is assumedNLP parsing general62

Statistical Parsing16 CFGSCFG For each rule of G, (A α) PG we shouldbe able to define a probability P(A α) P A α 1 A α P G Probability of a treeP (ψ ) αP ( A α ) f ( A α ;ψ )( A ) PGNLP parsing general63

Statistical Parsing17P(t) -- Probability of a tree t (product of probabilitiesof the rules generating it.P(w1n) -- Probability of a sentence is the sum of theprobabilities of all the valid parse trees of thesentenceP(w1n) Σj P(w1n, t) where t is a parse of w1n Σj P(t)NLP parsing general64

Statistical Parsing18- Positional invariance:The probability of a subtree is independent of itsposition in the derivation tree- Context-freeIndependence from ancestorsNLP parsing general65

Statistical Parsing19Parameter estimation Supervised learning– From a treebankNon supervised learningNLP parsing general66

Statistical Parsing20NLP parsing general67

Treebank grammars1Consider a treebank containingthe following treesS (1)S (2)S (3)AABBAAaaaafgS (4)S (5)AAAAfagfNLP parsing general68

Treebank grammars2Supose that (1) occurs 40 times, (2)occurs 10 times, (3) occurs 5 times, (4)occurs 5 times, and (5) occurs once.We want to induce a SCFG reflexing thistreebank.Parameter estimationΣj P(Ni ζj Ni ) 1NLP parsing general69

Treebank grammars3RulesSSAAAB A A B B: a: f: g: a:NLP parsing general: 40 5 5 1 511040 40 5 855 5 1 115 1 61070

Treebank grammars4Parameters maximizing global likelihood of thecorpus:GFrequencyTotalProbabilityS AAS BBA aA fA gB 591.0NLP parsing general71

Treebank grammars5Given this parametrization,what is the mostlikely parse for "a a"?S (1)S (2)AABBaaaaP(1) P(S A A) * P(A a) * P(A a) 0.836 * 0.833 * 0.833 0.580P(2) P(S B B) * P(B a) * P(B a) 0.164 * 1.0 * 1.0 0.164NLP parsing general72

Treebank grammars8-Removing non lnguistically valid rules- Assign probabilities (MLE) to the initial rules- Remove a rule except in the the probability of the structurebuilt from its application is greater than the probability ofbuilding applicating simpler rules.- Thresholding Removing rules occurring n allyCompactedGrammar 1LinguisticallyCompactedGrammar 417NLP parsing general73

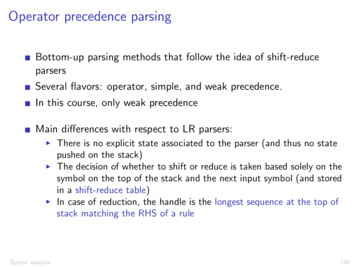

NLP parsing general 37 Charts 10 - Ambiguity combined with the repeated parsing of sub-trees are a difficulty for parsing algorithms. Those algorithms use simple backtracking mechanisms. - There parsing algorithms that use dynamic programming techniques, such as a table of partial parsers to efficiently parse ambiguous sentences.