Transcription

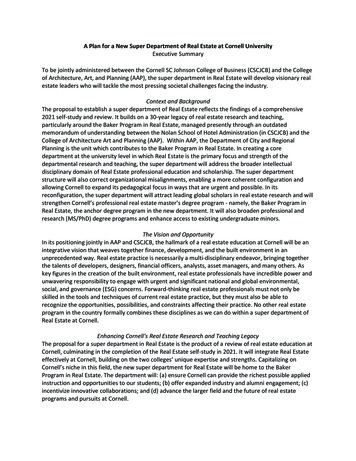

Real-world Video Super-resolution: A Benchmark Datasetand A Decomposition based Learning Scheme†Xi Yang1,2,*, Wangmeng Xiang1,2,*, Hui Zeng1,2 , Lei Zhang1,2,1The Hong Kong Polytechnic University, 2 DAMO Academy, Alibaba Groupxxxxi.yang@connect.polyu.hk, {cswxiang, cshzeng, cslzhang}@comp.polyu.edu.hkAbstractVideo super-resolution (VSR) aims to improve the spatial resolution of low-resolution (LR) videos. ExistingVSR methods are mostly trained and evaluated on synthetic datasets, where the LR videos are uniformly downsampled from their high-resolution (HR) counterparts bysome simple operators (e.g., bicubic downsampling). Suchsimple synthetic degradation models, however, cannot welldescribe the complex degradation processes in real-worldvideos, and thus the trained VSR models become ineffective in real-world applications. As an attempt to bridgethe gap, we build a real-world video super-resolution (RealVSR) dataset by capturing paired LR-HR video sequencesusing the multi-camera system of iPhone 11 Pro Max. Sincethe LR-HR video pairs are captured by two separate cameras, there are inevitably certain misalignment and luminance/color differences between them. To more robustlytrain the VSR model and recover more details from the LRinputs, we convert the LR-HR videos into YCbCr space anddecompose the luminance channel into a Laplacian pyramid, and then apply different loss functions to different components. Experiments validate that VSR models trained onour RealVSR dataset demonstrate better visual quality thanthose trained on synthetic datasets under real-world settings. They also exhibit good generalization capability incross-camera tests. The dataset and code can be found athttps://github.com/IanYeung/RealVSR.1. IntroductionSuper-resolution (SR) [5] is a classical yet challengingtask in image/video processing and computer vision, aimingat reconstructing high-resolution (HR) images/videos fromtheir low-resolution (LR) counterparts. There are two major research branches in the field of SR: single image superresolution (SISR) [9] and video super-resolution (VSR) [1].* Equal contribution.† Corresponding author.RIF grant (R5001-18).This work is supported by the Hong Kong RGCLRVimeo-90kRealVSR Lv1RealVSR Lv2Figure 1. Video super-resolution results on a real-world video(captured by the iPhone 11 Pro Max) by EDVR [27] trained onthe synthetic Vimeo-90k datatset [31] and our RealVSR dataset.While SISR mainly exploits the spatial redundancy withinan image, VSR utilizes both spatial and temporal redundancies to reconstruct the HR video. With the increasing popularity of mobile imaging devices and rapid developmentof communication technology, VSR is attracting more andmore attention for its great potentials in HR video generation and enhancement.The recent progress in VSR research largely attributesto the rapid development of deep convolutional neural networks (CNNs) [3, 31, 14, 20, 25, 27, 11], which set newstate-of-the-arts on several benchmarking VSR datasets[31, 23]. Those datasets, however, are mostly synthetic onesbecause it was difficult to collect real-world LR-HR videopairs. Specifically, the LR videos are obtained by uniformlydownsampling their HR counterparts using some simpleoperators, e.g., bicubic downsampling or direct downsampling after Gaussian smoothing. Unfortunately, such simpledegradation models could not faithfully describe the complex degradation processes in real-world LR videos. As a4781

result, VSR models trained on such synthetic datasets become much less effective when applied in real-world applications. An example is shown in Fig. 1, where we can seethat the VSR model trained on the widely used Vimeo-90kdatatset [31] is less effective in super-resolving on a realworld video captured by the iPhone 11 Pro Max.In order to remedy the above mentioned problem, it ishighly desired that we can have a VSR dataset of pairedLR-HR sequences which are more consistent with the realworld degradations. Constructing such a paired datasetused to be very difficult since it requires capturing accurately aligned LR-HR sequences of the same dynamic scenesimultaneously. Fortunately, the multi-camera system ofiPhone 11 Pro series enables us to move one large step towards this goal. As shown in Fig. 2, there are three separate cameras of different focal lengths available in iPhone11 Pro series. Utilizing the double taking function providedby the DoubleTake app, we are able to capture two approximately synchronized sequences using two of the three cameras. Some image registration algorithms [4] can then beemployed to align the LR-HR video sequence pairs. Fig. 2also shows an example of the LR-HR pairs before and afterregistration. In this way, a real-world VSR dataset, namelyRealVSR, is constructed by capturing various indoor andoutdoor scenes under different illuminations. RealVSR provides a worthy benchmark for training and evaluating VSRalgorithms for real-world degradations.Due to the constraints in dual camera capturing, thereexists certain misalignment and luminance/color differences between the LR-HR sequences even after registration.Therefore, directly training a CNN to map the LR sequenceto the HR sequence with simple losses is not a very suitable strategy. To alleviate the influence of color difference,we disentangle the luminance and color by transforming theRGB videos into YCbCr space, and focus on the reconstruction of video details such as edges and textures. On thecolor channels, we adopt a gradient weighted loss [30], intending to pay more attention to color edge reconstruction.To address the problem of small misalignment and luminance difference in Y channel, we decompose Y channels ofpredicted and targeted frames into Laplacian pyramids, andapply different losses on low-frequency and high-frequencycomponents. As shown in Fig. 1, the VSR model trained onour dataset with the proposed learning strategy reproducesmuch better video details with less artifacts.The contributions of this work are twofold. First, a RealVSR dataset (the first of its kind to the best of our knowledge) is constructed to mitigate the limitations of syntheticVSR datasets and provides a new benchmark for trainingand evaluating real-world VSR algorithms. Second, we propose a specific training strategy on RealVSR to learn VSRmodel with focus on detail reconstruction. Extensive experiments are conducted to validate the proposed RealVSRdataset and training strategy. Although the RealVSR datasetis built with iPhone 11 Pro Max, the VSR models trained onit also exhibit good generalization capability to videos captured by other mobile phone cameras.2. Related WorkVideo super-resolution datasets.There are severaldatasets widely adopted in the VSR research. Vimeo-90k[31] is the most popular one, which consists of more than90,000 septuplets collecting from the Internet. Each septuplet contains 7 frames of resultion 256 448. REDS [23] isa dataset captured by the GOPRO sport camera. It consistsof 300 sequences and each sequence contains 100 frames ofresolution 720 1280. There are also some private datasetsfor VSR training [25]. In all these datasets, LR sequencesare synthesized from HR sequences with simple degradation models, such as bicubic downsampling or direct downsampling after Gaussian smoothing. Though these datasetscan serve as reasonable benchmarks for investigating andevaluating VSR algorithms, the adopted simple degradation model for LR-HR video pair generation makes themhard to use in practice because the degradation process ofreal-world videos is much more complex. When applyingthe VSR models trained on these datasets to real-world LRvideos, the super-resolved videos are often over-smooth andprone to visual artifacts. This motivates us to build a realworld VSR dataset to narrow this synthetic-to-real gap.Real-world image super-resolution datasets. Thoughthere is no real-world VSR dataset publically available yet,several real-world SISR datasets have been built and released. Chen et al. [6] collected 100 LR-HR image pairs ofprinted postcards in a carefully controlled indoor environment. Zhang et al. [33] built a raw image SISR dataset of500 outdoor scenes via optical zooming, while the LR-HRimage pairs are not well-aligned. Cai et al. [4] constructeda real-world SISR benchmark also by optical zooming, butthey developed a registration algorithm to carefully alignthe LR-HR image pairs so that end-to-end training of CNNis easy to implement. Wei et al. [30] further explored thisidea and established a larger benchmarking dataset withmore DSLR cameras. Inspired by those works on realworld SISR datasets, we propose to build the first real-worldVSR dataset to facilitate the research of practical VSR.Video super-resolution methods. The recent development of VSR algorithms [3, 31, 14, 20, 25, 27, 11] largelybenefits from the rapid development of deep-learning technologies. Existing VSR algorithms can be roughly dividedinto two categories based on how the frame alignment isdone. The first category of algorithms does not have an explicit alignment process. Instead, they resort to techniquessuch as 3-dimensional convolution [15] and recurrent neuralnetwork [11] to exploit spatial-temporal information. Theother category of algorithms adopts explicit alignment to4782

Figure 2. The camera system of iPhone 11 Pro Max, the DoubleTake app, the captured low-resolution (LR) and high-resolution (HR)sequences, and the LR-HR video registration process.help the network better exploit spatial-temporal information. These algorithms generally follow the paradigm ofalignment, fusion and reconstruction. Earlier algorithmsadopt optical flow to perform frame alignment. Deep-DE[20] and VSRnet [14] first perform motion compensationwith optical flow and then reconstruct the HR frame witha CNN. Later, Caballero et al. [3] proposed an end-to-endsolution called VESPCN, which integrates alignment andreconstruction into a single deep-learning framework. Similar strategies are adopted in DRVSR [25] and TOF [31].Recently, deformable convolution [7, 35] has become popular for alignment owing to its powerful modeling capability.In particular, EDVR [27] aligns multi-level frame featureswith deformable convolution and fuses the aligned featureswith spatial and temporal attention. All the above VSR algorithms are developed based on the synthetic datasets. Inthis work, we built a real-world VSR dataset, which hasdistinct properties from the synthetic ones. Some new training strategies will be accordingly proposed to train effectivereal-world VSR models.3. The Real-world VSR DatasetOur goal is to build a real-world VSR dataset of pairedLR-HR sequences, which can serve as a worthy benchmark to train and evaluate real-world VSR algorithms. Thedataset is constructed using iPhone 11 Pro Max mobilephones with dual camera taking function provided by theDoubleTake app. As illustrated in Fig. 2, the DoubleTakeapp makes it possible to capture two approximately synchronized high-definite video sequences at different scalesby two cameras with different focal lengths. There are threerear cameras mounted on iPhone 11 Pro Max: an ultrawide camera with 13mm-equivalent lens, a wide camerawith 26mm-equivalent lens, and a telephoto-camera with52mm-equivalent lens. All the three cameras capture photos with 12 megapixels. Cameras with larger focal lengthcan capture scenes with finer details, and the scaling factoris equal to the ratio of focal lengths. Considering the severedistortion of ultra-wide lens and the inferior image qualityafter cropping, we adopt the cameras with 26mm-equivalentlens and 52mm-equivalent lens for dataset construction. Foreach pair of captured video sequences, the sequence captured by camera with 52mm-equivalent lens is taken as theground truth HR sequence, while the sequence captured bycamera with 26mm-equivalent lens is adopted to generatethe corresponding LR sequence, leading to a dataset for 2VSR. It is worth mentioning that 2 scaling is currently theprimary demand in practical VSR.Using iPhone 11 Pro Max cameras and the DoubleTakeapp, we captured more than 700 sequence pairs. Each pairconsists of two approximately synchronized sequences offrame rate 30fps and resolution 1080P. To ensure the diversity of the dataset, the captured sequences cover a varietyof scenes, including outdoor and indoor scenes, daytimeand nighttime scenes, still scenes and scenes with moving objects, etc. In general, scenes with rich textures arepreferred as they are more effective to train a useful VSRmodel. The sequences in the dataset cover a variety of motions, including camera motions and object motions. Afterdata collection, we manually selected and excluded about200 sequences of inferior quality, e.g., severely blurred,noisy, over-exposed or under-exposed videos, etc. Considering the imperfect synchronization between the LR-HRsequences, we excluded sequence pairs with serious misalignment problem. After careful selection, 500 sequencepairs remain in the dataset. Fig. 3 shows some examplescenes and the motion statistics of the dataset. More example scenes and content analysis can be found in the supplementary material.Finally, the LR frames and HR frames in each sequencepair need to be aligned so that one can perform supervisedVSR model training more easily. We adopt the image registration algorithm proposed in [4] to align the LR-HR videosframe by frame. Considering that there can be some smallregistration drifts between adjacent frames, we extended theregistration algorithm in [4] by using five adjacent framesas inputs to compute the registration matrix of the centeredframe. Once aligned, we crop the aligned LR and HR sequences at the center region of size 1024 512 to eliminatethe alignment artifacts around the boundary. Fig. 2 illustrates the dataset construction process. It is worth noting4783

Figure 3. Example video scenes and motion statistics of the constructed RealVSR dataset.in Fig. 5. Any existing VSR networks can be adopted in ourframework with the proposed training losses. We convertthe estimated and ground-truth HR videos into the YCbCrspace to disentangle the luminance and color, and apply different loss functions on different components. On the Ychannel, we design a Laplacian pyramid based loss to helpthe network better reconstruct the details under minor luminance difference. On color channels Cb and Cr, we adopt agradient weighted content loss to focus on color edges. Tofurther enhance the visual quality of the reconstructed HRvideo, we propose a multi-scale edge-based GAN loss toguide the texture generation. The details of the losses willbe introduced in the next section.that the LR and HR sequences are of the same size afterregistration. To further standardize the dataset, we cut allsequences to have the same length of 50 frames. The final dataset consists of 500 LR-HR sequence pairs, each ofwhich has 50 frames in length and 1024 512 pixels in size.(b) LR crop4. VSR Model Learning4.1. Motivation and overall learning frameworkFollowing most of the existing works [19, 31, 26,27], we formulate VSR as a multi-frame super-resolutionproblem. Given 2N 1 consecutive LR video framesLL{It N, ., ItL ., It N}, we aim to predict the HR versionof the center frame, denoted by ItH .There are a few sources of image degradation in videoacquisition process, such as the anisotropic blurring, thesignal-dependent noise, the non-linear mapping in imagesignal processing (ISP) pipeline and the video compressionalgorithm, etc. Compared with the existing synthetic VSRdatasets [31, 23] which assume the simple bicubic downsampling degradation, our RealVSR dataset is collected inthe real-world environment and it naturally considers thecomplex degradation factors in real scenarios. However, italso poses greater challenges to effectively train VSR models. Specifically, the LR-HR videos taken from the twocameras undergo different lens, sensors and ISP pipelines,and thus exhibit different distortions. The registration algorithm [4] we adopt could alleviate the problems; however,there still exist minor misalignment and luminance/colordifferences between the LR-HR sequences. Fig. 4 showsan example, where we can see the slight global luminanceand color difference between the LR and HR frames dueto the variations in illumination, exposure time and cameraISP between the two cameras.Our goal is to recover the image details (edge, texture,etc.) in the LR frames but not the global luminance andcolors. Therefore, we propose a set of decomposition basedlosses to learn an effective VSR model from the constructedRealVSR dataset. The overall learning framework is shown(a) LR-HR pair(c) HR cropFigure 4. Slight luminance and color difference exist in some LRHR sequence pairs.4.2. Decomposition based lossesLaplacian pyramid based loss on luminance channel.The Y channel contains most of the texture informationof a video frame and it is crucial to reconstruct image details in VSR. The commonly used losses in VSR research[19, 31, 26, 27], such as L1 loss, L2 loss and Charbonnierloss [17], are sensitive to global luminance differences, andhence the VSR models trained using such losses may bedistracted from learning image structures and details. Totackle this problem, we decompose the Y channel into aLaplacian pyramid [2]. The low-frequency component captures the global luminance and general structure of the original image, and the high-frequency components contain themulti-scale details of the original image. By applying different losses on the low-frequency and high-frequency components, we are able to achieve better detail reconstructionwhile allowing certain difference in global luminance.Denote the predicted HR luminance channel and theground-truth HR luminance channel by Ŷ and Y respectively. As shown in Fig. 5, we decompose them into athree-layer Laplacian pyramid, denoted by {Ŝ0 , Ŝ1 , Ŝ2 } and{S0 , S1 , S2 }, respectively, where Ŝ0 and S0 refer to the4784

Figure 5. Framework of our decomposition based learning scheme of VSR models.low-frequency components and others the high-frequencyones. The global luminance difference between Ŷ and Ylies mostly in the low-frequency components, and we adoptthe SSIM loss [29] to encourage structural information reconstruction. Compared to the L1 loss and L2 loss, SSIMfocuses more on the image structures and is insensitive tothe luminance changes, which fits our goal well. The structure loss is given by\mathcal {L} {s} \mathcal {L} {\mathrm {SSIM}}(\hat {S} {0}, S {0}) 1-\mathrm {SSIM}(\hat {S} {0}, S {0}).(1)Since the high-frequency components are basically freeof global luminance difference, we adopt Charbonnier lossto encourage accurate reconstruction of fine details. Thedetail loss is then\mathcal {L} {d} \sqrt {{\ {\hat {S} {2} - S {2}}\ } 2 \epsilon 2} \sqrt {{\ {\hat {S} {1} - S {1}}\ } 2 \epsilon 2},(2)where ϵ 10 3 is a small constant.Gradient weighted loss on chrominance channels. Compared with the luminance channel, the chrominance channels CbCr are much smoother. We thus focus on reconstructing the prominent color edges on the chrominancechannels. Inspired by [30], we adopt a gradient weightedloss here. Referring to Fig. 5, denote by Ĉ and C the predicted and ground truth HR chrominance channels, respectively. The gradient weighted color loss is given byMulti-scale edge-based GAN loss. The generative adversarial networks (GANs) [10] have been used in some SISRmethods [18, 28] to improve the perceptual quality of estimated HR images. However, these methods usually apply GAN loss directly on the full-color image, which maynot be effective enough to generate textures. We proposea multi-scale edge-based GAN loss by adopting the designof PatchGAN [12] and the relativistic average discriminator[13]. The GAN loss is applied to the high frequency components S1 and S2 of the Laplacian pyramid to enable betterfine-grained discrimination for the VSR task. The adversarial loss for the generator is(4)and the loss for the discriminator is(5)where Di is the relativistic average discriminator for thei-th high-frequency components of the Laplacian pyramid.Final loss. With the reconstruction losses Ls , Ld , Lc andadversarial loss Ladv , we propose two versions of final lossfor VSR network training. The first version, denoted byLv1 , focuses on the reconstruction of fine details, whichcombines Ls , Ld and Lc as follows:\mathcal {L} {\mathrm {v1}} \mathcal {L} {s} \mathcal {L} {d} \mathcal {L} {c},\mathcal {L} {c} \sqrt {{\ {\Delta {gw} * \hat {C} - \Delta {gw} * C}\ } 2 \epsilon 2},(3)where gw (1 w x )(1 w y ), x and y are theabsolute difference maps between the gradient of Ĉ andC in the horizontal and vertical directions, respectively.w 4 is a weighting factor, and ϵ 10 3 is a smallconstant.(6)The second version, denoted by Lv2 , aims to further enhance the visual quality by generating some details, and isdefined as\mathcal {L} {\mathrm {v2}} \mathcal {L} {\mathrm {v1}} \lambda \mathcal {L} {\mathrm {adv}},(7)where Ladv is LG for the generator and LD for the discriminator, and λ is a parameter to control to what degree thesynthetic details will be involved.4785

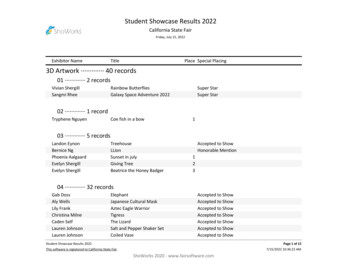

5. Experiments5.1. Experiment settingsDatasets. Apart from the constructed RealVSR, we alsoadopt the widely used synthetic Vimeo-90k [31] dataset inthe experiments. Vimeo-90k consists of more than 90,0007-frame sequences of resolution 256 448. Among them,64,612 sequences are selected as the training set. The LRsequences in Vimeo-90k are synthesized via bicubic (BI)downsampling. Our RealVSR dataset consists of 500 realworld LR-HR sequence pairs with 1024 512 resolution.Each sequence contains 50 frames. We randomly select 50sequence pairs as the testing set and leave the remaining 450sequence pairs as the training set.VSR networks. We conduct experiments by taking 5 representative and recently developed VSR models into our VSRmodel learning framework (referring to Fig. 5): RCAN[34], FSTRN [19], TOF [31], TDAN [26] and EDVR [27].RCAN is a representative deep network for SISR. We modify it for VSR by concatenating the input frames along thechannel dimension. FSTRN is a lightweight VSR modelwithout explicit alignment. It exploits spatial-temporal information with separable 3D convolution. TOF is a typicalVSR model which performs image domain alignment usingoptical flow. We replace its reconstruction branch with aresidual backbone with 10 residual blocks. TDAN is a pioneer VSR model with deformable convolution [7]. EDVR isa powerful and popular VSR model which perform featurespace alignment using deformable convolution. For EDVR,we adopt its moderate version and remove the TSA module, which mainly consists of a PCD alignment module anda reconstruction backbone with 10 residual blocks. For allmethods, we remove their upsampling operations to fit ourRealVSR dataset.Implementation details. We randomly crop patches of size192 192 from the video frames during training. The minibatch size is set to 32. Data augmentation is performedby random horizontal flipping and random 90 rotation.Moreover, we adopt the CutBlur [32] technique to alleviate the risk of overfitting in real-world VSR training. Forthe weighting factors in Lv2 , we empirically set λ 1e 4 .We choose Adam [16] as the optimizer with default parameters. For model training with Lv1 , we set the initial learningrate to 1e 4 . For model training with Lv2 , we initialize themodel weights with those trained with Lv1 and set the initial learning rate to 5e 5 . In both cases, we gradually decaythe learning rate with the cosine learning rate decay strategy. All the models are trained for 150, 000 iterations. Weconduct all experiments with the PyTorch [24] framework.5.2. Synthetic dataset vs. RealVSR datasetTo demonstrate the advantages of our dataset in realworld VSR, we compare the performance of VSR mod-els trained on the synthetic Vimeo-90k dataset and our RealVSR dataset. With the 5 VSR networks (RCAN, FSTRN,TOF, TDAN, EDVR), 10 VSR models are trained in totalon the two datasets. To balance the speed and performance,3 adjacent LR frames are used to estimate the center HRframe. For fair comparison, we train all the 10 models withthe baseline Charbonnier (CB) loss in YCbCr space.We evaluate the 10 trained models on the RealVSR testing set. Table 1 lists the quantitative results in both fullreference and no-reference metrics. Considering the influence of slight color difference between LR and HR sequences in RealVSR, we compute the PSNR/SSIM indiceson the Y channel to more accurately reflect the performance of texture reconstruction. As shown in Table 1,compared with the baseline bicubic interpolator (LR), VSRmodels trained on the synthetic dataset only achieve smallimprovement in terms of SSIM, while perform even worsein terms of PSNR. This validates that VSR models trainedon synthetic dataset cannot generalize well to the real-worldvideos with more complex degradations. In contrast, allVSR models trained on our RealVSR dataset achieve muchbetter performance in terms of PSNR/SSIM. We also compare the results with two popular no-reference image qualitymetrics, NIQE [22] and BRISQUE [21]. Models trained onRealVSR dataset also demonstrate better performance.Fig. 6 shows the super-resolved frames for qualitativecomparison. One can see that models trained on syntheticdataset tend to generate blurry edges and some artifacts,while models trained on RealVSR produce sharper edgesand exhibit much less artifacts. This further demonstratesthe importance of using data with real-world degradationsfor training a robust VSR model. More visual examples canbe found in the supplementary file.We further compare the temporal consistency of VSR results trained with different datasets. Models trained on theRealVSR dataset achieves better temporal consistency measured by vector norm differences of warped frames (T-diff).5.3. Study on lossesIn this section, we conduct experiments to demonstratethe effectiveness of the proposed losses Lv1 and Lv2 . Weuse two representative VSR networks, TOF and EDVR, inthis study. We train the models with 5 different losses.Three of them are fidelity-oriented losses. The first one isthe baseline CB loss on YCbCr channels (LYCbCr). TheCBsecond one combines the proposed Ls and Ld on Y channelwith the CB loss on CbCr channels (Ls Ld LCbCrCB ). Thethird one is our Lv1 . The other two are perceptual-orientedlosses. One is to combine Lv1 with the baseline RaGANdiscriminator [28] on Y channel, denoted by Lv1 RaGAN,and another one is our Lv2 .The TOF and EDVR models trained with the five different losses are evaluated on the RealVSR testing set, and4786

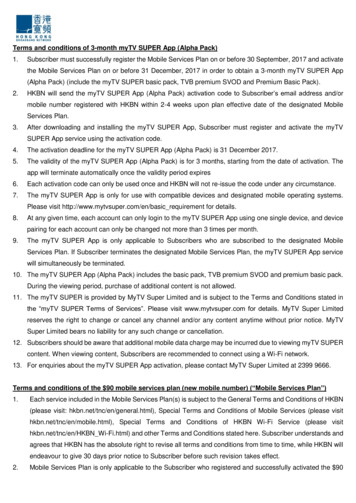

Table 1. Quantitative results of different VSR models evaluated on our RealVSR testing set.MetricBicubic (LR)PSNR SSIM NIQE BRISQUE T-diff 24.670.77985.062743.10713.9145RCAN [34]Vimeo-90k .09364.25613.6938FSTRN [19]Vimeo-90k .25934.37963.8844TOF [31]Vimeo-90k .48834.61013.7706TDAN [26]Vimeo-90k .23314.41743.8695EDVR [27]Vimeo-90k .96994.42293.6860LRRCAN (Vimeo-90k)FSTRN (Vimeo-90k)TOF (Vimeo-90k)TDAN (Vimeo-90k)EDVR (Vimeo-90k)HRRCAN (RealVSR)FSTRN (RealVSR)TOF (RealVSR)TDAN (RealVSR)EDVR (RealVSR)LRRCAN (Vimeo-90k)FSTRN (Vimeo-90k)TOF (Vimeo-90k)TDAN (Vimeo-90k)EDVR (Vimeo-90k)RCAN (RealVSR)FSTRN (RealVSR)TOF (RealVSR)TDAN (RealVSR)EDVR (RealVSR)HR frame from028 sequenceHR frame from374 sequenceHRFigure 6. 2 VSR results on our RealVSR testing set by different models.the quantitative results are listed in Table 2. We evaluatethe fidelity-oriented models by PSNR and SSIM and theperceptual-oriented models by LPIPS [34] and DISTS [8].As shown in Table 2, models trained with Ls Ld LCbCrCBand Lv1 achieve better PSNR/SSIM results than thosetrained with baseline LYCbCr. As we mentioned in SecCBtion 4.2, PSNR/SSIM may not be able to faithfully reflectthe improvement of a VSR model considering the possible misalignment and luminance difference between the LRand HR sequences, while our losses in Lv1 aim to improve the frame details under these conditions but not onlyPSNR/SSIM. Therefore, we further visualize the VSR results obtained by the EDVR models in Fig. 7. One cansee that, compared to the baseline, the proposed decomposition based losses (Ls Ld LCbCrand Lv1 ) help networksCBreconstruct sharper edges and more fine-scale details, showing better visual quality.Regarding the perceptual-oriented models, referring toTable 2, our proposed Lv2 results in better LPIPS/DISTSscores than Lv1 RaGAN, demonstrating the role of multiscale edge based discriminator. Regarding the qualitativecomparison, as shown in Fig. 7, the proposed Lv2 enablenetworks to generate sharper details than Lv1 RaGAN. Italso improves the visual quality of the results obtained byVSR models trained with Lv1 . More visual examples canbe found in the supplementary file.4787

HR frame from170 sequenceLRLYCbCrCBLs Ld LCbCrCBLv1Lv1 RaGANLv2Figure 7. 2 VSR results on videos from the RealVSR testing set by the EDVR [27] model trained with different losses.Sequence captured by OPPO Reno 2LRVimeo-90kRealVSR Lv1RealVSR Lv2Sequence captured by Huawei Mate 30 ProLRVimeo-90kRealVSR Lv1RealVSR Lv2Figure 8. 2 VSR results on real-world videos outside RealVSR dataset by the EDVR [27] models trained on synthetic Vimeo-90k [31]and our RealVSR.Table 2. Ablation studies on losses. PSNR/SSIM are evaluted onY channel. LPIPS/DISTS are evaluated on RGB channels.ModelTOF [31]EDVR [27]ModelTOF [31]EDVR [27]Fidelity-oriented ComparisonLYCbCrLs Ld LCbCrCBCBPSNR SSIM PSNR SSIM tion-oriented ComparisonLv1L

world VSR dataset to narrow this synthetic-to-real gap. Real-world image super-resolution datasets. Though there is no real-world VSR dataset publically available yet, several real-world SISR datasets have been built and re-leased. Chen et al. [6] collected 100 LR-HR image pairs of printed postcards in a carefully controlled indoor environ-ment.