
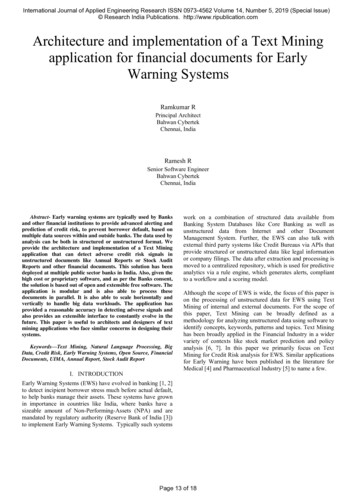

International Journal of Applied Engineering Research ISSN 0973-4562 Volume 14, Number 5, 2019 (Special Issue) Research India Publications. http://www.ripublication.com

Architecture and implementation of a Text Mining application for financial documents for Early Warning Systems

Ramkumar R
Principal Architect
Bahwan Cybertek
Chennai, India

Ramesh R
Senior Software Engineer
Bahwan Cybertek
Chennai, India

Abstract- Early warning systems are typically used by Banks and other financial institutions to provide advance alerting and prediction of credit risk, in order to prevent borrower default, based on multiple data sources within and outside the Banks. The data used for analysis can be in either structured or unstructured format. We present the architecture and implementation of a Text Mining application that can detect adverse credit risk signals in unstructured documents like Annual Reports, Stock Audit Reports and other financial documents. This solution has been deployed at multiple public sector banks in India. Given the high cost of proprietary software, and with the Banks' consent, the solution is built on open and extensible free software. The application is modular and can process these documents in parallel. It can also scale horizontally and vertically to handle big data workloads. The application has provided reasonable accuracy in detecting adverse signals and offers an extensible interface so that it can continue to evolve. This paper is useful to architects and designers of text mining applications who face similar concerns in designing their systems.

Keywords- Text Mining, Natural Language Processing, Big Data, Credit Risk, Early Warning Systems, Open Source, Financial Documents, UIMA, Annual Report, Stock Audit Report

I. INTRODUCTION

Early Warning Systems (EWS) have evolved in banking [1, 2] to detect incipient borrower stress well before actual default, helping banks manage their assets. These systems have grown in importance in countries like India, where banks have a sizeable amount of Non-Performing Assets (NPA) and are mandated by the regulatory authority (Reserve Bank of India [3]) to implement Early Warning Systems. Typically, such systems work on a combination of structured data available from banking system databases like Core Banking, and unstructured data from the Internet and other Document Management Systems. Further, the EWS can also communicate with external third-party systems like Credit Bureaus via APIs that provide structured or unstructured data such as legal information or company filings. After extraction and processing, the data is moved to a centralized repository that is used for predictive analytics via a rule engine, which generates alerts in accordance with a workflow and a scoring model.

Although the scope of EWS is wide, the focus of this paper is the processing of unstructured data for EWS using Text Mining of internal and external documents. For the scope of this paper, Text Mining can be broadly defined as a methodology for analyzing unstructured data using software to identify concepts, keywords, patterns and topics. Text Mining has been applied in the financial industry in a wide variety of contexts, such as stock market prediction and policy analysis [6, 7]. In this paper we primarily focus on Text Mining for credit risk analysis in EWS. Similar Early Warning applications have been published in the literature for the Medical [4] and Pharmaceutical [5] industries, to name a few.

Fig 1: Early Warning System and Context of Text Mining

II. SYSTEM ARCHITECTURE

The Text Mining System is developed using a Layered Architecture consisting of a presentation layer, a middleware layer and a services layer, interfacing with a relational database. Once the unstructured data is processed and stored in the relational database, the integration system distributes the data to the data warehouse for further processing. The layers and functionalities required for effective operation of this application are described below.

Fig 2: Layered Architecture of Text Mining Application

A. Presentation Layer

The presentation layer was developed using PHP and HTML5/CSS. It was kept very simple, as the core users of this application were knowledgeable technical staff rather than business users. The presentation layer allows user management, configuration of document types, upload of documents, and configuration of keywords specific to a document type along with an exclusion list. The keywords also support regular expressions like the usage of and “” to detect multiple patterns. Users can also upload a batch of documents at once and start processing them. The results are then available for users to view and download if required. One of the key challenges we faced was the inability to auto-detect the class of the document being uploaded (e.g. Stock Audit Report, Annual Report etc.). As a result, the user had to specify the type of each uploaded document. AI/ML-based techniques could be used here to auto-detect the document class after suitable training.

Fig 3: Document Type Configuration from Presentation Layer

Fig 4: Keyword Configuration from Presentation Layer
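The per-document-type keyword configuration described for the presentation layer (regular-expression keywords plus an exclusion list) can be modelled roughly as follows. This is a minimal sketch under our own assumptions: the class and method names (`KeywordConfig`, `findMatches`) and the document-type key are hypothetical, and we assume an exclusion entry suppresses any keyword hit that falls entirely inside an excluded phrase. The paper does not specify the exact exclusion semantics.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical model of the keywords and exclusion list configured for one document type.
public class KeywordConfig {
    private final List<Pattern> keywords = new ArrayList<>();
    private final List<Pattern> exclusions = new ArrayList<>();

    public KeywordConfig(List<String> keywordRegexes, List<String> exclusionRegexes) {
        for (String k : keywordRegexes) {
            keywords.add(Pattern.compile(k, Pattern.CASE_INSENSITIVE));
        }
        for (String e : exclusionRegexes) {
            exclusions.add(Pattern.compile(e, Pattern.CASE_INSENSITIVE));
        }
    }

    // Returns every keyword hit that does not lie entirely inside an excluded phrase (assumed semantics).
    public List<String> findMatches(String text) {
        List<int[]> excluded = new ArrayList<>();
        for (Pattern e : exclusions) {
            Matcher m = e.matcher(text);
            while (m.find()) {
                excluded.add(new int[] {m.start(), m.end()});
            }
        }
        List<String> hits = new ArrayList<>();
        for (Pattern k : keywords) {
            Matcher m = k.matcher(text);
            while (m.find()) {
                if (!insideAny(m.start(), m.end(), excluded)) {
                    hits.add(m.group());
                }
            }
        }
        return hits;
    }

    private static boolean insideAny(int start, int end, List<int[]> spans) {
        for (int[] span : spans) {
            if (start >= span[0] && end <= span[1]) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Hypothetical configuration keyed by document type.
        Map<String, KeywordConfig> byDocumentType = Map.of(
                "STOCK_AUDIT_REPORT",
                new KeywordConfig(
                        List.of("undervalued", "missing", "discrepanc(y|ies)"),
                        List.of("no discrepanc(y|ies)")));
        String answer = "Stock was undervalued; no discrepancy in the records.";
        System.out.println(byDocumentType.get("STOCK_AUDIT_REPORT").findMatches(answer));
    }
}
```

Compiling the patterns once per document type keeps matching cheap when many documents of the same type are processed; a single regex such as `discrepanc(y|ies)` covers several surface forms of one keyword.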

Fig 5: Viewing of Results from Presentation Layer

B. Middleware Layer

The middleware layer was composed of standard RESTful services that expose the backend services as a set of APIs. Communication happens over HTTPS for security.

C. Services Layer

The services layer is the primary focus of discussion in this paper. It was built using Java and the Spring Boot Framework. The services provided by this layer include:
a) Scanned Document Conversion
b) Document Format Conversion
c) Parsing, Extraction & Keyword Matching
d) Synonym and Negation Matching
e) Database Interfacing
f) Parallel Processing
g) Logging and Monitoring

D. Database Layer

The Services Layer interacts with the Database Layer using an Object Relational Mapping framework. The application was primarily deployed on MySQL and MS SQL Server databases but is compatible with Oracle and Postgres.

III. SERVICE LAYER IN DEPTH

A. Scanned Document Conversion

One of the critical problems for Banks is that documents are often available only in scanned formats. The free and open source Tesseract library [8] was used to convert scanned PDFs to text-based PDFs. Tesseract is reliable for documents in which printed text was scanned, but performs poorly on handwritten documents. Further, the library is memory and CPU intensive and takes a considerable amount of time (up to 5 minutes on a dual core machine with 16GB RAM) to process a single document.

B. Document Format Conversion

The Text Mining application is able to process PDF as well as DOCX documents. It does not support the proprietary DOC format. For DOC conversion, the WORDCONV.EXE program that ships with Microsoft Office was invoked programmatically from a Java process.
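Invoking an external converter from Java is typically done with `ProcessBuilder`. The sketch below is an assumption-laden illustration, not the deployed code: the `-oice -nme <input> <output>` switches are the commonly cited WORDCONV.EXE usage for DOC-to-DOCX conversion and should be verified against the installed Office version, the install path is hypothetical, and treating exit code 0 plus an existing output file as success is our own assumption.

```java
import java.io.File;
import java.io.IOException;
import java.util.List;
import java.util.concurrent.TimeUnit;

public class DocToDocxConverter {
    private final String wordconvPath;  // location of WORDCONV.EXE (installation-specific)
    private final long timeoutSeconds;  // upper bound on a single conversion

    public DocToDocxConverter(String wordconvPath, long timeoutSeconds) {
        this.wordconvPath = wordconvPath;
        this.timeoutSeconds = timeoutSeconds;
    }

    // Builds the command line; "-oice -nme" is the commonly cited usage (assumption, verify locally).
    public List<String> buildCommand(File input, File output) {
        return List.of(wordconvPath, "-oice", "-nme",
                input.getAbsolutePath(), output.getAbsolutePath());
    }

    // Runs the converter; fails on timeout, non-zero exit code (assumed to mean failure) or missing output.
    public void convert(File input, File output) throws IOException, InterruptedException {
        ProcessBuilder pb = new ProcessBuilder(buildCommand(input, output));
        pb.redirectErrorStream(true);                       // merge stderr into stdout
        pb.redirectOutput(ProcessBuilder.Redirect.DISCARD); // discard output so the child never blocks on a full pipe
        Process process = pb.start();
        if (!process.waitFor(timeoutSeconds, TimeUnit.SECONDS)) {
            process.destroyForcibly();
            throw new IOException("WORDCONV.EXE timed out on " + input);
        }
        int exit = process.exitValue();
        if (exit != 0 || !output.isFile()) {
            throw new IOException("WORDCONV.EXE failed on " + input + " (exit code " + exit + ")");
        }
    }

    public static void main(String[] args) {
        // Hypothetical install path; it differs between Office versions and editions.
        DocToDocxConverter converter = new DocToDocxConverter(
                "C:\\Program Files\\Microsoft Office\\root\\Office16\\WORDCONV.EXE", 120);
        File in = new File("stock_audit.doc");
        File out = new File("stock_audit.docx");
        System.out.println(String.join(" ", converter.buildCommand(in, out)));
        // converter.convert(in, out); // only works on Windows with Microsoft Office installed
    }
}
```

The timeout guards the document's worker thread against a converter that hangs on a malformed file, which matters when a fixed-size thread pool is shared across uploads.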
Using other open source or commercial solutions for DOC conversion was ineffective, as critical formatting information was often lost. Since most Banks had Microsoft Office licenses, this was not a critical issue.

Further, in some cases table detection had to be performed within PDF and DOCX documents. Numerous open source options for PDF were explored, and Tabula [15] provided reasonable accuracy to partially automate table discovery under human supervision. However, full automation with good reliability and zero human intervention was possible only with DOCX documents, since their underlying structure is XML with tables as nodes, which can be processed with the open source Apache POI library [9]. Hence, wherever tables were involved, PDF documents were converted to DOCX using the commercial version of Adobe PDF. As the volume of such documents was low, this conversion was a manual process executed by the site engineer whenever tables were involved.

C. Parsing, Extraction and Keyword Matching

One of the key architectures for Text Mining is UIMA (Unstructured Information Management Architecture), an open standard for building and managing Natural Language Processing software using a pipeline and annotation framework [10]. In addition, UIMA provides a high-level scripting language called RUTA [16], which can be used to process documents without using the low-level API. Since UIMA [11] is only a specification, the open source Apache UIMA implementation was used to write the keyword matching solution. This allows access to industry-standard libraries like Stanford CoreNLP through a simpler API.
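The pipeline-and-annotation idea behind UIMA can be illustrated without the framework itself. The following plain-Java sketch shows the concept only and does not use the Apache UIMA API (in Apache UIMA, each stage would be an analysis engine adding annotations to a shared CAS). The class names, the naive '.'-based sentence splitter, and the rule that keywords are searched only within sentence annotations are all assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

public class AnnotationPipeline {
    // A typed span over the document text, analogous in spirit to a UIMA annotation.
    static final class Annotation {
        final String type;
        final int begin;
        final int end;

        Annotation(String type, int begin, int end) {
            this.type = type;
            this.begin = begin;
            this.end = end;
        }

        String coveredText(String doc) {
            return doc.substring(begin, end);
        }
    }

    // One pipeline stage: reads the text and earlier annotations, then adds its own.
    interface Annotator {
        void process(String doc, List<Annotation> annotations);
    }

    // Marks sentences using '.' as a naive boundary (illustrative assumption).
    static final class SentenceAnnotator implements Annotator {
        @Override
        public void process(String doc, List<Annotation> annotations) {
            int start = 0;
            for (int i = 0; i < doc.length(); i++) {
                if (doc.charAt(i) == '.') {
                    annotations.add(new Annotation("Sentence", start, i + 1));
                    start = i + 1;
                }
            }
            if (start < doc.length()) {
                annotations.add(new Annotation("Sentence", start, doc.length()));
            }
        }
    }

    // Marks case-insensitive keyword occurrences, searching only inside earlier Sentence annotations.
    static final class KeywordAnnotator implements Annotator {
        private final List<String> keywords;

        KeywordAnnotator(List<String> keywords) {
            this.keywords = keywords;
        }

        @Override
        public void process(String doc, List<Annotation> annotations) {
            String lower = doc.toLowerCase(Locale.ROOT);
            List<Annotation> found = new ArrayList<>(); // collected separately to avoid modifying the list mid-iteration
            for (Annotation a : annotations) {
                if (!a.type.equals("Sentence")) {
                    continue;
                }
                for (String k : keywords) {
                    String kw = k.toLowerCase(Locale.ROOT);
                    int idx = lower.indexOf(kw, a.begin);
                    while (idx >= 0 && idx + kw.length() <= a.end) {
                        found.add(new Annotation("Keyword", idx, idx + kw.length()));
                        idx = lower.indexOf(kw, idx + 1);
                    }
                }
            }
            annotations.addAll(found);
        }
    }

    // Runs the stages in order over one shared annotation list.
    static List<Annotation> run(String doc, List<Annotator> stages) {
        List<Annotation> annotations = new ArrayList<>();
        for (Annotator stage : stages) {
            stage.process(doc, annotations);
        }
        return annotations;
    }

    public static void main(String[] args) {
        String doc = "Stock was undervalued. No other issue was found.";
        List<Annotation> result = run(doc, List.of(
                new SentenceAnnotator(),
                new KeywordAnnotator(List.of("undervalued"))));
        for (Annotation a : result) {
            if (a.type.equals("Keyword")) {
                System.out.println(a.type + ": " + a.coveredText(doc));
            }
        }
    }
}
```

The design point is that later stages consume what earlier stages produced, so a section or question-answer segmenter can be inserted before keyword matching without changing the matcher.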

Fig 5: Sample RUTA script for Topic Annotation of Stock Audit Report generated dynamically

The parsing and keyword matching are illustrated with two examples. The first example is an internal document called the Stock Audit Report, which is prepared by the Bank's auditors after physically verifying the quality of stocks in a borrower's inventory. Each Bank has its own template for the Stock Audit Report. In one of the Banks, the Stock Audit Report was a set of questions and answers laid out one below the other; however, the question-answer order was not fixed. Keywords like “undervalued”, “missing” or “discrepancy” were configured under each question topic from the frontend. The first task was to identify the list of questions and their matching answers. Since the questions could appear in any order, we wrote an algorithm in RUTA that treats the text between one question and the next as the answer to the former. All the text under the last question is treated as the answer to that question. Once the question-answer correspondence had been established, another RUTA script was generated dynamically to match the keywords, patterns, synonyms and antonyms. Using this approach we achieved nearly 100% accuracy.

Table 1: List of Keywords and indicators captured in financial documents by the application

Red Flag Indicator (as per [3]) | Description | Document
RFA 31 | …policy | Annual Report
RFA 37 | Material discrepancies in the annual report | Annual Report
RFA 16 | Critical issues highlighted in the stock audit report | Stock Audit Report
RFA 39 | Poor disclosure … the statutory auditors | Annual Report

Keywords: “Nature of change in accounting policy”, “Effect of change in accounting policy”, “Does not … true and fair”, “Eroded networth”, “Adverse opinion”, “Basis for Qualified opinion”, “Obsolete”, “stock”

The second example is the commonly available Annual Report of Indian companies, obtained from the borrowers. It is in PDF format. The approach used for the Stock Audit Report could not be generalized to the Annual Report, as the Annual Report is not in a question-answer format. After a search through the research and implementation literature, the approach used for UK financial reports [12] was identified as promising and was adapted to the Indian Annual Report context. For early warning signals in financial reports, keywords like “adverse opinion” need to be matched only in sections like the “Independent Auditor Report”. To detect this section, a variety of heuristics were used to identify the “Table of Contents” section of the Annual Report. A fuzzy match was then conducted for the “Independent Auditor Report” entry to extract the relevant starting and ending pages, which could appear in numerous formats like “100”, “100-200” etc. These patterns and numerous others were mined from around 100 sample Annual Reports. Once the starting and ending pages of the section were identified, keyword matching proceeded much as for the Stock Audit Report. In many cases there was no explicit Table of Contents header, so general pattern matching was done to detect such a format in the first 10 pages (a configurable property). Using this approach we were able to process around 80% of Annual Reports.

Fig 6: Diversity of the Table of Contents of Indian Annual Reports that were processed successfully by the application

… accuracy, tested over a database of 500 reports, and Stock Audit Reports had a 100 percent accuracy rate.

D. Synonym and Negation Matching

The application automatically matches synonyms of the keywords and excludes their negations. For this purpose, wrappers over the WordNet library [14] were used.

Fig 7: Sample reports generated by the application for Jindal Steel and Adani companies.

E. Parallel Processing

The multithreading capabilities of Java were used to create a configurable thread pool. Once a document is uploaded, it is allocated a thread that it uses for the entire process of conversion, parsing and keyword matching. When all threads in the pool are busy, further documents are queued until a thread finishes its task. The size of the thread pool depends primarily on the memory of the system and the maximum document size. Based on these, the maximum number of threads that can process documents without the application running out of memory was estimated for the worst-case scenario.

Fig 8: Integration into the Early Warning System Report Menu.

F. Logging and Monitoring

All the services were instrumented to capture the start time and end time of their tasks, which are updated in the database. The database was monitored to identify
