PyCUDA: Even Simpler GPU Programming with Python
Andreas Klöckner
Courant Institute of Mathematical Sciences, New York University
Nvidia GTC · September 22, 2010

Thanks
- Jan Hesthaven (Brown)
- Tim Warburton (Rice)
- Leslie Greengard (NYU)
- PyCUDA contributors
- PyOpenCL contributors
- Nvidia Corporation

Outline
1 Scripting GPUs with PyCUDA
2 PyOpenCL
3 The News
4 Run-Time Code Generation
5 Showcase

Part 1: Scripting GPUs with PyCUDA
(PyCUDA: An Overview; Do More, Faster with PyCUDA)

Whetting your appetite

    import pycuda.driver as cuda
    import pycuda.autoinit
    import numpy

    a = numpy.random.randn(4,4).astype(numpy.float32)
    a_gpu = cuda.mem_alloc(a.nbytes)
    cuda.memcpy_htod(a_gpu, a)

[This is examples/demo.py in the PyCUDA distribution.]

Whetting your appetite

    from pycuda.compiler import SourceModule

    # compute kernel: CUDA C source, compiled at run time
    mod = SourceModule("""
    __global__ void twice(float *a)
    {
      int idx = threadIdx.x + threadIdx.y*4;
      a[idx] *= 2;
    }
    """)

    func = mod.get_function("twice")
    func(a_gpu, block=(4,4,1))

    a_doubled = numpy.empty_like(a)
    cuda.memcpy_dtoh(a_doubled, a_gpu)
    print(a_doubled)
    print(a)
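To see why `block=(4,4,1)` together with `idx = threadIdx.x + threadIdx.y*4` touches every element of the 4×4 array exactly once, here is a minimal CPU-side emulation in plain Python. It is only a sketch: the nested loops stand in for the 16 threads of the single block, the flat list stands in for the row-major `float32` data behind `a_gpu`, and the helper name `emulate_twice` is hypothetical.

```python
def emulate_twice(a_flat, block=(4, 4, 1)):
    """Emulate one launch of the `twice` kernel over a single thread block.

    a_flat is a row-major flattened 4x4 array and is modified in place.
    Returns the list of indices touched, in emulated launch order.
    """
    bx, by, _ = block             # z-dimension of the block is 1 and unused here
    touched = []
    for ty in range(by):          # threadIdx.y
        for tx in range(bx):      # threadIdx.x
            # Same expression as the CUDA source; the literal 4 must match
            # the block width bx, so a different block shape needs a new kernel.
            idx = tx + ty * 4
            a_flat[idx] *= 2
            touched.append(idx)
    return touched


a = [float(i) for i in range(16)]
touched = emulate_twice(a)
print(sorted(touched) == list(range(16)))  # every element hit exactly once
print(a[:4])
```

The hard-coded row width is one of the things run-time code generation, discussed later in the talk, can take care of.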

Why do Scripting for GPUs?
- GPUs are everything that scripting languages are not:
  - highly parallel
  - very architecture-sensitive
  - built for maximum FP/memory throughput
- → they complement each other
- CPU: largely restricted to control tasks (~1000/sec) → scripting is fast enough
- Python + CUDA = PyCUDA
- Python + OpenCL = PyOpenCL

Scripting: Python
One example of a scripting language: Python
- Mature
- Large and active community
- Emphasizes readability
- Written in widely-portable C
- A 'multi-paradigm' language
- Rich ecosystem of sci-comp related software

Scripting: Interpreted, not Compiled
Program creation workflow: Edit → Compile → Link → Run

PyCUDA: Workflow
Edit → Run → SourceModule("...") → Cache? → (if no: nvcc → .cubin) → Upload to GPU → Run on GPU
(PyCUDA handles the steps from SourceModule("...") onward.)

How are High-Performance Codes constructed?
- "Traditional" construction of high-performance codes: C/C++/Fortran, libraries
- "Alternative" construction of high-performance codes: scripting for 'brains', GPUs for 'inner loops'
Play to the strengths of each programming environment.

PyCUDA Philosophy
- Provide complete access
- Automatically manage resources
- Provide abstractions
- Check for and report errors automatically
- Full documentation
- Integrate tightly with numpy

What's this "numpy", anyway?
Numpy: package for large, multi-dimensional arrays.
- Vectors, matrices, ...
- A+B, sin(A), dot(A,B)
- la.solve(A, b), la.eig(A)
- cube[:, :, n-k:n+k], cube+5
All much faster than equivalent code written in pure Python.
"Python's MATLAB": basis for SciPy, plotting, ...

gpuarray: Simple Linear Algebra
pycuda.gpuarray:
- Meant to look and feel just like numpy.
- gpuarray.to_gpu(numpy_array)
- numpy_array = gpu_array.get()
- +, -, *, /, fill, sin, exp, rand, basic indexing, norm, inner product, ...
- Mixed types (int32 + float32 = float64)
- print gpuarray for debugging.
- Allows access to raw bits
- Use as kernel arguments, textures, etc.

Whetting your appetite, Part II

    import numpy
    import pycuda.autoinit
    import pycuda.gpuarray as gpuarray

    a_gpu = gpuarray.to_gpu(
        numpy.random.randn(4,4).astype(numpy.float32))
    a_doubled = (2*a_gpu).get()
    print(a_doubled)
    print(a_gpu)

gpuarray: Elementwise expressions
Avoiding extra store-fetch cycles for elementwise math:

    import numpy.linalg as la
    import pycuda.autoinit
    import pycuda.gpuarray as gpuarray
    from pycuda.curandom import rand as curand
    a_gpu = curand((50,))
    b_gpu = curand((50,))

    from pycuda.elementwise import ElementwiseKernel
    lin_comb = ElementwiseKernel(
        "float a, float *x, float b, float *y, float *z",
        "z[i] = a*x[i] + b*y[i]")

    c_gpu = gpuarray.empty_like(a_gpu)
    lin_comb(5, a_gpu, 6, b_gpu, c_gpu)

    assert la.norm((c_gpu - (5*a_gpu+6*b_gpu)).get()) < 1e-5
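The point of `ElementwiseKernel` is that `a*x[i] + b*y[i]` runs as one kernel, so each element of `x` and `y` is read once and `z` written once, instead of materializing `5*a_gpu` and `6*b_gpu` as temporaries. The sketch below shows the kind of CUDA source such a tool could generate from the two strings. It is a hypothetical generator: `make_elementwise_source`, the grid-stride loop, and the extra `n` argument are assumptions, not the code PyCUDA actually emits.

```python
def make_elementwise_source(name: str, arguments: str, operation: str) -> str:
    """Wrap a per-element C expression into a CUDA kernel (illustrative only)."""
    return f"""
__global__ void {name}({arguments}, unsigned n)
{{
  // grid-stride loop: each thread handles elements i, i+stride, i+2*stride, ...
  unsigned stride = blockDim.x * gridDim.x;
  for (unsigned i = blockIdx.x * blockDim.x + threadIdx.x; i < n; i += stride)
  {{
    {operation};
  }}
}}
"""

src = make_elementwise_source(
    "lin_comb",
    "float a, float *x, float b, float *y, float *z",
    "z[i] = a*x[i] + b*y[i]")
print(src)
```

Because the whole expression lives in one loop body, the fused kernel makes a single pass over memory, which is where the savings over chained GPUArray operators come from.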

gpuarray: Reduction made easy
Example: A scalar product calculation

    import numpy
    import pycuda.autoinit
    from pycuda.reduction import ReductionKernel
    dot = ReductionKernel(dtype_out=numpy.float32, neutral="0",
            reduce_expr="a+b", map_expr="x[i]*y[i]",
            arguments="const float *x, const float *y")

    from pycuda.curandom import rand as curand
    x = curand((1000*1000), dtype=numpy.float32)
    y = curand((1000*1000), dtype=numpy.float32)

    x_dot_y = dot(x, y).get()
    x_dot_y_cpu = numpy.dot(x.get(), y.get())
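`ReductionKernel` combines a per-element `map_expr` with an associative `reduce_expr` that starts from `neutral`. The plain-Python sketch below mimics those semantics, including a pairwise (tree-shaped) combine of the kind a parallel reduction uses. `emulate_reduction` is a hypothetical name, and the exact tree order is an assumption; differing summation order is one reason `x_dot_y` and `x_dot_y_cpu` may disagree in the last bits.

```python
from typing import Callable, List

def emulate_reduction(neutral: float,
                      reduce_expr: Callable[[float, float], float],
                      map_expr: Callable[[int], float],
                      n: int) -> float:
    # map stage: one value per index i, like map_expr "x[i]*y[i]"
    vals: List[float] = [map_expr(i) for i in range(n)]
    if not vals:
        return neutral
    # reduce stage: combine neighbours pairwise until one value remains
    while len(vals) > 1:
        if len(vals) % 2:              # pad odd lengths with the neutral element
            vals.append(neutral)
        vals = [reduce_expr(vals[k], vals[k + 1]) for k in range(0, len(vals), 2)]
    return vals[0]

x = [1.0, 2.0, 3.0]
y = [4.0, 5.0, 6.0]
dot = emulate_reduction(0.0, lambda a, b: a + b, lambda i: x[i] * y[i], len(x))
print(dot)  # 32.0
```

The neutral element matters: it is what padding and empty inputs reduce to, so `"0"` is right for a sum but a maximum would need something like negative infinity.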

PyCUDA: Vital Information
- Complete documentation
- MIT License (no warranty, free for all use)
- Requires: numpy, Python 2.4+ (Win/OS X/Linux)
- Support via mailing list

Part 2: PyOpenCL

OpenCL's perception problem
OpenCL does not presently get the credit it deserves.
- A single abstraction works well for GPUs and CPUs
- Vendor independence
- Compute dependency DAG
- A JIT C compiler baked into a library

Introducing... PyOpenCL
- PyOpenCL is "PyCUDA for OpenCL"
- Complete, mature API wrapper
- Has: arrays, elementwise operations, RNG, ...
- Near feature parity with PyCUDA
- Tested on all available implementations

Introducing... PyOpenCL
Same flavor, different recipe:

    import pyopencl as cl, numpy

    a = numpy.random.rand(50000).astype(numpy.float32)
    ctx = cl.create_some_context()
    queue = cl.CommandQueue(ctx)

    a_buf = cl.Buffer(ctx, cl.mem_flags.READ_WRITE, size=a.nbytes)
    cl.enqueue_copy(queue, a_buf, a)  # host -> device copy

    prg = cl.Program(ctx, """
        __kernel void twice(__global float *a)
        {
          int gid = get_global_id(0);
          a[gid] *= 2;
        }
        """).build()

    prg.twice(queue, a.shape, None, a_buf).wait()
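In `prg.twice(queue, a.shape, None, a_buf)`, `a.shape` is the global work size and `None` lets the OpenCL implementation choose the local (work-group) size. The sketch below emulates, in plain Python, how `get_global_id(0)` relates to work-group and local IDs in a 1D launch. It assumes an explicit local size that divides the global size (OpenCL 1.x requires this whenever a local size is given); `emulate_ndrange` is a hypothetical helper.

```python
def emulate_ndrange(global_size: int, local_size: int, kernel) -> None:
    """Run kernel(gid) once per work-item of a 1D NDRange, group by group."""
    if global_size % local_size:
        raise ValueError("global size must be a multiple of local size")
    for group_id in range(global_size // local_size):
        for local_id in range(local_size):
            gid = group_id * local_size + local_id   # what get_global_id(0) returns
            kernel(gid)

a = [float(i) for i in range(8)]

def twice(gid: int) -> None:
    a[gid] *= 2   # body of the OpenCL kernel

emulate_ndrange(len(a), 4, twice)
print(a)
```

On real hardware the work-items run concurrently and in no guaranteed order; the sequential loops only show which IDs exist.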

Part 3: The News
(Exciting Developments in GPU-Python)

Step 1: Download
Hot off the presses:
- PyCUDA 0.94.1
- PyOpenCL 0.92
All the goodies from this talk, plus:
- Supports all new features in CUDA 3.0, 3.1, 3.2rc, OpenCL 1.1
- Allows printf() (see example in Wiki)
New stuff shows up in git very quickly. Still needed: a better release schedule.

Step 2: Installation
- PyCUDA and PyOpenCL no longer depend on Boost C++
  - Eliminates a major install obstacle
  - Easier to depend on PyCUDA and PyOpenCL
  - easy_install pyopencl works on Macs out of the box
- Boost is still there, just not user-visible by default.

Step 3: Usage
- Complex numbers
  - ... in GPUArray
  - ... in user code (pycuda-complex.hpp)
- If/then/else for GPUArrays
- Support for custom device pointers
- Smarter device picking/context creation
- PyFFT: FFT for PyOpenCL and PyCUDA
- scikits.cuda: CUFFT, CUBLAS, CULA

Sparse Matrix-Vector on the GPU
- New feature in 0.94: sparse matrix-vector multiplication
- Uses the "packeted format" by Garland and Bell (also includes parts of their code)
- Integrates with scipy.sparse
- Conjugate-gradients solver included
  - Deferred convergence checking
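On a GPU, testing the residual norm against a tolerance means copying a scalar back to the host and synchronizing, so a solver can save time by testing only every few iterations. The sketch below is a plain-Python conjugate-gradient solver that does exactly that. The `check_every` parameter, the dense list-of-lists matrix, and reading "deferred convergence checking" as a periodic check are all assumptions for illustration; this is not PyCUDA's solver, which works on the packeted sparse format.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]

def dot(u: Vector, v: Vector) -> float:
    return sum(ui * vi for ui, vi in zip(u, v))

def matvec(A: Matrix, v: Vector) -> Vector:
    return [dot(row, v) for row in A]

def cg(A: Matrix, b: Vector, tol: float = 1e-10,
       max_iter: int = 1000, check_every: int = 4) -> Tuple[Vector, int]:
    """Conjugate gradients for symmetric positive definite A, starting at x = 0."""
    x = [0.0] * len(b)
    r = list(b)                      # r = b - A x, with x = 0
    p = list(r)
    rr = dot(r, r)
    threshold = tol * tol * dot(b, b)
    it = 0
    for it in range(1, max_iter + 1):
        if rr == 0.0:
            break                    # exact solution reached
        Ap = matvec(A, p)
        pAp = dot(p, Ap)
        if pAp == 0.0:
            break                    # guard against division by zero
        alpha = rr / pAp
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rr_new = dot(r, r)
        # Deferred check: only inspect the residual every `check_every` steps
        # (on a GPU, this is where the device-to-host transfer would happen).
        if it % check_every == 0 and rr_new <= threshold:
            break
        beta = rr_new / rr
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rr = rr_new
    return x, it

A = [[4.0, 1.0, 0.0],
     [1.0, 3.0, 1.0],
     [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
x, iterations = cg(A, b)
print(x, iterations)
```

The trade-off is a few possibly wasted iterations past convergence in exchange for fewer synchronizations per iteration.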

Step 4: Debugging
New in 0.94.1: support for CUDA gdb:

    $ cuda-gdb --args python -m pycuda.debug demo.py

Automatically:
- Sets compiler flags
- Retains source code
- Disables compiler cache

Part 4: Run-Time Code Generation
(Writing Code when the most Knowledge is Available)

GPU Programming: Implementation Choices
- Many difficult questions
- Insufficient heuristics
- Answers are hardware-specific and have no lasting value
Proposed Solution: Tune automatically for hardware at run time, cache tuning results.
- Decrease reliance on knowledge of hardware internals
- Shift emphasis from tuning results to tuning ideas
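A minimal sketch of "tune at run time, cache the result": time each candidate variant once per hardware key, remember the winner, and skip timing on later calls. The variant functions, the `"hypothetical-gpu-0"` device key, and the in-memory dictionary are assumptions for illustration; a real tuner would time compiled GPU kernels (with synchronization and repetitions) and would likely persist its cache to disk.

```python
import time
from typing import Callable, Dict, Optional

_tuning_cache: Dict[str, str] = {}
timing_runs = 0  # counts how many variant timings were actually performed

def autotune(device_key: str, variants: Dict[str, Callable[[], object]],
             repeats: int = 3) -> str:
    """Return the fastest variant's name for device_key, timing only on a cache miss."""
    global timing_runs
    cached = _tuning_cache.get(device_key)
    if cached is not None:
        return cached                      # reuse the earlier tuning result
    best_name: Optional[str] = None
    best_time = float("inf")
    for name, fn in variants.items():
        start = time.perf_counter()
        for _ in range(repeats):
            fn()
        elapsed = time.perf_counter() - start
        timing_runs += 1
        if elapsed < best_time:
            best_name, best_time = name, elapsed
    assert best_name is not None, "need at least one variant"
    _tuning_cache[device_key] = best_name
    return best_name

def sum_builtin() -> int:
    return sum(range(20000))

def sum_loop() -> int:
    total = 0
    for i in range(20000):
        total += i
    return total

variants = {"builtin": sum_builtin, "loop": sum_loop}
choice = autotune("hypothetical-gpu-0", variants)   # times both variants
again = autotune("hypothetical-gpu-0", variants)    # served from the cache
print(choice, again == choice, timing_runs)
```

Keying the cache on the device is what keeps the "hardware-specific answers" from leaking across machines.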

Metaprogramming
In GPU scripting, GPU code does not need to be a compile-time constant.
(Key: Code is data; it wants to be reasoned about at run time.)
Idea → Python Code → GPU Code → GPU Compiler → GPU Binary → GPU → Result
- A human turns the idea into Python code; from there on, the machine does the work.
- Python code is good for code generation.
- PyCUDA / PyOpenCL take the generated GPU code through compilation and execution.

Machine-generated Code
Why machine-generate code?
- Automated tuning (cf. ATLAS, FFTW)
- Data types
- Specialize code for the given problem
- Constants faster than variables (→ register pressure)
- Loop unrolling
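To make "constants faster than variables" and "loop unrolling" concrete, here is a small code generator using only Python's standard string formatting: the data type, scale factor, and unroll count are baked into the CUDA source as literals instead of being passed as run-time arguments. The function `make_scale_kernel` and its parameters are hypothetical; the output is just source text that would then be handed to a compiler, e.g. via `SourceModule`.

```python
def make_scale_kernel(dtype: str, factor: float, unroll: int) -> str:
    """Generate CUDA C source for x[i] *= factor, unrolled `unroll` times per thread.

    Assumes the array length is a multiple of blockDim.x * unroll (no bounds check).
    """
    body = "\n".join(
        f"  x[base + {k} * blockDim.x] *= ({dtype}) {factor!r};"
        for k in range(unroll))
    return (
        f"__global__ void scale({dtype} *x)\n"
        "{\n"
        f"  int base = blockIdx.x * blockDim.x * {unroll} + threadIdx.x;\n"
        f"{body}\n"
        "}\n")

src = make_scale_kernel("float", 2.5, unroll=3)
print(src)
```

Each distinct `(dtype, factor, unroll)` yields a different kernel, which is exactly why a compile cache matters for this style of programming.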

RTCG via Templates

    from jinja2 import Template
    import pycuda.autoinit
    from pycuda.compiler import SourceModule

    block_size = 4            # example values; the slide leaves them unspecified
    thread_block_size = 128

    tpl = Template("""
        __global__ void twice({{ type_name }} *tgt)
        {
          int idx = threadIdx.x +
            {{ thread_block_size }} * {{ block_size }}
            * blockIdx.x;

          {% for i in range(block_size) %}
              {% set offset = i*thread_block_size %}
              tgt[idx + {{ offset }}] *= 2;
          {% endfor %}
        }""")

    rendered_tpl = tpl.render(
        type_name="float", block_size=block_size,
        thread_block_size=thread_block_size)

    smod = SourceModule(rendered_tpl)
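The template assigns each thread `block_size` elements spaced `thread_block_size` apart, so one CUDA block of `thread_block_size` threads covers `thread_block_size * block_size` consecutive elements. The plain-Python check below replays that index arithmetic to confirm every element is written exactly once; the helper name `touched_indices` and the sizes used are illustrative, not from the talk.

```python
from collections import Counter

def touched_indices(n_blocks: int, thread_block_size: int, block_size: int) -> Counter:
    """Count how often each array index is written by the templated `twice` kernel."""
    hits: Counter = Counter()
    for block_idx in range(n_blocks):                  # blockIdx.x
        for thread_idx in range(thread_block_size):    # threadIdx.x
            idx = thread_idx + thread_block_size * block_size * block_idx
            for i in range(block_size):                # the unrolled {% for %} loop
                offset = i * thread_block_size
                hits[idx + offset] += 1
    return hits

hits = touched_indices(n_blocks=3, thread_block_size=8, block_size=4)
total = 3 * 8 * 4
print(sorted(hits) == list(range(total)), set(hits.values()))
```

Spacing the unrolled accesses `thread_block_size` apart keeps neighbouring threads on neighbouring addresses in every unrolled step, which is what allows coalesced memory access.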

Part 5: Showcase
(Python + GPUs in Action; Conclusions)

Discontinuous Galerkin Method
Let $\Omega := \bigcup_k D_k \subset \mathbb{R}^d$.
Goal: Solve a conservation law on $\Omega$: $u_t + \nabla \cdot F(u) = 0$
Example: Maxwell's Equations: EM field $E(x,t)$, $H(x,t)$ on $\Omega$ governed by
$$\partial_t E - \frac{1}{\varepsilon} \nabla \times H = -\frac{j}{\varepsilon}, \qquad \partial_t H + \frac{1}{\mu} \nabla \times E = 0,$$
$$\nabla \cdot E = \frac{\rho}{\varepsilon}, \qquad \nabla \cdot H = 0.$$

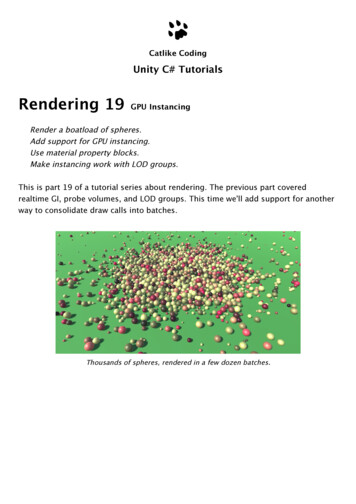

GPU DG Showcase
- Electromagnetism
- Poisson
- CFD
- Shock-laden flows

GPU-DG: Performance
[Figure: performance as a function of polynomial order N]

16 T10s vs. 64 = 8 × 2 × 4 Xeon E5472 cores
[Figure: Flop Rates and Speedups: 16 GPUs vs 64 CPU cores; GFlops/s for GPU and CPU vs. polynomial order N]
Tim Warburton: Shockingly fast and accurate CFD simulations
Wednesday, 11:00–11:50
(Several posters/talks on GPU-DG at GTC.)

Computational Visual Neuroscience
Nicolas Pinto: Easy GPU Metaprogramming: A Case Study in Biologically-Inspired Computer Vision
Thursday, 10:00–10:50, Room A1

Copperhead

    from copperhead import *
    import numpy as np

    @cu
    def axpy(a, x, y):
        return [a * xi + yi for xi, yi in zip(x, y)]

    x = np.arange(100, dtype=np.float64)
    y = np.arange(100, dtype=np.float64)

    with places.gpu0:
        gpu = axpy(2.0, x, y)

    with places.here:
        cpu = axpy(2.0, x, y)

Bryan Catanzaro: Copperhead: Data-Parallel Python for the GPU
Wednesday, 15:00–15:50 (next slot!), Room N

Conclusions
- Fun time to be in computational science
- Even more fun with Python and Py{CUDA,OpenCL}
  - With no compromise in performance
- GPUs and scripting work well together
  - Enable metaprogramming
- The "right" way to develop computational codes
  - Bake all run-time-available knowledge into code

Where to from here?
More at... http://mathema.tician.de/
- CUDA-DG: A. Klöckner, T. Warburton, J. Bridge, J. S. Hesthaven, "Nodal Discontinuous Galerkin Methods on Graphics Processors", J. Comp. Phys., 2009.
- GPU RTCG: A. Klöckner, N. Pinto et al., "PyCUDA: GPU Run-Time Code Generation for High-Performance Computing", in prep.

Questions?
Thank you for your attention!
http://mathema.tician.de/
(image credits)

Image Credits
- Fermi GPU: Nvidia Corp.
- C870 GPU: Nvidia Corp.
- Python logo: python.org
- Old Books: flickr.com/ppdigital
- Adding Machine: flickr.com/thomashawk
- Floppy disk: flickr.com/ethanhein
- Thumbs up: sxc.hu/thiagofest
- OpenCL logo: Ars Technica/Apple Corp.
- Newspaper: sxc.hu/brandcore
- Boost C++ logo: The Boost C++ project
- ?/! Marks: sxc.hu/svilen001
- Machine: flickr.com/13521837@N00

Python Code GPU Code GPU Compiler GPU Binary GPU Result Machine Human In GPU scripting, GPU code does not need to be a compile-time constant. (Key: Code is data{it wants to be reasoned about at run time) Good for code generation A enCL Andreas Kl ockner PyCUDA: Even Simpler GPU Programming with Python