Transcription

IEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS, VOL. 17, NO. 1, JANUARY 19983Faster Minimization of LinearWirelength for Global PlacementCharles J. Alpert, Member, IEEE, Tony F. Chan, Andrew B. Kahng, Associate Member, IEEE,Igor L. Markov, Student Member, IEEE, and Pep Mulet, Member, IEEEAbstract— A linear wirelength objective more effectively captures timing, congestion, and other global placement considerations than a squared wirelength objective. The GORDIAN-L cellplacement tool [19] minimizes linear wirelength by first approximating the linear wirelength objective by a modified squaredwirelength objective, then executing the following loop—1) minimize the current objective to yield some approximate solutionand 2) use the resulting solution to construct a more accurateobjective—until the solution converges. This paper shows howto apply a generalization [5], [6] of a 1937 algorithm due toWeiszfeld [22] to placement with a linear wirelength objective andthat the main GORDIAN-L loop is actually a special case of thisalgorithm. We then propose applying a regularization parameterto the generalized Weiszfeld algorithm to control the tradeoffbetween convergence and solution accuracy; the GORDIAN-Literation is equivalent to setting this regularization parameter tozero. We also apply novel numerical methods, such as the primalNewton and primal-dual Newton iterations, to optimize the linearwirelength objective. Finally, we show both theoretically andempirically that the primal-dual Newton iteration stably attainsquadratic convergence, while the generalized Weiszfeld iterationis linear convergent. Hence, primal-dual Newton is a superiorchoice for implementing a placer such as GORDIAN-L, or forany linear wirelength optimization.Index Terms—Analytic placement, GORDIAN-L, interconnectdelay, linear placement, linear wirelength, Newton, primal dual,quadratic placement, Weiszfeld.I. INTRODUCTIONTHE placement phase of layout has a significant impacton routability and performance of a given IC design.Quadratic placement techniques have received wide attentionover the past decade, since they are efficient enough tohandle large designs while retaining good solution quality.The quadratic placement approach can be traced back to [3],[8], [21], [23], and other early works. The basic idea is tosolve recursively generated sparse systems of linear equations,where each system captures a one-dimensional (1-D) placement problem with minimum squared wirelength objective.The solution to any given 1-D placement can be refined inManuscript received June 20, 1997. An earlier version of this paper waspresented at ISPD-97. This work was supported by a grant from CadenceDesign Systems, San Jose, CA 95134 USA. This paper was recommended byAssociate Editor M. Sarrafzadeh.C. J. Alpert is with the IBM Austin Research Laboratory, Austin, TX 78758USA (e-mail: alpert@austin.ibm.com).T. F. Chan, I. L. Markov, and P. Mulet are with the MathematicsDepartment, University of California, Los Angeles 90095 USA.A. B. Kahng is with the Computer Science Department, University ofCalifornia, Los Angeles 90095 USA.Publisher Item Identifier S 0278-0070(98)02052-1.various ways, e.g., the PROUD algorithm of [21] appliesmin-cut partitioning to induce hierarchical subproblems, andthe GORDIAN algorithm of [13] applies min-cut partitioningas well as center-of-gravity constraints that define a moreconstrained version of the original problem.The heart of the quadratic placement technique lies insolving for the 1-D placements. Two such placements inthe - and -directions will induce a two-dimensional (2-D)“global placement,” which due to the objective function andcontinuous formulation will have most cells clumped in thecenter of the layout region. Quadratic placers vary mostly inhow they map a global placement to a feasible cell placement(i.e., with nonoverlapped cells in legal locations). Min-cutpartitioning and center-of-gravity constraints are simply ameans of gradually “spreading out” the global placementduring the course of this mapping. The well-known example ofGORDIAN [13] uses a conjugate-gradient iteration to solve foroptimal cell locations under the squared wirelength objectivethen partitions the cells by assigning each cell to one of fourcenters of gravity that correspond to the four quadrants of thelayout. Constraints are defined such that all cells assigned to agiven center of gravity must have average - and -coordinatesat that location. The numerical optimization is performed againwith the added constraints and each quadrant is subdivided intofour subquadrants. GORDIAN terminates when each cell hasbeen assigned to a unique center of gravity.Observe that the squared wirelength objective is appliedonly because it allows the 1-D placement problem to bereduced to the solution of a system of linear equations. Themain difficulty with the squared wirelength objective is thatit overpenalizes long wires and underpenalizes short wires.Thus, a strongly connected cluster may be spread out over theplacement, which increases wiring congestion for the router.The extra wiring caused by the spread of highly connectedcomponents can also reduce the routing resource flexibilityneeded to satisfy timing and signal integrity constraints.Mahmoud et al. [16] have compared the linear and squaredwirelength objectives for analog placement and concluded thatthe linear wirelength objective is superior. Indeed, whenevera more “detailed” placement objective is feasible, as withsimulated annealing approaches [20], the preferred objectivehas always been based on minimum spanning tree, single-trunkSteiner tree, or bounding-box perimeter routing estimates.Such routing estimates reflect linear wirelength and moreaccurately capture congestion (routing resource utilization) and0278–0070/98 10.00 1998 IEEE

4IEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS, VOL. 17, NO. 1, JANUARY 1998interconnect-related signal delay.1 Works such as [18] havefurther shown that a linear wirelength objective can be used toform 1-D placements that directly yield effective bipartitioningsolutions.The 1991 work of Sigl et al. [19] proposed an important modification of GORDIAN, called GORDIAN-L, whichoptimizes the linear wirelength objective.2 Since the linearwirelength objective cannot be addressed directly by numericalmethods, GORDIAN-L approximates the linear objective by aquadratic objective, then executes the following loop: 1) minimize the current quadratic objective to yield some approximatesolution and 2) use this solution to find a better quadraticobjective until the solution converges. GORDIAN-L achievessolutions with up to 20% less area than GORDIAN whilesignificantly reducing routing density and total minimum spanning tree cost [19]; it has been used widely in industry for bothASIC and structured-custom design (e.g., Motorola PrediXfloorplanner, Siemens LINPLACE placer, etc.). However, theGORDIAN-L improvement comes at the price of significantlyincreased central processing unit (CPU) cost ([19] reports afactor of five increase over GORDIAN). To achieve reducedCPU cost, or substantially improved solution accuracy withingiven CPU cost bounds, our work has developed alternativenumerical methods for linear wirelength minimization.The purpose of our paper is to apply new numerical methodsto the problem of analytic very large scale integration (VLSI)placement with a linear wirelength objective. Our contributionsare as follows. We show that the main GORDIAN-L loop can be viewedas a special case of a generalized version [5], [6] of a1937 algorithm due to Weiszfeld [24]. This relationshipallows us to extend the technique to other objectives. The GORDIAN-L solver uses a technique called minimalgate width (cf., p. 429 of the original GORDIAN-L paper[19]) to avoid numerical errors when the distance betweentwo placed objects is close to zero. While we can usethe minimal gate width with generalized Weiszfield, weinstead propose using a -regularization parameter tocontrol the tradeoff between convergence and solution accuracy. We show that the original GORDIAN-L iterationwith zero minimal gate width is equivalent to settingequal to zero.1 Recent literature has noted that a first-moment RC delay estimate suchas Elmore delay [7] has a term that is quadratic in the length of a sourcesink routing path. In performance-driven deep-submicron design, this factneeds to be taken into some perspective. We observe that a local net [say,O(100) m in length] will typically be driven by a small device and routedon high-impedance lower routing layers: when the driver resistance is high,the dominant portion of interconnect-related delay is from capacitive loading,i.e., it is linearly dependent on total wirelength. On the other hand, a (timingcritical) global net [say, O(1000) m in length] will be driven by a largerdevice and routed (with appropriate spacing and topology) on wider, lowerimpedance upper layers. Even if the ratio of driver/wire resistances is lowenough for wire resistance effects to become significant, design methodologysuch as repeater insertion (needed to reduce gate load delay and improve noiseimmunity, as well as reduce interconnect delay) and wire width sizing willyield interconnect-related delay that is more “linear” than “quadratic.”2 Morerecent works also discuss the linear wirelength objective. Notably,Li et al. [14] use spectral techniques and clustering to address a combinationof the linear and squared wirelength objectives; see also [10]. We note that our generalization of GORDIAN-L canhandle not only linear wirelength, but also wirelength of.an arbitrary real exponent Next, we apply modern numerical methods such as primalNewton and primal-dual Newton to placement with alinear wirelength objective. While such methods havebeen previously used in fields such as image processing,our work is the first to mathematically derive how suchmethods can be applied to the linear wirelength placement objective. We further show that primal-dual Newtonstably attains quadratic convergence, thus improving substantially over the linearly convergent Weiszfeld iteration.Primal-dual Newton solves the same problem that theGORDIAN-L engine solves, except that it is more general(thus enabling other placement formulations). Extensive experiments show that primal-dual Newton converges significantly faster than the Weiszfeld(GORDIAN-L) solver over a range of instance complexities and -regularization regimes. It is straightforward tointegrate the primal-dual Newton iteration into existingnumerical placement engines.II. PRELIMINARIESA quadratic placer takes a netlist hypergraph as input andproduces a placement of the cells. To apply existing numericaloptimizations, the netlist must first be transformed into a graph.conDefinition: An undirected weighted graphand a set ofsists of a set of verticesedgeswhere each edge is an unorderedpair of vertices. A weight functionassigns anonnegative weightto each edge in .To convert the netlist into a graph, the authors of [13] usea star model wherein a new “net node” is created for eachnet in the netlist, and an edge is added between the net nodeand each cell connected to the net. Placing the net node at thecenter of its incident cells (as GORDIAN assumes) makes thestar model equivalent to a clique model which introduces anbetween every pair of cells incident to aedge of weightgiven -pin net. The total squared wirelength will be the samefor any placement under either the star or clique models.adjacency matrixfor theDefinition: Thegraph has entryifandotherwise.Definition: TheLaplacian matrixofhas entryequal toifand entryequal to, i.e.,is the degree of vertex .Definition: The -dimensional placement vectorcorresponds to the physical locations of cellsonthe real line, i.e.,is the coordinate of vertex .GORDIAN [13] uses a squared wirelength objective.Squared Wirelength Formulation: Minimizes.t.(1)is aconstraint matrix that represents centerHere,of gravity constraints [a special case is that of fixed (pad)

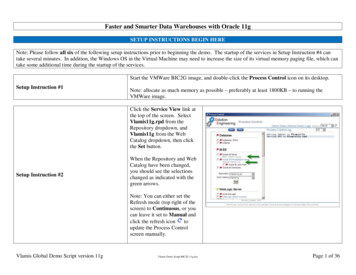

ALPERT et al.: FASTER MINIMIZATION OF LINEAR WIRELENGTH5locations]. Vector gives the coordinates of the centers ofgravity. For belonging to the th group of vertices, theentry ofis set to be, whereis the total number ofrepresentsvertices in the group. The optional linear termconnections of cells to fixed I/O pads. The vector can alsocapture pin offsets [19].Definition: Theincidence matrixforrepresents the relationship between edges and vertices of. For each edge,and; the orientation of edges (signs ofand) can be arbitrary. All other entries of are zero.Note that there arenonzero entries inand that eachrow sum is zero. The GORDIAN-L linear wirelength objectiveis as follows.Linear Wirelength Formulation: Minimizes.t.(2)A termcan again be added to incorporate pin offsets andconnections to pads.III. GORDIANANDGORDIAN-LGORDIAN [13] obtains a placement by repeatedly optimizing, alternating between the horizontal and verticaldirections. Constraints for the optimizations are respectivelyand, corresponding to thegiven byand directions as explained above. To handle nonunit areasfor each vertex , entry can be changed towhereis the sum of the areas of all vertices with centerof gravity .The algorithm must ensure that at each iterationandhave maximal rank and constrain each cell by exactly onecenter of gravity. This implies that there is exactly one nonzeroand.element in each column ofGORDIAN begins with each cell attracted to the samecenter of gravity located in the center of the layout. During aniteration, for each center of gravity we consider the group ofcells (if of size 2, i.e., if the constraint is nontrivial) attractedto it. The corresponding region is cut in two by a vertical orhorizontal line passing through the center of gravity, and twonew groups of cells with respective new centers of gravityreplace the old group. This leads to a final solution wherecells do not overlap.Fig. 1 summarizes the flow of the GORDIAN algorithm.obtained by applyThe algorithm takes as input a graph-clique model to a circuit netlist; it outputs theing thecoordinates of the placed cells. The repeat loop in Steps 2–8continues as long as the number of cells is larger than thenumber of center of gravity constraints. At each iteration, Step3 minimizes a quadratic objective function which is derivedbelow. The resulting placement is used to refine the centerof gravity constraints, yielding left and right constraints andlarger linear systems (Steps 5 and 6). This process is thenrepeated in thedirection (Step 7), with each nontrivialconstraint refined into top and bottom constraints. In eachiteration, the number of subregions can quadruple, so theFig. 1. The GORDIAN solver.number of iterations through the repeat loop in Step 2 is.We find the unique minimizerfordefined in(1) by solving the possibly undetermined constraint systempassing to an unconstrained formulation and finallysolving a quadratic programming problem as follows. Matrixis diagonalized by a permutation of columns into[is-diagonal;has size]. Thisdiagonalization is possible since every column has exactlyone nonzero element and the constraints are nondegenerate.Correspondingly, the placement vectorsplits intoindependent variablesand dependent variables, sothat we can rewriteasor.Inverting the diagonal matrix, we getThis allows us to expressasor aswith obvious notation forand . Wecombine this formula with (1) to reduce the dimension of theunknown minimizer and obtain an unconstrained formulationwhere represents all constant terms. Asdepends ononly, we introduce, so thatwhere. We see now thatgives an ()dimensional unconstrained quadratic programming problem.

6IEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS, VOL. 17, NO. 1, JANUARY 1998To determine its optimal solution, the gradientto zero, yielding thelinear systemis setwhich can be efficiently solved with, e.g., conjugate gradient oranother Krylov subspace solver [9]. Once the optimal valueis obtained, the optimal solution for is given by.GORDIAN-L: Placement with minimum squared wirelength objective has a unique solution that can be foundby solving the corresponding linear system. In contrast,placement with a minimum linear wirelength objectivecan have multiple optimal solutions. For example, a singlemovable cell connected to two fixed pads by edges of equalweight can be optimally placed anywhere between the twopads. In general, the set of optimal placements is closed andlies within the convex hull of fixed pads (see [21]). Directminimization of a linear objective function can be achievedby linear programming, but this is usually computationallyexpensive.Sigl et al. [19] minimize the linear wirelength objectiveby repeatedly applying the GORDIAN quadratic solver.They observe that the linear objective can be rewritten asIfwere constant in the denominator of the lastterm, then a quadratic objective would be obtained and couldbe handled easily. The GORDIAN-L solver first solves theto obtain a reasonable approximation for eachsystemterm. Call this solution . GORDIAN-L then derivessuccessively improved solutionsuntil there is nosignificant difference betweenand. From a given, the next solutionis obtained by minimizingsolution(3)where. Note that the coefficientsare adjusted between iterations. The iterations terminate whenthe factorsno longer change significantly.3 Just as, we can minimizein (3) by applying awithKrylov subspace solver.The GORDIAN algorithm can be transformed intoGORDIAN-L by replacing Step 3 of Fig. 1 with the solvershown in Fig. 2. Note that GORDIAN-L [19] also includesan additional modification. Rather than subdivide each regioninto two subregions, GORDIAN-L subdivides each regioninto three subregions and then minimizes the objective;the result is then used to subdivide the region into fivesubregions and the minimization is performed again. Theresulting solution is used as the output for Step 3 in Fig. 1and centers of gravity are assigned as before. This modification3 Someconvergence criterion must be specified in any implementation. Wedo not know the convergence criterion used in GORDIAN-L, which makesCPU time comparisons impossible.Fig. 2. The GORDIAN-L solver.improves performance but also increases the number of callsto the numerical solver.IV. APPLYING THE WEISZFELD ALGORITHM TO PLACEMENTIn 1937, Weiszfeld [22] proposed a technique to optimizethe placement of nodes in which the instance is a completeunweighted graph. In other words, the objective function isto minimize the total sum of distances between all placedobjects. Later Eckhardt [5], [6] generalized this technique forarbitrary connectivity matrices. Eckhardt proves the globallinear4 convergence of this technique (see also [15]).We show that by choosing the appropriate matrix, Eckhardt’s extension of the Weiszfeld algorithm is actually equivalent to GORDIAN-L (with an initial assumption of zerominimal gate width, as opposed to a small fixed minimal gatewidth). This enables us to apply Eckhardt’s linear convergenceproof to GORDIAN-L. To guarantee numerical stability, atechnique called -regularization is used in the Weiszfeldalgorithm.A. Generalized Weiszfeld Algorithm inthe Context of GORDIAN-LWe now explain the generalized Weiszfeld algorithm in thecontext of the GORDIAN-L numerical solver. The objectiveis to minimizesubject towith the-matriximposing constraints on cell locations.Observe thatwhereis a small constant. The purpose ofisto approximate the nondifferentiable objective function by asmooth function. In order to write the derivative ofcompactly, notice that5(4)4 Choose a norm and let (k ) denote the norm of the residual vector at thek th iteration. Assume that log[ (0) (k )]Bk s for some constant B ands1; 2 . We say that the convergence is linear when s 1 and quadraticwhen s 2.5 HereT j jj a.k.a. the Kronecker product.j2f gC CCC

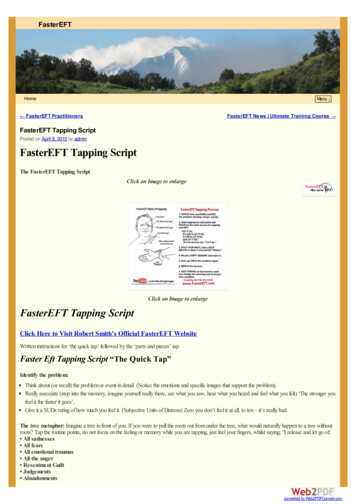

ALPERT et al.: FASTER MINIMIZATION OF LINEAR WIRELENGTH7Henceand the partial derivatives of the Lagrangianare(5)(6)Thus, the original minimization problem has been transformedinto two systems of equations which can be combined to yieldthe nonlinear system(7). This system iswherethe generalized Weiszfeld system of Eckhardt [5], [6]. Thischoice for the matrixenables us to apply this technique toplacement with a linear wirelength objective.To solve this system, we guess an initial approximationand solve the system with. This solution iscalled, which is the next iterate. In general, we computefrom the previous valuebythe Weiszfeld iterate6solving the linear system(8)which we call the low-level system. The iterations continueuntil a convergence criterion is met. For example, such acriterion may be based on the norm of the residual vector[the difference between the left-hand side and the right-handside of (7)]. This is the main flow of the generalized Weiszfeldalgorithm.We now show that this technique is actually the same technique used in the GORDIAN-L solver. Recall that GORDIANL approximates the linear wirelength objective by a quadraticobjective(9)Thus, like the generalized Weiszfeld algorithm, GORDIAN-L)th iterate to solve for the th iterate. Equationuses the ((9) can be rewritten asFig. 3. Comparing the minimal gate width function (MinGW) with the-regularization (Reg) in one dimension. The original objective is represented0:02 and the minimal gate width is set to0:141. Noteby x . Herethat the discontinuous MinGW has continuous one-sided derivative (optimalityand x . By demandingconditions), as its branches are given by x2 2continuity of the objective function and therefore dropping the factor of 1/2,we would make the optimality conditions discontinuous.jj7!mathematical idea behind solving (7) is to build an iteration xkwhose fixed point is the unknown solution. Hence, Weiszfeld can beclassified as a fixed-point method.6 Thexk01pp jjand(10)and using (6), weSettingobtain the same system as in (8) except that the matrixis now replaced with . The only difference between thesetwo matrices is that applying the -regularization techniqueapproximateswith. This is necessary tobecomes tooavoid numerical problems whensmall (cf., Step 3 of Fig. 2). In GORDIAN-L (see [19]), ifthis term becomes smaller than the minimal gate width, it isreplaced with this minimal gate width. In summary, it is simplya matter of using two different schemes ( -regularizationversus minimal gate width, cf. Fig. 3) to guarantee reasonablebehavior of the solver at the cusps of the objective function.Either scheme can be used with Weiszfeld, and we observethat -regularization is superior to using minimal gate width:it is closer to the original objective function and its uniqueminimizer has convenient limit behavior.Theorem: Letbe the -regularization of a linear wire, thenlength objective functiona)uniformly onb),c)Proof: It is enough to prove a) and b) for only one. For a) this is done by the observing that, the inequalities b) for one term are true bysquaring both sides; c) follows from a) and b).To follow up on the minimal gate width, notice that ifone changes the termin the original formulation to, one gets an equivalent of minimal gatewidth, as defined in GORDIAN-L, but on a per-edge basis( can be made quite large if one wishes not to penalize aparticular edge once it is shorter than ). By considering termsof the form, one gets atermfor which the Lagrangian is

8IEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS, VOL. 17, NO. 1, JANUARY 19982-D generalization of the minimal gate width. Both variationsof the objection functions need to be -regularized.In addition to handling the linear wirelength, the Weiszfeldalgorithm can also be applied to the wirelength of an arbitraryby considering termsand the like.real exponentIndeed, to derive Weiszfeld, we only need to differentiate theobjective function (cf., GORDIAN-L whose algorithm relieson a specific form of the obj. function) andis smooth (it), so no -regularization would be needed.is not forB.-RegularizationIn our application of the generalized Weiszfeld algorithmto placement with linear wirelength, the objective functionwas -regularized to bound the denominator in (5) awayfrom zero. We now highlight properties of the regularizedobjective function and its relation to the original placementobjective function.in the origBy changing all expressions of the form, the resulting objective becomesinal objective tostrictly convex and therefore has an unique global minimizer., this minimizer approaches that of the originalAslinear objective, which in turn can have plenty of minimizers.For example, given a single movable vertex connected to twohas uncountablyfixed pads on opposite ends of the layout,many optimal solutions, while the -regularization will have).only one (forClearly, as increases, the disparity between the regularizedobjective and the original objective increases as well (and thederived solutions will be further from optimal). On the otherhand, if is too small, the derivative of the objective function(which is used in variational methods) will behave badly nearpoints where the original objective is not smooth: matrices inlinear systems will become ill-conditioned and solving themwill become computationally expensive if not impossible.The first question is: “How should be expressed to havecomparable effects for various unrelated placement problems?”In the original objective function, all expressions of form are. These expressions are upper bounded by ,actuallythe length of placement interval which varies across differentplacement instances. If we set, whereis a smallnumber, thenwithandbeing on order of 100 for any placement problem.Our experiments show that in this way we obtain similarbehavior for different placement problems if we use the samevalue of —independent of problem size. We have workedwith values ranging from 101 to 10 7 .The second question, how to choose good values of ,is harder; the answer largely depends on how the solutionsproduced by the Weiszfeld method are used. One can repeatedly solve Weiszfeld for decreasing values ofandstop when the difference between successive placements issmall; alternatively, one can stop when the objective functionstabilizes. Both strategies can lead to premature stopping, andfinding a good heuristic is an open question.V. THE PRIMAL-NEWTON METHODThe Newton approach is often used as a base for developing more sophisticated methods with superlinear convergence(e.g., in [2], [15]). In this section, we develop what we call theprimal-Newton method for minimizing the linear wirelengthobjective. Our main purpose is to introduce the reader totechniques that we will use in developing the primal-dualNewton method.7 Primal-dual will also be a Newton method,but with an additional set of dual variables. Because theprimal-dual Newton method converges at least as fast as theprimal Newton method and is more stable (i.e., its region ofconvergence is strictly larger), we do not report experimentaldata for primal Newton.s. t.,Consider minimizingwhere the-matriximposes constraints on celllocations. As before,contains only two nonzeroentries, plus and minus the th edge weight, at locationscorresponding to the two vertices of the edge.The Lagrangian for this problem is(11)Taking partial derivatives and using (4) gives us(12)(13)Applying the Newton method to this nonlinear system, werewrite the system (invoking the fact that rows of matrixare preciselyand applying some linear algebra) as follows:(14)where(15)and the following notation is used. , with defined as for the Weiszfeldalgorithm.is andiagonal matrix with values in the th row equal to is andiagonal matrix with values in the throw equal toAt the end of each iteration, we update and asStarting with initial values ofand , we compute corand, then updateresponding values forand by solving the system in (14). This is repeated untilsome convergence criterion is met. We call this particularimplementation of the Newton method primal Newton.7 The key issue addressed by primal-dual Newton is global convergence,which primal Newton lacks. No precise mathematical statement about globalconvergence of primal-dual Newton has been proven, but its reliable convergence properties have been observed in the literature (e.g., [2], [15]) and ourexperiments.

ALPERT et al.: FASTER MINIMIZATION OF LINEAR WIRELENGTH9The primal-Newton method does not possess any kind ofglobal convergence property. Local convergence takes place(a proof can be found in [17]) but we do not know of anyestimates of the size of the local convergence region. Onecan use various globalization techniques (e.g., line searchand trust regions) to guarantee convergence of the primalNewton method everywhere. However, all of these globalization techniques are known to be inefficient in a numberof applications (such as placement and image processing)due to the small size of the region where primal Newtonconverges quadratically. Modifications to a Newton methodwhich allow it to achieve global convergence can be found in[15] where a corresponding theorem is proven and numericalresults demonstrating advantages over the Weiszfeld methodare shown. Reference [15] also contains a discussion ofdegeneracy, a feature of some placement problems for whichNewton-like methods are only linearly convergent.The various considerations related to top-level stoppingcriteria for Weiszfeld in the previous section do not carryover to the Newton method since we are searching forwhich cannot be characterized as satisfying a particular linearsystem. In other words, we do not have an analogue forthe residual norm. However, convergence criteria in termsis theof successive iterates are easily defined sincedifference between successive iterates. Alternatively, variousconvergence criteria can be deduced from the observation thatthe nonlinear residual, the right-hand side of

4 ieee transactions on computer-aided design of integrated circuits and systems, vol. 17, no. 1, january 1998 interconnect-related signal delay. 1 Works such as [18] have further shown that a