Transcription

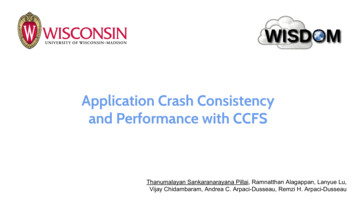

Application Crash Consistency and Performance with CCFS
Thanumalayan Sankaranarayana Pillai, Ramnatthan Alagappan, Lanyue Lu, Vijay Chidambaram, Andrea C. Arpaci-Dusseau, Remzi H. Arpaci-Dusseau

Application-Level Crash Consistency
Storage must be robust even with system crashes
- Power loss (2016 UPS issues: GitHub outage, Internet outage across UK) [source: www.datacenterknowledge.com]
- Kernel bugs [Lu et al., OSDI 2014, Palix et al., ASPLOS 2011, Chou et al., SOSP 2001]
Applications need to implement crash consistency
- E.g., Database applications ensure transactions are atomic
Applications implement crash consistency wrongly
- Pillai et al., OSDI 2014 (11 applications) and Zhou et al., OSDI 2014 (8 databases)
- Conclusion: All applications had some form of incorrectness

Ordering and Application Consistency
App crash consistency depends on FS behavior [Pillai et al., OSDI 2014]
- E.g., Bad FS behavior: 60 vulnerabilities in 11 applications
- Good FS behavior: 10 vulnerabilities in 11 applications
FS-level ordering is important for applications
- All writes should (logically) be persisted in their issued order
- Major factor affecting application crash consistency
Few FS configurations provide FS-level ordering
- Ordering is considered bad for performance

In this paper ...
Stream abstraction
- Allows FS-level ordering with little performance overhead
- Needs a single, backward-compatible change to user code
- Flexible: More code changes improve performance
Crash-Consistent File System (CCFS)
- Efficient implementation of stream abstraction on ext4
- High performance similar to ext4
- Noticeably higher crash consistency for applications

Outline
- Introduction
- Background
- Stream API
- Crash-Consistent File System
- Evaluation
- Conclusion

File-System Behavior
Each file system behaves differently across a crash
- Little standardization of behavior across crashes
FS Crash Behavior
- Atomicity: Are the effects of a write() system call atomic on a system crash?
  - Directory operations: E.g., rename() atomic?
  - File writes: Entire system call? Sector-level? ...
- Ordering: creat(A); creat(B); Is it possible after a crash that B exists, but A does not?
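
The ordering question above can be made concrete with a small sketch. It is only an illustration, assuming two simplified models that are not taken from the slides: an order-preserving file system exposes only prefixes of the issued operations after a crash, while a file system without ordering guarantees may expose any subset. The function name `crash_states` is hypothetical.

```python
from itertools import combinations

def crash_states(ops, ordered):
    """Return the sets of operations that may have reached disk at a crash."""
    if ordered:
        # Order-preserving model: only prefixes of the issued sequence survive.
        return [tuple(ops[:n]) for n in range(len(ops) + 1)]
    # No ordering guarantee: any subset of the operations may have persisted.
    return [c for n in range(len(ops) + 1) for c in combinations(ops, n)]

ops = ["creat(A)", "creat(B)"]
ordered_states = crash_states(ops, ordered=True)
reordered_states = crash_states(ops, ordered=False)

# "B exists, but A does not" is only reachable when re-ordering is allowed.
print(("creat(B)",) in ordered_states)    # False
print(("creat(B)",) in reordered_states)  # True
```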

Vulnerabilities Study
Previous work: App crash consistency vs FS behavior [Pillai et al., OSDI 2014]
“Vulnerability”: Place in application source code that can lead to inconsistency, depending on FS behavior

Vulnerabilities Study: Results
[Table: vulnerabilities per application (columns include VMWare, HDFS, ZooKeeper, Total) for each file-system behavior, under the safest application configuration]
- Under an ext2-like FS with few guarantees of atomicity and ordering, 60 vulnerabilities are exposed
  - Serious consequences: unavailability, data loss
- Under btrfs, with atomicity but lots of re-ordering, 31 vulnerabilities
- Under data-journaled ext3, with both atomicity and ordering, 10 vulnerabilities
  - Minor consequences
Consequence labels shown on the slides: repository corruption, unavailability, dirstate corruption

Real-world vs Ideal FS behavior
Ideal behavior: Ordering, “weak atomicity”
- All file system updates should be persisted in-order
- Writes can split at sector boundary; everything else atomic
Modern file systems already provide weak atomicity
- E.g.: Default modes of ext4, btrfs, xfs
Only rarely used FS configurations provide ordering
- E.g.: Data-journaling mode of ext4, ext3

Background: Summary
File-system behavior affects application consistency
- Behavior is not standardized
- 60 vulnerabilities with ext2-like FS; 10 with well-behaved FS
Desired behavior: Ordering and weak atomicity
- Weak atomicity already provided by modern file systems
- Ordering provided only by rarely-used FS configurations

Why not use an order-preserving FS?
Some existing file systems preserve order
- Example: ext3 and ext4 under data-journaling mode
- Performance overhead?
New techniques are efficient in maintaining order
- CoW, optimized forms of journaling
- Ordering doesn’t require disk-level seeks
Reason: False ordering dependencies
- Inherent overhead of ordering, irrespective of technique used

False Ordering Dependencies
Time 1: Application A: pwrite(f1, 0, 150 MB);
Time 2: Application B: write(f2, “hello”);
Time 3: Application B: write(f3, “world”);
Time 4: Application B: fsync(f3);
In a globally ordered file system ...
- write(f1) has to be sent to disk before write(f2)
- 2 seconds, irrespective of implementation used to get ordering!
Problem: Ordering between independent applications
Solution: Order only within each application
- Avoids performance overhead, provides app consistency

Stream Abstraction
New abstraction: Order only within a “stream”
- Each application is usually put into a separate stream
Time 1: Application A (stream-A): pwrite(f1, 0, 150 MB);
Time 2: Application B (stream-B): write(f2, “hello”);
Time 3: Application B (stream-B): write(f3, “world”);
Time 4: Application B (stream-B): fsync(f3);
- 0.06 seconds
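
To see why the false dependency is costly, the following sketch counts how many bytes must reach disk before fsync(f3) can return, under global ordering and under per-stream ordering. It is a back-of-the-envelope model rather than a measurement: the function name `bytes_before_fsync` and the tuple layout of the trace are hypothetical, and no timing is derived from it.

```python
MB = 1024 * 1024

def bytes_before_fsync(trace, fsync_file, per_stream):
    """Bytes that must be persisted before fsync(fsync_file) may return.

    trace: writes issued before the fsync, as (stream, file, nbytes) tuples.
    """
    # The stream that owns the file being fsync'ed.
    target_stream = next(s for s, f, _ in trace if f == fsync_file)
    total = 0
    for stream, _file, nbytes in trace:
        # Global order: every earlier write counts.
        # Stream order: only earlier writes of the same stream count.
        if not per_stream or stream == target_stream:
            total += nbytes
    return total

trace = [
    ("A", "f1", 150 * MB),      # pwrite(f1, 0, 150 MB)
    ("B", "f2", len("hello")),  # write(f2, "hello")
    ("B", "f3", len("world")),  # write(f3, "world")
]

global_bytes = bytes_before_fsync(trace, "f3", per_stream=False)
stream_bytes = bytes_before_fsync(trace, "f3", per_stream=True)
print(global_bytes, stream_bytes)
```

In this model, Application B waits for all of Application A's 150 MB under global ordering, but only for its own 10 bytes when ordering is limited to its stream.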

Stream API: Normal Usage
New set_stream() call
- All updates after set_stream(X) associated with stream X
- When process forks, previous stream is adopted
Application A: set_stream(A); then pwrite(f1, 0, 150 MB); at time 1
Application B: set_stream(B); then write(f2, “hello”); at time 2, write(f3, “world”); at time 3, fsync(f3); at time 4
Using streams is easy
- Add a single set_stream() call in beginning of application
- Backward-compatible: set_stream() is no-op in older FSes

Stream API: Extended Usage
set_stream() is versatile
- Many applications can be assigned the same stream
- Threads within an application can use different streams
- Single thread can keep switching between streams
Ordering vs durability: stream_sync(), IGNORE_FSYNC flag
- Applications use fsync() for both ordering and durability [Chidambaram et al., SOSP 2013]
- IGNORE_FSYNC ignores fsync(), respects stream_sync()
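
The interplay between fsync(), stream_sync(), and IGNORE_FSYNC can be sketched with a toy in-memory model. This is not the CCFS interface: the slides do not give the calls' signatures, so the class `ToyStreamFS`, its method parameters, and the `ignore_fsync` keyword are all hypothetical stand-ins.

```python
class ToyStreamFS:
    """Toy model: buffered writes per stream, flushed on (stream_)sync."""

    def __init__(self):
        self.current = None     # stream of the calling thread
        self.ignore_fsync = {}  # stream -> whether fsync() is ignored
        self.pending = {}       # stream -> buffered writes, in issue order
        self.durable = []       # writes that reached "disk", in order

    def set_stream(self, stream, ignore_fsync=False):
        self.current = stream
        self.ignore_fsync[stream] = ignore_fsync
        self.pending.setdefault(stream, [])

    def write(self, name, data):
        self.pending[self.current].append((name, data))

    def _flush(self):
        # Persist the current stream's writes in the order they were issued.
        self.durable.extend(self.pending[self.current])
        self.pending[self.current] = []

    def fsync(self):
        # With IGNORE_FSYNC set, fsync() costs nothing; order is still kept.
        if not self.ignore_fsync[self.current]:
            self._flush()

    def stream_sync(self):
        # Explicit durability request, honoured even with IGNORE_FSYNC.
        self._flush()

fs = ToyStreamFS()
fs.set_stream("A", ignore_fsync=True)
fs.write("log", "x")
fs.fsync()                     # ignored: nothing becomes durable yet
after_fsync = list(fs.durable)
fs.write("log", "y")
fs.stream_sync()               # both writes become durable, in order
print(after_fsync, fs.durable)
```

The point of the sketch is the division of labour: ordering comes from the stream itself, so an application that only needed fsync() for ordering can skip its cost and call stream_sync() where it really needs durability.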

Streams: Summary
In an ordered FS, false dependencies cause overhead
- Inherent overhead, independent of technique used
Streams provide order only within application
- Writes across applications can be re-ordered for performance
- For consistency, ordering required only within application
Easy to use!

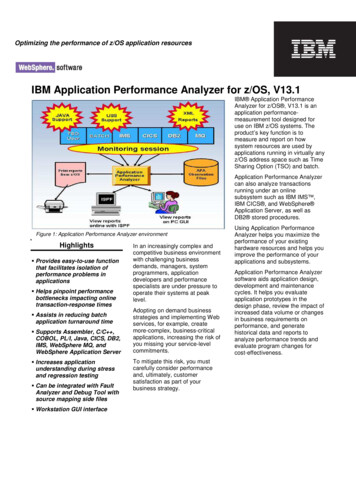

CCFS: Design
“Crash consistent file system”
- Efficient implementation of stream abstraction
Basic design: Based on ext4 with data-journaling
- Ext4 data-journaling guarantees global ordering
- Ordering across all applications: false dependencies
- CCFS uses separate transactions for each stream
Multiple challenges

Ext4 Journaling: Global Order
[Figure: applications A and B, the running transaction in main memory, and the on-disk journal]
- Ext4 has 1) a main-memory structure, the “running transaction”, and 2) an on-disk journal structure
- Application modifications are recorded in the main-memory running transaction (Application A: modify blocks #1, #3; Application B: modify blocks #2, #4)
- On fsync() call, the running transaction is “committed” to the on-disk journal (begin, blocks 1, 3, 2, 4, end)
- Further application writes (modify blocks #5, #6) are recorded in a new running transaction and committed
- On system crash, on-disk journal transactions are recovered atomically, in sequential order
Global ordering is maintained!
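
The commit and recovery steps above can be mimicked with a toy journal. This is a simplified sketch, not ext4's actual on-disk format: the class `ToyJournal`, the `recover` function, and the use of the strings "begin" and "end" as records are assumptions made for illustration.

```python
class ToyJournal:
    """One running transaction shared by all applications (global order)."""

    def __init__(self):
        self.running = []  # block numbers modified since the last commit
        self.journal = []  # on-disk records: "begin", block numbers, "end"

    def modify(self, *blocks):
        self.running.extend(blocks)

    def fsync(self):
        # Commit the whole running transaction, whoever wrote the blocks.
        self.journal += ["begin", *self.running, "end"]
        self.running = []

def recover(journal):
    """Replay complete transactions in journal order; drop a torn tail."""
    recovered, txn = [], None
    for record in journal:
        if record == "begin":
            txn = []
        elif record == "end":
            if txn is not None:
                recovered.extend(txn)  # transaction is applied atomically
            txn = None
        elif txn is not None:
            txn.append(record)
    return recovered

j = ToyJournal()
j.modify(1, 3)   # Application A
j.modify(2, 4)   # Application B
j.fsync()
j.modify(5, 6)
j.fsync()

full = recover(j.journal)
torn = recover(j.journal[:-1])  # crash before the second "end" was written
print(full)  # [1, 3, 2, 4, 5, 6]
print(torn)  # [1, 3, 2, 4]
```

Because there is a single running transaction, an fsync() by either application commits the other application's blocks too, which is where the false dependency comes from.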

CCFS: Stream Order
[Figure: stream-A and stream-B running transactions in main memory, and the on-disk journal]
Application A: set_stream(A); modify blocks #1, #3
Application B: set_stream(B); modify blocks #2, #4; fsync()
- CCFS maintains a separate running transaction per stream (stream-A transaction: blocks 1, 3; stream-B transaction: blocks 2, 4)
- On fsync(), only that stream is committed
- Ordering maintained within stream, re-order across streams!
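
A minimal sketch of the per-stream idea, assuming the two streams touch disjoint blocks: each stream gets its own running transaction, and fsync() commits only the caller's. The class name `ToyStreamJournal` and the explicit `stream` argument are hypothetical simplifications, not the CCFS implementation.

```python
from collections import defaultdict

class ToyStreamJournal:
    """One running transaction per stream instead of a single global one."""

    def __init__(self):
        self.running = defaultdict(list)  # stream -> blocks in its transaction
        self.journal = []                 # on-disk records

    def modify(self, stream, *blocks):
        self.running[stream].extend(blocks)

    def fsync(self, stream):
        # Only the calling stream's transaction is written to the journal.
        blocks = self.running.pop(stream, [])
        self.journal += ["begin", *blocks, "end"]

j = ToyStreamJournal()
j.modify("A", 1, 3)  # Application A after set_stream(A)
j.modify("B", 2, 4)  # Application B after set_stream(B)
j.fsync("B")

print(j.journal)        # ['begin', 2, 4, 'end']
print(dict(j.running))  # {'A': [1, 3]}
```

Stream A's blocks stay in memory until stream A itself is committed, so B's fsync() no longer pays for A's writes.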

CCFS: Multiple Challenges
Example: Two streams updating adjoining dir-entries
Application A: set_stream(A); create(/X/A)
Application B: set_stream(B); create(/X/B)
Block-1 (belonging to directory X) holds both Entry-A and Entry-B

Challenge #1: Block-Level Journaling
Two independent streams can update same block!
- Application A: set_stream(A); create(/X/A) updates Entry-A in Block-1
- Application B: set_stream(B); create(/X/B) updates Entry-B in Block-1
- Does Block-1 belong to the stream-A transaction or the stream-B transaction?
Faulty solution: Perform journaling at byte-granularity
- Disables optimizations, complicates disk updates
CCFS solution:
- Record running transactions at byte granularity (stream-A transaction: Entry-A; stream-B transaction: Entry-B)
- Commit at block granularity: entire block-1 committed to the on-disk journal, carrying the old version of entry-A
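
The hybrid scheme can be sketched as follows: running transactions remember byte ranges, and a commit builds a whole-block image from the last committed contents plus only the committing stream's ranges. The class `ToyBlock`, the 16-byte block size, and the entry layout are hypothetical choices for illustration, not details from the slides.

```python
BLOCK_SIZE = 16  # tiny block, for illustration only

class ToyBlock:
    def __init__(self):
        self.on_disk = bytearray(b"." * BLOCK_SIZE)  # last committed image
        self.deltas = {}                             # stream -> [(offset, bytes)]

    def update(self, stream, offset, data):
        # Running transactions record byte ranges, not whole blocks.
        self.deltas.setdefault(stream, []).append((offset, bytes(data)))

    def commit(self, stream):
        # Commit at block granularity: start from the committed image and
        # apply only the byte ranges that belong to the committing stream.
        image = bytearray(self.on_disk)
        for offset, data in self.deltas.pop(stream, []):
            image[offset:offset + len(data)] = data
        self.on_disk = image
        return bytes(image)  # the full block that goes to the journal

block = ToyBlock()
block.update("A", 0, b"entry-A.")  # create(/X/A) in stream A
block.update("B", 8, b"entry-B.")  # create(/X/B) in stream B

after_b = block.commit("B")
after_a = block.commit("A")
print(after_b)  # b'........entry-B.'
print(after_a)  # b'entry-A.entry-B.'
```

When stream B commits, the journaled block still contains the old bytes where entry-A will go, so stream A's uncommitted update does not leak into B's transaction.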

More Challenges ...
1. Both streams update directory’s modification date
   - Solution: Delta journaling
2. Directory entries contain pointers to adjoining entry
   - Solution: Pointer-less data structures
3. Directory entry freed by stream A can be reused by stream B
   - Solution: Order-less space reuse
4. Ordering technique: Data journaling cost
   - Solution: Selective data journaling [Chidambaram et al., SOSP 2013]
5. Ordering technique: Delayed allocation requires re-ordering
   - Solution: Order-preserving delayed allocation
Details in the paper!

Evaluation
1. Does CCFS solve application vulnerabilities?
- Tested five applications: LevelDB, SQLite, Git, Mercurial, ZooKeeper
- Method similar to previous study (ALICE tool) [Pillai et al., OSDI 2014]
- New versions of applications
- Default configuration, instead of safe configuration

Evaluation
1. Does CCFS solve application vulnerabilities?
ext4: 9 vulnerabilities
- Consistency lost in LevelDB
- Repository corrupted in Git, Mercurial
- ZooKeeper becomes unavailable
CCFS: 2 vulnerabilities in Mercurial
- Dirstate corruption

Evaluation
2. Performance within an application
- Do false dependencies reduce performance inside application?
- Or, do we need more than one stream per application?

Evaluation
2. Performance within an application
[Figure: throughput normalized to ext4 (higher is better) for ext4 and ccfs, on standard benchmarks and real applications]
- Standard workloads: Similar performance for ext4, ccfs. But ext4 re-orders!
- Git under ext4 is slow because of safer configuration needed for correctness
- SQLite and LevelDB: Similar performance for ext4, ccfs
- But, performance can be improved with IGNORE_FSYNC and stream_sync()!

Evaluation: Summary
Crash consistency: Better than ext4
- 9 vulnerabilities in ext4, 2 minor in CCFS
Performance: Like ext4 with little programmer overhead
- Much better with additional programmer effort
More results in paper!

Conclusion
FS crash behavior is currently not standardized
Ideal FS behavior can improve application consistency
Ideal FS behavior is considered bad for performance
Stream abstraction and CCFS solve this dilemma
Thank you! Questions?

Examples
1. LevelDB:
   a. creat(tmp); write(tmp); fsync(tmp); rename(tmp, CURRENT); -- unlink(MANIFEST-old);
      i. Unable to open the database
   b. write(file1, kv1); write(file1, kv2); -- creat(file2, kv3);
      i. kv1 and kv2 might disappear, while kv3 still exists
2. Git:
   a. append(index.lock) -- rename(index.lock, index)
      i. “Corruption” returned by various Git commands
   b. write(tmp); link(tmp, object) -- rename(master.lock, master)
      i. “Corruption” returned by various Git commands
3. HDFS:
   a. creat(ckpt); append(ckpt); fsync(ckpt); creat(md5.tmp); append(md5.tmp); fsync(md5.tmp); rename(md5.tmp, md5); -- rename(ckpt, fsimage);
      i. Unable to boot the server and use the data
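
Several of the protocols above share a core step: write a temporary file, fsync() it, then rename() it over the live file. A minimal sketch of that step, assuming a POSIX system, might look like this. The helper name `atomic_replace`, the `.tmp` suffix, and the file contents are hypothetical; the final directory fsync is a common precaution for making the rename durable, not something the examples above prescribe.

```python
import os
import tempfile

def atomic_replace(path, data):
    """Replace `path` with `data` via write-to-temp, fsync, rename."""
    tmp = path + ".tmp"  # hypothetical temporary-file name
    fd = os.open(tmp, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        view = memoryview(data)
        while len(view) > 0:       # os.write may write fewer bytes than asked
            written = os.write(fd, view)
            view = view[written:]
        os.fsync(fd)               # data must be on disk before the rename
    finally:
        os.close(fd)
    os.replace(tmp, path)          # rename(tmp, path)
    # Persist the directory entry as well (POSIX-only: opens a directory).
    dir_fd = os.open(os.path.dirname(os.path.abspath(path)), os.O_RDONLY)
    try:
        os.fsync(dir_fd)
    finally:
        os.close(dir_fd)

with tempfile.TemporaryDirectory() as d:
    current = os.path.join(d, "CURRENT")
    atomic_replace(current, b"MANIFEST-000001\n")
    atomic_replace(current, b"MANIFEST-000002\n")
    with open(current, "rb") as f:
        result = f.read()
    leftovers = sorted(os.listdir(d))

print(result, leftovers)
```

The vulnerabilities listed above arise from what follows this step: operations after the `--` marker can reach disk before the ones preceding it unless the file system preserves order or the application adds further fsync() calls.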

File System Study: Results
[Table: atomicity of one-sector overwrite, one-sector append, many-sector write, and directory operation for each file-system configuration. File systems: ext2, ext3, ext4, btrfs, xfs. Configurations named: async, sync, writeback, ordered, data-journal, no-delalloc, default, wsync.]
- One sector overwrite: Atomic because of device characteristics
- Appends: Garbage in some file systems
- File systems do not usually provide atomicity for big writes
- Directory operations are usually atomic

Collecting System Call Trace
Application workload: git add file1
Record strace, memory accesses (for mmap writes), initial state of datastore
System call trace: ...(tmp, data, 4K); fsync(tmp); link(tmp, permanent); append(index.lock); rename(index.lock, index)

Calculating Intermediate States
System calls in the trace: creat(index.lock); creat(tmp); append(tmp, 4K); fsync(tmp); link(tmp, permanent); ...
a. Convert system calls into atomic modifications
   creat(inode 1, dentry index.lock)
   creat(inode 2, dentry tmp)
   truncate(inode 2, 1)
   truncate(inode 2, 2)
   ...
   truncate(inode 2, 4K)
   write(inode 2, garbage)
   write(inode 2, actual data)
   ...
   link(inode 2, dentry permanent)
   ...
b. Find ordering dependencies
c. Choose a few sets of modifications obeying dependencies
   Set 1: creat(inode 1, dentry index.lock); all truncates and writes to inode 2
   Set 2: creat(inode 1, dentry index.lock); all truncates and writes to inode 2; link(inode 2, dentry permanent)
   Set 3: creat(inode 1, dentry index.lock); creat(inode 2, dentry tmp); truncate(inode 2, 1)
   ... more sets
d. Reconstruct states from sets of modifications (Calculating Crash States from a Trace)
   Set 1: .git/index.lock (0)
   Set 2: .git/index.lock (0), .git/permanent (4K)
   Set 3: .git/index.lock (0), .git/tmp (1)
   ... more sets
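
Steps b to d can be sketched in a few lines. This is a simplified illustration rather than the tool's algorithm: the appends to inode 2 are collapsed into one modification, the only dependency modelled is the one implied by fsync(tmp) before link(), and the names `OPS`, `DEPS`, `valid_sets`, and `reconstruct` are hypothetical.

```python
from itertools import combinations

OPS = [
    ("creat", "index.lock", 1),  # dentry index.lock -> inode 1
    ("creat", "tmp", 2),         # dentry tmp -> inode 2
    ("truncate", 2, 4096),       # all size and data updates to inode 2
    ("link", "permanent", 2),    # dentry permanent -> inode 2
]
# Assumed dependency: fsync(tmp) forces inode 2's data out before the link.
DEPS = {3: {2}}

def valid_sets(n_ops, deps):
    """Yield every subset of op indices that contains its prerequisites."""
    for k in range(n_ops + 1):
        for chosen in combinations(range(n_ops), k):
            members = set(chosen)
            if all(deps.get(i, set()) <= members for i in members):
                yield chosen

def reconstruct(chosen):
    """Apply the chosen modifications and return {file name: size}."""
    dentries, sizes = {}, {}
    for i in chosen:
        kind, a, b = OPS[i]
        if kind in ("creat", "link"):
            dentries[a] = b         # name a now points at inode b
            sizes.setdefault(b, 0)
        else:
            sizes[a] = b            # inode a now has size b
    return {name: sizes.get(inode, 0) for name, inode in dentries.items()}

all_sets = list(valid_sets(len(OPS), DEPS))
set1 = reconstruct((0, 2))     # index.lock plus the writes to inode 2
set2 = reconstruct((0, 2, 3))  # ... plus the link to permanent
print(len(all_sets), set1, set2)
```

Under these assumptions the two reconstructed states correspond to Set 1 (only `index.lock`, size 0) and Set 2 (`index.lock` plus a 4K `permanent`) on the slide; a file that is written but has no directory entry does not appear in the state.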

Checking ALC on Intermediate States
Multiple possible intermediate states
- Files shown (with sizes): .git/tmp (4K), .git/index (1K), .git/tmp (4K: garbage), .git/index.lock (1K), .git/permanent (4K), .git/tmp (4K), .git/index (0K)
Checker run on each state: git status; git fsck;
Outcomes shown: ERROR, CORRECT OUTPUT, CORRECT OUTPUT

Why is ALC problematic?
Applications implement complex update protocols
- Aiming for both correctness and performance
- Each protocol is different
Update protocols hard to implement and test
Applications many and varied
- Little effort to test each
Unfortunately, file systems make ALC more difficult

Persistence Models: Too Complex
Persistence models used by us to find vulnerabilities
But, persistence models can be complex
- Example: write() ordered before unlink() iff they act on the same directory and write() is more than 4KB
- Useful for verifying ALC atop a file system
Persistence models not suitable to discuss ALC
- Is fsync() required after writes to log file in ext3?
- Or, do write() calls persist in-order?

Persistence Properties
Does FS obey a particular interesting behavior?
- Example: Do write() calls persist in-order?
- Are write() calls atomic?
Applications typically depend on some properties
- Forgot an fsync(): depends on ordering properties
- Forgot checksum verification: depends on atomic write()

Persistence Properties: Example #1
Content-Atomicity of Appends: Does an append result in garbage?
System call sequence: lseek(file1, End of file); write(file1, “hello”)
Impossible intermediate state: /file1 “he#@!”
Allowed intermediate state: /file1 “he”

Persistence Properties: Example #2
Ordered Writes: Are the effects of write() sent to disk in-order?
System call sequence: write(file1, “hello”); write(file2, “world”)
Impossible intermediate state: /file1 “”, /file2 “world”
Allowed intermediate state: /file1 “hello”, /file2 “”

Example: Git
[Figure: Git update protocol with numbered steps 0 to 5, shown in panels annotated for Atomicity, Ordering, and Durability]
Step labels shown: store object, git add, git commit
System calls legible in the transcription, in order of appearance:
- creat(index.lock)
- stdout(finished add)
- mkdir(o/x); creat(o/x/tmp_y); append(o/x/tmp_y); fsync(o/x/tmp_y); link(o/x/tmp_y, o/x/y); unlink(o/x/tmp_y)
- append(logs/branch); append(logs/HEAD); rename(branch.lock, x/branch)
- stdout(finished commit)

Vulnerability Study: Patterns
- Across syscall atomicity: Few, minor consequences
- Garbage during appends causes 4 vulnerabilities
- File writes seemingly need only sector-level atomicity
- A separate fsync() on parent directory:
