Transcription

Buyer’s GuideChoosing data quality tools andsoftwareAll the reporting tools in the world mean nothing if you‟re using dirtydata. In this buyer's guide, learn how to weed through the variousdata quality tool vendors and get tips on selecting the right dataquality management software for your business.Readers will learn:The pros and cons of open source data quality softwareHow to get executive approval and financing for data qualityimprovement projectsTips on keeping data quality projects on track and moreSponsored By:

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareBuyer’s GuideChoosing data quality tools andsoftwareTable of ContentsChoosing data quality tool and softwareGartner: Open source data quality software focuses on data profilingData quality improvement projects require dollars and business senseGartner Magic Quadrant ranks data quality tools vendorsAbout IBMSponsored By:Page 2 of 26

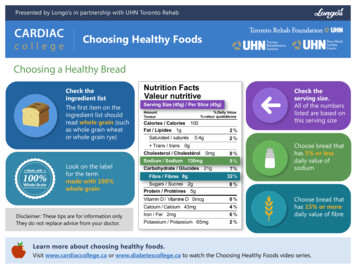

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareChoosing data quality tools and softwareBy David Loshin, ContributorAll the reporting tools in the world mean nothing if you’re using dirty data. In this buyer'sguide, learn how to weed through the various data quality tool vendors and get tips onselecting the right data quality management software for your business. Also, read abouttopics such as the pros and cons of open source data quality software. This opening sectionprovides a detailed overview of the issues to consider in evaluating and choosing dataquality tools.As more business analysts recognize the relationship between high quality data and thesuccess of the business, there is a growing interest in integrating data quality managementwithin the organization. And while the lion‟s share of the effort involves putting good datamanagement practices in place and establishing data governance, there will always be arequirement for technology to support data quality maturity.That being said, until relatively recently, most people equated the phrases “data quality”and “data cleansing” with the expectation that data quality tools were intended only to helpidentify data errors and then correct those errors. In reality, there are many techniquesapplied within the context of a data quality management program, with different types oftools used to support those techniques.Data quality management incorporates a “virtuous cycle,” shown in FIGURE 1, essentiallyconsisting of two phases: analysis and assessment, followed by monitoring andimprovement.Sponsored By:Page 3 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareData quality tools and technology are necessary to support both the analysis andassessment phases. Data profiling tools are used for data analysis and identification ofpotential anomalies, while parsing and standardization tools are employed for recognizingerrors, normalizing representations and values, and some degree of data correction. Thesetools can be used to define data quality rules that assert validity of data and are used to flagnon-conformant values and to aid the correction process.A commonly used technology for customer and business name correction is identityresolution, which helps in linking and resolving variant representations of the same entities.After normalization and identity resolution have been performed, data enhancements suchas address standardization and enhancement and geocoding are applied. Lastly, the dataquality rules can be integrated within a data quality auditing tool that measures compliancewith defined data quality expectations. The results of these measurements can be fed into adata quality metrics scorecard, and if the metrics are defined in relation to the businessimpacts incurred by violating the expectations, that scorecard will provide an accurategauge of how improving data quality goes straight to the bottom line.Sponsored By:Page 4 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareData profilingThe initial attempts to evaluate data quality are processes of analysis and discovery, andthis analysis is predicated on an objective review of the actual data values. The valuespopulating the data sets under review are assessed through quantitative measures andanalyst review. While a data analyst may not necessarily be able to pinpoint all instances offlawed data, the ability to document situations where there may be anomalies provides ameans to communicate these instances with subject matter experts whose businessknowledge can confirm the existence of data problems.Data profiling is a set of algorithms for statistical analysis and assessment of the quality ofdata values within a data set, as well as exploring relationships that exist between valuecollections both within and across data sets. For each column in a table, a data profiling toolwill provide a frequency distribution of the different values, providing insight into the typeand use of each column. Cross-column analysis can expose embedded value dependencies,while inter-table analysis explores overlapping value sets that may represent foreign keyrelationships between entities, and it is in this way that profiling can be used for anomalyanalysis and assessment.The data profiling process often sheds light on business rules inherent to each businessprocess‟s use of the data. These rules can be documented and used during the auditing andmonitoring activity to measure validity of data.Data parsing and standardizationIn any data set, slight variations in representation of data values easily lead to situations ofconfusion or ambiguity for both individuals and other applications. For example, considerthese different character strings:301-754-6350(301) 754-6350301.754.6350866-BIZRULESponsored By:Page 5 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareAll of these formats use digits, some have alphabetic characters, and all use differentspecial characters for separation, but to the human eye they are all recognized asreasonable telephone number formats. To determine whether these numbers are accurateor to investigate whether duplicate telephone numbers exist, the values must be parsed intotheir component segments (area code, exchange and line) and then transformed into astandard format.When analysts are able to describe the different component and format patterns used torepresent a data object (person's name, product description, etc.), data quality tools canparse data values that conform to any of those patterns and even transform them into asingle, standardized form that feeds the assessment, matching and cleansing processes.Pattern-based parsing can automate the recognition and subsequent standardization ofmeaningful value components.In general, parsing uses defined patterns managed within a rules engine used to distinguishbetween valid and invalid data values. When patterns are recognized, other rules andactions can be triggered, either to standardize the representation (presuming a validrepresentation) or to correct the values (should known errors be identified).Identify Resolution: Similarity, linkage and matchingA common requirement for data quality management involves two sides of the same coin:when multiple data instances actually refer to the same real-world entity, as opposed to theperception that a record does not exist for a real-world entity when in fact it really does.Both of these problems indicate the need for techniques to help identify approximatematches to determine similarity between different records. In the first situation, similar, yetslightly variant representations in data values may have been inadvertently introduced intothe system, while in the second situation, a slight variation in representation prevents theidentification of an exact match of the existing record in the data set.Both of these issues are addressed through a process called identity resolution, in which thedegree of similarity between any two records is scored, most often based on weightedapproximate matching between a set of attribute values between the two records. If thescore is above a specific threshold, the two records are deemed to be a match and areSponsored By:Page 6 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwarepresented to the end client as most likely to represent the same entity. Identity resolution isused to recognize when only slight variations suggest that different records are connectedand where values may be cleansed.Attempting to compare each record against all the others to provide a similarity score is notonly ambitious but also time-consuming and computationally intensive. Most data qualitytool suites use advanced algorithms for blocking records that are most likely to containmatches into smaller sets, whereupon different approaches are taken to measure similarity.In addition, there are different approaches to matching—a deterministic approach relies ona broad knowledge base for matching, while probabilistic approaches employ statisticaltechniques to contribute to the similarity scoring process. Identifying similar records withinthe same data set probably means that the records are most likely duplicated and may besubjected to cleansing and/or elimination. Identifying similar records in different sets mayindicate a link across the data sets, which helps facilitate cleansing, knowledge discovery,reverse engineering and master data aggregation.Data cleansing and enhancementData cleansing incorporates techniques such as data imputation, address correction,elimination of extraneous data, and duplicate elimination, as well as pattern-basedtransformations. Data cleansing complements (and relies on) parsing and standardization aswell as identity resolution and record linkage. Data enhancement is a data improvementprocess that relies on record linkage, along with value-added improvement from third-partydata sets (such as address correction, geo-demographic/psychographic imports, listappends). This is often performed by partnering with data providers, using their aggregateddata as a “source of truth” against which records are matched and then enhanced.Data auditing and monitoringThe same types of data quality rules exposed through conversations with subject matterexperts and profiling can be used to describe end-user data quality expectations. Monitoringdefined data quality rules and auditing the results provides a proactive assessment ofcompliance with expectations, and the results of these audits can feed data quality metricspopulating management dashboards and scorecards.Sponsored By:Page 7 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareData profiling tools, as well as standalone auditing utilities, often provide capabilities forproactively validating data against a set of defined (or discovered) business rules. In thisway, the analysts can distinguish those records that conform to defined data qualityexpectations from those that don‟t, which in turn can contribute to baseline measurementsand ongoing auditing for data quality reporting.What to look for in data quality tools and vendorsThere are two interesting notions to keep in mind when considering data quality tools. First,every organization‟s needs are different, depending on the type of company, industry andbusiness processes and their corresponding dependence on the use of high-quality data.Second, while the needs may be different, the ways that those can be addressed are oftenvery similar, although different vendors may address those issues with greater degrees ofaccuracy and precision (and, naturally, cost).Weighing both of these notions together, theconclusion is that what will distinguish the suitability of one product over the others is morethan just functionality, especially as data quality technology becomes more of a commoditycapability.Along with functionality, consider cost, installed base, vendor stability, training and supportcapabilities, as well as the pool of talent that can be tapped to help integrate the toolswithin a governed data quality program. In addition, because there have been manycorporate acquisitions within the data quality market, consider whether or not a specific tooloffering necessitates purchasing a full-blown suite of products. Alternatively, one mustconsider the level of comfort of purchasing one component of a vendor‟s tools suite with theexpectation that it will integrate well with other tools already in use within the environment.Business needs assessment and data quality tools requirementsThe desire to acquire data quality tools should be tempered by the assessment process—toooften, the technology is purchased long before the specific business needs have beendetermined. Therefore, it is worthwhile to perform a high-level data quality assessment withthese specific objectives:Identify business processes that are affected by data quality issues.Sponsored By:Page 8 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareIdentify the data elements that are critical to the successful execution of thosebusiness processes.Evaluate the types of errors and data flaws that can occur.Quantify business impacts associated with each of those errors.Prioritize issues based on potential business impacts.Consider the data quality improvements that can be applied to alleviate the businessimpacts.While this process is presented simply above, there are many subtleties that may requireadditional expertise. To expedite this assessment, you may consider partnering with expertconsultants that can perform the rapid assessment while simultaneously training your staffto replicate the process on other data sets. General requirements for data quality toolsAs a result of this process, companies should arrive at a prioritized list of improvements,which should frame the discussion of requirements analysis, both from the data qualitystandpoint and from the systemic and environmental aspects. For example, determiningthat duplicated customer records lead to business risks would suggest that duplicateelimination is advisable. This requires tools for determining when there are duplicates(identity resolution) and for cleansing (parsing and standardization, enhancement).There are also degrees of precision, however. If your company is a mail-order vendorsending out duplicate catalogs, the risk is increased costs and lowered response, but 100%de-duplication may not be a requirement. But attempting to identify terrorists at the airportsecurity gate may pose a significant risk in terms of passenger safety, necessitating muchgreater precision in identity resolution. Increased precision is likely to correspond toincreased costs, and this is another consideration.In terms of environmental and systemic aspects, one must consider how well differentproducts can integrate within the organization‟s system architecture. Hard requirementssuch as platform compliance are relatively easy to specify. Architectural expectations aretoo, such as the different deployment options such as whether the tools support real-timeoperations, whether they only execute in batch, or can they be integrated “in-line” are alsorelevant questions. In addition, as more organizations migrate toward services-orientedarchitectures (SOAs), determining whether the tools support services also becomes aSponsored By:Page 9 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwarerequirement. From the business side, one must consider the license, support, training, andongoing maintenance costs, as well as the internal staffing requirements to manage and usethe products.Because many vendors provide tools that may (or may not) address the organization‟sissues, it is worthwhile to carefully delineate your business needs and technologyrequirements within a request for proposal (RFP). Providing an RFP provides two clearbenefits. First, it clarifies your needs to the vendors so they can more effectively determineif their product will meet them. Second, it provides a framework for comparing vendor tools,scoring their relative suitability and narrowing the field. It is a good idea to include specificmetrics associated with the quality of the data that can be used to compare and measureeffectiveness of the products.Narrowing data quality vendorsReviewing the RFP responses will help filter out those vendors that make the grade fromthose whose products are not entirely appropriate to address the business needs. But tonarrow the remaining vendors to a short list, set up meetings for the vendors to presenttheir technology along with a proposal for how their products will be used to address thebusiness needs. Again, it may be worthwhile to engage individuals with experience in dataquality tools and techniques to clarify the distinctions among the vendor products, translateany “tech-talk” into terms that are understood, and to ask the tough questions to ensurethat the vendors are properly representing what their products can and cannot do.By this time, your team should be able to whittle down the field to at most threecompetitors. The final test is to try out the tools yourself—arrange for the installation of anevaluation version of the product and run it over your own data sets. Having specified abenchmark data set for comparison, one can compare not just how well the productsperform but also the ease of use and adoption by internal staff.Sponsored By:Page 10 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareSpecific requirementsThe full details of what can be expected from the data quality tools described is beyond thescope of this article, but this table provides some high-level, “no-miss” capabilities for eachof the tools described.TechnologyCore capabilitiesData profilingColumn valuefrequency analysisand related statistics(number of distinctvalues, null counts,maximum, minimum,mean, standarddeviation)Table structureanalysisCross-tableredundancy analysisData mappinganalysisMetadata captureDDL generationBusiness ruledocumentation andvalidationParsing and standardizationFlexible definition ofpatterns and rules forparsing]Flexible definition ofrules fortransformationKnowledge base ofknown patternsAbility to supportmultiple dataconcepts (individual,business, red By:Page 11 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareIdentity resolutionEntity identificationRecord matchingRecord linkageRecord merging andconsolidationFlexible definition ofbusiness rulesKnowledge base ofrules and patternsIntegration withparsing andstandardization toolsAdvanced algorithmsfor deterministic orprobabilistic matchingCleansing and enhancementFlexible definition ofcleansing rulesKnowledge base ofcommon patterns (forcleansing)Knowledge base ofenhancements (e.g.,address cleansing,geocoding)Auditing and monitoringData validationData controlsServices-orientedRule ven though many of the more established data quality tool vendors have been acquired byeven bigger fish, there are still companies emerging with better approaches to fill the void.Whether better algorithms packaged in a different way, improvements in performance,better suitability to SOA, or even an open source offering, there is a wide range of vendors,products and tools to fit almost any organization‟s needs. Even armed with the knowledgeof what you should look for in data quality tools, there is one last caveat: If yourSponsored By:Page 12 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareorganization has an opportunity for data quality improvement, make sure that you havedone your homework in business needs assessment and development of a reasonable RFPbefore evaluating and purchasing tools.About the author: David Loshin, president of Knowledge Integrity, Inc, is a recognizedthought leader and expert consultant in the areas of data governance, data qualitymethods, tools, and techniques, master data management, and business intelligence. Davidis a prolific author regarding BI best practices, either via his B-Eye Network expert channeland numerous books on BI and data quality. His book, Master Data Management, has beenendorsed by data management industry leaders, and his valuable MDM insights can bereviewed at www.mdmbook.com. David can be reached at loshin@knowledge-integrity.com, or 301-754-6350.Sponsored By:Page 13 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareGartner: Open source data quality software focuseson data profilingBy Jeff Kelly, News EditorThe open source data quality market is still in its infancy, but a handful of vendors offeradequate data profiling software, according to Gartner. In this section of the Data QualitySoftware Buyer's Guide, readers will learn more about open source data quality and dataprofiling tools to help them decide if it's the right technology for their businesses.Open source data quality software could be a good fit for companies looking for aninexpensive way to conduct data profiling -- but that's about it, according to Gartner.While open source vendors like JasperSoft and Talend have enjoyed significant success inbusiness intelligence (BI), data integration and other data management domains, they arejust starting to explore the data quality market, according to Ted Friedman, an analyst withthe Stamford, Conn.-based research firm and author of a recent report on the topic."The significant increase in interest in [open source] data integration seems to be spillingover into the related field of data quality," Friedman said.Not surprisingly for a new entrant to the market, however, open source data qualitysoftware and applications tend to be less mature than their open source data managementcousins, he said.They rarely incorporate more than one functional requirement, he said, and most lack moresophisticated data quality capabilities like data matching and monitoring.But that doesn't mean open source data quality software can't benefit some organizations. Ahandful of open source data quality products on the market are adequate for basic dataprofiling, according to Friedman.Data profiling involves collecting and analyzing statistics on the quality of a data set in orderto identify problem areas. It is often the first step in a data quality project.Sponsored By:Page 14 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareCompanies undertaking a broad data quality initiative can distribute open source dataprofiling software to multiple users in various departments, because data profiling is oftenrecommended during a project's early phases, Friedman wrote in his report, co-authoredwith fellow analyst Andreas Bitterer.Data profiling is also useful "for educational or initial assessment purposes and to assist indeveloping requirements for data transformation and data migration projects," according tothe report.Open source data quality's low price tag is offset by a number of factors, however. Friedmansaid the software lacks business user-friendly interfaces, meaning that it requires significanttechnical expertise to use. There is also generally little in the way of support from thevendors.The "most advanced" of the open source vendors offering data quality tools is Talend, basedin Los Altos, Calif., Friedman said. While better known for its open source data integrationsoftware, Talend recently released the Talend Open Profiler, available for free download, aswell as a commercial data quality product that includes some limited data cleansing andmatching capabilities.Another recent entrant to the market is the Denmark-based DataCleaner, whose software"consists of a quick download and an easy installation, including some sample data thatallows you to try out the profiling functionality," Friedman said.Other vendors offering open source data quality software include Toronto-based SQL Power,and Infosolve, based in South Brunswick, N.J.Still, open source data quality software vendors have a long way to go if they want to grabtheir portion of the 500 million data quality market. And that could take significant time."It will be well beyond 2012 before open source data quality platforms have broadly caughtup in terms of their capabilities with the commercial data quality tool vendors and areconsidered a viable alternative for enterprise-wide usage," Friedman wrote.Sponsored By:Page 15 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareAs open source data quality offerings do mature, Friedman said he would not be surprisedto see an acquisition or two by larger data integration vendors, as the two technologies -data quality and data integration -- continue to converge.Sponsored By:Page 16 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareData quality improvement projects require dollarsand business senseBy Mark Brunelli, News EditorGetting executive approval and financing for data quality improvement projects can be along and difficult process. In this section of the Data Quality Software Buyer's Guide,readers will get tips on selling investments in data quality technology to business executivesand learn how one IT organization was able to win the approval needed to embark on a dataquality initiative.The IT workers at Annapolis, Md.-based iJET Intelligent Risk Systems Inc. understand howtough it can be to get executive backing for a major data quality improvement initiative.A provider of travel risk management services, iJET‟s core business involves alertingbusiness travelers to any threats they might face when visiting countries around the globe.If the water is contaminated in Moscow, if there is a terrorist threat in Mumbai or if atornado is about to touch down in Kansas City, iJET‟s job is to let its clients‟ businesstravelers know about it.The task requires iJET, which was founded in 1999, to collect loads of global threat data andcompare it to the travel plans of its clients‟ employees, said Richard Murnane, iJET‟senterprise data operations manager. Any pertinent information about potential threats isthen automatically related to travelers' itineraries via iJET‟s Worldcue Travel RiskManagement tool.“For a small shop of about 100 people, we have a lot of data,” Murnane explained. “Weingest all of the travel data [as well as] other types of asset data like some supply chainroutes, warehouse [data] and all kinds of information that is important to our clients.”With so much information coming in from so many places, it was clear to Murnane uponjoining the company nearly five years ago that a host of minor data quality and duplicationissues could eventually mushroom into a bigger problem.Sponsored By:Page 17 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareBut it would take three summers of product research, testing and convincing -- not tomention a few complaints from customers about duplicate files -- before companyexecutives agreed to finance a sweeping new data quality improvement project.In the end, Murnane won approval for the initiative in part by explaining to seniormanagement how much time a particular employee was taking to clean and merge duplicatefiles.“I was doing metrics for my senior management and [explaining that] I have a senior dataanalyst who is making whatever dollars per year, and she is spending months cleaning up athousand records,” Murnane recalled. “But according to the dog and pony shows from [dataquality tools vendors], she could be spending a day instead.”Looking back at that time, Murnane said he understands why senior management wasreluctant to sign off on the data quality improvement project for so long.“We were really focusing on technology issues,” he said. “We were saying: „Well, this reportshows a person twice.‟ And a senior manager said: „Well, why do I need to spend a lot ofmoney to fix that?‟”Making the business case for data quality improvementMurnane‟s experiences in trying to get executive buy-in for data quality improvements arenot uncommon, according to Rob Karel, a data governance and data quality analyst withCambridge, Mass.-based Forrester Research Inc. But there are some tips to keep in mindthat can ultimately make the road to executive buy-in a less bumpy trip.The first thing to do is stop evangelizing data to company leaders because they‟re generallynot interested in data, Karel said. They are, however, interested in making decisions thatwill make the business a success.The best way to justify a data quality initiative, he continued, is to figure out how dataquality is positively or negatively impacting the ability of the business to increase revenue,improve operational efficiencies, reduce risks and differentiate itself from the competition.Sponsored By:Page 18 of 26

SearchDataManagement.com Buyer‟s GuideChoosing data quality tools and softwareKarel said data management pros can begin this process by asking some key questions,including: What business processes are most important to the organization? Whatinformation is used to support those processes? What people, systems and processescreate, capture and update that information? What is the level of confidence in using thatinformation?It‟s also a good idea to find the business leader who is most affected by data quality and getthem on board first.“There are plenty of business stakeholders that are screaming about processes [that arebroken] because of poor quality data,” Karel said. “Work with them to build a business caseinstead of trying to convince others that they have a problem when they might not see it.”Trying data quality improvement to busine

Choosing data quality tools and software Table of Contents Choosing data quality tool and software Gartner: Open source data quality software focuses on data profiling Data quality improvement projects require dollars and business sense Gartner Magic Quadrant ranks data quality tools vendors About IBM