Transcription

This Unit: Data/Thread Level ParallelismApplication Data-level parallelismOSCIS 501Introduction to Computer ArchitectureCompilerCPUFirmwareI/OMemoryDigital CircuitsUnit 10: Data-Level ParallelismCIS 501 (Martin/Roth): DLP Vector processors Message-passing multiprocessors Thread-level parallelism Shared-memory multiprocessors Flynn TaxonomyGates & Transistors1CIS 501 (Martin/Roth): DLPReadingsLatency, Bandwidth, and Parallelism H P Latency Appendix E (skim)2 Time to perform a single task– Hard to make smaller Bandwidth Number of tasks that can be performed in a given amount of time Easier to make larger: overlap tasks, execute tasks in parallel One form of parallelism: insn-level parallelism (ILP) CIS 501 (Martin/Roth): DLP3Parallel execution of insns from a single sequential programPipelining: overlap processing stages of different insnsSuperscalar: multiple insns in one stage at a timeHave seenCIS 501 (Martin/Roth): DLP4

Exposing and Exploiting ILPFundamental Problem with ILP ILP is out there Clock rate and IPC are at odds with each other Integer programs (e.g., gcc, gzip): 10–20 Floating-point programs (e.g., face-rec, weather-sim): 50–250 It does make sense to build 4-way and 8-way superscalar but compiler/processor work hard to exploit it Independent insns separated by branches, stores, function calls Overcome with dynamic scheduling and speculation– Modern processors extract ILP of 1–3 Pipelining Fast clock– Increased hazards lower IPC Wide issue Higher IPC– N2 bypassing slows down clock Can we get both fast clock and wide issue? Yes, but with a parallelism model less general than ILP Data-level parallelism (DLP) Single operation repeated on multiple data elements Less general than ILP: parallel insns are same operationCIS 501 (Martin/Roth): DLP5Data-Level Parallelism (DLP)6Exploiting DLP With Vectorsfor (I 0; I 100; I )Z[I] A*X[I] Y[I];0: ldf X(r1),f1mulf f0,f1,f2ldf Y(r1),f3addf f2,f3,f4stf f4,Z(r1)addi r1,4,r1blti r1,400,0CIS 501 (Martin/Roth): DLPregfileI // I is in r1// A is in f0D BPV-regfile One example of DLP: inner loop-level parallelism One way to exploit DLP: vectors Iterations can be performed in parallel Extend processor with vector “data type” Vector: array of MVL 32-bit FP numbers Maximum vector length (MVL): typically 8–64 Vector register file: 8–16 vector registers (v0–v15)CIS 501 (Martin/Roth): DLP7CIS 501 (Martin/Roth): DLP8

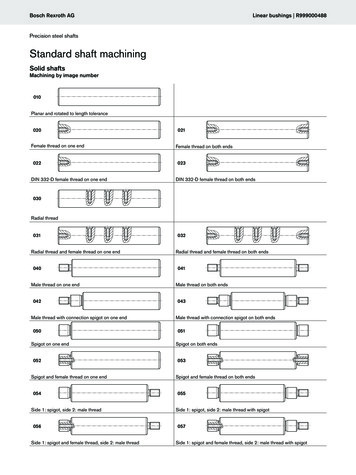

Vector ISA ExtensionsVectorizing SAXPY Vector operationsldf X(r1),f1mulf f0,f1,f2ldf Y(r1),f3addf f2,f3,f4stf f4,Z(r1)addi r1,4,r1blti r1,400,0 Versions of scalar operations: op.v Each performs an implicit loop over MVL elementsfor (I 0;I MVL;I ) op[I]; Examples ldf.v X(r1),v1: load vectorfor (I 0;I MVL;I ) ldf X I(r1),v1[I]; stf.v v1,X(r1): store vectorfor (I 0;I MVL;I ) stf v1[I],X I(r1); addf.vv v1,v2,v3: add two vectorsfor (I 0;I MVL;I ) addf v1[I],v2[I],v3[I]; addf.vs v1,f2,v3: add vector to scalarfor (I 0;I MVL;I ) addf v1[I],f2,v3[I];CIS 501 (Martin/Roth): DLPldf.v X(r1),v1mulf.vs v1,f0,v2ldf.v Y(r1),v3addf.vv v2,v3,v4stf.v v4,Z(r1)addi r1,16,r1blti r1,400,09Scalar SAXPY Performanceldf X(r1),f1mulf f0,f1,f2ldf Y(r1),f3addf f2,f3,f4stf f4,Z(r1)addi r1,4,r1slti r1,400,r2bne Loopldf X(r1),f1mulf f0,f1,f2ldf Y(r1),f3addf f2,f3,f4stf f4,Z(r1)addi r1,4,r1blt r1,r2,0ldf X(r1),f1CIS 501 (Martin/Roth): DLP 5-cycle mulf, 2-cycle addf, 1 cycle others 100 iters * 11 cycles/iter 1100 cyclesE*Md*p*E* E* WWd* d* E E p* p* D XF DFldf X(r1),f1mulf f0,f1,f2ldf Y(r1),f3addf f2,f3,f4stf f4,Z(r1)addi r1,4,r1blti r1,400,0 Pack loop body into vector insns Horizontal packing changes execution order Aggregate loop control Add increment immediatesCIS 501 (Martin/Roth): DLPldf.v X(r1),v1mulf.vs v1,f0,v2ldf.v Y(r1),v3addf.vv v2,v3,v4stf.v v4,Z(r1)addi r1,16,r1slti r1,400,r2bne r2,Loop6 7 8 9 10 11 12 13 14 15 16 17 18 19E*XDFldf X(r1),f1mulf f0,f1,f2ldf Y(r1),f3addf f2,f3,f4stf f4,Z(r1)addi r1,4,r1blti r1,400,010Vector SAXPY Performance Scalar version1 2 3 4 5F D X M WF D d* E*F p* DFldf X(r1),f1mulf f0,f1,f2ldf Y(r1),f3addf f2,f3,f4stf f4,Z(r1)addi r1,4,r1blti r1,400,0WMXDFldf.v X(r1),v1mulf.vv v1,f0,v2ldf.v Y(r1),v3addf.vv v2,v3,v4stf.v f4,Z(r1)addi r1,4,r1blt r1,r2,0ldf X(r1),f1WM WX M WD X M W11CIS 501 (Martin/Roth): DLP Vector version 4 element vectors 25 iters * 11 insns/iteration * 275 cycles Factor of 4 speedup1 2 3 4 5F D X M WF D d* E*F p* DF6 7 8 9 10 11 12 13 14 15 16 17 18 19E*XDFE*Md*p*E* E* WWd* d* E E p* p* D XF DFWMXDFWM WX M WD X M W12

Not So FastPipelined Vector SAXPY Performance A processor with 32-element vectorsldf.v X(r1),v1mulf.vs v1,f0,v2ldf.v Y(r1),v3addf.vv v2,v3,v4stf.v v4,Z(r1)addi r1,16,r1slti r1,400,r2bne r2,Loop 1 Kb (32 * 32) to cache? 32 FP multipliers? No: vector load/store/arithmetic units are pipelined Processors have L (1 or 2) of each type of functional unit L is called number of vector lanes Micro-code streams vectors through units M data elements at once Pipelined vector insn timing ldf.v X(r1),v1mulf.sv v1,f0,v2ldf.v Y(r1),v3addf.vv v2,v3,v4stf.v f4,Z(r1)addi r1,4,r1blt r1,r2,0ldf.v X(r1),f1Tvector Tscalar (MVL / L) – 1Example: 64-element vectors, 10-cycle multiply, 2 lanesTmulf.vv 10 (64 / 2) – 1 41Not bad for a loop with 64 10-cycle multipliesCIS 501 (Martin/Roth): DLP13 Vector version 4-element vectors, 1 lane4-cycle ldf.v/stf.v8-cycle mulf.sv, 5-cycle addf.vv25 iters * 20 cycles/iter 500 cyclesFactor of 2.2 speedup1 2 3 4 5F D X M MF D d* d*F p* p*6Md*p*7 8 9 10 11 12 13M Wd* E* E* E* E* E* E*p* D X M M M MF D d* d* d* d*F p* p* p* p*14 15 16 17 18 19E* E* WWd* d* E E E E p* p* D X d* d*F D p* p*F p* p*CIS 501 (Martin/Roth): DLP14Not So SlowChained Vector SAXPY Performance For a given vector operationldf.v X(r1),v1mulf.vs v1,f0,v2ldf.v Y(r1),v3addf.vv v2,v3,v4stf.v v4,Z(r1)addi r1,16,r1slti r1,400,r2bne r2,Loop All MVL results complete after Tscalar (MVL / L) – 1 First M results (e.g., v1[0] and v1[1]) ready after Tscalar Start dependent vector operation as soon as those are ready Chaining: pipelined vector forwarding Tvector1 Tscalar1 (MVL / L) – 1 Tvector2 Tscalar2 (MVL / L) – 1 Tvector1 Tvector2 Tscalar1 Tscalar2 (MVL / L) – 1CIS 501 (Martin/Roth): DLPldf.v X(r1),v1mulf.vv v1,f0,v2ldf.v Y(r1),v3addf.vv v2,v3,v4stf.v f4,Z(r1)addi r1,4,r1blt r1,r2,0ldf.v X(r1),f115CIS 501 (Martin/Roth): DLP Vector version 1 lane4-cycle ldf.v/stf.v8-cycle mulf.sv, 5-cycle addf.vv25 iters * 11 cycles/iter 275 cyclesFactor of 4 speedup again1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19F D X M M M M WF D d* E* E* E* E* E* E* E* E* WF p* D X s* M M M M WF D p* d* d* E E E E E WF p* p* p* D X M M M M WF D X M WF D X M WF D X M M M M W16

Vector PerformanceAmdahl’s Law Where does it come from? Amdahl’s law: the law of diminishing returns Fewer loop control insns: addi, blt, etc. speeduptotal 1 / [%vector / speedupvector (1–%vector)] Speedup due to vectorization limited by non-vector portion In general: optimization speedup limited by unoptimized portion Vector insns contain implicit loop control RAW stalls taken only once, on “first iteration” Vector pipelines hide stalls of “subsequent iterations” Example: %opt 90% speedupopt 10 ! speeduptotal 1 / [0.9/10 0.1] 5.3 speedupopt 100 ! speeduptotal 1 / [0.9/100 0.1] 9.1 Speedupopt ! ! speeduptotal 1 / [0.9/! 0.1] 10 How does it change with vector length? Theoretically increases, think of Tvector/MVL Tvector Tscalar (MVL / L) – 1 MVL 1 ! (Tvector/MVL) Tscalar MVL 1000 ! (Tvector/MVL) 1 CRAY-1 rocked because it had fastest vector unit and the fastest scalar unit– But vector regfile becomes larger and slowerCIS 501 (Martin/Roth): DLP17CIS 501 (Martin/Roth): DLP18Variable Length VectorsVector Predicates Vector Length Register (VLR): 0 VLR MVL Vector Mask Register (VMR): 1 bit per vector element Implicit in all vector operationsfor (I 0; I VLR; I ) { vop } Used to handle vectors of different sizes General scheme for cutting up loops is strip mining Similar to loop blocking (cuts arrays into cache-sized chunks)for (I 0; I 32; I )if (X[I] ! 0) Z[I] A/X[I];for (I 0; I N; I )Z[I] A*X[I] Y[I];ldf X(r1),v1sne.v v1,f0divf.sv v1,f1,v2stf.v v2,Z(r1)VLR N % MVL;for (J 0; J N; J VLR, VLR MVL)for (I J; I J VLR; I )Z[I] A*X[I] Y[I];CIS 501 (Martin/Roth): DLP Implicit predicate in all vector operationsfor (I 0; I VLR; I ) if (VMR[I]) { vop } Used to vectorize loops with conditionals in themseq.v, slt.v, slti.v, etc.: sets vector predicatescvmr: clear vector mask register (set to ones)// 0.0 is in f0// A is in f1cvmr19CIS 501 (Martin/Roth): DLP20

ILP vs. DLPHistory of Vectors Recall: fundamental conflict of ILP Vector-register architectures: “RISC” vectors High clock frequency or high IPC, not both High clock frequency ! deep pipeline ! more hazards ! low IPC High IPC ! superscalar ! complex issue/bypass ! slow clock DLP (vectors) sidesteps this conflict Memory-memory vector architectures: “CISC” vectorsKey: operations within a vector insn are parallel ! no data hazardsKey: loop control is implicit ! no control hazardsHigh clock frequency ! deep pipeline no hazards ! high IPCHigh IPC ! natural wide issue no bypass ! fast clockCIS 501 (Martin/Roth): DLP Most modern vector supercomputers (Cray-1, Convex) Like we have talked about so far Optimized for short-medium sized (8–64 element) vectors21 ––Early vector supercomputers (TI ASC, CDC STAR100)Optimized for (arbitrarily) long vectorsAll vectors reside in memoryRequire a lot of memory bandwidthLong startup latencyCIS 501 (Martin/Roth): DLPShort, Single-Cycle Vector InstructionsModern Vectors Pipelining technique used in scientific vector architectures Both floating-point and integer vectors common today Many elements per vector: 8 to 64 Large basic data type: 32- or 64- bit FP Complex operations: addf.vv, mulf.vs But both of the parallel (not pipelined) variety Integer vectors Image processing: a pixel is 4 bytes (RGBA) Also: speech recognition, geometry, audio, tele-communications More recently, multimedia vector architectures Floating-point vectors Few elements per vector: 4 or 8 (64-bit or 128-bit vectors) Short, simple basic data type: 8- or 16-bit integers Entire vector can be “packed” into one 32- or 64-bit integer Simple operations: and, or, add Operations implemented in parallel in single ALU Do 4 16-bit adds in 64-bit ALU by disabling some carries This form of data-level parallelism called subword parallelismCIS 501 (Martin/Roth): DLP2223 Useful for geometry processing: 4x4 translation/rotation matrices Also: scientific/engineering programs, digital signal processing Examples Intel MMX: 64-bit integer (2x32b, 4x16b, 8x8b)Intel SSE: 64-bit FP (2x32b)Intel SSE2: 128-bit FP (2x64b, 4x32b)Motorola AltiVEC: 128-bit integer/FP (2x64b, 4x32b, 8x16b, 16x8b)CIS 501 (Martin/Roth): DLP24

Automatic VectorizationVector Energy Automatic vectorization Vectors are more power efficient than superscalar – Compiler conversion of sequential code to vector codeVery difficultVectorization implicitly reorders operationsInvariably, loads and stores are some of those operationsHow to tell whether load/store reordering is legal? Possible in languages without references: e.g., FORTRAN– Hard (impossible?) in languages with references: e.g., C, Java Compilers don’t generate MMX and SSE code Libraries of routines that exploit MMX and SSE are hand assembledCIS 501 (Martin/Roth): DLP25Not Everything Easy To Vectorize For a given loop, vector code Fetches, decodes, issues fewer insns (obvious) Actually executes fewer operations too (loop control) Also remember: clock frequency is not power efficient Vectors can trade frequency (pipelining) for parallelism (lanes) In general: hardware more power efficient than software Custom circuits more efficient than insns on general circuits Think of vectors as custom hardware for array-based loopsCIS 501 (Martin/Roth): DLP26Exploiting DLP With Parallel Processingfor (I 0; I N; I )for (J 0; J N; J )for (K 0; K N; K )C[I][J] A[I][K] * B[K][J];for (I 0; I 100; I )for (J 0; J 100; J )for (K 0; K 100; K )C[I][J] A[I][K] * B[K][J]; Matrix multiply difficult to vectorize Matrix multiplication can also be parallelized Vectorization works on inner loops The iterations in this inner loop are not independent Outer loop parallelism Need to transform it for (I 0; I N; I )for (J 0; J N; J MVL)for (K 0; K N; K )for (JJ 0; JJ MVL; JJ )C[I][J JJ] A[I][K] * B[K][J JJ];Outer loop iterations are parallelRun entire I or J loop iterations in parallelEach iteration runs on a different processorEach processor runs all K inner loop iterations sequentially Which is better? Do both!CIS 501 (Martin/Roth): DLP27CIS 501 (Martin/Roth): DLP28

Parallelizing Matrix MultiplyXAmy id()my id()Parallelizing Matrix Multiplyfor (J 0; J 100; J ) {if (J my id()) {memcpy(tmp B, my B, 100);for (id 0; id 100; id )if (id ! my id())send(id, &my B, 100);}else recv(J, &tmp B, 100);for (K 0; K 100; K )my C[J] my A[K] * tmp B[K];}my id() BCfor (J 0; J N; J )for (K 0; K N; K )C[my id()][J] A[my id()][K] * B[K][J]; How to parallelize matrix multiply over N processors? Or N machines in a cluster Data communication One possibility: give each processor an 1 iteration Processors send their portions of B (my B) to other processors Library provides send(), recv() functions for this Each processor runs copy of loop above my id() function gives each processor ID from 0 to N Parallel processing library (e.g., MPI) provides this function Have to also divide matrices between N processors Each processor gets row my id() of A, C, column my id()of BCIS 501 (Martin/Roth): DLP29Parallelizing Matrix Multiply30Parallel Matrix Multiply Performanceif (my id() 0) {memcpy(tmp A, &A[I][0], 100);memcpy(tmp B, &B[0][J], 100);for (id 1; id 100; id ){ send(id, &A[id][0], 100); send(id, &B[0][id], 100); }}else { recv(0, &my A, 100); recv(0, &my B, 100); } Gross assumptions 10 cycles per FP instruction, all other instructions free 50 cycles 1 cycle for every 4 B to send/receive a message Sequential version: no communication Computation: 2M FP-insn * 10 cycle/FP insn 20M cycles Parallel version: calculate for processor 0 (takes longest)if (my id() 0)for (id 1; id 100; id )recv(id, &C[id][0], 100);else send(0, &my C, 100); Data initialization/collection Processor 0 must initialize others with portions of A, B matrices Processor 0 must collect C matrix portions from other processorsCIS 501 (Martin/Roth): DLPCIS 501 (Martin/Roth): DLP31 –Computation: 20K FP-insn * 10 cycle/FP-insn 200K cyclesInitialization: 200 send * 150 cycle/send 30K cyclesCommunication: 200 send * 150 cycle/send 30K cyclesCollection: 100 send * 150 cycle/send 15K cyclesTotal: 275K cycles73X speedup (not quite 100X)32% communication overheadCIS 501 (Martin/Roth): DLP32

Parallel PerformanceP (peak ectionTotalActual speedupActual/Peak10200,000*10 2M20*(50 1000) 21K20*(50 1000) 21K10*(50 1000) 11K2.05M9.797%Automatic Parallelization?100100020,000*10 200K2000*10 20K200*(50 100) 30K 2000*(50 10) 120K200*(50 100) 30K 2000*(50 10) 120K100*(50 100) 15K 1000*(50 10) 60K275K320K736373%6.3% Same as automatic vectorization: hard Same reason: difficult to analyze memory access patterns Maybe even harder Outer loop analysis harder than inner loop analysis How does it scale with number of processors P?– 97% efficiency for 10 processors, 73% for 100, 6.3% for 1000– 1000 processors actually slower than 100 Must initialize/collect data from too many processors Each transfer is too small, can’t amortize constant overhead Amdahl’s law again Speedup due to parallelization limited by non-parallel portionCIS 501 (Martin/Roth): DLP33Message Passing34Shared Memory Parallel matrix multiply we saw uses message passing Each copy of the program has a private virtual address space Explicit communication through messages Messages to other processors look like I/O Simple hardware Any network configuration will will do No need to synchronize memories– Complex software Must orchestrate communication Only programs with regular (static) communication patterns Message passing systems called multi-computersCIS 501 (Martin/Roth): DLPCIS 501 (Martin/Roth): DLP35“shared” float A[100][100], B[100][100], C[100][100];for (J 0; J 100; J )for (K 0; K 100; K )C[my id()][J] A[my id()][K] * B[K][J]; Alternative: shared memory All copies of program share (part of) an address space Implicit (automatic) communication via loads and stores Simple software No need for messages, communication happens naturally– Maybe too naturally Supports irregular, dynamic communication patterns– Complex hardware Create a uniform view of memory More complex on with cachesCIS 501 (Martin/Roth): DLP36

Issues for Shared MemoryThread Level Parallelism (TLP) Shared memory not without issuesstruct acct t { int bal; };shared struct acct t accts[MAX ACCT];int id,amt;if (accts[id].bal amt){accts[id].bal - amt;dispense cash();} Cache coherenceSynchronizationSomething called “memory consistency model”Not unrelated to each otherNot issues for message passing systemsTopic of next unit0:1:2:3:4:5:addi r1,&accts,r3ld 0(r3),r4blt r4,r2,6sub r4,r2,r4st r4,0(r3)call dispense cash But can also exploit thread-level parallelism (TLP) Collection of asynchronous tasks: not started and stopped together Data shared loosely, dynamically Dynamically allocate tasks to processors Example: database server (each query is a thread) accts is shared, can’t register allocate even if it were scalar id and amt are private variables, register allocated to r1, r2 Confusion: outer-loop DLP sometimes also called TLPCIS 501 (Martin/Roth): DLP37CIS 501 (Martin/Roth): DLPSummary: Flynn TaxonomySISD vs. SIMD vs. SPMD Flynn taxonomy: taxonomy of parallelism SISD ruled the 1990s Two dimensions Number of instruction streams: single vs. multiple Number of data streams: single vs. multiple ILP techniques found in all processors SIMD has its niche Multimedia, tele-communications, engineering SISD: single-instruction single-data Pipelining and ILP on a uniprocessor SPMD is starting to dominate commercially SIMD: single-instruction multiple-data Handles more forms of parallelism Inner-loop DLP, outer-loop DLP, and TLP More economical: just glue together cheap uniprocessors Better scalability: start small, add uniprocessors DLP on a vector processor MIMD: multiple-instruction multiple-data DLP, TLP on a parallel processor SPMD: single-program multiple dataCIS 501 (Martin/Roth): DLP3839CIS 501 (Martin/Roth): DLP40

Summary Data-level parallelism (DLP) Easier form of parallelism than ILP– Hard to exploit automatically Vectors (SIMD) Extend processor with new data type: vector Very effective– Only handles inner-loop parallelism Parallel Processing (MIMD) Multiple uniprocessors glued together Glue? explicit messages or shared memory The way of the future: inner-loop and outer-loop DLP and TLP The way of the future: inner-loop and outer-loop DLP and TLPCIS 501 (Martin/Roth): DLP41

This Unit: Data/Thread Level Parallelism Data-level parallelism Vector processors Message-passing multiprocessors Thread-level parallelism Shared-memory multiprocessors Flynn Taxonomy Application OS Compiler Firmware I/O Memory Digital Circuits Gates & Transistors CPU CIS 501 (Martin/Roth): DLP 3 Readings H P Appendix E (skim .