Transcription

COMPUTINGPRACTICESEdgar H. SibleyPanel EditorThe state of the art in data compression is arithmetic coding, not the betterknown Huffman method. Arithmetic coding gives greater compression, isfaster for adaptive models, and clearly separatesthe model from the channelencoding.ARITHMETIC CODING FORDATA COIUPRESSIONIAN H. WIllEN, RADFORD M. NEAL, and JOHN G. CLEARYArithmetic coding is superior in most respects to thebetter-known Huffman [lo] method. It represents information at least as compactly-sometimesconsiderably more so. Its performance is optimal withoutthe need for blocking of input data. It encourages aclear separation between the model for representingdata and the encoding of information with respect tothat model. It accommodates adaptive models easilyand is computationally efficient. Yet many authorsand practitioners seem unaware of the technique.Indeed there is a widespread belief that Huffmancoding cannot be improved upon.We aim to rectify this situation by presenting anaccessible implementation of arithmetic coding andby detailing its performance characteristics. We startby briefly reviewing basic concepts of data compression and introducing the model-based approach thatunderlies most modern techniques. We then outlinethe idea of arithmetic coding using a simple example, before presenting programs for both encodingand decoding. In these programs the model occupiesa separate module so that different models can easilybe used. Next we discuss the construction of fixedand adaptive models and detail the compressionefficiency and execution time of the programs,including the effect of different arithmetic wordlengths on compression efficiency. Finally, we outline a few applications where arithmetic coding isappropriate.Financial support for this work has been provided by the Natural Sciencesand E@neering Research Council of Canada.UNIX is a registered trademark of AT&T Bell Laboratories.0 1987 ACM OOOl-0782/87/OtiOO-0520 750520Communications of the ACMDATACOMPRESSIONTo many, data compression conjures up an assortment of ad hoc techniques such as conversion ofspaces in text to tabs, creation of special codes forcommon words, or run-length coding of picture data(e.g., see [8]). This contrasts with the more modernmodel-based paradigm for coding, where, from aninput string of symbols and a model, an encoded stringis produced that is (usually) a compressed version ofthe input. The decoder, which must have access tothe same model, regenerates the exact input stringfrom the encoded string. Input symbols are drawnfrom some well-defined set such as the ASCII orbinary alphabets; the encoded string is a plain sequence of bits. The model is a way of calculating, inany given context, the distribution of probabilitiesfor the next input symbol. It must be possible for thedecoder to produce exactly the same probability distribution in the same context. Compression isachieved by transmitting the more probable symbolsin fewer bits than the less probable ones.For example, the model may assign a predetermined probability to each symbol in the ASCIIalphabet. No context is involved. These probabilitiescan be determined by counting frequencies in representative samples of text to be transmitted. Such afixed model is communicated in advance to both encoder and decoder, after which it is used for manymessages.Alternatively, the probabilities that an adaptivemodel assigns may change as each symbol is transmitted, based on the symbol frequencies seen so farin the message. There is no need for a representativeJune 1987Volume 30Number 6

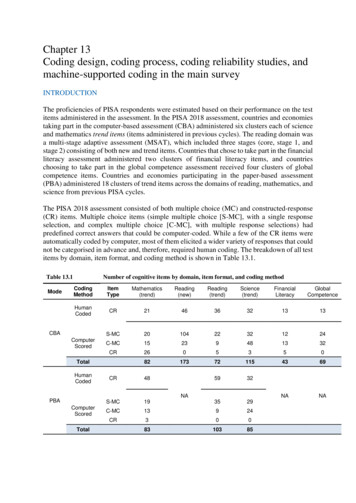

Computing Practicessample of text, because each message is treated asan independent unit, starting from scratch. The encoder’s model changes with each symbol transmitted, and the decoder’s changes with each symbolreceived, in sympathy.More complex models can provide more accurateprobabilistic predictions and hence achieve greatercompression. For example, several characters of previous context could condition the next-symbol probability. Such methods have enabled mixed-case English text to be encoded in around 2.2 bits/characterwith two quite different kinds of model [4, 61. Techniques that do not separate modeling from codingso distinctly, like that of Ziv and Lempel (231, donot seem to show such great potential for compression, although they may be appropriate when theaim is raw speed rather than compression performance [22].The effectiveness of any model can be measuredby the entropy of the message with respect to it,usually expressed in bits/symbol. Shannon’s fundamental theorem of coding states that, given messagesrandomly generated from a model, it is impossible toencode them into less bits (on average) than the entropy of that model [21].A message can be coded with respect to a modelusing either Huffman or arithmetic coding. The former method is frequently advocated as the best possible technique for reducing the encoded data rate.It is not. Given that each symbol in the alphabetmust translate into an integral number of bits in theencoding, Huffman coding indeed achieves “minimum redundancy.” In other words, it performs optimally if all symbol probabilities are integral powersof %. But this is not normally the case in practice;indeed, Huffman coding can take up to one extra bitper symbol. The worst case is realized by a sourcein which one symbol has probability approachingunity. Symbols emanating from such a source convey negligible information on average, but require atleast one bit to transmit [7]. Arithmetic coding dispenses with the restriction that each symbol musttranslate into an integral number of bits, therebycoding more efficiently. It actually achieves the theoretical entropy bound to compression efficiency forany source, including the one just mentioned.In general, sophisticated models expose the deficiencies of Huffman coding more starkly than simpleones. This is because they more often predict symbols with probabilities close to one, the worst casefor Huffman coding. For example, the techniquesmentioned above that code English text in 2.2 bits/character both use arithmetic coding as the finalstep, and performance would be impacted severelyJune 1987Volume 30Number 6if Huffman coding were substituted. Nevertheless,since our topic is coding and not modeling, the illustrations in this article all employ simple models.Even so, as we shall see, Huffman coding is inferiorto arithmetic coding.The basic concept of arithmetic coding can betraced back to Elias in the early 1960s (see [l,pp. 61-621). Practical techniques were first introduced by Rissanen [16] and Pasco [15], and developed further by Rissanen [17]. Details of theimplementation presented here have not appearedin the literature before; Rubin [2O] is closest to ourapproach. The reader interested in the broader classof arithmetic codes is referred to [18]; a tutorial isavailable in [l3]. Despite these publications, themethod is not widely known. A number of recentbooks and papers on data compression mention itonly in passing, or not at all.THEIDEAOF ARITHMETICCODINGIn arithmetic coding, a message is represented by aninterval of real numbers between 0 and 1. As themessage becomes longer, the interval needed’to represent it becomes smaller, and the number of bitsneeded to specify that interval grows. Successivesymbols of the message reduce the size of the interval in accordance with the symbol probabilities generated by the model. The more likely symbols reduce the range by less than the unlikely symbolsand hence add fewer bits to the message.Before anything is transmitted, the range for themessage is the entire interval [0, l), denoting thehalf-open interval 0 5 x 1. As each symbol isprocessed, the range is narrowed to that portion of itallocated to the symbol. For example, suppose thealphabet is (a, e, i, O, u, !I, and a fixed model is usedwith probabilities shown in Table I. Imagine transTABLE I. Example Fixed Model for Alphabet (a, e, i, o, u, !)SymbolProbabilityRange.2.3.l.2.lLO, 0.2)[0.2, 0.5)[0.5, 0.6)[0.6,0.8)[0.8, 0.9).l[0.9, 1.0)mitting the message eaii!. Initially, both encoderand decoder know that the range is [0, 1). Afterseeing the first symbol, e, the encoder narrows it to[0.2, 04, the range the model allocates to this symbol. The second symbol, a, will narrow this newrange to the first one-fifth of it, since a has beenCommunications of the ACM521

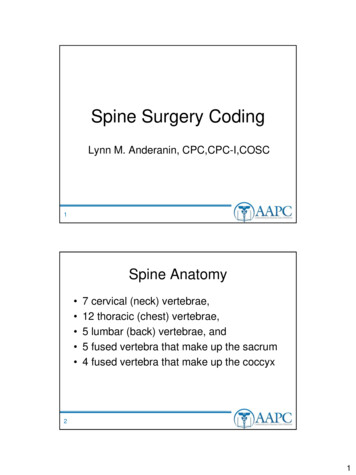

Computing Practicesallocated [0, 0.2). This produces [O.Z, 0.26), since theprevious range was 0.3 units long and one-fifth ofthat is 0.06. The next symbol, i, is allocated [0.5, 0.6),which when applied to [0.2, 0.26) gives the smallerrange [0.23, 0.236). Proceeding in this way, the encoded message builds up as follows:InitiallyAfter seeing 36)0.2336)0.2336)Figure 1 shows another representation of the encoding process. The vertical bars with ticks represent the symbol probabilities stipulated by themodel. After the first symbol has been processed, themodel is scaled into the range [0.2, 0.5), as shown sentation of the Arithmetic Coding ProcessNothing1!uea0.50.260InitiallyAfter seeing eP, 1)[0.2, 0.5)This makes it clear that the second character is a,since this will produce the range[0.2, 0.26),After seeing awhich entirely encloses the given range [0.23354,0.2336). Proceeding like this, the decoder can identify the whole message.It is not really necessary for the decoder to knowboth ends of the range produced by the encoder.Instead, a single number within the range--for example, 0.23355-willsuffice. (Other numbers, like0.23354, 0.23357, or even 0.23354321, would do justas well.) However, the decoder will face the problemof detecting the end of the message, to determinewhen to stop decoding. After all, the single number0.0 could represent any of a, aa, aaa, aaaa, . . . . Toresolve the ambiguity, we ensure that each messageends with a special EOF symbol known to both encoder and decoder. For the alphabet of Table I, ! willbe used to terminate messages, and only to termi-UFIGUREla.Figure la. The second symbol scales it again into therange [0.2, 0.26). But the picture cannot be continued in this way without a magnifying glass! Consequently, Figure lb shows the ranges expanded tofull height at every stage and marked with a scalethat gives the endpoints as numbers.Suppose all the decoder knows about the messageis the final range, [0.23354, 0.2336). It can -immediately deduce that the first character was e! since therange lies entirely within the space the model ofTable I allocates for e. Now it can simulate the operation of the encoder:ii!uU00!//iiieeea\a\aa0i0.2i0.2i!FIGURElb. Representation of the Arithmetic CodingProcess with the interval Scaled Up at Each Stage522Communications of the ACMJune 1987Volume 30Number 6

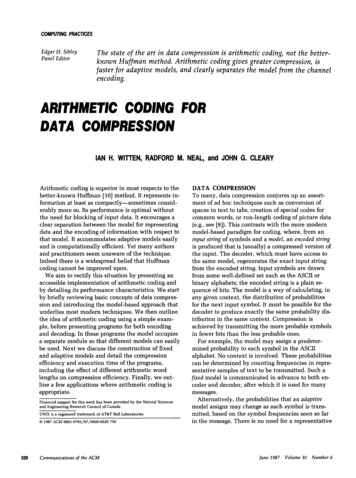

Computing Practices/* ARITHMETIC ENCODING ALGORITHM. *//* Call encode-symbolrepeatedlyfor each symbol in the message./* Ensure that a distinguished"terminator"symbol is encoded last,/* transmitany value in the range [low, e high - lowhigh low f range*cum-freq[symbol-11low low f range*cum-freq(symbol1/* ARITHMETIC DECODING ALGORITHM. *//* "Value" is the number that has been received./* Continue callingdecode-symboluntilthe terminator*/*/symbol is returned.decode-symbol(cum-freq)find symbol such thatcum-freq[symbol] (value-low)/(high-low) cum-freqrsymbol-11/* This ensures that value lies withinthe new l /;* (low, high) range that will be calculatedby *//* the followinglines of code.*/range high - lowhigh low t range*cum-freq[symbol-111OW low t range*cum-freq[symbol]returnsymbolFIGURE2. Pseudocode for the Encoding and Decoding Proceduresnate messages. When the decoder sees this symbol,it stops decoding.Relative to the fixed model of Table I, the entropyof the five-symbol message eaii! is-log 0.3 - log 0.2 - log 0.1 - log 0.1 - log 0.1 -log 0.00006 4.22(using base 10, since the above encoding was performed in decimal). This explains why it takes fivedecimal digits to encode the message. In fact, thesize of the final range is 0.2336 - 0.23354 0.00006,and the entropy is the negative logarithm of thisfigure. Of course, we normally work in binary,transmitting binary digits and measuring entropyin bits.Five decimal digits seems a lot to encode a message comprising four vowels! It is perhaps unfortunate that our example ended up by expandingrather than compressing. Needless to say, however,different models will give different entropies. Thebest single-character model of the message eaii! isthe set of symbol frequencies (e(O.2), a(0.2), i(O.4),!(0.2)), which gives an entropy of 2.89 decimal digits.Using this model the encoding would be only threedigits long. Moreover, as noted earlier, more sophisticated models give much better performancein general.]une 1987Volume 30Number 6A PROGRAMFOR ARITHMETICCODINGFigure 2 shows a pseudocode fragment that summarizes the encoding and decoding procedures developed in the last section. Symbols are numbered, 1, 2,3 . . . The frequency range for the ith symbol isfrom cum-freq[i] to cum-freq[i - 11. As i decreases,cum-freq[i] increases, and cum-freq[O] 1. (Thereason for this “backwards” convention is thatcum-freq[O] will later contain a normalizing factor,and it will be convenient to have it begin the array.]The “current interval” is [Zozu,high), and for bothencoding and decoding, this should be initializedto [O, 1).Unfortunately, Figure 2 is overly simplistic. Inpractice, there are several factors that complicateboth encoding and decoding:Incremental transmission and reception. The encodealgorithm as described does not transmit anythinguntil the entire message has been encoded; neitherdoes the decode algorithm begin decoding until ithas received the complete transmission. In mostapplications an incremental mode of operation isnecessary.The desire to use integer arithmetic. The precisionrequired to represent the [low, high) interval growswith the length of the message. Incremental operation will help overcome this, but the potential forCommunications of the ACM523

Computing Practicesoverflow and underflowcarefully.must still be examinedRepresenting the model so thnt it can be consultedefficiently. The representation used for the modelshould minimize the time required for the decodealgorithm to identify the next symbol. Moreover,an adaptive model should be organized to minimizethe time-consuming task of maintaining cumulativefrequencies.Figure 3 shows working code, in C, for arithmeticencoding and decoding. It is considerably lmore detailed than the bare-bones sketch of Figure Z! Implementations of two different models are given inFigure 4; the Figure 3 code can use either one.The remainder of this section examines the codeof Figure 3 more closely, and includes a proof thatdecoding is still correct in the integer implementation and a review of constraints on word lengths inthe /'DECLARATIONS USED FOR ARITHMETIC ENCODING AND DECODING l //* SIZE OF ARITHMETIC CODE VALUES. l /#definetypedefCode-value-bitslong code-value:fdefineTop-value/'16/* Number of bits in a code value/* Type of an arithmeticcode value(((long)l Code value blts)-1)/* Largestcode valuel/l/l /HALF AND QUARTER POINTS IN THE CODE VALUE RANGE. l alue/ltl)(Z'First-qtr)(3’Firat-qtr)/* Point/* Point/* arterl 3738/'INTERFACE TO THE MODEL. '//'THE SET OF SYMBOLS THAT MAY BE ENCODED. l ymbols/'256(No-of-charetl)(No-of-charstll/* Number of character/* Index of EOF symbol/*Totalsymbolsnumber of symbols'/'/*/TRANSLATION TABLES BETWEENCHARACTERSAND SYMBOL INDEXES. l /int char-to-index[No-of-chars];unsigned char index to char[No of symbols l]:/*To index from character/* To characterfrom indexl/'//* Maximum allowedfrequencycount2a14 - 1/*/* Cumulativesymbol frequenciesl/l/l//* CUMULATIVE FREQUENCYTABLE. */IdefineintMax-frequencycum frsq[No of symbols l];16383FIGURE3. C Implementation of Arithmetic Encoding and Decoding524Communicationso the ACMJune 1987Volume 30Number 6

Computing 55657585960/'MAIN PROGRAMFOR ENCODING. l /#include#include stdlo.h "mode1.h"main0startmodel ):1start-outputlng-bitsO;start-encodingo;for (::I(int ch; lnt symbol;ch - getc(stdin);If (ch--EOF) l,cum-freq);update-model(symbo1);1encode symbol(EOF symbol,cum freq);done-encoding();done-outputinebitso;exit (0) 0Bl82838485868188a99091929394/* Set up othermodules.l//'characters.l/Loop through/* Read the next character./* Exitloop on end-of-file.*//* Translateto an index./* Encode that symbol./* Update the model.l//* Encode the EOF symbol./* Send the last few blts.l/l/follows‘/l/l/l/- encode.c/* ARITHMETIC ENCODING ALGORITHM. */fincludestatic/'voldbll-plus-follov();code value low,long-bits-to-follow;thathigh;/* Ends of the current/* Number of opposite/* the next bit.code regionbits to output*/afterl /'/START ENCODING A STREAM OF SYMBOLS. l /start-encoding0low - 0;Ihiah - Top-value;bits to follow- 0;1-/*/* RoutineCURRENT STATE OF THE ENCODING. '/staticstatic/*"arithmetic-cod1ng.h"/* Fullcode/* No bits'/range.to follownext.l/ENCODEA SYMBOL. l /encode-symhoI(symbol,cum-freq)int symbol:/* Symbol to encodeint cum-freql]:/* Cumulativesymbol frequencieslong range;/' Size of the currentcode regionIrange- (long) (high-low) l;high - low /* Narrow the code region(ranye'cum-freq[symbol-ll)/cum-freq[O)-1:/* to that allottedto this/' symbol.low - low ranpe*cum freq(symbol))/cum freq(O);'/'/l/l/l/l/FIGURE3. C Implementationof ArithmeticEncodingand Decoding(continued)]une 1987Volume 30Number 6Communications of the ACM525

wt*'1/* Output0 ifIn low half./* Output1 ifin hiQh half.*/offsetl/to top.*/l/bithalf./1;/*Subtractoffset/ Otherwise/* Scaleexitto middle'/loop.'1up code range.l /FINISH ENCODING THE STREAM. l /done-encoding0bits-to-follow * 1:Iif (low First-qtr)bit plus follow(0):else bit-plus-follow(l):1/* OUTPUT BITS PLUS FOLLOWING OPPOSITE BITS.staticfbits./* Output an oppositeif in middle/. later(1else break:low * 2'low;high * 2*hightl;/'Loop to ouLput/ Subtractlow -- First-qtr;high -- Iif (high Half)Ibit plus follow(0);Ielse if (low -Half)(bit plus follow(1):low -- Half:high -* Half:Ielse if (low -Flrstqtrsc hiQh ThirdIqtr)void bitglus-foIlow(blt)int bit:output-bitcbit);while (bits-to-follow O)output-bit(Ibit);bits to-follow-* 1;1-/* Output two bits that/' Select the quarterthat/* the currentcode range/* contains.'1'//*l/l /l/l/Outputthe bit.i/* to zero.opposftel 9150151152153154/* MAIN PROGPAMFOR DECODING. */#include(include stdio.h "mode1.h"main ()start-model0 :Istart-inpUtinQ-bitso;start-decodingo:for (;;)(lnt ch: int symboi;symbol - decode-symbolicurn-freq):if (symbol**EOF symbol) break;ch * lndex to ;1exit./* Set up othermodules.'//*Loop throughcharacters.'//*/'/*/'/*Decode next symbol.Exit loop if EOF symbol.Translateto a character.*/Write that character.Update the model.'/'I'/l/(0) ;1FIGURE3. C Implementation of Arithmetic Encoding and Decoding (continued)526Communicationsof the ACM]une 1987Volume 30Number 6

Computing Practicesarithmetic- lude/'ALGORITHM.l/OF THE DECODING.l/“arithmetic-cod1ng.h”CURRENT e;low, high;START DECODINGA/*Currently-seen/*EndsSTREAM OF SYMBOLS.ofcodecodecurrentl /‘/valuereplan'/Start-deC”di”Q()(lnt i:value- 0;for(1 - 1; I -Code-value-bits;value- 2'value,lnput bit();1low " 0:hiQh- Top-value;it )(/*/*Inputcodebitsvalue./*Fullcodetoflllthel/l 08209210211212213214215lnt!DECODE THE NEXT SYMBOL.l esymbolfrequencieslO”Q ra”Qe;/* Size of currentcoderegionint cum;/* Cumulativefrequencycalculatedint symbol:/*Symboldecodedra”Qe- (lO"Q)(hlQh-1oW)tl;cum /*Flndcum freqfor value.(((lonQl(value-lou)tl)*cum freq[O]-l)/ranQe:for eyn!bol - 1; cum-fraq[symbolJ%wm;symboltt); /* Then -freq[O]-1;low - low t(ra"Qe'cum freq[symbo1])/cum freq[O];forI::1(if1else(hiQh Half)/’nothingIfifl/(low -Half)((low -First-qtr‘L hiQh Thlrd-qtr)value-- First-qtr;low -- First-qtr;high--Narrowthe/*/*to eMovetocodereQlontorldofthisbits.‘/l /l/llll////l/Ivalue-- Half;low -- Half:hlQh -- Half;else/*l/l/half.'/'/half.offsetmiddletotop.*Il /half.(offsettomIddlr*/First-qtr;1elsebxeak:low - 2*1ow:high- 2'hiQhtl:value- 2*valuetlnput bit():1relucnexitl/loop.up coderange.in next inputblt.l/l/symbol;tFIGURE3. C Implementation of Arithmetic Encoding and Decoding (continued)]une 1987Volume 30Number 6Communications of the ACM527

bit-input-c216217218/* BIT INPUT ROUTINES. 249250251252253254255256 stdlo.h "arithmetic-cod1ng.h" include/'THE BIT BUFFER. ‘/staticstaticstatic/*intbuffer:/*Bitslntlntblts ngto beof eBIT INPUT. */St6rt inpUting blt6()bits-to-golgarbage-bits-0;- 0:/*Buffer/*no/*Readbit6l/l /out withstartsinbitsit.1/*int(INPUT A BIT.l /input-bit0lnt t:if (bits-to-go--O)1buffer- (garbage ,blts Code value henextareleftReturnif no '/in buffer.*'/bytearbitrarybits*//* aftereof, but check/*for too many such.l/l /(-1);)1bits-to-go-1/* Return the next bit from/* the bottom of the byte.t - buffer&l;buffera - 1;bits-to-goreturn-*8:l/l/I:t;FIGURE3. C Implementation of Arithmetic Encoding and Decoding (continued)528Communications of the ACMJune 1987Volume 30Number 6

86287288209290291292293294/* BIT OUTPUT ROUTINES. '/#include stdlo.h /" THE BIT BUFFER. /* Bits bufferedfor output/* Number of bits free in bufferl/l/FOR BIT OUTPUT. l /start .outputing-.bits()buffer- 0;1bitS tO-QO8;)/* OUTPUT A BIT./* Buffer/' with.Is empty to start'/l/l/output-blt(bit)lnt bit:buffer - 1;(if (bit)bufferI- 0x00;bits-to-go-- 1;if (bits-to-go--O)(putc(buffer,stdout):bits-to-go- 8;tt/* FLUSH OUT THE LAST BITS./* Dot bitIn top of buffer.*//* Output buffer/' now full.ifIt1sl/l /l/done-outputlng-bits0putc(buffer blts to-go,stdout):FIGURE3. C Implementation of Arithmetic Encoding and Decoding (continued)]une 1987Volume 30Number 6Communications of the ACM529

Computing 546474049505152535455565758596061c/* THE FIXED SDDRCE MODEL l 1.1,1,1,1,- (1,1,1,1,1,1,1, 124,1,1,1,1,1l 1, 1,?*/1,O*/11,//*1236, *@/'P/*I'1, 49:./*pldb1.1,1.1,1.1,1,1,f85, 17;,232, 74:,8r5, 388, 375,t531, ,1,1,1,1,1,1,6,Yx12, 101,1.1.1.1,1,1,1,I,j293, 418,127, 9, 250, 139,l/5,0 l ,1,1.1,1.1,1,1,I,l/*/1,I,1’,/*INITIALIZETHE MODEL. '/start-model0!lnt 1:for (i - 0; i No-of-chars:it )char-to-index[i]- itl;index to-char[i l)- i;(/* Set up tablesthat/* translatebetween symbol/*indexesandcharacters.l/l/l /Icum-freq[No-of-symbols]- 0;for (1 - No-of-symbols;i O; i--l(cum-freq[i-lj- cum-freq[i]t freq(iJ;Iif (cum-freq[Ol Max-frequency)abort0;/* Set up cumulative/* frequencycounts./* Check countsl /'/withinlimit*//* UPDATE THE MODEL TO ACCOUNT FOR A NEW SYMBOL. GURE4. Fixed and Adaptive Models for Use with Figure 3530Communications of the ACM]une 1987Volume .30 Number 6

Computing 4344454647404950515253/* THE ADAPTIVE SOURCE MODEL l ALIZE/ symbolfrequenciesl/THE MODEL. l /start-model0int i:Ifor(i - 0; i No-of-chars;it )(char-to-index[l]- itl:index-to-char[itl]- i:Ifor (i - 0; i -No-of-symbols;it )freq[i]- 1;cum-freq[i]- No-of-symbols-i;(/* Set up tablesthat/* translatebetween symbol/* indexes and characters.l/l/l//*/*/*Set up initialfrequencycounts to be one for allsymbols.l /l/l//*/*not be thesame as freq[l].l /l/tfreq[O]- 0;t/'Freq[OlmustUPDATE THE MODEL TO ACCOUNT FOR A NEW SYMBOL. '/update model(symbo1)int symbol:/* Index of newlnt 1;/* New index for(if (cum-freq]O]--Max-frequency)(int cum;cum - 0:for (1 - No-of-symbols;la-O; i--)(freq[i]- (freq(i] 1]/2:cum-freq[l]- cum;cum t-symbolsymbol/*'1maxlawn.l /l/l/If so, halve all thecounts (keeplng themnon-zero).l/l/l/Findl /Seeiffrequency/* are at thslr/*/*/*count8freq[l];ttiorif(i - symbol;freq(i]--freq[i-1):(i symbol)]int ch-i,ch-symbol :ch-i - index-to-char[i];ch-symbol - index-to-char[aymbol];index-to-char[i]- ch-symbol:index-to-char[symbol]- ch-1;char-to-index]ch-i]- symbol:dex[ch-symbol]- i;char-to-in'Ifreq[i] - 1;while (i O) (i -- 1:1 tcum-freq]!t1;l--l: /*symbol'snew Index./* Update the translation/* tableslf the symbol/* moved./*/*/*/*l /has l /Increment the frequencycount for the symbol andupdate the cumulativefrequencies.l/l/l/l/'/tFIGURE4. Fixed and Adaptive Models for Use with Figure 3 (continued)/me 1987Volume 30Number 6Communications of the ACM531

Computing PracticesRepresenting the ModelImplementations of models are discussed in the nextsection; here we are concerned only with the interface to the model (lines 20-38). In C, a byte is represented as an integer between 0 and 255 (a char).Internally, we represent a byte as an integer between 1 and 257 inclusive (an index), EOF beingtreated as a 257th symbol. It is advantageous to sortthe model into frequency order, so as to minimizethe number of executions of the decoding loop(line 189). To permit such reordering, the char/indextranslation is implemented as a pair of tables,index-to-char[]and char-to-index[].In one of ourmodels, these tables simply form the index by adding1 to the char, but another implements a more complex translation that assigns small indexes to frequently used symbols.The probabilities in the model are represented asinteger frequency counts, and cumulative counts arestored in the array cum- freq[]. As previously, thisarray is “backwards,” and the total frequency count,which is used to normalize all frequencies, appearsin cum - fre9 [O]. Cumulative counts must not exceeda predetermined maximum, Max- frequency, and themodel implementation must prevent overflow byscaling appropriately. It must also ensure that neighboring values in the cum- freq [ ] array differ by atleast 1; otherwise the affected symbol cannot betransmitted.forif532Communications of the ACM[Ielse if (low I Half)output-bit(l);low 2*(low-Half);high 2*(high-Half) l;(Ielsebreak;Iwhich ensures that, upon completion, low HalfI high. This can be found in lines 95-113 ofencode-symbol (1, although there are some extra complications caused by underflow possibilities (see thenext subsection). Care is taken to shift ones in at thebottom when high is scaled, as noted above.Incremental reception is done using a numbercalled value as in Figure 2, in which processedbits flow out the top (high-significance) end andnewly received ones flow in the bottom. Initially,start-decoding0(lines 168-176) fills value with received bits. Once decode-symbol () has identified thenext input symbol, it shifts out now-useless highorder bits that are the same in low and high, shiftingvalue by the same amount (and replacing lost bits byfresh input bits at the bottom end):forIncremental Transmission and ReceptionUnlike Figure 2 the program in Figure 3 represents low and high as integers. A special data type,code-value, is defined for these quantities, togetherwith some useful constants: Top-value, representingthe largest possible code-value, and First-qtr, Half,and Third-@, representing parts of the range(lines 6-16). Whereas in Figure 2 the current interval is represented by [low, high), in Figure 3 it is[low, high]; that is, the range now includes the valueof high. Actually, it is more accurate (though moreconfusing) to say that, in the program in Figure 3,the interval represented is [low, high 0.11111 . .o).This is because when the bounds are scaled up toincrease the precision, zeros are shifted into the loworder bits of low, but ones are shifted into high. Although it is possible to write the program to use adifferent convention, this one has some advantagesin simplifying the code.As the code range narrows, the top bits of low andhigh become the same. Any bits that are the samecan be transmitted immediately, since they cannotbe affected by future narrowing. For encoding, sincewe know that low 5 high, th

Arithmetic coding gives greater compression, is faster for adaptive models, and clearly separates the model from the channel encoding. ARITHMETIC CODING FOR DATA COIUPRESSION IAN H. WIllEN, RADFORD M. NEAL, and JOHN G. CLEARY Arithmetic coding is superior in most respects to the better-known Huffman [lo] method. It represents in-