Transcription

Efficient CRUD Queries in MongoDBTim VaillancourtSr. Technical Operations ArchitectPercona

Agenda What is CRUD? CRUD-related MongoDB Features Troubleshooting Explain Database Profiler Log File Read Preference Read and Write Concerns Deletion/Retention Strategies Monitoring

About Me Started at Percona in January 2016 Experience Web Publishing Big-scale LAMP-based web infrastructures Ecommerce Large Inventory SaaS at Amazon / AbeBooks Gaming DevOps / NoSQL DBA at EA SPORTS DBA at EA DICE

About Me Technologies MongoDB, Cassandra and Couchbase MySQL Redis and Memcached RabbitMQ, Kafka and ActiveMQ Solr and Elasticsearch Mesos (Non-tech) Distributed Systems and Infrastructure Architecture

Terminology What is CRUD? Create Operations that create entire documents, eg: .insert() Relatively light operation with low cache/disk IO impact Read Operations that read documents, eg: .find(), .aggregate(), Generally the main source of slowness

Terminology What is CRUD? Update Operations that find and replace data, eg: .update() Expensive, operates similar to a .find() and updates data after Delete Operations that remove documents, eg: .remove()

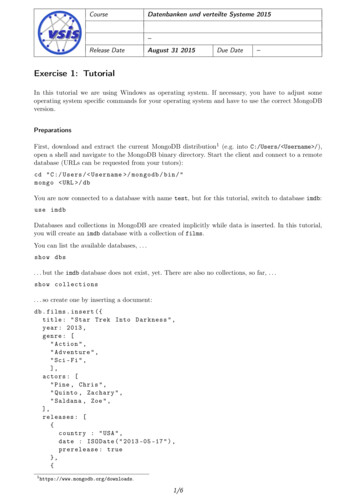

Terminology Document - a single MongoDB document (JSON/BSON)Collection - a collection of documents, similar to “table”Database - a grouping of MongoDB collectionsIndex - a BTree Index applied to a MongoDB collectionCRUD? Create: operations that create documents, eg: .insert() Read: operations that read documents, eg: .find(), .aggregate(), Update: operations that find and replace data, eg: .update() Delete: operations that deletes documents, eg: .delete()

Terminology What is efficient CRUD? Use the minimal server resources possible Scalable Metrics keysExamined - # of Index items examined docsExamined - # of Documents (cache or disk) examined Nreturned - # of Documents returned to client Lag The delay seen in reading data from a replication replica

Storage Engines MMAPv1: Default in 1.x and 2.x Good read performance, poor write performance No compression Collection-level Locking(!) WiredTiger: Default in 3.2 , available since 3.x Good read performance, good write performance Compression supported RocksDB: Available in Percona Server for MongoDB (or patch) Good 95% read performance, very good write performance Compression supported

Isolation and Atomicity Isolation Read Uncommitted Any session can see a change, even before ack’d Essentially no isolation compared to RDBMs Atomicity Single-document Update A reader will never see a partially-changed document Multi-document Update Multi-operation not atomic, no rollback

Replication Async changelog replication Primary Single Primary via election Serves Read and Write operations Secondary One or more, three required for reliable elections Serves Read queries only May take-over Primary in election Consistency Driver-level tunables for Read and Write consistency(!)

Insert Operations A Single-document Insert triggers: 1 x append to the journal 1 x document to be added to the data file(s) MMAPv1 Considerations MMAP will scan for a free slot for the insert Shown as the “nmoved” metric Multi-document Insert Operations Can be used to batch inserts Improves insert performance in many cases Can be ordered true/false in options document

Insert Operations Write Concern accepted RocksDB or WiredTiger recommended for high write volumes

Insert : “tim”,“password”: 123456,“createDate”: new Date(),},{.}], {“writeConcern”: { w: ”majority” },ordered: true})

Read Operations .find() Returns cursor of document(s) matching a set of conditions Option to specify which fields to return, default: full doc! .aggregate() Powerful framework for data aggregations, summaries, etc No .explain() support Scans entire collection unless using a match as first stage db. coll .find( match conditions ).explain() to explain! .mapReduce() Runs in JavaScript and offers little insight

Read Operations Read Concern Important for strict data integrity (often combined with WCs) Replica Set failovers can cause some data “rollback” “Rolledback” data is written to a json file in your dbPath Tunable read consistency “local” default, local node read only “majority” read from a majority of members “linearizable” read and ack’d from a majority of members RC can be changed per query

Read Operations Example:test1:PRIMARY db.getMongo().setReadPref('primary')test1:PRIMARY db.pages.find({title : "Geography of Guinea-Bissau"}, { id: 1, title:1}).pretty(){" id" : "0c25a313481757720f5e9e46b4cffddd08a13fef","title" : "Geography of Guinea-Bissau"}

Update Operations Operates much like a .find() with special re-insertion Cannot run .explain() on .update() Convert the .update() match to a .find( conds).explain() Can change one or more documents { multi: true } option required for multi-document updates Avoid Multi-document updates if possible MMAPv1 Considerations In many cases MMAP can do in-place updates (no replace) Provides many update operators to change the data Examples: set, inc, mul, min, max

Update Operations Efficient Updates Use indexes on the match condition (very crucial) Avoid multi-document updates in your design

Update Operations Example:test1:PRIMARY db.pages.update({title : "Geography of Guinea-Bissau"},{ set: {title: "Geography of Guinea-Bisso"}})WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

Delete Operations Similar in execution impact to an .update() Accepts array of delete conditions (batching) Using TTL Index to Delete Deletes documents based on MongoDB ISODate() objects Batches deletes by minute (by default) Doubles as an index for the date field Batch delete by field Mark a field as {deleted:true} Run controlled batch job to .remove({deleted:true}) Requires some scripting / cronjob

Delete Operations RocksDB Considerations Compaction may struggle with very high delete volume “Toombstones” are written in place of the document Considered by read operations until compaction

Delete Operations Example:test1:PRIMARY db.pages.remove({title : "Geography of Guinea-Bisso"})WriteResult({ "nRemoved" : 1 })

Indexing MongoDB supports BTree, text and geo indexes Default behaviour Collection lock until indexing completes {background:true} Runs indexing in the background avoiding pauses Hard to monitor and troubleshoot progress Unpredictable performance impact Avoid drivers that auto-create indexes. Use real performance data Too many indexes hurts performance Indexes have a forward or backward direction

Indexing Compound Indexes Several fields supported Fields can be in forward or backward direction Consider any .sort() query options and match sort direction! Read Left - Right Index can be partially-read Left-most fields do not need to be duplicated, example:You have an index with fields: {status:1, date:1} and a 2ndwith: {status:1}. {status:1} is duplicated!

Indexing Get Indexes Example:test1:PRIMARY db.pages.getIndexes()[{"v" : 1,"key" : {" id" : 1},"name" : " id ","ns" : "wikipedia.pages"}]

Operation Profiling Writes slow database operations to a new MongoDB collection foranalysis Capped Collection: “system.profile” in each database, default 1mb The collection is capped, ie: profile data doesn’t last forever Enable operationProfiling in “slowOp” mode Start with a very high threshold and decrease it in stepsUsually 50-100ms is a good thresholdEnable in mongod.conf operationProfiling:slowOpThresholdMs: 100mode: slowOp

Operation Profiling op/ns/query: type, namespace andquery of a profilekeysExamined: # of index keysexamineddocsExamined: # of docs examinedto achieve resultwriteConflicts: # of WCEencountered during updatenumYields: # of times operationyielded for otherslocks: detailed lock statistics

.explain() and Profiler .explain() Example:Profiler:

Log File: Slow Queries, etc Interesting details are logged to the mongod/mongos log files Slow queries Storage engine details (sometimes) Index operations Chunk moves Connections

Query Efficiency Index Efficiency: keysExamined / nreturned Document Efficiency: docsExamined / nreturned End goal: Examine only as many Documents as you return Example: a query scanning 10 documents to return 1 has efficiency 0.1

Schema Design: Data Types Strings Only use strings if required Do not store numbers as strings! Look for {field:“123456”} instead of {field:123456} “12345678” moved to a integer uses 25% less space Range queries on proper integers is more efficient

Schema Design: Data Types Strings Example JavaScript to convert a field in an entire collection:db.items.find().forEach(function(x) {newItemId parseInt(x.itemId);db.containers.update({ id: x. id },{ set: {itemId: itemId } })}); Do not store dates as strings! The field "2017-08-17 10:00:04 CEST" stores in 52.5% lessspace!

Schema Design: Data Types Strings Do not store booleans as strings! “true” - true 47% less space wasted DBRef DBRefs provide pointers to another document DBRefs can be cross-collection

Schema Design: Data Locality MongoDB optimised for single-document operations Single Document / Centralised Greate cache/disk-footprint efficiency Centralised schemas may create a hotspot for write locking Multi Document / Decentralised MongoDB rarely stores data sequentially on disk Multi-document operations are less efficient Less potential for hotspots/write locking Increased overhead due to fan-out of updates

Schema Design: Data Locality Multi Document / Decentralised Suited for a background worker model Example: Social Media status update, graph relationships,etc

Batching Operations Batching Inserts/Updates Requires less network commands Allows the server to do some internal batching Operations will be slower overall Suited for queue worker scenarios batching many changes Traditional user-facing database traffic should aim tooperate on a single (or few) document(s)

Batching Operations Thread-per-connection model 1 x DB operation 1 x CPU core only Executing Parallel Reads Large batch queries benefit from several parallel sessions Break query range or conditions into several client- serverthreads Not recommended for Primary nodes or Secondaries withheavy reads

Read Preference / Scaling Controls the node type (not consistency) for a session: “primary” - Only read from Primary “primaryPreferred” - Read from Primary unless there is none “secondary” - Only read from Secondary “secondaryPreferred” - Read from Secondary unless there arenone “nearest” - Connect to the first node you can Using a secondary-focussed Read Preference provides read scalability Adding more secondaries provides more read capacity! 2/3rds of a replset is often sitting around idle!

Query Antipatterns No list of fields specified in .find() MongoDB returns entire documents unless fields are specified Only return the fields required for an application operation! Covered-index operations require only the index fields to bespecified Using where operators This executes JavaScript with a global lock Many and or or conditions MongoDB doesn’t handle large lists of and or or efficiently

Monitoring MongoDB Methodology Monitor Frequently Monitor every N seconds (not minutes!) Problems can begin/end in seconds Iterative / Responsive “What graph would have told us the problem faster?” Correlate Database and Operating System

Monitoring MongoDB Database Operation counters Cache Traffic and Capacity Checkpoint / Compaction Performance Concurrency Tickets (WiredTiger and RocksDB) Document and Index scanning Various engine-specific details

Monitoring MongoDB Operating System CPU Disk Bandwidth / Util Average Wait Time Memory Network

Monitoring MongoDB: Percona PMM Open-source databasemonitoring from Percona! Based on open-sourcetechnologies Simple deployment Graphs in this demo arefrom PMM 800 metrics per ping Includes Linux OSmetrics

Monitoring MongoDB: Percona PMM QAN QAN (“QueryAnalyser”) forMongoDB! Query datausing theMongoDBprofiler! Still in Beta

Testing Performance and CapacityGeneral Database Restore Production backups Use mongoreplay or Parse/flashback tools to capture replay realdatabase traffic Use PMM or other monitoring to analyse changes Full stack Try to emulate the real user traffic Add micro-pauses to simulate reality Cloud-based providers are great for running load generation

Themes Perform as few client- server operations as possible (batch) For read or updates: Consider if a query will benefit from an index Only request/set the data fields required Use the log file and profiler to find slow performers Only add indexes if they’re required Store data in the smallest data type possible Use Secondaries to scale read traffic Use Read/Write Concerns for strong integrity Review performance data (profiler, monitoring, etc) on a schedule

Questions?

Thanks for joining!Be sure to checkoutthe Percona Blog for moretechnical blogs and topics!

MongoDB rarely stores data sequentially on disk Multi-document operations are less efficient Less potential for hotspots/write locking Increased overhead due to fan-out of updates. Schema Design: Data Locality Multi Document / Decentralised Suited for a background worker model Example: Social Media status update, graph relationships, etc. Batching Operations Batching Inserts/Updates Requires .