Transcription

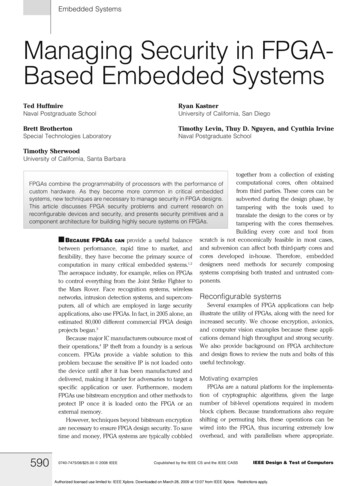

Embedded Systems

Managing Security in FPGA-Based Embedded Systems

Ted Huffmire, Naval Postgraduate School
Ryan Kastner, University of California, San Diego
Brett Brotherton, Special Technologies Laboratory
Timothy Levin, Thuy D. Nguyen, and Cynthia Irvine, Naval Postgraduate School
Timothy Sherwood, University of California, Santa Barbara

IEEE Design & Test of Computers. 0740-7475/08/$25.00 © 2008 IEEE. Copublished by the IEEE CS and the IEEE CASS.

FPGAs combine the programmability of processors with the performance of custom hardware. As they become more common in critical embedded systems, new techniques are necessary to manage security in FPGA designs. This article discusses FPGA security problems and current research on reconfigurable devices and security, and presents security primitives and a component architecture for building highly secure systems on FPGAs.

Because FPGAs can provide a useful balance between performance, rapid time to market, and flexibility, they have become the primary source of computation in many critical embedded systems [1, 2]. The aerospace industry, for example, relies on FPGAs to control everything from the Joint Strike Fighter to the Mars Rover. Face recognition systems, wireless networks, intrusion detection systems, and supercomputers, all of which are employed in large security applications, also use FPGAs. In fact, in 2005 alone, an estimated 80,000 different commercial FPGA design projects began [3].

Because major IC manufacturers outsource most of their operations [4], IP theft from a foundry is a serious concern. FPGAs provide a viable solution to this problem because the sensitive IP is not loaded onto the device until after it has been manufactured and delivered, making it harder for adversaries to target a specific application or user. Furthermore, modern FPGAs use bitstream encryption and other methods to protect IP once it is loaded onto the FPGA or an external memory.

However, techniques beyond bitstream encryption are necessary to ensure FPGA design security. To save time and money, FPGA systems are typically cobbled together from a collection of existing computational cores, often obtained from third parties. These cores can be subverted during the design phase, by tampering with the tools used to translate the design to the cores or by tampering with the cores themselves. Building every core and tool from scratch is not economically feasible in most cases, and subversion can affect both third-party cores and cores developed in-house. Therefore, embedded designers need methods for securely composing systems comprising both trusted and untrusted components.

Reconfigurable systems

Several examples of FPGA applications can help illustrate the utility of FPGAs, along with the need for increased security. We choose encryption, avionics, and computer vision examples because these applications demand high throughput and strong security. We also provide background on FPGA architecture and design flows to review the nuts and bolts of this useful technology.

Motivating examples

FPGAs are a natural platform for the implementation of cryptographic algorithms, given the large number of bit-level operations required in modern block ciphers. Because transformations also require shifting or permuting bits, these operations can be wired into the FPGA, thus incurring extremely low overhead, and with parallelism where appropriate.
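To make the point about wired-in permutations concrete, here is a minimal software sketch, not taken from the article. It models a fixed bit permutation as a wiring table: each output bit is fed by exactly one input bit. The function name `permute_bits` and the two example tables are illustrative assumptions. In software the permutation costs a loop; on an FPGA the same table corresponds only to routing, which is why such operations add almost no logic overhead.

```python
def permute_bits(value: int, wiring: list[int]) -> int:
    """Apply a fixed bit permutation.

    wiring[dst] is the index of the input bit that drives output bit dst,
    which mirrors how a permutation is just a set of wires in hardware.
    """
    out = 0
    for dst, src in enumerate(wiring):
        bit = (value >> src) & 1   # read the source bit
        out |= bit << dst          # drive the destination bit
    return out


# Hypothetical 8-bit wiring tables (assumptions for illustration only).
REVERSE_8 = [7, 6, 5, 4, 3, 2, 1, 0]            # bit reversal
ROTATE_LEFT_8 = [(i - 1) % 8 for i in range(8)]  # rotate left by one

reversed_value = permute_bits(0b00000001, REVERSE_8)
rotated_value = permute_bits(0b10000001, ROTATE_LEFT_8)
```

Both tables describe the same kind of hardware structure; only the routing differs, so changing the permutation changes the wiring rather than adding logic.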

FPGA-based implementations of MD5, SHA-2, and various other cryptographic functions have exploited this sort of bit-level operation. Even public-key cryptographic systems have been built atop FPGAs. Similarly, there are various FPGA-based intrusion detection systems (IDS).

All this work centers around exploiting FPGAs to speed cryptographic or intrusion-detection primitives, but it is not concerned with protecting the FPGAs themselves. Researchers are just now starting to realize the security ramifications of building such systems around FPGAs.

Cryptographic systems such as encryption devices require strong isolation to segregate plaintext (red) from ciphertext (black). Typically, red and black networks (as well as related storage and I/O media) are attached to the device responsible for encrypting and decrypting data and enforcing the security policy; this policy ensures that unencrypted information is unavailable to the black network.

In more concrete terms, Figure 1 shows an embedded system with its components divided into two domains, which we have illustrated with different shading. One domain consists of MicroBlaze0 (a processor), an RS-232 interface, and a distinct memory partition. The other domain consists of MicroBlaze1, an Ethernet interface, and another distinct partition of memory. Both domains share an AES (Advanced Encryption Standard) encryption core, and all the components are connected over the on-chip peripheral bus (OPB), which contains policy enforcement logic to prevent unintended information flows between domains. An authentication function to interpret data from a biometric iris scanner (which might be attached to the RS-232 port) could be added to such a layout. However, if the authentication required a high degree of trustworthiness, the implementation of the function would need to reside in a (new or existing) trusted core.

In the aviation field, both military and commercial sectors rely on commercial off-the-shelf (COTS) FPGA components to save time and money. In military aircraft, sensitive targeting data is processed on the same device as less-sensitive maintenance data. Also, certain processing components are dedicated to different levels of data in some military hardware systems. Because airplane designs must minimize weight, it is impractical to have a separate device for every function or level. Allocation of functions to provide separation of logical modules is a common practice in avionics to resolve this problem and to provide fault tolerance (for example, if a bullet destroys one component).

Figure 1. A system consisting of two processors, a shared AES (Advanced Encryption Standard) encryption core, an Ethernet interface, an RS-232 interface, and shared external DRAM (dynamic RAM), all connected over a shared bus. (DDR: double data rate; SDRAM: synchronous DRAM.) (Revised from T. Huffmire et al., "Designing Secure Systems on Reconfigurable Hardware," ACM Trans. Design Automation of Electronic Systems (TODAES), vol. 13, no. 3, July 2008, article 44. © 2008 ACM with permission [5].)

November/December 2008

Lastly, intelligent video surveillance systems can identify potentially suspicious human behavior and bring it to the attention of a human operator, who can make a judgment about how to respond. Such systems rely on a network of video cameras and embedded processors that can encrypt or analyze video in real time using computer vision technology, such as human behavior analysis and face recognition. FPGAs are a natural choice for any streaming application because they can provide deep computation pipelines, with no shortage of parallelism. Implementing such a system would require at least three cores on the FPGA: a video interface for decoding the video stream, an encryption or computer vision mechanism for processing the video, and a network interface for sending data to a security guard's station. Each of these modules must be isolated to prevent sensitive information from being shared between modules

improperly, for example, directly between the video interface and the network.

Figure 2. A modern FPGA-based embedded system in which distinct cores with different pedigrees and varied trust requirements occupy the same silicon. Reconfigurable logic, hardwired soft-processor cores, SRAM (static RAM) blocks, and other soft IP cores all share the FPGA and the same off-chip memory. (BRAM: block RAM; DSP: digital signal processing; HDL: hardware description language; µP: microprocessor.)

FPGA architecture

FPGAs use programmability and an array of uniform logic blocks to create a flexible computing fabric that can lower design costs, reduce system complexity, and decrease time to market, using parallelism and hardware acceleration to achieve performance gains. The growing popularity of FPGAs has forced practitioners to begin integrating security as a first-order design consideration, but the resource-constrained nature of embedded systems makes it challenging to provide a high level of security.

An FPGA is a collection of programmable gates embedded in a flexible interconnect network that can contain several hard or soft microprocessors. FPGAs use truth tables or lookup tables (LUTs) to implement logic gates, flip-flops for timing and registers, switchable interconnects to route logic signals between different units, and I/O blocks for transferring data into and out of the device. A circuit can be mapped to an FPGA by loading the LUTs and switch boxes with a configuration, a method that is analogous to the way a traditional circuit might be mapped to a set of AND and OR gates.

An FPGA is programmed using a bitstream. This binary data, loaded into the FPGA through specific I/O ports on the device, defines how the internal resources are used for performing logic operations. (For a detailed discussion of the architecture of a modern FPGA, see the survey by Compton and Hauck [1].)

Design flow

Figure 2 shows some of the many different design flows used to compose a single modern embedded system. The FPGA implementation relies on several sophisticated software tools created by many different people and organizations. Special-purpose processing cores, such as an AES core, can be distributed in the form of the hardware description language (HDL), netlists (lists of logic gates and their interconnections), or a bitstream. These cores can be designed by hand, or they can be automatically generated by design tools. For example, the Xilinx Embedded Development Kit (EDK) generates a soft microprocessor on which C code can be executed. There are even tools that convert C code to HDL, including Mentor Graphics Catapult C and Celoxica. An example of an especially complex design flow is AccelDSP, which first translates Matlab algorithms into HDL; logic synthesis then translates this HDL into a

netlist. Next, a synthesis tool uses a place-and-route algorithm to convert this netlist into a bitstream, with the final result being an implementation of a specialized signal-processing core. Security vulnerabilities can be introduced into the life cycle inadvertently because designers sometimes leave "hooks" (features included to simplify later changes or additions) in the finished design. In addition, the life cycle can be subverted when engineers inject unintended functionality, some of which might be malicious, into both tools and cores.

Reconfigurable security problems

Design-tool subversion, composition, trusted foundries, and bitstream protection are problems often associated with reconfigurable hardware.

Design-tool subversion

The subversion of design tools could easily result in a malicious design being loaded onto a device. For example, a malicious design could physically destroy the FPGA by causing the device to short circuit. In fact, major design-tool developers have few or no checks in place to ensure that attacks on specific functionality are not included. However, we are not proposing a method that makes possible the use of subverted design tools to create a trusted core. Rather, our methods make it possible to safely combine trusted cores, developed with trusted tools (perhaps using in-house tools that might not be fully optimized), with untrusted cores. FPGA manufacturers such as Xilinx provide signed cores that embedded designers can trust. Freely available cores obtained from sources such as OpenCores might have vulnerabilities introduced after distribution from the original source. However, a digital signature does not prevent a vulnerability either.

The composition problem

Given that different design tools produce a set of interoperating cores, and in the absence of an overarching security architecture, you can only trust your final system as much as you trust your least trusted design path [6]. If there is security-critical functionality (such as a unit that protects and operates on secret keys), there is no way to verify that other cores cannot snoop on it or tamper with it.

One major problem is that it's now possible to copy hardware, not just software, from existing products, and industry has invested heavily in mechanisms to protect IP. Few researchers have begun to consider the security ramifications of compromised hardware.

Industry needs a holistic approach to manage security in FPGA-based embedded-systems design. Systems can be composed at the device, board, and network levels. At the device level, one or more IP cores reside on a single chip. At the board level, one or more chips reside on a board. At the network level, multiple boards are connected over a network. These multiple scales of design present different potential avenues for attack. Attacks at the device level can involve malicious software as well as sophisticated sand-and-scan techniques. Attacks at the board level can involve passive snooping on the wires that connect chips and the networks that connect boards as well as active modification of data traffic. There are security advantages to using a separate chip for each core, because doing so eliminates the threat of cores on the same device interfering with one another. This advantage must be weighed against the increased power and area cost of having more chips and the increased risk of snooping on the communication lines between chips.

Composing secure systems using COTS components also presents difficulties:

- Did the manufacturer insert unintended functionality into the FPGA fabric? Was the device tampered with en route from the factory to the consumer?
- Does one of the cores in the design have a flaw (intentional or otherwise) that an attacker could exploit? Have the design tools been tampered with?
- Does a security flaw exist in the software running on general-purpose CPU cores or in the compiler used to build the software?
- If an embedded device depends on other parts of a larger network (wired or wireless) of other devices (a system of systems), are those parts malicious?

We propose a holistic approach to secure system composition on an FPGA that employs many different techniques, both static and runtime, including life-cycle management, reconfigurable mechanisms, spatial isolation, and a coherent security architecture. A successful security architecture must help designers manage system complexity without requiring all system developers to have complete knowledge of

the inner workings of all hardware and software components, which are far too complex for complete analysis. An architecture that enables the use of both evaluated and unevaluated components would let us build systems without having to reassess all the elements for every new composition.

The trusted-foundry problem

FPGAs provide an important security benefit over ASICs. When an ASIC is manufactured, the sensitive design is transformed from a software description to a hardware realization, so the description is exposed to the risk of IP theft. For sensitive military content, this could create a national security threat. Trimberger explains how FPGAs address the problem for the fabrication phase, but the security problem of preventing the design from being stolen from the FPGA itself remains and is similar to that of an ASIC [7].

Bitstream protection

Most prior work relating to FPGA security focuses on preventing IP theft and securely uploading bitstreams in the field. Because such theft directly impacts the "bottom line," industry has already developed several techniques to combat FPGA IP theft, such as encryption, fingerprinting, and watermarking. However, establishing a root of trust on a fielded device is challenging because it requires incorporating a decryption key into the finished product. Some FPGAs can be remotely updated in the field, and industry has devised secure hardware update channels that use authentication mechanisms to prevent a subverted bitstream from being uploaded. These techniques were developed to prevent an attacker from uploading a malicious design that causes unintended functionality. (Trimberger provides a more extensive overview of bitstream protection schemes [7].)

Reconfigurable security solutions

Solutions to reconfigurable security problems fall into two categories: life-cycle management and a secure architecture.

Life-cycle management

Clearly, industry needs an approach to ensure the trustworthiness of all the tools involved in the complex FPGA design flow. Industry already deals with this life-cycle management problem with software configuration management, which covers operating systems, security kernels, applications, and compilers. Configuration management stores software in a repository and assigns it a version number. The reputation of a tool's specific version is based on how extensively it has been evaluated and tested, the extent of its adoption by practitioners, and whether it has a history of generating output with a security flaw. The rationale behind taking a snapshot in time of a particular version of a tool is that later versions of the tool might be flawed. For example, because automatic updates can introduce system flaws, it is often more secure to delay upgrades until the new version has been thoroughly tested.

A similar strategy is needed for life-cycle protection of hardware to provide accountability in the development process, including control of the development environment and tools, as well as trusted delivery of the chips from the factory. Both cores and tools should be placed under a configuration management system. Ideally, it should be possible to verify that the output of each stage of the design flow faithfully implements the input to that stage through the use of formal methods such as model checking. However, such static analysis suffers from the problem of false positives, and a complete security analysis of a complex tool chain is not possible with current technology, owing to the exponential explosion in the number of states that must be checked.

An alternative is to build a custom set of trusted tools for security-critical hardware. This tool chain would implement a subset of the commercial tool chain's optimization functions, and the resulting designs would likely sacrifice some measure of performance for additional security. Existing research on trusted compilers could be exploited to minimize the development effort. A critical function of life-cycle protection is to ensure that the output (and transitively the input) does not contain malicious artifacts [8]. Testing can also help ensure fidelity to requirements and common failure modes. For example, it should consider the location of the system's entry points, its dependencies, and its behavior during failure.

Life-cycle management also includes delivery and maintenance. Trusted delivery ensures that the FPGA has not been tampered with from manufacturing to customer delivery. For an FPGA, maintenance includes updates to the configuration, which can occur remotely on some FPGAs. For example, a vendor might release an improved version of the bitstream that fixes bugs in the earlier version.

Secure architecture

Programmability of FPGAs is a major advantage for providing on-chip security, but this malleability introduces unique vulnerabilities. Industry is reluctant to add security features to ASICs, because the edit-compile-run cycle cost can be prohibitive. FPGAs, on the other hand, provide the opportunity to incorporate self-protective security mechanisms at a far lower cost.

Memory protection. One example of a runtime security mechanism we can build into reconfigurable hardware is memory protection. On most embedded devices, memory is flat and unprotected. A reference monitor, a well-understood concept from computer security, can enforce a policy that specifies the legal sharing of memory (and other computing resources) among cores on a chip [9]. A reference monitor is an access control mechanism that possesses three properties: it is self-protecting, its enforcement mechanisms cannot be bypassed, and it can be subjected to analysis that ensures its correctness and completeness [10]. Reference monitors are useful in composing systems because they are small and do not require any knowledge of a core's inner workings.

Spatial isolation. Although synthesis tools can generate designs in which the cores are intertwined, increasing the possibility of interference, FPGAs provide a powerful means of isolation. Because applications are mapped spatially to the device, we can isolate computation resources such as cores in space by controlling the layout function [11], as Figure 3 shows. A side benefit of the use of physical isolation of components is that it more cleanly modularizes the system. Checks for the design's correctness are easier because all parts of the chip that are not relevant to the component under test can be masked.

McLean and Moore provide similar concurrent work [12]. Although they do not provide extensive details, they appear to be using a similar technique to isolate regions of the chip by placing a buffer between them, which they call a fence.

Figure 3. Sample layout for a design with four cores and a moat size of two. There are several different drawbridge configurations between the cores. (IOB: I/O block; CLB: configurable logic block.)

Tags. As opposed to explicitly monitoring attempts to access memory, the ability to track information and its transformation as it flows through a system is a useful primitive for composing secure systems. A tag is metadata that can be attached to individual pieces of system data. Tags can be used as security labels, and, thanks to their flexibility, they can tag data in an FPGA at different granularities.

For example, a tag can be associated with an individual bit, byte, word, or larger data chunk. Once this data is tagged, static analysis can be used to test that tags are tightly bound to data and are immutable within a given program. Although techniques currently exist to enhance general-purpose processors with tags such that only the most privileged software level can add or change a tag, automatic methods of adding tags to other types of cores are needed for tags to be useful as a runtime protection mechanism. Early experiments in tagged architectures should be carefully assessed to avoid previous pitfalls [13].

Secure communication. To get work done, cores must communicate with one another and therefore cannot be completely isolated. Current cores can communicate via shared memory, direct connections, or a shared bus. (RF communication might be possible in the future.) For communication via shared memory, the reference monitor can enforce the security policy as a function of its ability to control access to memory in general. For communication via direct connections, static analysis can verify that only specified direct connections are permitted, as we discussed earlier. Such interconnect-tracing

techniques can be applied at both the device and board levels.

Communication via a shared bus must address several threats. If traffic sent over the bus is encrypted, snooping is not a problem. To address covert channels resulting from bus contention, every core can be given a fixed slice of time to use the bus. Although various optimizations have been proposed, this type of multiplexing is clearly inefficient, because a core's needs can change over time.

Future work

Embedded devices perform a critical role in both the commercial and military sectors. Increasingly more functionality is being packed onto a single device to realize the cost savings of increased integration, yet researchers have yet to address on-chip security. FPGAs can have multiple cores on the same device operating at different trust levels. Because FPGAs are at the heart of many embedded devices, new efficient security primitives are needed. We see opportunities for future work in multicore systems, further integration of our security primitives, reconfigurable updates, and both covert and side channels.

Multicore systems

As computing changes from a general-purpose uniprocessor model with disk and virtual memory to a model in which embedded devices such as cell phones perform more computing tasks, a new approach to system development is needed. Most future systems will likely be chip multiprocessor systems running multiple threads, SoCs with multiple special-purpose cores on a single ASIC, or a compromise between those two extremes on an FPGA. When the number of cores becomes large, communication between the cores over a single shared bus is impractical, and the use of direct connections (such as grid or mesh communication) becomes necessary. New techniques are necessary to mediate secure, efficient communication of multiple cores on a single chip. System design under this new model will require changes to the way in which implementations are developed to ensure performance, correctness, and security.

Further integration of security primitives

Our recent work has shown that by physically locating computations in different chip regions, and by validating the hardware boundaries between these regions, an efficient new mechanism for ensuring isolation is possible [11]. However, if a computing resource, such as an encryption unit, must be shared among security domains, then a temporal scheme (possibly based on data tagging) might be required. We are pursuing development of formal and practical methods that cooperatively apply spatial schemes, temporal schemes, and tagging to a design in a way that meets security requirements and minimizes overhead.

Reconfigurable updates

Many modern FPGAs can dynamically change part of their configuration at runtime. Partial reconfiguration makes it possible to update the circuitry in a fielded device to patch errors in the design, provide a more efficient version, change algorithm parameters, or add new data sets (such as Snort IDS rules). The avionics industry, for example, would like the ability to update systems in flight as a fault tolerance measure. Also, some supercomputers have partially reconfigurable coprocessors.

A dynamic system is more complicated and difficult to build than a static one, and this is true of the security of such a system as well. In many cases, secure state must be preserved across updates. A hot-swappable system is especially challenging because state must be transferred from the executing core to the updated core. In addition, data from the executing core must be mapped to the updated core, which might need to store the same data in a completely different location than the previous version did.

In fact, the practical difficulties of implementing systems that employ partial reconfiguration have prevented its widespread use. The costs of dealing with these complexities are rarely worth the savings in on-chip area, which doubles every year anyway. However, practitioners should understand the security implications of partial reconfiguration as it applies to dynamic updates. For example, an updated core might have different security properties than the previous core. We are investigating the requirements for partial reconfiguration within our security architecture.

Channels and information leakage

Even if cores are spatially isolated, they might still be able to communicate through a covert channel. In a covert-channel attack, a high core leaks classified data to a low core that is not authorized to access

classified data directly. The high source is also constrained by rules that prevent it from writing directly to the low destination. A covert channel is typically exploited by encoding data into a shared resource's visible state, such as disk usage, error conditions, or processor activity. Classical covert-channel analysis involves enumerating all shared resources and metadata on chip, identifying the shared points, determining if the shared resource is exploitable, determining the bandwidth of the covert channel, and determining whether remedial action can be taken.

A side channel is slightly different from a covert channel in that the recipient is an entity outside the system that observes benign processing and can infer secrets from those observations. An example of a side-channel attack is the power-analysis attack, in which the power consumption of a cryptocore is externally observed to extract the cryptographic keys. Finally, there are overt illegal channels (such as direct channels or trap doors). An example of a direct channel is a system that lacks memory protection. A core can transmit data to another core simply by copying it into a memory buffer.

Clearly, new techniques are necessary to address the problem of covert, side, and direct channels in embedded systems. In theory, a design could be statically analyzed to detect the presence of possible unintended information flows, although the scalability of this approach runs into computability limits. We are continuing to investigate solutions to this problem.

hardware security for granted. Clearly, embedded design practitioners must become acquainted with these problems and with related new
