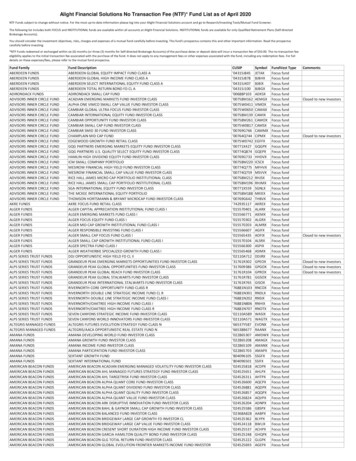

Transcription

Hadoop on Beacon:An IntroductionJunqi Yin and Pragnesh PatelNICS Seminar, Feb. 20, 2015

Outline Intro to Hadoop Hadoop architecture on Beacon WordCount example – “Hello World” in Hadoop HiBench sort example – Intel Hadoop benchmark Hive example – Data warehouse based on Hadoop2

Intro to Hadoop Hadoop, a Apacha Software Foundation project, isdesigned to efficiently process large volumes ofinformation by connecting many commodity computerstogether to work in parallel. Hadoop mainly offers two things:1. HDFS (Hadoop Distributed File System)2. MapReduce framework3

Intro to Hadoop HDFS is structuredsimilarly to a regularUnix filesystem exceptthat data storage isdistributed acrossseveral machines HDFS contains:Datanode – where data actually storesNamenode – controls meta dataSecondary Namenode – keeps edit logs, filesystem image, etc4

Intro to Hadoop A given file is brokendown into blocks( default 64MB), thenblocks are replicatedacross cluster (default 3) Optimized for1. Throughput2. Scalability3. Fault tolerant5

Intro to Hadoop MapReduceframework:1. APIs for writingMapReduceprograms2. Services formanaging theexecution ofthese programs Mapper perform a transformation Reducer perform an aggregation6

Intro to Hadoop MapReduce 2.0(YARN):1. Resourcemanagerincludes jobscheduler andapplicationmanager2. NodeManager is the per-machine agent who isresponsible for containers and reports to ResourceManager7

Intro to Hadoop Why Hadoop1. Cost effective: tie smaller and more reasonably pricedmachines together into a single compute cluster2. Flat scalability: orders of magnitude of growth can bemanaged with little re-work required3. Reliability: fault tolerance in HDFS and MapReducejobs8

NamenodeNodemanagerSSDFDRInfiniband

BeaconHadoopConfigura4on HADOOP CONF DIR: core-site.xml, hdfs-site.xml,yarn-site.xml, mapred-site.xml By default, generated from the template files at HADOOP HOME/etc/hadoop/template/optimized User can customize configuration byexport HADOOP CONF DIR /path/to/your/configurationafter loading the Hadoop module

BeaconHadoopConfigura4on Some important ://beaconxxx-ib0:8020Other than hdfs, e.g. file:/// path/to/ramdisk or lustreio.file.buffer.size131072Size of read/write file:///tmp/xxx/hadoop/hdfs/nnPath on the local filesystem to /xxx/hadoop/hdfs/dnPath on the local filesystem to DataNodedirectory.dfs.blocksize134217728HDFS blocksize of 128MB for large file-systems.dfs.namenode.handler.count100More NameNode server threads to handle largenumber of DataNodes.dfs.replication1Number of duplicates

BeaconHadoopConfigura4on Some important inimumallocation-mb2048Minimum limit of memory to allocate to 96The maximum allocation for every containerrequest at the RM, in lpaths on the local filesystem where intermediatedata is gspaths on the local filesystem where logs mbAmount of physical memory, in MB, that can beallocated for umber of CPU cores that can be allocated forcontainers

BeaconHadoopConfigura4on Some important ework.nameyarnExecution framework set to Hadoop YARNmapreduce.map.memory.mb2048Larger resource limit for mapsmapreduce.map.java.opts-Xmx1638mLarger heap-size for child jvms of mapsmapreduce.reduce.memory.4096mbLarger resource limit for reduces.mapreduce.task.io.sort.mb1066Higher memory-limit while sorting data duces64Control when reduce jobs startThe default number of map tasks per jobThe default number of reduce tasks per job

BeaconHadoopUsage[jqyin@beacon-login2 ] module help hadoop/2.5.0----------- Module Specific Help for 'hadoop/2.5.0' --------------Sets up environment to use Hadoop 2.5.0.Usage:module load hadoop/2.5.0cluster start (setup hadoop cluster with at least 3 nodes)copy your data to HDFSrun hadoop application with your datacopy results back from HDFScluster stop (shutdown hadoop IMPORTANT:By default, HDFS is set up on local SSD,the data on which will be purged once job exits******************************************

WordCount example: “hello world” in Hadoop Source code (WordCount.java) at /lustre/medusa/jqyin/hadoop/WordCount.java Steps to run on Beacon:1. qsub -I -l nodes 3 # request a interactive job2. module load hadoop/2.5.0 #load the module3. cluster start # launch hadoop services15

WordCount example: “hello world” in Hadoop Steps to run on Beacon:4. hadoop com.sun.tools.javac.Main WordCount.java# compile the java code5. jar cf wc.jar WordCount*.class # create jar file6. hadoop fs -mkdir -p /user/wordcount/input# create input folder on hdfs7. cat EOF file0Hello World Bye World16EOF

WordCount example: “hello world” in Hadoop Steps to run on Beacon:7. cat EOF file1Hello Hadoop Goodbye HadoopEOF8. hadoop fs -put file* /user/wordcount/input# copy files to hdfs9. hadoop jar wc.jar WordCount /user/wordcount/input /user/wordcount/output# run the application17

WordCount example: “hello world” in Hadoop Check result:hadoop fs -cat /user/wordcount/output/part-r-*Bye 1Goodbye 1Hadoop 2Hello 2World 218

WordCount example:“hello world” in Hadoop Mapperpublic void map(Object key, Text value, Context context ) throwsIOException, InterruptedException {StringTokenizer itr new StringTokenizer(value.toString());while (itr.hasMoreTokens()) {word.set(itr.nextToken());context.write(word, one);}} Output: file0 Hello, 1 World,1 Bye,1 World,1 file1 Hello,1 Hadoop,1 Goodbye, 1 Hadoop,1 19

WordCount example:“hello world” in Hadoop Reducerpublic void reduce(Text key, Iterable IntWritable values,Context context ) throws IOException, InterruptedException {int sum 0;for (IntWritable val : values) {sum val.get();}result.set(sum);context.write(key, result);} Output: Bye, 1 Goodbye, 1 Hadoop, 2 Hello, 2 World, 2 20

S#PBS-A-j-l-lyour-account-numberoenodes 6walltime 1:00:00#load the Hadoop modulemodule load hadoop/2.5.0#start the Hadoop cluster with one name node,#one secondary name node plus resource manager and job history #manager,four data nodes plus node managers#this command will also setup the directories on HDFS for Hadoop #and Hivecluster start#Run Hadoop application: HiBench sort HADOOP HOME/HiBench/HiBench.sh sortcluster stop

-j-l-lyour-account-numberoenodes 6walltime 1:00:00#load the Hadoop modulemodule load hadoop/2.5.0cluster start#Run Hadoop application: Hivewget 00k.zipunzip ml-100k.zipcd ml-100kcat EOF hive-script.sqlCREATE TABLE u data (userid INT,movieid INT,rating INT,unixtime STRING)ROW FORMAT DELIMITEDFIELDS TERMINATED BY '\t'STORED AS TEXTFILE;LOAD DATA LOCAL INPATH './u.data'OVERWRITE INTO TABLE u data;SELECT COUNT(*) FROM u data;EOFhive -f hive-script.sql#stop hadoop clustercluster stop

Reference utorial.html https://developer.yahoo.com/hadoop/tutorial/ RHEL Big Data HDPReference Architechure FINAL.pdf23

Running'Hadoop'on'Beacon #PBS -A your-account-number #PBS -j oe #PBS -l nodes 6 #PBS -l walltime 1:00:00 #load the Hadoop module module load hadoop/2.5.0 #start the Hadoop cluster with one name node, #one secondary name node plus resource manager and job history #manager, four data nodes plus node managers