Transcription

TWhitepapertestingBigData usinghadoop EcoSystemTrusted partner for your Digital Journey

What is Big Data?Big data is the term for a collection of largedatasets that cannot be processed usingtraditional computing techniques. EnterpriseSystems generate huge amount of data fromTerabytes to and even Petabytes of information. Big data is not merely a data, rather it hasbecome a complete subject, which involvesvarious tools, techniques and frameworks.Specifically, Big Data relates to data creation,storage, retrieval and analysis that is remarkable in terms of volume, velocity, and variety.Table of contents03What is Big Data?03Hadoop and Big Data03Hadoop Explained04Hadoop Architecture09How Atos Is Using Hadoop12Testing AccomplishmentsHadoop and Big Data15Conclusion15AppendicesHadoop is one of the tools designed tohandle big data. Hadoop and other softwareproducts work to interpret or parse theresults of big data searches through specific proprietary algorithms and methods.05Hadoop Eco System TestingHadoop is an open-source program under theApache license that is maintained by a globalcommunity of users. Apache Hadoop is 100%open source, and pioneered a fundamentallynew way of storing and processing data. Insteadof relying on expensive, proprietary hardwareand different systems to store and process data.08Testing Hadoop in CloudEnvironmentHadoop Explained .Apache Hadoop runs on a cluster of industry-standard servers configured withdirect-attached storage. Using Hadoop,you can store petabytes of data reliably ontens of thousands of servers while scalingperformance cost-effectively by merelyadding inexpensive nodes to the cluster.Author profilePadma Samvaba Panda is a test manager of Atos testing Practice. He has 10 years of IT experience encompassing in softwarequality control, testing, requirement analysis and professional services. During his diversified career, He has delivered multifacetedsoftware projects in a wide array of domains in specialized testingareas like (big data, crm, erp, data migration). He is responsible forthe quality and testing processes and strategizing tools for big-data implementations. He is a CSM (Certified Scrum Master) andISTQB-certified. He can be reached at padma.panda@atos.netTesting – BigData Using Hadoop Eco Systemversion 1.023The Apache Hadoop platform also includes theHadoop Distributed File System (HDFS), whichis designed for scalability and fault-tolerance.HDFS stores large files by dividing them intoblocks (usually 64 or 128 MB) and replicating the blocks on three or more servers.HDFS provides APIs for MapReduce applications to read and write data in parallel.Capacity and performance can be scaled byadding Data Nodes, and a single NameNodemechanism manages data placement andmonitors server availability. HDFS clustersin production use today reliably hold petabytes of data on thousands of nodes.Testing – BigData Using Hadoop Eco System

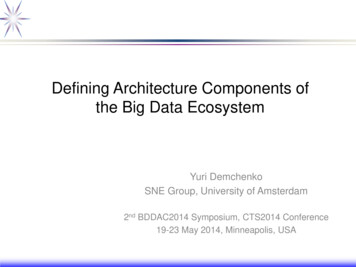

Hadoop Eco SystemTestingHadoop ArchitectureApache Hadoop is not actually a single product but instead a collection of several components, below screen provides the details ofHadoop Ecosystem.Test ApproachComponentsAs Google, Facebook, Twitter and other companies extended their services to web-scale, theamount of data they collected routinely from user interactions online would have overwhelmedthe capabilities of traditional IT architectures. So they built their own, they released code formany of the components into open source. Of these components, Apache Hadoop has rapidlyemerged as the de facto standard for managing large volumes of unstructured data. ApacheHadoop is an open source distributed software platform for storing and processing data. Theframework shuffles and sorts outputs of the map tasks, sending the intermediate (key, value)pairs to the reduce tasks, which group them into final results. MapReduce uses JobTracker andTaskTracker mechanisms to schedule tasks, monitor them, and restart any that fail.Testing – BigData Using Hadoop Eco System45ElementsComponentsDistributed FilesystemApache HDFS, CEPH File systemDistributed ProgrammingMapReduce,Pig,SparkNoSQL DatabasesCassandra, Apache HBASE, MongoDBSQL-On-HadoopApache Hive, Cloudera ImpalaData IngestionApache Flume, Apache SqoopService ProgrammingApache ZookeeperSchedulingApache OozieMachine LearningMlib,MahoutBenchmarkingApache Hadoop BenchmarkingSecurityApache Ranger,Apache KnoxSystem DeploymentApache Amabari ,Cloudera HueApplicationsPivotalR,Apache NutchDevelopment FrameworksJumpbuneBI ToolsBIRTETLTalendTesting – BigData Using Hadoop Eco System

Hadoop testers have to learn the components of the Hadoop eco system from thescratch. Till the time, the market evolves andfully automated testing tools are availablefor Hadoop validation, the tester does nothave any other option but to acquire thesame skill set as the Hadoop developer inthe context of leveraging the technologies.When it comes to validation on the map-reduceprocess stage, it definitely helps if the tester hasgood experience on programming languages.The reason is because unlike SQL wherequeries can be constructed to work through thedata MapReduce framework transforms a list ofkey-value pairs into a list of values. A good unittesting framework like Junit or PyUnit can helpvalidate the individual parts of the MapReducejob but they do not test them as a whole.Building a test automation framework using aprogramming language like Java can help here.The automation framework can focus onthe bigger picture pertaining to MapReducejobs while encompassing the unit tests aswell. Setting up the automation frameworkto a continuous integration server like Jenkins can be even more helpful. However,building the right framework for big dataapplications relies on how the test environment is setup as the processing happens ina distributed manner here. There could be acluster of machines on the QA server wheretesting of MapReduce jobs should happen.Challenges andBest PracticesIn traditional approach, there are several challenges in terms of validation of data traversaland load testing. Hadoop involves distributedNoSQL databases instance. With the combination of Talend (open source Big data tool),we can explore list of big data tasks work flow.Following this, you can develop a framework tovalidate and verify the workflow, tasks and taskscomplete. You can also identify the testing toolto be used for this operation. Test automationcan be a good approach in testing big dataimplementations. Identifying the requirementsand building a robust automation frameworkcan help in doing comprehensive testing.However, a lot would depend on how the skillsof the tester and how the big data environmentis setup. In addition to functional testing of bigdata applications using approaches such astest automation, given the large size of datathere are definitely needs for performanceand load testing in big data implementations.Some of the Important Hadoop Component Level Test ApproachTesting TypesTesting in Hadoop Eco System canbe categorized as below:MapReduce»» Core components testing(HDFS, MapReduce)»» Data Ingestion testing (Sqoop,Flume)»» Essential components testing(Hive, Cassandra)In the first stage which is the pre-Hadoop process validation, major testing activities includecomparing input file and source systems datato ensure extraction has happened correctlyand confirm that files are loaded correctly intothe HDFS (Hadoop Distributed File System).There is a lot of unstructured or semi structureddata at this stage. The next stage in line is themap-reduce process which involves running themap-reduce programs to process the incoming data from different sources. The key areasof testing in this stage include business logicvalidation on every node and then validatingthem after running against multiple nodes,making sure that the map reduce program /process is working correctly and key valuepairs are generated correctly and validatingthe data post the map reduce process. Thelast step in the map reduce process stage isto make sure that the output data files aregenerated correctly and are in the right format.The third or final stage is the output validationphase. The data output files are generatedand ready to be moved to an EDW (EnterpriseData Warehouse) or any other system basedon the requirement. Here, the tester needs toensure that the transformation rules are appliedcorrectly, check the data load in the targetsystem including data integrity and confirmthat there is no data corruption by comparingthe target data with the HDFS file system data.In functional testing with Hadoop, testers needto check data files are correctly processed andloaded in database, and after data processedthe output report should generated properlyalso need to check the business logic on astandalone node and then on multiple nodes.Load in target system and also validatingaggregation of data and data integrity.Programming Hadoop at the MapReducelevel means working with the Java APIs andmanually loading data files into HDFS. TestingMapReduce requires some skills in white-boxtesting. QA teams need to validate whethertransformation and aggregation are handledcorrectly by the MapReduce code. Testersneed to begin thinking as developers.YARNIt is a cluster and resource managementtechnology. YARN enables Hadoop clusters torun interactive querying and streaming dataapplications simultaneously with MapReducebatch jobs. Testing YARN involves validatingwhether MapReduce jobs are getting distributed across all the data nodes in the cluster.Apache HueHadoop has provided a web interface to makeit easy to work with Hadoop data. It providesa centralized apoint of access for componentslike Hive, Oozie, HBase, and HDFS.From Testingpoint of view it involves checking whethera user is able to work with all the aforementioned components after logging in to Hue.Apache SparkApache Spark is an open-source clustercomputing framework originally developed in the AMPLab at UC Berkeley.Spark is an in-memory data processing framework where data divided into smaller RDD.Sparkperformance is up to 100 times faster thanhadoop mapreduce for some applications. FromQA standpoint it involves validating whetherspark worker nodes are working and processingthe streaming data supplied by the spark jobrunning in the namenode.Since it is integratedwith other nodes (E.g. Cassandra) it shouldhave appropriate failure handling capability.Performanace is also an important benchmarkof a spark job as it is used as an enhancement over existing MapReduce Operation.Jasper ReportIt is integrated with Data Lake layer to fetch therequired data (Hive). Report designed usingJaspersoft Studio are deployed on JasperReport Server. Analytical and transactionaldata coming from Hive database is used byJasper Report Designer to generate complexreports. The Testing comprises the following:Jaspersoft Studio is installed properly.Testing – BigData Using Hadoop Eco System67Hive is properly integrated with Jasper Studioand Jasper Report Server via a JDBC connection.Reports are exported in specified format correctly.Auto Complete Login Form, Password ExpirationDays and allow User Password Change criteria.User not having admin role cannot create newUser and new Role.Apache CassandraIt is a non-relational, distributed, open-sourceand horizontally scalable database. A NoSQLdatabase tester will need to acquire knowledgeof CQL (Cassandra Query Language) in orderto perform quality testing. It is independent ofspecific application or schema and can operateon a variety of platforms and operating systems.QA areas for Cassandra include data typechecks, count checks, CRUD operations checks,timestamp and its format checks, checks relatedto cluster failure handling and data integrityand redundancy checks on task failure.Flume and SqoopBig data is equipped with data ingestion toolssuch as Flume and Sqoop, which can beused to move data into and out of Hadoop.Instead of writing a stand-alone applicationto move data to HDFS, these tools can beconsidered for ingesting data, say for example from RDBMS since they offer most of thecommon functions.General QA checkpointsinclude successfully generating streamingdata from Web sources using Flume, checksover data propagation from conventional datastorages into Hive and HBase, and vice versa.TalendTalend is an ETL tool that simplifies the integration of big data without having to write ormaintain complicated Apache Hadoop code.Enable existing developers to start working withHadoop and NoSQL databases. Using Talenddata can be transferred between Cassandra,HDFS and Hive.Validating talend activitiesinvolve data loading is happening as perbusiness rules,counts match, it appropriatelyrejects, replaces with default values and reportsinvalid data. Time taken and performance is alsoimportant while validating the above scenarios.KNIME TestingKonstanz Information Miner, is an opensource data analytics, reporting and integration platform. We have integrated andtested KNIME with various components(Ex: R, Hive, Jasper Report Server).Testing includes:»» Proper KNIME installation and configuration.(KNIME R) integration with Hive.»» Testing KNIME analytical results using R scripts.»» Checking reports that are exported fromKNIME analytical results in specified formatfrom Hive database in Jasper Report.AmbariAll administrative tasks (e.g.: configuration, start/stop service) are done from Ambari Web.Tester need to check that Ambari is integrated with all other applications. E.g.: Nagios,Sqoop, WebHDFS, Pig, Hive, YARN etc.Apache HiveHive enables Hadoop to operate as a datawarehouse. It superimposes structure on datain HDFS, and then permits queries over thedata using a familiar SQL-like syntax. SinceHive is recommended for analysis of terabytesof data, the volume and velocity of big dataare extensively covered in Hive testing froma functional standpoint, Hive testing requirestester to know HQL (Hive Query Language).It incorporates validation of successful setupof the Hive meta-store database; data integritybetween HDFS vs. Hive and Hive vs. MySQL(meta-store); correctness of the query anddata transformation logic, checks related tonumber of MapReduce jobs triggered foreach business logic, export / import of datafrom / toHive, data integrity and redundancy checks when MapReduce jobs fail.Apache OozieIt’s a really nice scalable and reliable solutionin Hadoop ecosystem for job scheduling.Both Map Reduce and Hive, Pig scripts canbe scheduled along with the job duration. QAactivities involve validating an Oozie workflow. Validating the execution of a workflowjob based on user defined timeline.Nagios testingNagios enables to perform health checks of Hadoop & other components and checks whetherthe platform is running according to normalbehavior. We need to configure all the servicesin Nagios, so that we can check the healthand performance from Nagios web portal.- The health check includes:. If a process is running. If a service is running. If the service is accepting connections. Storage capacity on data nodes.Testing – BigData Using Hadoop Eco System

Testing Hadoop inCloud EnvironmentHow Atos IsUsing HadoopBefore Testing Hadoop in Cloud:Information Culture is changing Leading to increased Volume,Variety & Velocity1. Document the high level cloud testinfrastructure (Disk space, RAM required for each node, etc.)2. Identify the cloud infrastructureservice provider3. Document the data security plan4. Document high level test strategy, testingrelease cycles, testing types, volume of dataprocessed by Hadoop, third party tools.Atos and Big DataService Overview, from critical IT to Business supportSTRATEGY &TRANSFORMATIONTechnology principlesBig data in the cloudTypesCloud ServicesValue added services:brokerage, integration, aggregation, securityBig Data»» Application, data, computing and storage»» Fully used or hybrid cloud»» Public or on-premise»» Multi-tennant or single -tennantAwareness andDiscovery WorkshopOpportunity AssessmentReadiness AssessmentADVISORYComputingas a ServiceSoftware as a ServiceInfrastructure as aServiceExternalCloudServicesProvidersProof of Value/ PoCCharacheristicsBig Data Visualization»» Scalability»» Elasticity»» Resource pooling»» Self service»» Pay as you goBig Data AnalyticsBig Data PatternsInsight ServicesBig Data Strategyand DesignBusiness processmodeling andreengineeringBig data enhancementplanningBig Data ImplementationArchitecture ServicesBIG DATAPLATFORMIntegration ServicesStorage ServicesHosting ServicesCloudINSIGHITSBroader ImpactThe main key features that leverage Bigdata testframework in cloud are:We use a four-stage framework to deliver our Big Data Analytics solutions and services»» On demand Hadoop testbed to test Big data»» Virtualized application / service availability that need to be tested»» Virtualized testing tool suiteTalend and jmeter»» Managed test life cycle in Cloud»» Different types of Big Datatest metrics in cloud»» Operations like import / export configurations and test artifacts in / out of the testbed.Testing – BigData Using Hadoop Eco System89AdvisoryStrategy and transformationBig Data platformInsightsWorkshops – we deliver successfulworkshops for clients across all marketsProof of Concept / Proof of Value –practical and ready PoC/PoV scenarioscan be deployed.Big Data strategy and design; howshould your business approach big dataBusiness process modeling and reengineering; what implications does bigdata have on your business.Big Data implementation such as IDAArchitecture, Integration, Storage andHosting Services, with Canopy.Big Data Visualization, Analytics,Patterns and Insight Services.Testing – BigData Using Hadoop Eco System

Economics ofBig DataData examples1Structured dataSemi-structured dataUnstructured data32New insights andbusiness opportunitiesdue to three key factorsCloud-basedBI & AnalyticsAnalytics is the keyenabler of change andthe cloud helps myMachine-generatedImpact felt in formationFinancialGovernmentHealthcareOperational& decisionsupportTransportManufacturingInternet ofThingsAgility & CostOptimizationTelcoModernization,C&H of DWH, BI& E(IDCR)MEnvironmentsEnergyDriving the need toanalyse and harvestCustomer DataOpen dataContextual MobilityHuman-generatedCitizen DataProvision of theright services, atthe rightmoment to theright personStructured dataSemi-structured dataUnstructured data»» Machine-generated: Input data, click-streamdata, gaming-related data, sensor data»» Human-generated: Web log data [! weblog], point-of-sale data (when something is bought), financial data»» Machine-generated: Electronic data interchange (EDI), SWIFT, XML, RSS feeds, sensordata»» Human-generated: Emails, spreadsheets,incident tickets, CRM records»» Machine-generated: Satellite images, scientific data, photographs and video, radar orsonar data»» Human-generated: Internal company textdata, social media, mobile data, websitecontentAtos has a clear vision of the importance of BigData as a Business Success factor. This sectiongives a single, overall view of how we see theanalytics marketplace and how we operatewithin it.Atos believes that analytics is the key to gainingtrue business insight and to achieving competitive advantage. Organizations must turn onanalytics everywhere to realize this understanding and apply it effectively.We have a three level approach on analytics:Enterprise Analytics: Better decision-making,enabled by customized business intelligencesolutionsVertical & Industry-specific Analytics: Processoptimization and improved operational efficiency through automated use of real-time data,intelligence, monitoring & analytics.The top line of the graph shows the principalinputs, the flows of real-time or near real-timedata that provide raw material for analytics.The three connected circles show the mainareas of focus and activity for Atos:Digital transformation is all about digitizing andoptimizin

performance cost-effectively by merely adding inexpensive nodes to the cluster. Table of contents 03 What is Big Data? 03 Hadoop and Big Data 03 Hadoop Explained 04 Hadoop Architecture 05 Hadoop Eco System Testing 08 Testing Hadoop in Cloud Environment Author profile Padma Samvaba Panda is a