Transcription

Optimal Demand Response Using Device BasedReinforcement LearningZheng Wen 1Joint Work with Hamid Reza Maei1and Dan O’Neill11 Departmentof Electrical EngineeringStanford Universityzhengwen@stanford.eduJune 12, 2012Wen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 20121 / 23

Outline1Motivation2Device Based MDP ModelRL-EMS and Consumer RequestsDis-utility Function and Performance MetricDevice Based MDP Model3RL-EMS Algorithm4Simulation Result5ConclusionWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 20122 / 23

MotivationDemand response (DR) systems dynamically adjust electrical demandin response to changing electricity prices (or other grid signals)By suitably adjusting electricity prices, load can be shifted from “peak”periods to other periodsDR is a key component in smart gridDR can potentiallyImprove operational efficiency and capital efficiencyReduce harmful emissions and risk of outagesBetter match energy demand with unforecasted changes in electricalenergy generationWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 20123 / 23

Motivation (Cont.)DR has been extensively investigated for larger energy usersResidential and small building DR offers similar potential benefitsHowever, “decision fatigue” prevents residential consumers to respondto real-time electricity price (see O’Neill et al. 2010)We can’t stay at home every day, watching the real-time electricityprice and deciding when to use each deviceFully-automated Energy Management Systems (EMS) are a necessaryprerequisite to DR in residential and small building settingsWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 20124 / 23

Energy Management SystemsEMS makes the DR decisions for the consumerCritical to a successful DR EMS approach is learning theconsequences of shifting energy consumption on consumersatisfaction, cost and future energy behaviorO’Neill et al. 2010 has proposed a residential EMS algorithm basedon reinforcement learning (RL), called CAES algorithmIn this project, we extend the work of O’Neill et al. 2010 and proposea new RL-based energy management algorithm called RL-EMSalgorithmWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 20125 / 23

RL-EMS SystemWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 20126 / 23

What is New?Compared with CAES, RL-EMS is new in the following aspects:1234RL-EMS is allowed to perform speculative jobsA consumer request’s target time can be different from its request timeAn unsatisfied consumer request can be cancelled by the consumerRL-EMS learns the dis-satisfaction of the consumer on completed jobsand cancelled delaysNew Insight: A device-centric view of the problemWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 20127 / 23

Outline1Motivation2Device Based MDP ModelRL-EMS and Consumer RequestsDis-utility Function and Performance MetricDevice Based MDP Model3RL-EMS Algorithm4Simulation Result5ConclusionWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 20128 / 23

RL-EMS and Consumer RequestsOur proposed RL-EMS performs the following functions:12It receives requests from the consumer, and then schedules when tofulfill the received requests (requested jobs)If a device is idle, RL-EMS could speculatively power on that device(speculative job)Each consumer request is a four-tuple J (requested device n, requested time τr , target time τg , priority g )Jobs are standardized and can be completed in one time stepRequire τr τg τr W (n), where W (n) is a knowndevice-dependent time windowAt each time, there is at most one unsatisfied request for each deviceWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 20129 / 23

Consumer Preference and Dis-utiltiy FunctionIn economics, utility function is used to model the preference ofconsumersIn this project, we work with the negative of utility function, calleddis-utility function, which models the dissatisfaction of the consumerAssumption 1: the dis-utility of the consumer at time t the sum ofhis evaluations on jobs completed/cancelled at time tDis-utility is additive over jobsAt each time there is at most one job completed/cancelled at a device,hence, dis-utility is also additive over devicesWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201210 / 23

Performance MetricWe assume that the “instantaneous cost” at time t iselectricity bill paid at time t dis-utility at time tRL-EMS aims to minimize the expected infinite-horizon discountedcostThe instantaneous cost is additive over devicesWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201211 / 23

Device Based MDP ModelAssumption 2: Both the electricity price and the consumer requeststo the RL-EMS follow exogenous Markov chains. Furthermore, weassume thatElectricity price process is independent of the consumer requestsprocessConsumer requests to different devices are independentUnder Assumption 1 and 2, RL-EMS aims to solve an infinite-horizondiscounted MDP, and this MDP decomposes over devicesHence, we can derive an optimal scheduling policy for a single deviceby solving the associated device-based MDPWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201212 / 23

Probability Transition ModelElectricity price follows an exogenous Markov chainThe timeline for a device can be divided into “episodes”Whenever a device completes a job, or an unsatisfied request iscancelled, the current episode terminatesAt the next time step, the device “regenerates” its state according to afixed distributionWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201213 / 23

Probability Transition Model (Cont.)hi S Pmax (2W 1)gmax Ŵ 1Wen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201214 / 23

DP Solution and Motivation for RLIf RL-EMS knows12The transition model of the device based MDPThe dis-utility function of the consumerthen the optimal scheduling strategy can be derived based onfinite-horizon dynamic programming (DP)In fact, it is an optimal stopping problem in each episodeHowever, in practice, RL-EMS needs to learn the transition model anddis-utility function while interacting with the consumer and theelectricity priceRL is the approach in this caseWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201215 / 23

Outline1Motivation2Device Based MDP ModelRL-EMS and Consumer RequestsDis-utility Function and Performance MetricDevice Based MDP Model3RL-EMS Algorithm4Simulation Result5ConclusionWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201216 / 23

RL-EMS AlgorithmIn the classical RL literature, the environment consists of an unknownMDP, and an agent learns how to make decisions while interactingwith the environmentIn this case, the “agent” is the RL-EMS and the “environment”includes both the electricity price and the consumerIn this project, we use Q(λ) algorithm, which combines classicalQ-learning with (1) Eligibility traces and (2) Importance samplingWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201217 / 23

Q(λ) AlgorithmInitialize Q0 arbitrarily, set eligibility parameter λ [0, 1].Repeat for each episode:Choose a small constant step-size β 0 for each episode.Initialize eligibility trace vector et 1 0.Take a(t) from x(t) according to µb (e.g. -softmin policy), and arrive atx(t 1).for each time step in an episode doObserve sample, (x(t), a(t), x(t 1), Φt ) at time step t, where Φt is theinstantaneous cost.defδt Φ (x(t), a(t), x(t 1)) α mina0 Qt (x(t 1), a0 ) Qt (x(t), a(t)).1; otherwise ρt 0.If a(t) argmina Qt (x(t), a), then ρt µb (a(t) x(t))et ψt ρt αλet 1 , where ψt is a binary vector whose only nonzeroelement is (x(t), a(t)).Qt 1 Qt βδt et .end forWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201218 / 23

Outline1Motivation2Device Based MDP ModelRL-EMS and Consumer RequestsDis-utility Function and Performance MetricDevice Based MDP Model3RL-EMS Algorithm4Simulation Result5ConclusionWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201219 / 23

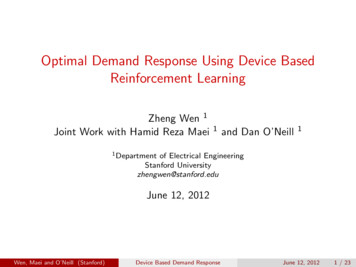

Simulation ResultA toy examplePmax 4, gmax 2, W 4, Ŵ 5 and S 96Assume that at the beginning of each episode, the “device portion” ofthe regenerated state is fixed (i.e. (0, 0))For each scheduling policy µ, its performance is V (µ) EP πP min Qµ [P, 0, 0]T , a ,a Aand we normalize the performance with respect to the optimalperformance.We run the proposed RL-EMS algorithm for 8, 000 episodes, andrepeat the simulation for 100 timesBaseline: the default policy without DRWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201220 / 23

Simulation Result (Cont.)2.4Normalized Performance of Qt2.22Performance of QtOptimal PerformanceBaseline 0600070008000EpisodeReduce the consumer’s cost by 56%Wen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201221 / 23

Outline1Motivation2Device Based MDP ModelRL-EMS and Consumer RequestsDis-utility Function and Performance MetricDevice Based MDP Model3RL-EMS Algorithm4Simulation Result5ConclusionWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201222 / 23

ConclusionWe present a RL approach (RL-EMS Algorithm) to DR for residentialand small commercial buildingsRL-EMS does not require the prior knowledge of models on electricityprice, consumer behavior and consumer dis-utilityRL-EMS learns to make optimal decisions byrescheduling the requested jobsanticipating the future uses of devices and doing speculative jobsFuture Work: RL algorithms with better sample efficiencyWen, Maei and O’Neill (Stanford)Device Based Demand ResponseJune 12, 201223 / 23

a new RL-based energy management algorithm calledRL-EMS algorithm Wen, Maei and O'Neill (Stanford) Device Based Demand Response June 12, 2012 5 / 23. . Wen, Maei and O'Neill (Stanford) Device Based Demand Response June 12, 2012 10 / 23. Performance Metric We assume that the \instantaneous cost" at time t is