Transcription

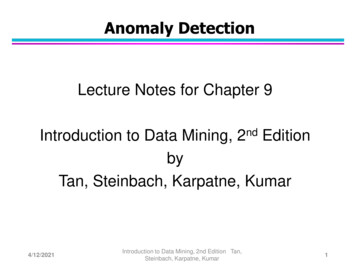

Anomaly DetectionLecture Notes for Chapter 9Introduction to Data Mining, 2nd EditionbyTan, Steinbach, Karpatne, Kumar4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar1

Anomaly/Outlier DetectionWhat are anomalies/outliers?– The set of data points that areconsiderably different than theremainder of the dataNatural implication is thatanomalies are relatively rare– One in a thousand occurs often if you have lots of data– Context is important, e.g., freezing temps in JulyCan be important or a nuisance– Unusually high blood pressure– 200 pound, 2 year old4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar2

Importance of Anomaly DetectionOzone Depletion HistoryIn 1985 three researchers (Farman,Gardinar and Shanklin) werepuzzled by data gathered by theBritish Antarctic Survey showing thatozone levels for Antarctica haddropped 10% below normal levelsWhy did the Nimbus 7 satellite,which had instruments aboard forrecording ozone levels, not recordsimilarly low ozone concentrations?The ozone concentrations recordedby the satellite were so low theywere being treated as outliers by acomputer program and /science/hole/size.htmlIntroduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar3

Causes of AnomaliesData from different classes– Measuring the weights of oranges, but a few grapefruitare mixed inNatural variationhttps://umn.zoom.us/my/kumar001– Unusually tall peopleData errors– 200 pound 2 year old4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar4

Distinction Between Noise and AnomaliesNoise doesn’t necessarily produce unusual values orobjectsNoise is not interestingNoise and anomalies are related but distinct concepts4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar5

Model-based vs Model-freeModel-based Approaches Modelcan be parametric or non-parametric Anomalies are those points that don’t fit well Anomalies are those points that distort the modelModel-free Approaches Anomaliesare identified directly from the data withoutbuilding a modelOften the underlying assumption is that themost of the points in the data are normal4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar6

General Issues: Label vs ScoreSome anomaly detection techniques provide only abinary categorizationOther approaches measure the degree to which anobject is an anomaly– This allows objects to be ranked– Scores can also have associated meaning (e.g., statisticalsignificance)4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar7

Anomaly Detection TechniquesStatistical ApproachesProximity-based– Anomalies are points far away from other pointsClustering-based– Points far away from cluster centers are outliers– Small clusters are outliersReconstruction Based4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar8

Statistical ApproachesProbabilistic definition of an outlier: An outlier is an object thathas a low probability with respect to a probability distributionmodel of the data.Usually assume a parametric model describing the distributionof the data (e.g., normal distribution)Apply a statistical test that depends on– Data distribution– Parameters of distribution (e.g., mean, variance)– Number of expected outliers (confidence limit)Issues– Identifying the distribution of a data set Heavy tailed distribution– Number of attributes– Is the data a mixture of distributions?4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar9

Normal x4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar10

Grubbs’ TestDetect outliers in univariate dataAssume data comes from normal distributionDetects one outlier at a time, remove the outlier,and repeat– H0: There is no outlier in data– HA: There is at least one outlierGrubbs’ test statistic:Reject H0 if:4/12/2021( N 1)G NG max X Xst (2 / N , N 2 )N 2 t (2 / N , N 2 )Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar11

Statistically-based – Likelihood ApproachAssume the data set D contains samples from amixture of two probability distributions:– M (majority distribution)– A (anomalous distribution)General Approach:– Initially, assume all the data points belong to M– Let Lt(D) be the log likelihood of D at time t– For each point xt that belongs to M, move it to A Let Lt 1 (D) be the new log likelihood. Compute the difference, Lt(D) – Lt 1 (D)If c (some threshold), then xt is declared as an anomalyand moved permanently from M to A 4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar12

Statistically-based – Likelihood ApproachData distribution, D (1 – ) M AM is a probability distribution estimated from data– Can be based on any modeling method (naïve Bayes,maximum entropy, etc.)A is initially assumed to be uniform distributionLikelihood at time t: At M t Lt ( D ) PD ( xi ) (1 ) PM t ( xi ) PAt ( xi ) i 1xi M t xi At LLt ( D ) M t log(1 ) log PM t ( xi ) At log log PAt ( xi )Nxi M t4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumarxi At13

Strengths/Weaknesses of Statistical ApproachesFirm mathematical foundationCan be very efficientGood results if distribution is knownIn many cases, data distribution may not be knownFor high dimensional data, it may be difficult to estimatethe true distributionAnomalies can distort the parameters of the distribution4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar14

Distance-Based ApproachesThe outlier score of an object is the distance toits kth nearest neighbor4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar15

One Nearest Neighbor - One OutlierD21.81.61.41.210.80.60.4Outlier Score4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar16

One Nearest Neighbor - Two tlier Score4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar17

Five Nearest Neighbors - Small Cluster2D1.81.61.41.210.80.60.4Outlier Score4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar18

Five Nearest Neighbors - Differing DensityD1.81.61.41.210.80.60.40.2Outlier Score4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar19

Strengths/Weaknesses of Distance-Based ApproachesSimpleExpensive – O(n2)Sensitive to parametersSensitive to variations in densityDistance becomes less meaningful in highdimensional space4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar20

Density-Based ApproachesDensity-based Outlier: The outlier score of anobject is the inverse of the density around theobject.– Can be defined in terms of the k nearest neighbors– One definition: Inverse of distance to kth neighbor– Another definition: Inverse of the average distance to kneighbors– DBSCAN definitionIf there are regions of different density, thisapproach can have problems4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar21

Relative DensityConsider the density of a point relative to that ofits k nearest neighborsLet 𝑦1 , , 𝑦𝑘 be the 𝑘 nearest neighbors of 𝒙11𝑑𝑒𝑛𝑠𝑖𝑡𝑦 𝒙, 𝑘 𝑑𝑖𝑠𝑡 𝒙, 𝑘𝑑𝑖𝑠𝑡(𝒙, 𝒚𝑘 )𝑟𝑒𝑙𝑎𝑡𝑖𝑣𝑒 𝑑𝑒𝑛𝑠𝑖𝑡𝑦 𝒙, 𝑘 𝑑𝑖𝑠𝑡(𝒙,𝑘)σ𝑘𝑖 1 𝑑𝑖𝑠𝑡(𝒚𝑖 ,𝑘)/𝑘 σ𝑘𝑖 1 𝑑𝑒𝑛𝑠𝑖𝑡𝑦(𝒚𝑖 𝑑𝑖𝑠𝑡(𝒙,𝒚)σ𝑘𝑖 1 𝑑𝑖𝑠𝑡(𝒚𝑖 ,𝑘)/𝑘Can use average distance instead4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar22

Relative Density Outlier Scores6.856C541.40D31.332A1Outlier Score4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar23

Relative Density-based: LOF approachFor each point, compute the density of its local neighborhoodCompute local outlier factor (LOF) of a sample p as the average ofthe ratios of the density of sample p and the density of its nearestneighborsOutliers are points with largest LOF valueIn the NN approach, p2 isnot considered as outlier,while LOF approach findboth p1 and p2 as outliers p2 4/12/2021p1Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar24

Strengths/Weaknesses of Density-Based ApproachesSimpleExpensive – O(n2)Sensitive to parametersDensity becomes less meaningful in highdimensional space4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar25

Clustering-Based ApproachesAn object is a cluster-basedoutlier if it does not stronglybelong to any cluster– For prototype-based clusters, anobject is an outlier if it is not closeenough to a cluster center Outliers can impact the clustering produced– For density-based clusters, an objectis an outlier if its density is too low Can’t distinguish between noise and outliers– For graph-based clusters, an objectis an outlier if it is not well connected4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar26

Distance of Points from Closest Centroids4.54.64C3.532.5D0.1721.51.21A0.5Outlier Score4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar27

Relative Distance of Points from Closest Centroid43.532.521.510.5Outlier Score4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar28

Strengths/Weaknesses of Clustering-Based ApproachesSimpleMany clustering techniques can be usedCan be difficult to decide on a clusteringtechniqueCan be difficult to decide on number of clustersOutliers can distort the clusters4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar29

Reconstruction-Based ApproachesBased on assumptions there are patterns in thedistribution of the normal class that can becaptured using lower-dimensionalrepresentationsReduce data to lower dimensional data– E.g. Use Principal Components Analysis (PCA) orAuto-encodersMeasure the reconstruction error for each object– The difference between original and reduceddimensionality version4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar30

Reconstruction ErrorLet 𝐱 be the original data objectFind the representation of the object in a lowerdimensional spaceProject the object back to the original spaceCall this object 𝐱ොReconstruction Error(x) x xොObjects with large reconstruction errors areanomalies4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar31

Reconstruction of two-dimensional data4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar32

Basic Architecture of an AutoencoderAn autoencoder is a multi-layer neural networkThe number of input and output neurons is equalto the number of original attributes.4/12/2021Introduction to Data Mining, 2nd EditionTan, Steinbach, Karpatne, Kumar33

Strengths and WeaknessesDoes not require assumptions about distributionof normal classCan use many dimensionality reductionapproachesThe reconstruction error is computed in theoriginal space– This can be a problem if dimensionality is high4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar34

One Class SVMUses an SVM approach to classify normal objectsUses the given data to construct such a modelThis data may contain outliersBut the data does not contain class labelsHow to build a classifier given one class?4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar35

How Does One-Class SVM Work?Uses the “origin” trickUse a Gaussian kernel– Every point mapped to a unit hypersphere– Every point in the same orthant (quadrant)Aim to maximize the distance of the separatingplane from the origin4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar36

Two-dimensional One Class SVM4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar37

Equations for One-Class SVMEquation of hyperplane𝜙 is the mapping to high dimensional spaceWeight vector isν is fraction of outliersOptimization condition is the following4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar38

Finding Outliers with a One-Class SVMDecision boundary with 𝜈 0.14/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar39

Finding Outliers with a One-Class SVMDecision boundary with 𝜈 0.05 and 𝜈 0.24/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar40

Strengths and WeaknessesStrong theoretical foundationChoice of ν is difficultComputationally expensive4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar41

Information Theoretic ApproachesKey idea is to measure how much informationdecreases when you delete an observationAnomalies should show higher gainNormal points should have less gain4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar42

Information Theoretic ExampleSurvey of height and weight for 100 participantsEliminating last group give a gain of2.08 1.89 0.194/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar43

Strengths and WeaknessesSolid theoretical foundationTheoretically applicable to all kinds of dataDifficult and computationally expensive toimplement in practice4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar44

Evaluation of Anomaly DetectionIf class labels are present, then use standardevaluation approaches for rare class such asprecision, recall, or false positive rate– FPR is also know as false alarm rateFor unsupervised anomaly detection usemeasures provided by the anomaly method– E.g. reconstruction error or gainCan also look at histograms of anomaly scores.4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar45

Distribution of Anomaly ScoresAnomaly scores should show a tail4/12/2021Introduction to Data Mining, 2nd Edition Tan,Steinbach, Karpatne, Kumar46

Outliers can impact the clustering produced – For density-based clusters, an object is an outlier if its density is too low Can’t distinguish between noise and outliers – For graph-based clusters, an object is an outlier if it is not well connected 4/12/2021 Introduction to Da