Transcription

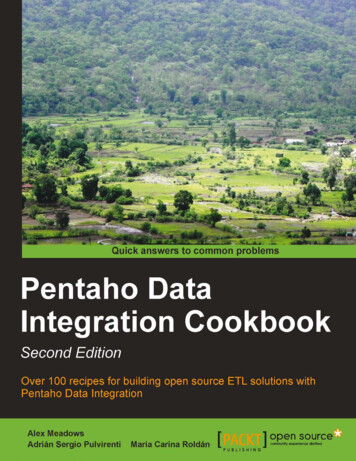

Pentaho DataIntegration CookbookSecond EditionOver 100 recipes for building open source ETL solutionswith Pentaho Data IntegrationAlex MeadowsAdrián Sergio PulvirentiMaría Carina RoldánBIRMINGHAM - MUMBAI

Pentaho Data Integration CookbookSecond EditionCopyright 2013 Packt PublishingAll rights reserved. No part of this book may be reproduced, stored in a retrieval system,or transmitted in any form or by any means, without the prior written permission of thepublisher, except in the case of brief quotations embedded in critical articles or reviews.Every effort has been made in the preparation of this book to ensure the accuracy of theinformation presented. However, the information contained in this book is sold withoutwarranty, either express or implied. Neither the author, nor Packt Publishing, and its dealersand distributors will be held liable for any damages caused or alleged to be caused directlyor indirectly by this book.Packt Publishing has endeavored to provide trademark information about all of the companiesand products mentioned in this book by the appropriate use of capitals. However, PacktPublishing cannot guarantee the accuracy of this information.First published: June 2011Second Edition: November 2013Production Reference: 2221113Published by Packt Publishing Ltd.Livery Place35 Livery StreetBirmingham B3 2PB, UK.ISBN 978-1-78328-067-4www.packtpub.comCover Image by Aniket Sawant (aniket sawant photography@hotmail.com)

CreditsAuthorAlex MeadowsProject CoordinatorWendell PalmerAdrián Sergio PulvirentiMaría Carina RoldánReviewersWesley Seidel CarvalhoDaniel LemireCoty SutherlandAcquisition EditorUsha IyerMeeta RajaniLead Technical EditorArvind KoulTechnical EditorsDennis JohnAdrian RaposoGaurav ThingalayaProofreaderKevin McGowanIndexerMonica Ajmera MehtaGraphicsRonak DhruvProduction CoordinatorNilesh R. MohiteCover WorkNilesh R. Mohite

About the AuthorAlex Meadows has worked with open source Business Intelligence solutions for nearly10 years and has worked in various industries such as plastics manufacturing, social ande-mail marketing, and most recently with software at Red Hat, Inc. He has been very active inPentaho and other open source communities to learn, share, and help newcomers with the bestpractices in BI, analytics, and data management. He received his Bachelor's degree in BusinessAdministration from Chowan University in Murfreesboro, North Carolina, and his Master's degreein Business Intelligence from St. Joseph's University in Philadelphia, Pennsylvania.First and foremost, thank you Christina for being there for me before, during,and after taking on the challenge of writing and revising a book. I knowit's not been easy, but thank you for allowing me the opportunity. To mygrandmother, thank you for teaching me at a young age to always go for goalsthat may just be out of reach. Finally, this book would be no where withoutthe Pentaho community and the friends I've made over the years being a partof it.Adrián Sergio Pulvirenti was born in Buenos Aires, Argentina, in 1972. He earned hisBachelor's degree in Computer Sciences at UBA, one of the most prestigious universities inSouth America.He has dedicated more than 15 years to developing desktop and web-based softwaresolutions. Over the last few years he has been leading integration projects and developmentof BI solutions.I'd like to thank my lovely kids, Camila and Nicolas, who understood thatI couldn't share with them the usual video game sessions during thewriting process. I'd also like to thank my wife, who introduced me to thePentaho world.

María Carina Roldán was born in Esquel, Argentina, in 1970. She earned her Bachelor'sdegree in Computer Science at UNLP in La Plata; after that she did a postgraduate course inStatistics at the University of Buenos Aires (UBA) in Buenos Aires city, where she has beenliving since 1994.She has worked as a BI consultant for more than 10 years. Over the last four years, she hasbeen dedicated full time to developing BI solutions using Pentaho Suite. Currently, she worksfor Webdetails, one of the main Pentaho contributors. She is the author of Pentaho 3.2 DataIntegration: Beginner's Guide published by Packt Publishing in April 2010.You can follow her on Twitter at @mariacroldan.I'd like to thank those who have encouraged me to write this book: On onehand, the Pentaho community; they have given me a rewarding feedbackafter the Beginner's book. On the other side, my husband, who withouthesitation, agreed to write the book with me. Without them I'm not sure Iwould have embarked on a new book project.I'd also like to thank the technical reviewers for the time and dedication thatthey have put in reviewing the book. In particular, thanks to my colleagues atWebdetails; it's a pleasure and a privilege to work with them every day.

About the ReviewersWesley Seidel Carvalho got his Master's degree in Computer Science from the Instituteof Mathematics and Statistics, University of São Paulo (IME-USP), Brazil, where he researchedon (his dissertation) Natural Language Processing (NLP) for the Portuguese language. Heis a Database Specialist from the Federal University of Pará (UFPa). He has a degree inMathematics from the State University of Pará (Uepa).Since 2010, he has been working with Pentaho and researching Open Data government.He is an active member of the communities and lists of Free Software, Open Data, andPentaho in Brazil, contributing software "Grammar Checker for OpenOffice - CoGrOO" andCoGrOO Community.He has worked with technology, database, and systems development since 1997, BusinessIntelligence since 2003, and has been involved with Pentaho and NLP since 2009. He iscurrently serving its customers through its s.com.brDaniel Lemire has a B.Sc. and a M.Sc. in Mathematics from the University of Toronto,and a Ph.D. in Engineering Mathematics from the Ecole Polytechnique and the Université deMontréal. He is a Computer Science professor at TELUQ (Université du Québec) where heteaches Primarily Online. He has also been a research officer at the National Research Councilof Canada and an entrepreneur. He has written over 45 peer-reviewed publications, includingmore than 25 journal articles. He has held competitive research grants for the last 15 years.He has served as a program committee member on leading computer science conferences(for example, ACM CIKM, ACM WSDM, and ACM RecSys). His open source software has beenused by major corporations such as Google and Facebook. His research interests includedatabases, information retrieval, and high performance programming. He blogs regularly oncomputer science at http://lemire.me/blog/.

Coty Sutherland was first introduced to computing around the age of 10. At that time,he was immersed in various aspects of computers and it became apparent that he had apropensity for software manipulation. From then until now, he has stayed involved in learningnew things in the software space and adapting to the changing environment that is SoftwareDevelopment. He graduated from Appalachian State University in 2009 with a Bachelor'sDegree in Computer Science. After graduation, he focused mainly on software applicationdevelopment and support, but recently transitioned to the Business Intelligence field topursue new and exciting things with data. He is currently employed by the open sourcecompany, Red Hat, as a Business Intelligence Engineer.

www.PacktPub.comSupport files, eBooks, discount offers and moreYou might want to visit www.PacktPub.com for support files and downloads related toyour book.Did you know that Packt offers eBook versions of every book published, with PDF and ePubfiles available? You can upgrade to the eBook version at www.PacktPub.com and as a printbook customer, you are entitled to a discount on the eBook copy. Get in touch with us atservice@packtpub.com for more details.At www.PacktPub.com, you can also read a collection of free technical articles, sign upfor a range of free newsletters and receive exclusive discounts and offers on Packt booksand eBooks.TMhttp://PacktLib.PacktPub.comDo you need instant solutions to your IT questions? PacktLib is Packt's online digital booklibrary. Here, you can access, read and search across Packt's entire library of books.Why Subscribe?ffFully searchable across every book published by PacktffCopy and paste, print and bookmark contentffOn demand and accessible via web browserFree Access for Packt account holdersIf you have an account with Packt at www.PacktPub.com, you can use this to accessPacktLib today and view nine entirely free books. Simply use your login credentials forimmediate access.

Table of ContentsPrefaceChapter 1: Working with DatabasesIntroductionConnecting to a databaseGetting data from a databaseGetting data from a database by providing parametersGetting data from a database by running a query built at runtimeInserting or updating rows in a tableInserting new rows where a simple primary key has to be generatedInserting new rows where the primary key has to be generated basedon stored valuesDeleting data from a tableCreating or altering a database table from PDI (design time)Creating or altering a database table from PDI (runtime)Inserting, deleting, or updating a table depending on a fieldChanging the database connection at runtimeLoading a parent-child tableBuilding SQL queries via database metadataPerforming repetitive database design tasks from PDIChapter 2: Reading and Writing FilesIntroductionReading a simple fileReading several files at the same timeReading semi-structured filesReading files having one field per rowReading files with some fields occupying two or more rowsWriting a simple fileWriting a semi-structured 8487

Table of ContentsProviding the name of a file (for reading or writing) dynamicallyUsing the name of a file (or part of it) as a fieldReading an Excel fileGetting the value of specific cells in an Excel fileWriting an Excel file with several sheetsWriting an Excel file with a dynamic number of sheetsReading data from an AWS S3 Instance90939597101105107Chapter 3: Working with Big Data and Cloud Sources111Chapter 4: Manipulating XML Structures133Chapter 5: File Management171IntroductionLoading data into Salesforce.comGetting data from Salesforce.comLoading data into HadoopGetting data from HadoopLoading data into HBaseGetting data from HBaseLoading data into MongoDBGetting data from MongoDBIntroductionReading simple XML filesSpecifying fields by using the Path notationValidating well-formed XML filesValidating an XML file against DTD definitionsValidating an XML file against an XSD schemaGenerating a simple XML documentGenerating complex XML structuresGenerating an HTML page using XML and XSL transformationsReading an RSS FeedGenerating an RSS FeedIntroductionCopying or moving one or more filesDeleting one or more filesGetting files from a remote serverPutting files on a remote serverCopying or moving a custom list of filesDeleting a custom list of filesComparing files and foldersWorking with ZIP filesEncrypting and decrypting 48153155162165167171172175178181183185188191195

Table of ContentsChapter 6: Looking for Data199Chapter 7: Understanding and Optimizing Data Flows231IntroductionLooking for values in a database tableLooking for values in a database with complex conditionsLooking for values in a database with dynamic queriesLooking for values in a variety of sourcesLooking for values by proximityLooking for values by using a web serviceLooking for values over intranet or the InternetValidating data at runtimeIntroductionSplitting a stream into two or more streams based on a conditionMerging rows of two streams with the same or different structuresAdding checksums to verify datasetsComparing two streams and generating differencesGenerating all possible pairs formed from two datasetsJoining two or more streams based on given conditionsInterspersing new rows between existent rowsExecuting steps even when your stream is emptyProcessing rows differently based on the row numberProcessing data into shared transformations via filter criteria andsubtransformationsAltering a data stream with Select valuesProcessing multiple jobs or transformations in parallelChapter 8: Executing and Re-using Jobs and TransformationsIntroductionLaunching jobs and transformationsExecuting a job or a transformation by setting static argumentsand parametersExecuting a job or a transformation from a job by setting arguments andparameters dynamicallyExecuting a job or a transformation whose name is determined at runtimeExecuting part of a job once for every row in a datasetExecuting part of a job several times until a condition is trueMoving part of a transformation to a subtransformationUsing Metadata Injection to re-use transformationsChapter 9: Integrating Kettle and the Pentaho SuiteIntroductionCreating a Pentaho report with data coming from 16321321324iii

Table of ContentsCreating a Pentaho report directly from PDIConfiguring the Pentaho BI Server for running PDI jobs and transformationsExecuting a PDI transformation as part of a Pentaho processExecuting a PDI job from the Pentaho User ConsolePopulating a CDF dashboard with data coming from a PDI transformation329332334341350Chapter 10: Getting the Most Out of Kettle357Chapter 11: Utilizing Visualization Tools in Kettle401Chapter 12: Data Analytics417Appendix A: Data Structures427Appendix B: References433Index435IntroductionSending e-mails with attached filesGenerating a custom logfileRunning commands on another serverProgramming custom functionalityGenerating sample data for testing purposesWorking with JSON filesGetting information about transformations and jobs (file-based)Getting information about transformations and jobs (repository-based)Using Spoon's built-in optimization toolsIntroductionManaging plugins with the MarketplaceData profiling with DataCleanerVisualizing data with AgileBIUsing Instaview to analyze and visualize dataIntroductionReading data from a SAS datafileStudying data via stream statisticsBuilding a random data sample for WekaBooks data structuremuseums data structureoutdoor data structureSteel Wheels data structureLahman Baseball 5401402404409413417417420424427429430431432433434

PrefacePentaho Data Integration (also known as Kettle) is one of the leading open source dataintegration solutions. With Kettle, you can take data from a multitude of sources, transformand conform the data to given requirements, and load the data into just as many targetsystems. Not only is PDI capable of transforming and cleaning data, it also provides anever-growing number of plugins to augment what is already a very robust list of features.Pentaho Data Integration Cookbook, Second Edition picks up where the first edition left off,by updating the recipes to the latest edition of PDI and diving into new topics such as workingwith Big Data and cloud sources, data analytics, and more.Pentaho Data Integration Cookbook, Second Edition shows you how to take advantage of allthe aspects of Kettle through a set of practical recipes organized to find quick solutions toyour needs. The book starts with showing you how to work with data sources such as files,relational databases, Big Data, and cloud sources. Then we go into how to work with datastreams such as merging data from different sources, how to take advantage of the differenttools to clean up and transform data, and how to build nested jobs and transformations. Moreadvanced topics are also covered, such as data analytics, data visualization, plugins, andintegration of Kettle with other tools in the Pentaho suite.Pentaho Data Integration Cookbook, Second Edition provides recipes with easy step-by-stepinstructions to accomplish specific tasks. The code for the recipes can be adapted and builtupon to meet individual needs.What this book coversChapter 1, Working with Databases, shows you how to work with relational databases withKettle. The recipes show you how to create and share database connections, perform typicaldatabase functions (select, insert, update, and delete), as well as more advanced tricks suchas building and executing queries at runtime.Chapter 2, Reading and Writing Files, not only shows you how to read and write files, but alsohow to work with semi-structured files, and read data from Amazon Web Services.

PrefaceChapter 3, Working with Big Data and Cloud Sources, covers how to load and read data fromsome of the many different NoSQL data sources as well as from Salesforce.com.Chapter 4, Manipulating XML Structures, shows you how to read, write, and validate XML.Simple and complex XML structures are shown as well as more specialized formats suchas RSS feeds.Chapter 5, File Management, demonstrates how to copy, move, transfer, and encrypt filesand directories.Chapter 6, Looking for Data, shows you how to search for information through variousmethods via databases, web services, files, and more. This chapter also shows you howto validate data with Kettle's built-in validation steps.Chapter 7, Understanding and Optimizing Data Flows, details how Kettle moves data throughjobs and transformations and how to optimize data flows.Chapter 8, Executing and Re-using Jobs and Transformations, shows you how to launch jobsand transformations in various ways through static or dynamic arguments and parameterization.Object-oriented transformations through subtransformations are also explained.Chapter 9, Integrating Kettle and the Pentaho Suite, works with some of the other tools in thePentaho suite to show how combining tools provides even more capabilities and functionalityfor reporting, dashboards, and more.Chapter 10, Getting the Most Out of Kettle, works with some of the commonly neededfeatures (e-mail and logging) as well as building sample data sets, and using Kettle to readmeta information on jobs and transformations via files or Kettle's database repository.Chapter 11, Utilizing Visualization Tools in Kettle, explains how to work with plugins andfocuses on DataCleaner, AgileBI, and Instaview, an Enterprise feature that allows for fastanalysis of data sources.Chapter 12, Data Analytics, shows you how to work with the various analytical tools built intoKettle, focusing on statistics gathering steps and building datasets for Weka.Appendix A, Data Structures, shows the different data structures used throughout the book.Appendix B, References, provides a list of books and other resources that will help youconnect with the rest of the Pentaho community and learn more about Kettle and the othertools that are part of the Pentaho suite.2

PrefaceWhat you need for this bookPDI is written in Java. Any operating system that can run JVM 1.5 or higher should be able torun PDI. Some of the recipes will require other software, as listed:ffHortonworks Sandbox: This is Hadoop in a box, and consists of a great environmentto learn how to work with NoSQL solutions without having to install everything.ffWeb Server with ASP support: This is needed for two recipes to show how to workwith web services.ffDataCleaner: This is one of the top open source data profiling tools and integrateswith Kettle.ffMySQL: All the relational database recipes have scripts for MySQL provided. Feel freeto use another relational database for those recipes.In addition, it's recommended to have access to Excel or Calc and a decent text editor (likeNotepad or gedit).Having access to an Internet connection will be useful for some of the recipes that usecloud services, as well as making it possible to access the additional links that provide moreinformation about given topics throughout the book.Who this book is forIf you are a software developer, data scientist, or anyone else looking for a tool that will helpextract, transform, and load data as well as provide the tools to perform analytics and datacleansing, then this book is for you! This book does not cover the basics of PDI, SQL, databasetheory, data profiling, and data analytics.ConventionsIn this book, you will find a number of styles of text that distinguish between different kinds ofinformation. Here are some examples of these styles, and an explanation of their meaning.Code words in text, database table names, folder names, filenames, file extensions,pathnames, dummy URLs, user input, and Twitter handles are shown as follows: "Copy the.jar file containing the driver to the lib directory inside the Kettle installation directory."A block of code is set as ,"American",1947"Hiaasen","Carl ","American",19533

PrefaceWhen we wish to draw your attention to a particular part of a code block, the relevant lines oritems are set in bold: request type City /type query Buenos Aires, Argentina /query /request New terms and important words are shown in bold. Words that you see on the screen, inmenus or dialog boxes for example, appear in the text like this: "clicking on the Next buttonmoves you to the next screen".Warnings or important notes appear in a box like this.Tips and tricks appear like this.Reader feedbackFeedback from our readers is always welcome. Let us know what you think about thisbook—what you liked or may have disliked. Reader feedback is important for us to developtitles that you really get the most out of.To send us general feedback, simply send an e-mail to feedback@packtpub.com, andmention the book title via the subject of your message.If there is a topic that you have expertise in and you are interested in either writing orcontributing to a book, see our author guide on www.packtpub.com/authors.Customer supportNow that you are the proud owner of a Packt book, we have a number of things to help you toget the most from your purchase.Downloading the example codeYou can download the example code files for all Packt books you have purchased fromyour account at http://www.packtpub.com. If you purchased this book elsewhere, youcan visit http://www.packtpub.com/support and register to have the files e-maileddirectly to you.4

PrefaceErrataAlthough we have taken every care to ensure the accuracy of our content, mistakes do happen.If you find a mistake in one of our books—maybe a mistake in the text or the code—we would begrateful if you would report this to us. By doing so, you can save other readers from frustrationand help us improve subsequent versions of this book. If you find any errata, please reportthem by visiting http://www.packtpub.com/submit-errata, selecting your book,clicking on the errata submission form link, and entering the details of your errata. Once yourerrata are verified, your submission will be accepted and the errata will be uploaded on ourwebsite, or added to any list of existing errata, under the Errata section of that title. Any existingerrata can be viewed by selecting your title from http://www.packtpub.com/support.PiracyPiracy of copyright material on the Internet is an ongoing problem across all media. At Packt,we take the protection of our copyright and licenses very seriously. If you come across anyillegal copies of our works, in any form, on the Internet, please provide us with the locationaddress or website name immediately so that we can pursue a remedy.Please contact us at copyright@packtpub.com with a link to the suspectedpirated material.We appreciate your help in protecting our authors, and our ability to bring youvaluable content.QuestionsYou can contact us at questions@packtpub.com if you are having a problem with anyaspect of the book, and we will do our best to address it.5

1Working with DatabasesIn this chapter, we will cover:ffConnecting to a databaseffGetting data from a databaseffGetting data from a database by providing parametersffGetting data from a database by running a query built at runtimeffInserting or updating rows in a tableffInserting new rows when a simple primary key has to be generatedffInserting new rows when the primary key has to be generated based on stored valuesffDeleting data from a tableffCreating or altering a table from PDI (design time)ffCreating or altering a table from PDI (runtime)ffInserting, deleting, or updating a table depending on a fieldffChanging the database connection at runtimeffLoading a parent-child tableffBuilding SQL queries via database metadataffPerforming repetitive database design tasks from PDIIntroductionDatabases are broadly used by organizations to store and administer transactional data suchas customer service history, bank transactions, purchases, sales, and so on. They are alsoused to store data warehouse data used for Business Intelligence solutions.

Working with DatabasesIn this chapter, you will learn to deal with databases in Kettle. The first recipe tells you how toconnect to a database, which is a prerequisite for all the other recipes. The rest of the chapterteaches you how to perform different operations and can be read in any order according toyour needs.The focus of this chapter is on relational databases (RDBMS).Thus, the term database is used as a synonym for relationaldatabase throughout the recipes.Sample databasesThrough the chapter you will use a couple of sample databases. Those databases can becreated and loaded by running the scripts available at the book's website. The scripts areready to run under MySQL.If you work with a different DBMS, you may have to modifythe scripts slightly.For more information about the structure of the sample databases and the meaning of thetables and fields, please refer to Appendix A, Data Structures. Feel free to adapt the recipesto different databases. You could try some well-known databases; for example, Foodmart(available as part of the Mondrian distribution at http://sourceforge.net/projects/mondrian/) or the MySQL sample databases (available at http://dev.mysql.com/doc/index-other.html).Pentaho BI platform databasesAs part of the sample databases used in this chapter you will use the Pentaho BI platformDemo databases. The Pentaho BI Platform Demo is a preconfigured installation that lets youexplore the capabilities of the Pentaho platform. It relies on the following databases:8Database namehibernateDescriptionQuartzRepository for Quartz; the scheduler used by Pentaho.SampledataData for Steel Wheels, a fictional company that sells allkind of scale replicas of vehicles.Administrative information including userauthentication and authorization data.

Chapter 1By default, all those databases are stored in Hypersonic (HSQLDB). The script for creating thedatabases in HSQLDB can be found at http://sourceforge.net/projects/pentaho/files. Under Business Intelligence Server 1.7.1-stable look for pentaho sample data1.7.1.zip. While there are newer versions of the actual Business Intelligence Server, they alluse the same sample dataset.These databases can be stored in other DBMSs as well. Scripts for creating and loading thesedatabases in other popular DBMSs for example, MySQL or Oracle can be found in PrashantRaju's blog, at e the scripts you will find instructions for creating and loading the databases.Prashant Raju, an expert Pentaho developer, providesseveral excellent tutorials related to the Pentaho platform.If you are interested in knowing more about Pentaho, it'sworth taking a look at his blog.Connecting to a databaseIf you intend to work with a database, either reading, writing, looking up data, and so on, thefirst thing you will have to do is to create a connection to that database. This recipe will teachyou how to do this.Getting readyIn order to create the connection, you will need to know the connection settings. At least youwill need the following:ffHost name: Domain name or IP address of the database server.ffDatabase name: The schema or other database identifier.ffPort number: The port the database connects to. Each database has its own defaultport.ffUsername: The username to access the database.ffPassword: The password to access the database.It's recommended that you also have access to the database at the moment of creatinga connection.9

Working with DatabasesHow to do it.Open Spoon and create a new transformation.1. Select the View option that appears in the upper-left corner of the screen, right-clickon the Database connections option, and select New. The Database Connectiondialog window appears.2. Under Connection Type, select the database engine that matches your DBMS.3. Fill in the Settings options and give the connection a name by typing it in theConnection Name: textbox. Your window should look like the following:4. Press the Test button. A message should appear informing you that the connection toyour database is OK.If you get an error message instead, you should recheckthe data entered, as well as the availability of the databaseserver. The server might be down, or it might not bereachable from your machine.10

Chapter 1How it works.A database connection is the definition that allows you to access a database from Kettle.With the data you provide, Kettle can instantiate real database connections and perform thedifferent operations related to databases. Once you define a database connection, you will beable to access that database and execute arbitrary SQL statements: create schema objectslike tables, execute SELECT statements, modify rows, and so on.In this recipe you created the connection from the Database connections tree. You mayalso create a connection by pressing the New. button in the Configuration window of anydatabase-related step in a transformation or job entry in a job. Alternatively, ther

been dedicated full time to developing BI solutions using Pentaho Suite. Currently, she works for Webdetails, one of the main Pentaho contributors. She is the author of Pentaho 3.2 Data Integration: Beginner's Guide published by Packt Publishing in Ap