Transcription

See discussions, stats, and author profiles for this publication at: Game Tree Search Based on Non-DeterministicAction Scripts in Real-Time Strategy GamesArticle in IEEE Transactions on Computational Intelligence and AI in Games · June 2017DOI: 10.1109/TCIAIG.2017.2717902CITATIONSREADS01093 authors, including:Nicolas A. BarrigaMarius StanescuUniversity of AlbertaUniversity of Alberta18 PUBLICATIONS 54 CITATIONS14 PUBLICATIONS 32 CITATIONSSEE PROFILESEE PROFILESome of the authors of this publication are also working on these related projects:Deep Learning for Real-Time Strategy Games (StarCraft) View projectAll content following this page was uploaded by Nicolas A. Barriga on 24 June 2017.The user has requested enhancement of the downloaded file. All in-text references underlined in blue are added to the original documentand are linked to publications on ResearchGate, letting you access and read them immediately.

1Game Tree Search Based on Non-DeterministicAction Scripts in Real-Time Strategy GamesNicolas A. Barriga, Marius Stanescu and Michael BuroAbstract—Significant progress has been made in recent yearstowards stronger Real-Time Strategy (RTS) game playing agents.Some of the latest approaches have focused on enhancing standard game tree search techniques with a smart sampling of thesearch space, or on directly reducing this search space. However,experiments have thus far only been performed using smallscenarios. We provide experimental results on the performance ofthese agents on increasingly larger scenarios. Our main contribution is Puppet Search, a new adversarial search framework thatreduces the search space by using scripts that can expose choicepoints to a look-ahead search procedure. Selecting a combinationof a script and decisions for its choice points represents anabstract move to be applied next. Such moves can be directlyexecuted in the actual game, or in an abstract representation ofthe game state which can be used by an adversarial tree searchalgorithm. We tested Puppet Search in µRTS, an abstract RTSgame popular within the research community, allowing us todirectly compare our algorithm against state-of-the-art agentspublished in the last few years. We show a similar performanceto other scripted and search based agents on smaller scenarios,while outperforming them on larger ones.Index Terms—Real-Time Strategy (RTS) Games, AdversarialSearch, Heuristic Search, Monte-Carlo Tree SearchI. I NTRODUCTIONIN the past 20 years AI systems for abstract games suchas Backgammon, Checkers, Chess, Othello, and Go havebecome much stronger and are now able to defeat even thebest human players. Video games introduce a host of newchallenges not common to abstract games. Actions in videogames can usually be issued simultaneously by all playersmultiple times each second. Moreover, action effects are oftenstochastic and delayed, players only have a partial view ofthe game state, and the size of the playing area, the numberof units available and of possible actions at any given timeare several orders of magnitude larger than in most abstractgames.The rise of professional eSports communities enables us toseriously engage in the development of competitive AI forvideo games. A game played just by amateurs could lookintriguingly difficult at first glance, but top players might beeasily defeated by standard game AI techniques. An exampleof this is Arimaa [1], which was purposely designed to bedifficult for computers but easy for human players. It tooka decade for game playing programs to defeat the top humanplayers, but no new AI technique was required other than thosealready in use in Chess and Go programs. And after a decadeN. Barriga, M. Stanescu and M. Buro are with the Department of Computing Science, University of Alberta, Edmonton, Alberta, Canada, T6G 2E8(e-mail: {barriga astanesc mburo}@ualberta.ca)we still don’t know if the computer players are really strongat Arimaa or no human player has a truly deep understandingof the game. In this respect a game with a large professionalplayer base provides a more solid challenge.One particularly interesting and popular video game classis Real-Time Strategy (RTS) games, which are real-time warsimulations in which players instruct units to gather resources,build structures and armies, seek out new resource locationsand opponent positions, and destroy all opponent’s buildings towin the game. Typical RTS games also feature the so-called“fog of war” (which restricts player vision to vicinities offriendly units), large game maps, possibly hundreds of mobileunits that have to be orchestrated, and fast-paced game playallowing players to issue multiple commands to their units persecond. Popular RTS games, such as StarCraft and Companyof Heroes, constitute a multi billion dollar market and areplayed competitively by thousands of players.Despite the similarities between RTS games and abstractstrategy games like Chess and Go, there is a big gap in stateand action-space complexity [2] that has to be overcome ifwe are to successfully apply traditional AI techniques suchas game tree search and solving (small) imperfect informationgames. These challenges, in addition to good human playersstill outperforming the best AI systems, make RTS games afruitful AI research target, with applications to many othercomplex decision domains featuring large action spaces, imperfect information, and simultaneous real-time action execution.To evaluate the state of the art in RTS game AI, severalcompetitions are organized yearly, such as the ones organizedat the AIIDE1 and CIG conferences, and SSCAI2 . Even thoughbot competitions show small incremental improvements everyyear, strong human players continue to defeat the best botswith ease at the annual man-machine matches organizedalongside AIIDE. When analyzing the games, several reasonsfor this playing strength gap can be identified. Good humanplayers have knowledge about strong game openings and playing preferences of opponents they encountered before. Theycan also quickly identify and exploit non-optimal opponentbehaviour, and — crucially — they are able to generate robustlong-term plans, starting with multi-purpose build orders inthe opening phase. RTS game AI systems, on the other hand,are still mostly scripted, have only modest opponent modelingabilities, and generally don’t seem to be able to adapt tounforeseen circumstances well. In games with small branching1 http://starcraftaicompetition.com2 http://sscaitournament.com/

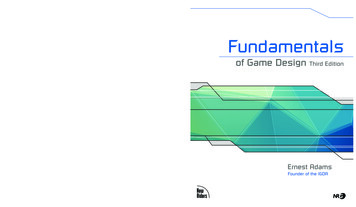

2factors a successful approach to overcome these issues is lookahead search, i.e. simulating the effects of action sequencesand choosing those that maximize the agent’s utility. In thispaper we present and evaluate an approach that mimics thisprocess in video games featuring vast search spaces. Ouralgorithm uses scripts that expose choice points to the lookahead search in order to reduce the number of action choices.This work builds on the Puppet Search algorithm proposed in[3]. Here we introduce new action scripts and search regimens,along with exploring different game tree search algorithms.In the following sections we first describe the proposedalgorithm in detail, then show experimental results obtainedfrom matches played against other state-of-the-art RTS gameagents, and finish the paper with discussing related work, ourconclusions and motivating future work in this ain soldiersII. A LGORITHM D ETAILSOur new search framework is called Puppet Search. Atits core it is an action abstraction mechanism that, givena non-deterministic strategy, works by constantly selectingaction choices that dictate how to continue the game basedon look-ahead search results. Non-deterministic strategies aredescribed by scripts that have to be able to handle all aspects ofthe game and may expose choice points to a search algorithmthe user specifies. Such choice points mark locations in thescript where alternative actions are to be considered duringsearch, very much like non-deterministic automata that arefree to execute any action listed in the transition relation. So,in a sense, Puppet Search works like a puppeteer who controlsthe limbs (choice points) of a set of puppets (scripts).More formally, we can think of applying the Puppet Searchidea to a game as 1) creating a new game in which moveoptions are restricted by replacing original move choices withpotentially far fewer choice points exposed by a non-deterministic script, and 2) applying an AI technique of ourchoice to the transformed game to find or approximate optimalmoves. The method, such as look-ahead search or findingNash-equilibria by solving linear programs, will depend oncharacteristics of the new game, such as being a perfect orimperfect information game, or a zero sum or general sumgame.Because we control the number of choice points in thisprocess, we can tailor the resulting AI systems to meet givensearch time constraints. For instance, suppose we are interestedin creating a fast reactive system for combat in an RTS game.In this case we will allow scripts to expose only a few carefullychosen choice points, if at all, resulting in fast searches thatmay sometimes miss optimal moves, but generally produceacceptable action sequences quickly. Note that scripts exposingonly a few choice points or none do not necessarily producemediocre actions because script computations can themselvesbe based on (local) search or other forms of optimization. Ifmore time is available for computing moves, our search canvisit more choice points to generate better moves.The idea of scripts exposing choice points originated fromwitnessing poor performance of scripted RTS AI systems andrealizing that one possible improvement is to let look-aheadAttackFig. 1. Decision tree representing script choices.search make fundamental decisions based on evaluating theimpact of chosen action sequences. Currently, RTS game AIsystems still rely on scripted high level strategies [4], which,for example, may contain code that checks whether now is agood time to launch an all-in attack based on some state featurevalues. However, designing code that can accurately predictthe winner of such an assault is challenging, and comparableto deciding whether there is a mate in k moves in Chessusing static rules. In terms of code complexity and accuracyit is much more preferable to use look-ahead search to decidethe issue, assuming sufficient computational resources areavailable. Likewise, given that currently high-level strategiesare still mostly scripted, letting search decide which scriptchoice point actions to take in complex video games hasthe potential to improve decision quality considerably whilesimplifying code complexity.A. ScriptsFor our purposes we define a script as a function that takesa game state and returns a set of actions to be performednow. The method for generating actions is immaterial: it couldbe a rule based player, hand coded with expert knowledge,a machine learning or search based agent, etc. The onlyrequirement is that the method must be able to generate actionsfor any legal game state. As an example consider a “rush”,which is a common strategy in RTS games that tries to buildas many combat units as fast as possible in an effort to destroythe opponent’s base before suitable defenses can be built. Awide range of these aggressive attacks are possible. At oneextreme end, the fastest attack can be executed using onlyworkers, which usually deal very little damage and barely haveany armor. Alternatively, the attack can be delayed until morepowerful units become available.Figure 1 shows a decision tree representing a script that firstgathers resources, builds some defensive buildings, expandsto a second resource location, creates soldiers and finallyattacks the enemy. This decision tree is executed at every gamesimulation frame to decide what actions to issue next.

3B. Choice PointsWhen writing a script, we must make some potentially hardchoices, such as when to expand to create a new base. Aftertraining a certain number of workers, or maybe only after mostof the current bases’ resources are depleted. Regardless of thedecision, it will be hardcoded in the script, according to a set ofstatic rules about the state of the game. Discovering predictablepatterns in the way the AI acts might be frustrating for allbut beginner players. Whether the behavior implemented issensible or not in the given situation, opponents will quicklylearn to exploit it and the game will likely lose some of itsreplay value in the process. As script writers, we would liketo be able to leave some choices open, such as which units torush with. But the script also needs to deal with any and allpossible events happening during the strategy execution. Thebase might be attacked before it is ready to launch its ownattack, or maybe the base is undefended while our infantryunits are out looking for the enemy. Should they continuein hope of destroying their base before they raze ours? Orshould they come back to defend? What if when we arriveat the enemy’s base we realize we don’t have the strength todestroy it? Should we push on nonetheless? Some, or all, ofthese decisions are best left open, so that they can be exploreddynamically and the most appropriate choice taken duringthe game. We call such non-deterministic script parts choicepoints.C. Using Non-Deterministic ScriptsScripts with choice points can transform a given complexgame with a large action space into a smaller games to whichstandard solution methods can be applied, such as learningpolicies using machine learning (ML) techniques, MiniMax orMonte Carlo Tree Search, or approximating Nash-equilibriain imperfect information games using linear programmingor iterative methods. The number of choice points and theavailable choices are configurable parameters that determinethe strength and speed of the resulting AI system. Feweroptions will produce faster but more predictable AI systemswhich are suitable for beginner players, while increasingtheir number will lead to stronger players, at the expense ofincreased computational work.ML-based agents rely on a function that takes the currentgame state as input, and produces a decision for each choicein the script. The parameters of that function would thenbe optimized either by supervised learning methods on a setof game traces, or by reinforcement learning via self-play.Once the parameters are learned, the model acts like a static(but possibly stochastic) rule based system. If the system isallowed to keep learning after the game has shipped, thenthere are no guarantees as to how it will evolve, possiblyleading to unwanted behavior. Another approach, look-aheadsearch, involves executing action sequences and evaluatingtheir outcomes. Both methods can work well. It is possible tohave an unbeatable ML player if the features and training dataare good enough, as well as a perfect search based player if weexplore the full search space. In practice, neither requirementis easy to meet: good representations are hard to design, andAlgorithm 1 Puppet ABCD Search1: procedure P UPPETABCD(state, height, prevM ove, α, β)2:player PLAYERT O M OVE(state, policy, height)3:if heigth 0 or TERMINAL(state) then4:return EVALUATE(state, player)5:end if6:for move in GET C HOICES(state, player) do7:if prevM ove then8:v P UPPETABCD(state, h 1, move, α, β)9:else10:state0 COPY(state)11:EXEC C HOICES (state0 , prevM ove, move)12:v P UPPETABCD(state0 , height 1, , α, β)13:end if14:if player MAX and v α then α v15:if player MIN and v β then β v16:if α β then break17:end for18:return player MAX ? α : β19: end procedure20:21:22:#Example call:#state: current game state23: #depth: maximum search depth, has to be even24: value P UPPETABCD(state, depth, , , )time constraints prevent covering the search space in mostgames. Good practical results are often achieved by combiningboth approaches [5].D. Game Tree SearchTo decide which choice to take, we can execute a scriptfor a given timespan, look at the resulting state, and thenbacktrack to the original state to try other action choices,taking into account that the opponent also has choices. Tokeep the implementation as general as possible, we will useno explicit opponent model. We’ll assume he uses the samescripts and evaluates states the same way we do.Algorithm 1 shows a variant of Puppet Search which isbased on ABCD (Alpha-Beta Considering Durations) search,that itself is an adaptation of alpha-beta search to games withsimultaneous and durative actions [6]. To reduce the computational complexity of solving multi-step simultaneous movegames, ABCD search implements approximations based onmove serialization policies which specify the player which isto move next (line 2) and the opponent thereafter. Commonlyused strategies include random, alternating, and alternating in1-2-2-1 fashion, to even out first or second player advantages.To fit into the Puppet Search framework for our hypotheticalsimultaneous move game we modified ABCD search so thatit considers puppet move sequences — series of script andchoice combinations — and takes into account that at anypoint in time both players execute a puppet move, to performactions at every frame in the game. The maximum searchdepth is assumed to be even, which allows both playersto select a puppet move to forward the world in line 11.

4Moves for the current player are generated in line 6. Theycontain choice point decisions as well as the player whosemove it is. Afterwards, if no move was passed from theprevious recursive call (line 7), the current player’s movepreviousM ove is passed on to a subsequent P UPPETABCDcall at line 8. Otherwise, both players’ moves are applied tothe state (line 11).RTS games are usually fast paced. In StarCraft bot competitions, for example rules allow up to 41 milliseconds computingtime per simulation frame. However, as actions take sometime to complete, it is often the case that the game doesn’treally change between one game frame and the next one. Thismeans that an agent’s computations can be split among severalconsecutive frames until an action has to be issued. To takeadvantage of this split computation, the recursive Algorithm 1has to be transformed into an iterative one, by manuallymanaging the call stack. The details of this implementationare beyond the scope of this paper, and can be reviewed inour published code at the µRTS repository3 .Alpha-beta search variants conduct depth-first search, inwhich the search depth needs to be predefined, and no solutionis returned until the algorithm runs to completion. In mostadversarial video games, the game proceeds even when noactions are emitted, resulting in a disadvantage if a player isnot able to issue actions in a predefined time. Algorithms toplay such games have to be anytime algorithms, that is, beable to return a valid solution even when interrupted beforecompletion. Iterative deepening has traditionally been usedto transform alpha-beta search into an anytime algorithm.It works by calling the search procedure multiple times,increasing the search depth in each call. The time lost dueto repeating computations is usually very low, due to theexponential growth of game trees. However, even this minimalloss can be offset by subsequently reusing the informationgained in previous iterations for move sorting.In our implementation we reuse previous information in twoways: a move cache and a hash move. The move cache is a mapfrom a state and a pair of moves (one for each player) to theresulting state. With this cache, we can greatly reduce the timeit takes to forward the world, which is the slowest operationin our search algorithm. The hash move is a standard moveordering technique, in which the best move from a previousiteration is used first, in order to increase the chances of anearlier beta cut. Algorithm 2 shows an UCT version of PuppetSearch. The node structure on line 1 contains the game state,the player to move (moves are being serialized as in ABCD),a list of the children nodes already expanded, a list of legalmoves (applicable choice point combinations), and a previousmove, which can be empty for the first player.Procedure PuppetUCTCD in line 9 shows the main loop,which calls the selectLeaf procedure at line 12, which willselect a leaf, expand a new node, and return it. Then a playoutis performed in line 14 using a randomized policy for bothplayers. The playout is cut short after a predefined numberof frames, and an evaluation function is used. Afterwards,a standard UCT update rule is applied. Finally, when the3 https://github.com/santiontanon/micrortsAlgorithm 2 Puppet UCTCD Search1: Structure 7: End 39:procedure P UPPET UCTCD(state)root N ODE(state)while not timeUp doleaf SELECT L EAF(root)newState SIMULATE (leaf.state, policy, policy, leaf.parent.player,leaf.player, EVAL PLAYOUT TIME)e EVALUATE(newState, leaf.player)UPDATE (leaf, e)end whilereturn GET B EST(root)end procedureprocedure SELECT L EAF(root)if SIZE(root.children) SIZE(root.moves) thenmove NEXT(root.moves)if root.previousMove thennode N ODE(root,move)APPEND (root.children, node)return SELECT L EAF(node)elsenewState SIMULATE (root.state, root.previousMove, move,root.parent.player, root.player, STEP PLAYOUT TIME)node N ODE(newState)APPEND (root.children, node)return nodeend ifelsenode UCB1(root.children)return SELECT L EAF(node)end ifend procedurecomputation time is spent, the best move at the root is selectedand returned.Procedure selectLeaf in line 21 either expands a new siblingof a leaf node, or if there are none, uses a standard UCB1algorithm to select a node to follow down the tree. Whenexpanding a new node, it always expands two levels, becauseplayouts can only be performed on nodes for which bothplayers have selected moves.E. State EvaluationForwarding the state using different choices is only usefulif we can evaluate the merit of the resulting states. Weneed to decide which of those states is more desirable from

5Barracks: train attack unitsHeavy: powerful slow melee unitLight: low power fast melee unitRanged: long ranged attack unitBases: accumulate resources and train workersWorkers: can harvest minerals and construct buildingsMinerals: harvested by workersFig. 2. Screenshot of µRTS, with explanations of the different in-game symbols.the point of view of the player performing the search. Inother words, we need to evaluate those states, assign eacha numerical value and use it to compare them. In zerosum games it is sufficient to consider symmetric evaluationfunctions eval(state, player) that return positive values forthe winning player and negative values for the losing playerwith eval(state, p1) eval(state, p2).The most common approach to state evaluation in RTSgames, and the one we use in our experiments, is to use alinear function that adds a set of values that are each multipliedby a weight. The values usually represent simple features,such as the number of units of each type a player has, withdifferent weights reflecting their estimated worth. Weights canbe either hand-tuned or learned from records of past gamesusing logistic regression or similar methods. An example of apopular metric in RTS games is Life-Time Damage, or LTD[7], which tries to estimate the amount of damage a unit coulddeal to the enemy during its lifetime. Another feature could bethe cost of building a unit, which takes advantage of the gamebalancing already performed by the game designers. Costlierunits are highly likely to be more useful, thus the player thathas a higher total unit cost has a better chance of winning.[8] describes a state-of-the-art evaluation method based onLanchester’s attrition laws that takes into account combat unittypes and their health.[9] presents a global evaluation function that takes intoaccount not just military features, but economic, spatial andplayer skill as well. [10] implements a Convolutional NeuralNetwork for game state evaluation that provides a significantincrease in accuracy compared to other methods, at the costof execution speed. How much the trade-off is worth dependson the search algorithm using it.A somewhat different state evaluation method involvesMonte Carlo simulations. Instead of invoking a static function,one could have a pair of fast scripts, either deterministic orrandomized, play out the remainder of the game, and assign apositive score to the winning player [6]. The rationale behindthis method is that, even if the scripts are not of high quality, asboth players are using the same policy, it is likely that whoeverwins more simulations is the one that was ahead in the firstplace. If running a simulation until the end of the game isinfeasible, a hybrid method can be used that performs a limitedplayout for a predetermined amount of frames, and then callsthe evaluation function. Evaluation functions are usually moreaccurate closer to the end of a game, when the game outcomeis easier to predict. Therefore, moving the application of theevaluation function to the end of the playout often results ina more accurate assessment of the value of the game state.F. Long Term PlansRTS game states tend to change gradually, due to actionstaking several frames to execute. To take advantage of thisslow rate of change, we assume that the game state doesn’tchange for a predefined amount of time and try to perform adeeper search than otherwise possible during a single frame.We can then use the generated solution (a series of choicesfor a script’s choice points) to control the game playing agent,while the search produces the next solution. We call thisapproach having a standing plan.Experiments reported in the following section investigatehow advantageous is the trade-off between computation timeand recency of the data being used to inform the search.III. E XPERIMENTS AND R ESULTSThe experiments reported below were performed in µRTS4 ,an abstract RTS game implemented in Java and designed totest AI techniques. It provides the core features of RTS games,while keeping things as simple as possible. In the basic setuponly four unit and two building types are supported (all ofthem occupying one map tile), and there is only one resourcetype. µRTS is a 2-player real-time game featuring simultaneous and durative actions, possibly large maps (althouth sizesof 8x8 to 16x16 are most common), and by default all statevariables are fully observable. µRTS comes with a few basicscripted players, as well as search-based players that implement several state-of-the-art RTS game search techniques. Thismakes it an useful tool for benchmarking new algorithms.Figure 2 shows an annotated screenshot.µRTS comes with four scripted players, each implementinga rush strategy with different unit types. A rush is a simple4 https://github.com/santiontanon/microrts

6strategy in which long term economic development is sacrificed for a quick army buildup and early assault on theopponent’s base. The first Puppet Search version we testedincludes a single choice point to select among these 4 existingscripts, generating a game tree with constant branching factor4. We call this version PuppetSingle. A slightly more complexscript was implemented as well. In addition to the choicepoint for selecting the unit type to build, PuppetBasic hasan extra choice point for deciding whether to expand (i.e.,build a second base) or not. Because this choice point is onlyactive under certain conditions, the branching factor is 4 or8, depending on the specific game state. Both ABCD andUCTCD search were used, with and without a standing plan,leading to a total of eight different agents.The algorithms used as a benchmark are Naı̈veMCTS andtwo versions of AHTNs: AHTN-F, the strongest one onsmall maps, and AHTN-P, the more scalable version. Thesealgorithms are described in detail in section IV.All experiments were conducted on computers equippedwith an Intel Core i7-2600 CPU @ 3.4 GHz and 8 GB ofRAM. The operating system was Ubuntu 14.04.5 LTS, andJava JDK 1.8.0 was used.µRTS was configured in a similar manner to experimentsreported in previous papers. A computational budget of 100mswas given to each player between every simulation frame. In[11], [12] games ended in ties after running for 3000 gameframes without establishing a winner, i.e. a player eliminatingall the other player’s units. On bigger maps this produces alarge number of ties, so we used different cutoff thresholdsaccording to the map sizes:SizeFrames8x8300016x16400024x24500064x648000 64x6412000Applying these tie rules produced tie percentages ranging from1.1% to 3.9% in our experiments.All algorithms share the same simple evaluation function,a randomized playout policy and a playout length of 100simulation frames ([11], [12]), which were chosen to easilycompare our new results with previously published results.The Puppet Search versions that use a standing plan have 5seconds of planning time between plan switches, which wasexperimentally determined to produce the strongest agent. TheUCTCD Puppet Search versions use exploration factor c 1 for the 8x8 map, 10 for the 16x16 map, and 0.1 for allothers, tuned experimentally. All other algorithms maintaintheir original settings from the papers in which they wereintroduced.The following maps were used in the experiments. Startingpositions with a single base were chosen because they areby far the most common in commercial RTS games. Unlessspecified, 24 different starting positions were used for eachmap.8x8: an 8x8 map with each player starting with one baseand one worker. This map has been used in previouspapers ([11], [12]).16x16: a 16x16 map with each player starting with

free to execute any action listed in the transition relation. So, in a sense, Puppet Search works like a puppeteer who controls the limbs (choice points) of a set of puppets (scripts). More formally, we can think