Transcription

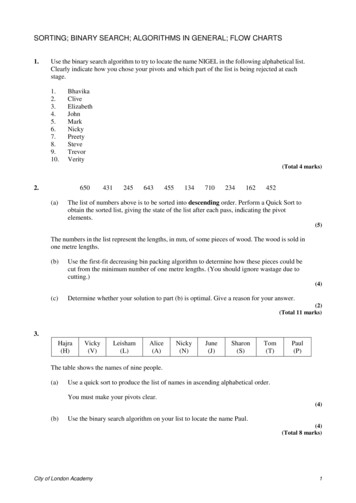

Algorithms for Optimization

Algorithms for OptimizationMykel J. KochenderferTim A. WheelerThe MIT PressCambridge, MassachusettsLondon, England

This work is licensed under a Creative Commons “Attribution-NonCommercialNoDerivatives 4.0 International” license. 2019 Massachusetts Institute of TechnologyCopyright in this monograph has been licensed exclusively to The MIT Press, http://mitpress.mit.edu.All inquiries regarding rights should be addressed to The MIT Press, Rights and Permissions Department.This book was set in TEX Gyre Pagella by the authors in LATEX.

To our families.

11.1A History1.2Optimization Process1.3Basic Optimization Problem1.4Constraints1.5Critical Points1.6Conditions for Local Minima1.7Contour tives and Gradients2.1Derivatives24567811171919

viii c on te n ts2.2Derivatives in Multiple Dimensions2.3Numerical Differentiation232.4Automatic 3.1Unimodality3.2Finding an Initial Bracket3.3Fibonacci Search3.4Golden Section Search3.5Quadratic Fit Search3.6Shubert-Piyavskii Method3.7Bisection Method3.8Summary3.9Exercises4Local Descent4.1Descent Direction Iteration4.2Line Search4.3Approximate Line Search4.4Trust Region Methods4.5Termination 454951515353545561636666 2019 Massachusetts Institute of Technology, shared under a under a Creative Commons CC-BY-NC-ND license.2021-05-10 23:08:45-07:00, revision e07b689, comments to bugs@algorithmsbook.com

c on te n ts5First-Order Methods5.1Gradient Descent5.2Conjugate Gradient5.3Momentum5.4Nesterov ypergradient Descent5.10Summary5.11Exercises6Second-Order Methods6.1Newton’s Method6.2Secant Method6.3Quasi-Newton Methods6.4Summary6.5Exercises7Direct Methods7.1Cyclic Coordinate Search7.2Powell’s Method7.3Hooke-Jeeves7.4Generalized Pattern 0102103 2019 Massachusetts Institute of Technology, shared under a under a Creative Commons CC-BY-NC-ND license.2021-05-10 23:08:45-07:00, revision e07b689, comments to bugs@algorithmsbook.com

xc on te n ts7.5Nelder-Mead Simplex Method1057.6Divided Rectangles7.7Summary7.8Exercises8Stochastic Methods8.1Noisy Descent8.2Mesh Adaptive Direct Search8.3Simulated Annealing8.4Cross-Entropy Method8.5Natural Evolution Strategies8.6Covariance Matrix Adaptation8.7Summary8.8Exercises9Population Methods9.1Initialization9.2Genetic Algorithms9.3Differential Evolution9.4Particle Swarm Optimization9.5Firefly Algorithm9.6Cuckoo Search9.7Hybrid 133137138142142147147148157158159161162165165 2019 Massachusetts Institute of Technology, shared under a under a Creative Commons CC-BY-NC-ND license.2021-05-10 23:08:45-07:00, revision e07b689, comments to bugs@algorithmsbook.com

c on te n ts10Constraints10.2Constraint Types10.110.310.410.510.6167Constrained OptimizationTransformations to Remove ConstraintsDuality177Penalty Methods10.9Interior Point Methods174178Augmented Lagrange Method10.10 Summary10.11 Exercises18318618318611Linear Constrained Optimization11.2Simplex Algorithm11.111.311.411.5Problem FormulationDual tive Optimization12.2Constraint Methods12.312.412.512.612.7Pareto OptimalityWeight Methods211Preference ElicitationExercises232211216218Multiobjective Population MethodsSummary1891891212.1169171Inequality Constraints10.710.8167168Lagrange Multipliersxi228221232 2019 Massachusetts Institute of Technology, shared under a under a Creative Commons CC-BY-NC-ND license.2021-05-10 23:08:45-07:00, revision e07b689, comments to bugs@algorithmsbook.com

xiic on te n ts13Sampling Plans23513.1Full Factorial13.2Random Sampling13.3Uniform Projection Plans13.4Stratified Sampling13.5Space-Filling Metrics23913.6Space-Filling Subsets24413.7Quasi-Random Sequences13.8Summary13.9Exercises14Surrogate Models14.1Fitting Surrogate Models14.2Linear Models14.3Basis Functions14.4Fitting Noisy Objective Functions14.5Model Selection14.6Summary14.7Exercises15Probabilistic Surrogate Models15.1Gaussian Distribution15.2Gaussian Processes15.3Prediction15.4Gradient 4274274275275277280282 2019 Massachusetts Institute of Technology, shared under a under a Creative Commons CC-BY-NC-ND license.2021-05-10 23:08:45-07:00, revision e07b689, comments to bugs@algorithmsbook.com

c on te n ts15.5Noisy Measurements15.6Fitting Gaussian Processes15.7Summary15.8Exercises16Surrogate Optimization29116.1Prediction-Based Exploration29116.2Error-Based Exploration16.3Lower Confidence Bound Exploration16.4Probability of Improvement Exploration16.5Expected Improvement Exploration16.6Safe Optimization16.7Summary16.8Exercises17Optimization under Uncertainty17.1Uncertainty17.2Set-Based Uncertainty17.3Probabilistic Uncertainty17.4Summary17.5Exercises18Uncertainty Propagation18.1Sampling Methods18.2Taylor Approximation18.3Polynomial 9312318318321321322323 2019 Massachusetts Institute of Technology, shared under a under a Creative Commons CC-BY-NC-ND license.2021-05-10 23:08:45-07:00, revision e07b689, comments to bugs@algorithmsbook.com

xivc on te n ts18.4Bayesian Monte Carlo33418.5Summary18.6Exercises19Discrete Optimization19.1Integer Programs19.2Rounding19.3Cutting Planes19.4Branch and Bound19.5Dynamic Programming19.6Ant Colony Optimization19.7Summary19.8Exercises20Expression Optimization20.1Grammars20.2Genetic Programming20.3Grammatical Evolution37020.4Probabilistic Grammars37520.5Probabilistic Prototype Trees20.6Summary20.7Exercises21Multidisciplinary Optimization21.1Disciplinary Analyses21.2Interdisciplinary 61364377382382387387389 2019 Massachusetts Institute of Technology, shared under a under a Creative Commons CC-BY-NC-ND license.2021-05-10 23:08:45-07:00, revision e07b689, comments to bugs@algorithmsbook.com

c on te n ts21.3Architectures21.4Multidisciplinary Design Feasible21.5Sequential Optimization21.6Individual Discipline Feasible21.7Collaborative Optimization21.8Simultaneous Analysis and Design21.9Summaryxv39339339639840340640721.10 l FlowA.4PackagesBTest Functions425B.1Ackley’s Function425B.2Booth’s Function426B.3Branin Function427B.4Flower Function428B.5Michalewicz FunctionB.6Rosenbrock’s Banana FunctionB.7Wheeler’s Ridge431B.8Circle Function432411420422423429430 2019 Massachusetts Institute of Technology, shared under a under a Creative Commons CC-BY-NC-ND license.2021-05-10 23:08:45-07:00, revision e07b689, comments to bugs@algorithmsbook.com

xvic on te n tsCMathematical ConceptsC.1Asymptotic NotationC.2Taylor ExpansionC.3ConvexityC.4NormsC.5Matrix CalculusC.6Positive DefinitenessC.7Gaussian DistributionC.8Gaussian 39439442442443447483495 2019 Massachusetts Institute of Technology, shared under a under a Creative Commons CC-BY-NC-ND license.2021-05-10 23:08:45-07:00, revision e07b689, comments to bugs@algorithmsbook.com

PrefaceThis book provides a broad introduction to optimization with a focus on practicalalgorithms for the design of engineering systems. We cover a wide variety ofoptimization topics, introducing the underlying mathematical problem formulations and the algorithms for solving them. Figures, examples, and exercises areprovided to convey the intuition behind the various approaches.This text is intended for advanced undergraduates and graduate studentsas well as professionals. The book requires some mathematical maturity andassumes prior exposure to multivariable calculus, linear algebra, and probabilityconcepts. Some review material is provided in the appendix. Disciplines wherethe book would be especially useful include mathematics, statistics, computerscience, aerospace, electrical engineering, and operations research.Fundamental to this textbook are the algorithms, which are all implementedin the Julia programming language. We have found the language to be ideal forspecifying algorithms in human readable form. Permission is granted, free ofcharge, to use the code snippets associated with this book, subject to the conditionthat the source of the code is acknowledged. We anticipate that others may wantto contribute translations of these algorithms to other programming languages.As translations become available, we will link to them from the book’s webpage.M y ke l J. Koc he n d e rfe rTi m A. W he e l e rStanford, Calif.October 30, 2018Ancillary material is available on the book’s imization

AcknowledgmentsThis textbook has grown from a course on engineering design optimization taughtat Stanford. We are grateful to the students and teaching assistants who havehelped shape the course over the past five years. We are also indebted to thefaculty who have taught variations of the course before in our department basedon lecture notes from Joaquim Martins, Juan Alonso, Ilan Kroo, Dev Rajnarayan,and Jason Hicken. Many of the topics discussed in this textbook were inspired bytheir lecture notes.The authors wish to thank the many individuals who have provided valuable feedback on early drafts of our manuscript, including Mohamed Abdelaty,Atish Agarwala, Ross Alexander, Piergiorgio Alotto, David Ata, Rishi Bedi, Felix Berkenkamp, Raunak Bhattacharyya, Hans Borchers, Maxime Bouton, EllisBrown, Abhishek Cauligi, Mo Chen, Zhengyu Chen, Vince Chiu, Anthony Corso,Holly Dinkel, Jonathan Cox, Katherine Driggs-Campbell, Thai Duong, HamzaEl-Saawy, Sofiane Ennadir, Kaijun Feng, Tamas Gal, Christopher Lazarus Garcia,Wouter Van Gijseghem, Michael Gobble, Robert Goedman, Jayesh Gupta, AaronHavens, Richard Hsieh, Zdeněk Hurák, Masha Itkina, Arec Jamgochian, BogumiłKamiński, Walker Kehoe, Mindaugas Kepalas, Veronika Korneyeva, Erez Krimsky, Petr Krysl, Jessie Lauzon, Ruilin Li, Iblis Lin, Edward Londner, Charles Lu,Miles Lubin, Marcus Luebke, Jacqueline Machesky, Ashe Magalhaes, ZouhairMahboubi, Pranav Maheshwari, Travis McGuire, Jeremy Morton, Robert Moss,Santiago Padrón, Jimin Park, Harsh Patel, Christian Peel, Derek Phillips, Brad Rafferty, Sidd Rao, Andreas Reschka, Alex Reynell, Stuart Rogers, Per Rutquist, RyanSamuels, Orson Sandoval, Jeffrey Sarnoff, Chelsea Sidrane, Sumeet Singh, NathanStacey, Ethan Strijbosch, Alex Toews, Pamela Toman, Rachael Tompa, ZachariaTuten, Raman Vilkhu, Yuri Vishnevsky, Julie Walker, Zijian Wang, Patrick Washington, Jacob West, Adam Wiktor, Brandon Yeung, Anil Yildiz, Robert Young,

xxa c kn ow l e d g me n tsJavier Yu, Andrea Zanette, and Remy Zawislak. In addition, it has been a pleasureworking with Marie Lufkin Lee and Christine Bridget Savage from the MIT Pressin preparing this manuscript for publication.The style of this book was inspired by Edward Tufte. Among other stylisticelements, we adopted his wide margins and use of small multiples. In fact, thetypesetting of this book is heavily based on the Tufte-LaTeX package by KevinGodby, Bil Kleb, and Bill Wood. We were also inspired by the clarity of thetextbooks by Donald Knuth and Stephen Boyd.Over the past few years, we have benefited from discussions with the core Juliadevelopers, including Jeff Bezanson, Stefan Karpinski, and Viral Shah. We havealso benefited from the various open source packages on which this textbookdepends (see appendix A.4). The typesetting of the code is done with the helpof pythontex, which is maintained by Geoffrey Poore. Plotting is handled bypgfplots, which is maintained by Christian Feuersänger. The book’s color schemewas adapted from the Monokai theme by Jon Skinner of Sublime Text. For plots,we use the viridis colormap defined by Stéfan van der Walt and Nathaniel Smith. 2019 Massachusetts Institute of Technology, shared under a under a Creative Commons CC-BY-NC-ND license.2021-05-10 23:08:45-07:00, revision e07b689, comments to bugs@algorithmsbook.com

1 IntroductionMany disciplines involve optimization at their core. In physics, systems are drivento their lowest energy state subject to physical laws. In business, corporationsaim to maximize shareholder value. In biology, fitter organisms are more likelyto survive. This book will focus on optimization from an engineering perspective,where the objective is to design a system that optimizes a set of metrics subject toconstraints. The system could be a complex physical system like an aircraft, or itcould be a simple structure such as a bicycle frame. The system might not even bephysical; for example, we might be interested in designing a control system foran automated vehicle or a computer vision system that detects whether an imageof a tumor biopsy is cancerous. We want these systems to perform as well aspossible. Depending on the application, relevant metrics might include efficiency,safety, and accuracy. Constraints on the design might include cost, weight, andstructural soundness.This book is about the algorithms, or computational processes, for optimization.Given some representation of the system design, such as a set of numbers encodingthe geometry of an airfoil, these algorithms will tell us how to search the spaceof possible designs with the aim of finding the best one. Depending on theapplication, this search may involve running physical experiments, such as windtunnel tests, or it might involve evaluating an analytical expression or runningcomputer simulations. We will discuss computational approaches for addressinga variety of challenges, such as how to search high-dimensional spaces, handlingproblems where there are multiple competing objectives, and accommodatinguncertainty in the metrics.

2 c ha p te r 1. i n trod u c ti on1.1A HistoryWe will begin our discus

xii contents 13 SamplingPlans 235 13.1 FullFactorial 235 13.2 RandomSampling 236 13.3 UniformProjectionPlans 237 13.4 StratiiedSampling 238 13.5 Space-FillingMetrics 239