Transcription

A Nugget-Based Evaluation ParadigmShahzad K. RajputVirgil PavluJaved A. AslamCollege of Computer and Information ScienceNortheastern University360 Huntington Ave, #202 WVHBoston, MA 02115, USA{rajput, vip, jaa}@ccs.neu.eduABSTRACTWe consider the problem of information retrieval relevanceassessment, which is central to the development of information retrieval systems such as search engines. The corefeature of the Cranfield paradigm and its variants, that theinformation relevant to a topic is encoded by documents,gives rise to several related problems, including scalability,reusability, and applicability. The common underlying causefor these problems is that the Cranfield paradigm relies oneffectively complete document relevance assessments in order for the test collection to be accurate and reusable.We propose a new information retrieval evaluation paradigmbased on relevant information as opposed to relevant documents. Once the relevant information is collected, any document can be assessed for relevance, and any retrieved list ofdocuments can be assessed for performance. Using TRECdata, we implement and test a method that, starting witha few relevant “nuggets”, finds (and correctly assesses) thevast majority of relevant documents found by TREC assessors, as well as many relevant documents not found by thoseassessors. We then show applications of these inferred relevance assessments to building highly accurate and reusabletest collections, as well as to training learning-to-rank algorithms.1.INTRODUCTIONWe consider the problem of information retrieval evaluation, which is central to the development of informationretrieval systems such as search engines.Collections of retrieval systems are traditionally evaluatedby (1) constructing a test collection of documents (the “corpus”), (2) constructing a test collection of queries (the “topics”), (3) judging the relevance of the documents to eachquery (the “relevance judgments”), and (4) assessing thequality of the ranked lists of documents returned by eachretrieval system for each topic using standard measures ofperformance such as precision-at-cutoff, nDCG, average precision, and so forth. Much thought and research has beendevoted to each of these steps in, for example, the annualtext retrieval conference TREC [20].For large collections of documents and/or topics, it is impractical to assess the relevance of each document to eachtopic. Instead, a small subset of the documents is chosen,and the relevance of these documents to the topics is assessed. When evaluating the performance of a collection ofretrieval systems, as in the annual TREC conference, thisjudged “pool” of documents is typically constructed by taking the union of the top c documents returned by each sys-tem in response to a given query. In TREC, c 100 hasbeen shown to be an effective cutoff in evaluating the relative performance of retrieval systems. Both shallower anddeeper pools have been studied [37, 20], both for TREC andwithin the greater context of the generation of large test collections. Pooling is an effective technique since many of thedocuments relevant to a topic will appear near the top ofthe lists returned by (quality) retrieval systems; thus, theserelevant documents will be judged and used to effectivelyassess the performance of the collected systems; unjudgeddocuments are assumed to be non-relevant.This Cranfield paradigm for information retrieval evaluation essentially operates in two phases: Phase 1, “CollectionConstruction”, constitutes Steps (1) through (3) above—documents, topics, and relevance assessments are all collected. Following Phase 1, we have a test collection that canbe used to evaluate the performance of systems in Phase 2,“Evaluation” (Step (4) above). Note that evaluation can beperformed on the systems that contributed to the pool, andperhaps even more importantly, it can be performed on newsystems that did not originally contribute to the pool. A testcollection is accurate if it correctly assesses the performanceof systems that contributed to the pool, and it is reusableif it correctly assesses the performance of new systems thatdid not originally contribute to the pool. That a test collection must be accurate is a given, but for a test collection tobe truly useful, it must also be reusable: New informationretrieval technologies will be tested against existing test collections, as happens continually with the various TREC collections, and for those assessments to be meaningful, thesetest collections must be reusable. In order for a Cranfieldparadigm test collection to be both accurate and reusable,the relevance assessments must be effectively complete. Inother words, the vast majority of relevant documents mustbe found and judged; otherwise, a novel retrieval systemcould return these unseen relevant documents, and the assessment of this system with respect to the test collectionwill be highly inaccurate. Unfortunately, the burden of effectively complete assessments is quite large; in TREC 8, forexample, 86,830 relevance judgments were collected in orderto build a test collection over a relatively small documentcollection for just 50 topics.1.1Inherent LimitationsThe key limitation of the Cranfield paradigm is that (1)during collection construction the information relevant toa topic is encoded by documents and (2) during evaluationthe information retrieved by a system is encoded by documents. Thus, in order to assess the performance of a system,

one must determine which relevant documents are retrieved(and how), and this necessitates effectively complete relevance judgments.Other retrieval tasks engender variants on the Cranfieldparadigm, but they all tend to retain the central featureabove, that the information relevant to a topic is encodedby documents. The difference is that other metadata is often collected which is specific to the retrieval task. For example, Graded Relevance was introduced in web search; instead of documents being “relevant” or “non-relevant”, theycan be “highly relevant”, “relevant”, “marginally relevant”,or “non-relevant”. However, the information relevant to atopic is still encoded by documents (together with their relevance grades), and the information retrieved by a systemis also encoded by documents. For Novelty and Diversitymeasurements, the information relevant to a query is encoded by documents, and the information retrieved by asystem is encoded by documents and their “marginal utility” with respect to previously retrieved documents, or associated “subtopics” and some measure of the coverage ofthose documents over the subtopics.This central feature of the Cranfield paradigm and itsvariants, that the information relevant to a topic is encoded by documents, resulted in hundred of thousands ofdocuments analyzed, both by governmental organizations(TREC, NTCIR) and large corporations (Google, Microsoft).Even so, and critical to evaluation, many relevant documentsare missed; ultimately, it gives rise to several related problems: Scalability: Given that the information relevant to atopic is encoded by documents, and given the necessityof effectively complete relevance assessments that thisentails for accurate and reusable evaluation, the Cranfield paradigm and its variants cannot scale to largecollections and/or topic sets. For example, the query“Barack Obama” yields 65,800,000 results on Googleas of December 2010, and it would be impossible tojudge all at any reasonable cost or in any reasonabletime. Reusability: The problem of scale directly gives riseto problems of reusability: (1) For a static collection,novel systems will retrieve unjudged but relevant documents, and the assessments of these systems will beinaccurate. (2) For dynamic collections (such as theWorld Wide Web), new documents will be added andold documents removed, rendering even statically constructed “effectively complete” relevance assessmentsincomplete over time, with an attendant loss in reusability. Applicability: It can be difficult to apply a test collection designed for one retrieval task and evaluationto another retrieval task or evaluation, especially fortest collections that are designed to “fix” the scaleand reusability issues described above using currentmethodologies. This issue is discussed below.1.2Related WorkVarious attempts to address the issues described abovehave been proposed. (1) Sampling techniques such as infAP[36], statAP [13], and their variants have been used extensively in various TREC tracks, including the Million QueryTrack, the Web Track, the Relevance Feedback Track, theEnterprise Track, the Terabyte Track, the Video Track, andthe Legal Track. These techniques are designed to directlyaddress the scale issue described above. A carefully chosensample of documents is drawn from the pool, these documents are judged, and a statistical estimate of the truevalue of a performance measure over that pool is derived.Given that accurate estimates can be derived using samplesas small as roughly 5% of the entire pool, these methods permit the use of pools roughly 20 times the size of standardfully-judged pools. This increases reusability, for example;however, it is only a stop-gap measure. These methods cannot scale to collections the size of the web (where potentially65 million documents are relevant to a query such as “BarackObama”), they only partially address the issue of dynamiccollections such as the web, and they reduce applicability inthat the samples drawn and estimates obtained are typicallytailored to specific evaluation measures such as average precision. (2) The Minimal Test Collection methodology [12]also employed in the TREC Million Query Track has generally similar benefits and drawbacks, as described above. (3)Crowd-sourcing relevance judgments, via Mechanical Turk,for example, has also been proposed to alleviate the scaleissue [1]. However, this too is only a stop-gap measure, inroughly direct proportion to the relative ease (in time orcost) of crowd-sourced judgments vs. assessor judgments: If10 to 100 crowd-sourced judgments can be obtained in thesame time or at the same cost as 1 assessor judgment, thenpools one to two orders of magnitude larger than standardpools can be contemplated, but this still does not scale tothe web or address the issue of dynamic collections, as described above. The use of click-through data has also beenproposed [30], but this is only applicable to the web andonly for those documents with sufficient “clicks”.The rest of the paper is organized as follows: We first describe our nuggets-based assessment methodology in detail.We then demonstrate its utility for test collection construction for evaluation, as well as for training collection construction for learning-to-rank. Finally, we summarize ourresults and describe future work.2.THE NUGGETS METHODConsider the web query “Barack Obama”. The overwhelming majority of the potentially 65 million documents relevantto this topic probably contain (variants of) the informationthat can be found in his biography (or even his Wikipediapage), which has a vastly smaller (and tractable) size. Furthermore, new web pages relevant to the query will likelyalso have this property. Thus, if we could collect and encode the vastly smaller information relevant to a topic, asopposed to the vastly larger and dynamically changing documents relevant to a topic, then we stand a much betterchance of addressing the issues of scalability, reusability, andapplicability described above.2.1ConceptWe propose a new information retrieval evaluation paradigmbased on relevant information as opposed to relevant documents. We refer to atomic units of relevant information as“nuggets” : they are the minimal or atomic units of relevantinformation, and they can range from simple answers suchas people’s names to full sentences or paragraphs, depending on the topic in question. The model effectively gives an

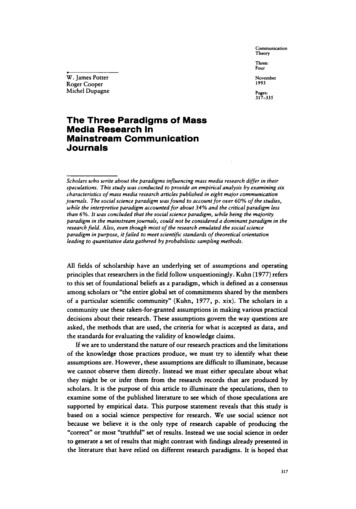

DOCUMENT COLLECTIONDOC SELECTORDOC OCSLABELEDDOCSMATCHINGDOCUMENT COLLECTIONDOCUMENTCOLLECTIONASSESSEDNUGGETSFigure 1: For a given query, selected documents are evaluated by human assessors as “relevant/nonrelevant”.Left: traditional TREC strategy for relevance. Right: proposed nuggets method.assessor the possibility to indicate as relevant only a tinyportion of the document.Figure 1 graphically illustrates the differences betweentraditional Cranfield-style evaluation methodologies (left)and the nugget-based methodology proposed (right). Thenuggets themselves are extremely relevant and useful piecesof information for a given topic; they are effectively the information that the user seeks. As a set, they yield a muchmore natural encoding of the information relevant to a topic.In principle, if this set is complete, we can use the nuggetsto infer the relevance of any document.Thus, in Phase 1 of our nugget-based evaluation paradigm(“Collection Construction”), we retain Steps (1) and (2) fromthe Cranfield paradigm and its variants (“corpus” and “topics”), but we replace Step (3), “relevance judgments”, withthe collection of nuggets instead. Our thesis is that whileeffectively complete document relevance judgment sets areimpractical (on a large scale) or impossible (in a dynamic environment), effectively complete nugget sets are much moretractable. Certainly the problem of collecting effectivelycomplete nugget sets is no harder than the problem of collecting effectively complete relevance judgment sets: any effectively complete set of relevant documents must collectively contain an effectively complete set of nuggets, by definition, and the judges would find these nuggets at the time ofassessment. However, an effectively complete set of relevantdocuments will contain vast quantities of highly redundantinformation (nuggets or their variants), and thus the effectively complete set of nuggets will likely be vastly smaller,more tractable, and more easily found with far fewer totaljudgments.In Phase 2 of our nugget-based evaluation paradigm (“Evaluation”), we dynamically assess the relevance of documentsretrieved by a system under evaluation, using the nuggetscollected. This is accomplished, in principle, by matching the information relevant to a topic (as encoded by thenuggets) with the documents in question. In implementation, we perform this “match” by adopting and modifyingtechniques from near-duplicate detection, though more sophisticated approaches are certainly possibly and worthy ofstudy. For small collections and/or recall-oriented measuresof performance, one could in principle automatically assessthe entire collection via nuggets. In practice, even if the setof nuggets collected is not complete, the inferred set of relevant documents is significantly larger than the set obtainedby assessing documents. The proposed method also permitsthe use of all standard measures of retrieval performance,and probably of all future measures of interest.We further note that this nugget-based evaluation paradigmcan be easily extended to accommodate other retrieval tasks: Learning to Rank: Using nuggets we can create largertraining sets (see the Learning-to-Rank section). Graded Relevance: Nuggets can be graded at the timeof extraction, in much the same manner that documents are graded, and the matching function cantake these nugget grades into account when assigninggrades to documents: the “stronger” the match withmore “relevant” nuggets, the “higher” the overall grade. Novelty: Nuggets could be clustered or categorized(see future work), either automatically or by the assessor. Then a document will be judged relevant ifit contains relevant information (as above), but itsmarginal utility will only be high if it contains relevant information not already seen in the output list,i.e., information from heretofore unseen nugget clusters or categories. Diversity: Nuggets can be assigned to aspects or subtopicsby the assessor. Then the coverage of a documentor list can determined by matching information acrossnugget aspect or subtopic classes, thus permitting diversitybased evaluation. Summarization: Documents retrieved can be clusteredand/or summarized based on what nuggets matched.2.2Background on Extracting and MatchingContentAllan et al. [2] study the relationship between the qualityof an Information Retrieval system and the effectiveness ofits users in performing a task. The users had to find, highlight and label answers facets of a given query. This study

Figure 2: The assessment and nugget extraction web interface powered by a MySQL database and PHP andPerl scripts.concludes that the users can work faster, find more facetsand make fewer errors with better retrieval.Most information extraction techniques depend on a setof domain dependent linguistic patterns; these patterns arematched against the documents in order to extract relevantinformation. Such patterns are either handcrafted (Knowledge Engineering Approach [32, 16]) or obtained automatically (Automatic Training Approach [28, 27]). Informationextraction with predicate argument structure has also beenexplored by researchers including [33]. The solution proposed in [29] for snippet extraction leverages a new semantic similarity measure to generate snippets that are basedon the given query.Matching text. Measures of text similarity, includingLevenshtein distance, cosine similarity, statistical models, ngram model, etc., have been used in a wide range of similarity related tasks ranging from ad-hoc retrieval to Duplicateand Plagiarism Detection [9, 8], passage retrieval, sentenceretrieval, snippet extraction, etc. Duplicate detection hasbeen applied to related tasks such as finding near-duplicateweb pages [21], text reuse detection on the web [7], andtracking the evolution of textual content on the web [5].The Shingling [9, 10] algorithm has also been applied to thenear-duplicate detection. Hoad and Zobel [22] experimentedwith various strategies of selecting k-grams when encodingshingles. In a recent work on near-duplicate detection [18]the authors introduced a method for learning document representation as a real-valued sparse k-gram vector, optimizedfor a specified similarity function. The algorithm presentedin [24] finds eficiently the shortest span of query terms in atext document in linear time.We note that nuggets of a somewhat different kind arewidely used in the evaluation of question answering systems [25, 26, 15, 35] and in a limited context for noveltyand diversity [14]. In the former case, the nuggets tend tobe very short and specific answers to “who”, “when”, and“where” type questions. In the latter case, nuggets were notused as the sole basis of evaluation, and vast quantities ofdocument judgments were still required.2.3Nugget extractionIn order to test the proposed framework, we conductedtwo separate user studies based on well-studied collections:TREC 8 adhoc (50 queries) and ClueWeb09 diversity (50queries).TREC 8 adhoc is a text based collection for which TRECassessed an average of about 1,736 documents per query.This collection is considered to have effectively complete assessments. ClueWeb09 diversity, on the other hand, is aweb-based collection with an average of about 528 documents assessed per query; it is not assumed to have effectively complete assessments. For each query from the TREC 8 adhoc collection, weemployed the statAP document selector [3] to obtain aselection of about 200 documents. The number of documents selected was approximately 11% of the full poolassessed by TREC; we call this (larger) sample “SampleLG”. For testing purposes, we also constructed asmaller sub-sample of about 100 documents per querywhich we call “SampleSM”. The design of the smallersample was such that every retrieval system is treatedfairly in terms of number of documents assessed; thisis not a guarantee for the larger sample. Similar to“SampleLG”, we created a sample of about 200 docu-

ments per query from ClueWeb09 diversity collection,which was approximately 38% of the full pool assessedby TREC; we call this sample “SampleCW”. For each relevant document, we performed nugget extraction using “amateur” assessors (primarily graduatestudents). Since we used samples much smaller thanthe full pool of judged documents, we spent considerably less effort overall than the effort that would havebeen required to judge the full pool.Assuming TREC assessors spent one minute per document, overall the entire TREC 8 qrel took about 36man-weeks. SampleLG, which is about 11% the sizeof entire TREC 8 qrel, by proportion required about 4man-weeks for binary relevance assessments. For therelevant documents found in the sample, we spent anadditional 2.1 man-weeks on extracting nuggets; thusthe total human effort required for our method on SampleLG is about 6.2 man-weeks.Under the same assumption, TREC spent about 11man-weeks in creating the entire ClueWeb09 qrel. SampleCW, which is about 38% the size of entire ClueWeb09qrel, required about 4 man-weeks. Nugget extractionfrom relevant documents in the sample took another1.6 man-weeks, for a total human effort on SampleCWof about 5.6 man-weeks.2.4Nugget MatchingWe implemented a matching algorithm based on a variantof shingle matching, which is often used in 34.5461.82Table 1: Sample statistics (query average)detection. A shingle is a sequence of k words from thenugget text. For example the nugget "John Kennedy waselected president in 1960" has the following shingles fork 3: (John Kennedy elected), (Kennedy elected president), and (elected president 1960). Note that the words“was” and “in” are stopwords and therefore removed.Given the set of nuggets, we computed a relevance scorefor each document by (1) computing a score for each shingle,(2) combining these shingle scores to obtain a score for eachnugget, and (3) combining these nugget scores to obtain ascore for the document: Shingle score: For any nugget and each shingle ofsize k, let S be the minimum span of words in thedocument that contains all shingle words in any order.A shingle matches well if it is contained in a smallspan. We define the shingle score as followsshingleScore λ(S k)/k . We implemented a matching algorithm in order to automatically infer the relevance of documents given thenuggets extracted. Each document received a relevance score, as described below.Our first step is to obtain a sample of documents. Whileany reasonable sample would do, we choose the statAP selection mechanism, since it has been shown practical is several settings, including past TREC tracks [13]. The sampling strategy is fully automatic, and it has the desirabletendency to more often select documents ranked highly bymany systems, and thus those documents are more likely tobe relevant.We built a web assessment interface (GUI, Figure 2) and aback-end database to store nuggets. The interface also permits encoding query aspects for diversity tasks, and viewing/judging HTML documents, however these features werenot used for TREC 8, since TREC 8 data is not appropriatefor them.Given the sample, using the web interface, a human judgeis asked to perform two tasks: (1) assess the document forrelevance and (2) for relevant documents, extract the relevant nuggets. The judge can optionally add keywords necessary for a nugget to be considered in a candidate document;for example the nugget “assassination November 22” maybe restricted to match only documents containing the keyword “Kennedy”. Assessors can also store nuggets slightlymodified from text, or with co-reference disambiguated. Somerelevant documents will not be interesting because they offerno new nuggets.On average, about 87 nuggets were extracted per queryfrom TREC 8 adhoc and about 62 nuggets were extractedper query from ClueWeb09 diversity (Table 1).Samplewhere λ is a fixed decay parameter. A shingle thatmatches “perfectly” will have a score of 1. Note that,in contrast to standard shingle matching used for duplicate detection, we do not require all shingle wordsto be present in the matching document in the sameorder or contiguously.Thus high scores indicate a match of known relevantinformation, and not of redundant or duplicate text;while a known nugget is required in a document fora good match, the matched document often containsnew/unknown relevant information, a significant difference to duplicate detection. Nugget score: To obtain a score for each nugget, weaverage the scores for each of its shingles.X1shingleScore(s)nuggetScore #shingless shingles Document score: Each document gets a relevancescore equal to the maximum matching score with anynugget:docScore 2.5maxn nuggetsnuggetScore(n)Results of the matching strategyWe employed the matching algorithm to infer the relevance of all documents in the TREC depth-100 pool (forTREC 8 adhoc) and those in the TREC depth-10 pool (forClueWeb09 diversity), given the nuggets extracted.In one assessment of our inferred relevance judgments, wesort the documents by their inferred relevance scores and examine prefixes of this list, which we call “SampleLG INF”(large sample plus inferred documents) when the matching used the nuggets from the large sample, by analogy“SampleSM INF” when the matching used nuggets from the

query 434 "Estonia Economy"3501*Relevant documents 1400Nonïrelevant documents incorrectly inferred as relevantFigure 3: Characteristic curve showing the relevantdocuments found (true positives) vs. non-relevantdocuments misclassified (false positives) for query434: “Estonia Economy”Result ListSampleLG INFSampleSM INFSampleCW INFMAP0.760.570.75789101112Table 2: Matching performance as retrieval function: Mean Average Precision separately for resulted retrieved list from matching with nuggetsfrom SampleLG, SampleSM and SampleCW.small sample, and “SampleCW INF” for the sample fromClueWeb09 diversity. Ideally, all of the relevant documentswould appear at the top of this list (with high scores) followed by the non-relevant documents (with low scores). InFigure 3, we show a typical result wherein we plot the number of relevant documents found (true positives on the yaxis) vs. non-relevant documents found (false negatives onthe x-axis) as the length of the prefix is increased. Notethe steep initial slope of the curve, indicating that a greatmany of the documents with high inferred relevance scoresare, indeed, relevant.The matching function can be seen as a retrieval modelthat uses given answers (“nuggets”) as seeds. Measuring itsmean average precision, we obtain 0.76 for SampleLG, 0.56for the SampleSM, and 0.75 for SampleCW. All these resultsare presented in Table 2. For further evidence that extraction of nuggets is a better assessment technique than justindicating relevance on documents, we make a comparisonwith relevance feedback strategies (where one reformulatesthe query by adding important terms from feedback relevantdocuments [31, 11, 19]): a BM25-based retrieval strategywith relevance feedback from SampleLG documents achievesa MAP 0.55.Given the nuggets extracted from SampleCW, we employed the matching algorithm to infer the relevance of documents in the TREC depth-300 pool for ClueWeb09 diversity,which is about 5891 documents per query. We specificallychose the ClueWeb09 data because it is web-based and overwhelming majority of documents relevant to the topic probably contain (variants of) the information collected in theform of nuggets. The matching algorithm inferred aboutSources of ErrorsJudging error: Judged non-relevant document is relevantJudging error: Judged relevant documentis non-relevantRelevant document inferred non-relevantdue to missing aspectNon-relevant document inferred relevantdue to partial match with long nuggetNon-relevant document inferred relevantby matching a nugget which lost meaningafter removing stopwords and stemmingRelevant document inferred non-relevant:not matching any nugget with a high score;a long nugget contains the matchNon-relevant document inferred relevant;nugget matched perfectly; definition of relevance is hard to expressNon-relevant document inferred relevant;nugget matched (in a longer span of text)with score 0.8;Relevant document matched nugget withscore 0.8 but not perfectlyRelevant document inferred non-relevantdue to missing synonym; Aspect existsNon-relevant document inferred relevantdue to matching a wrong nuggetNugget matches, document not on thetopicCount(%)36 10 64 (35.2%)2 (1.1%)4 (2.2%)7 (3.9%)5 (2.8%)49 (26.9%)6 (3.3%)12 (6.6%)18 (9.9%)15 (8.2%)Table 3: Failure Analysis. Percentages are computed out of total count excluding first two rows.430 documents relevant per query, about 360 out of these430 were unjudged.In order to validate the inferred relevant assessments, arandom sample of about 80 unjudged (but inferred relevant)documents per query were judged manually using the Amazon’s Mechanical Turk (mturk.com). The results of this experiment showed an agreement of 73.17% between the Mechanical Turk judges and our inferred assessments on theseunjudged documents. Each Mechanical Turk job was verified for quality 1 . Inter-assessor agreement has been investigated in a number of papers, including [6, 34]. To the bestof our knowledge, this 73.17% inter-assessor agreement ishigher than all agreement numbers previously reported byresearchers.Failure analysis. Next we performed an analysis in order to examine the false positive and false negative “mistakes” that occurred. In Table 3, we give a categorizationof about 230 mistakes, which are the greatest mismatch between the inferred and actual relevance, sampled across allqueries. The first two rows of “mistakes” (cases 1 and 2)correspond to what we believe are legitimate differences ofopinion between our assessors and the TREC assessors. Assuch, we do not count

ics"), but we replace Step (3), "relevance judgments", with the collection of nuggets instead. Our thesis is that while effectively complete document relevance judgment sets are impractical (on a large scale) or impossible (in a dynamic en-vironment), effectively complete nugget sets are much more tractable. Certainly the problem of .