Transcription

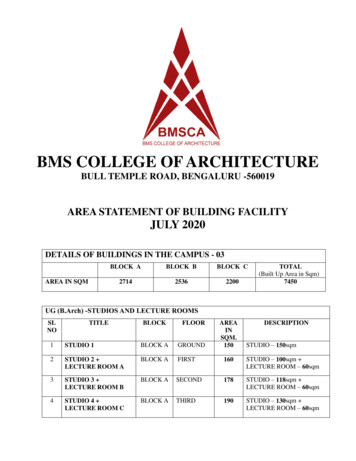

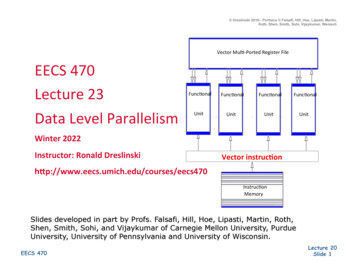

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischVector Mul:-Ported Register FileEECS 470Lecture 23Data Level tUnitUnitUnitWinter 2022Instructor: Ronald DreslinskiVector Instruc:onMemorySlides developed in part by Profs. Falsafi, Hill, Hoe, Lipasti, Martin, Roth,Shen, Smith, Sohi, and Vijaykumar of Carnegie Mellon University, PurdueUniversity, University of Pennsylvania and University of Wisconsin.EECS 470Lecture 20Slide 1

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischLatency, Bandwidth, and ParallelismLatency –Time to perform a single taskHard to make smallerBandwidth Number of tasks that can be performed in a given amount of :meEasier to make larger: overlap tasks, execute tasks in parallelOne form of parallelism: insn-level parallelism (ILP) EECS 470Parallel execu:on of insns from a single sequen:al programPipelining: overlap processing stages of different insnsSuperscalar: mul:ple insns in one stage at a :meHave seenLecture 20Slide 2

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischFundamental Problem with ILPClock rate and IPC are at odds with each other Pipelining – Fast clockIncreased hazards lower IPCWide issue –Higher IPCN2 bypassing slows down clockCan we get both fast clock and wide issue? Yes, but with a parallelism model less general than ILPData-level parallelism (DLP) EECS 470Single opera:on repeated on mul:ple data elementsLess general than ILP: parallel insns are same opera:onLecture 20Slide 3

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischData-Level Parallelism (DLP)for (I 0; I 100; I )Z[I] A*X[I] Y[I];L0: ldf X(r1),f1mulf f0,f1,f2ldf Y(r1),f3addf f2,f3,f4stf f4,Z(r1)addi r1,4,r1blti r1,400,L0// I is in r1// A is in f0One example of DLP: inner loop-level parallelism EECS 470Itera:ons can be performed in parallelLecture 20Slide 4

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischExploiting DLP With VectorsregfileI D BPV-regfileOne way to exploit DLP: vectors Extend processor with vector “data type”Vector: array of MVL 32-bit FP numbers EECS 470Maximum vector length (MVL): typically 8–64Vector register file: 8–16 vector registers (v0–v15)Lecture 20Slide 5

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischWhy Vectors?Vector instruc:ons allow deeper pipelines no intra-vector interlocksno intra-vector hazardsinner loop control hazards eliminatedneed not issue mul:ple instruc:onsvectors can present memory access pabern to hardwareWant deeper pipelines but EECS 470interlock logic is hard to divide into more stagesbubbles due to data hazard increasehard to issue mul:ple instruc:ons per cyclefetch&issue bobleneck (Flynn bobleneck)Lecture 20Slide 6

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischVector ArchitecturesVector-Register Machines EECS 470load/store architecturesvector opera:ons use vector register except ld/stregister ports cheaper than memory portsop:mized for small vectorsLecture 20Slide 7

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischVector Architectures (Cont.)Memory-Memory vector machines all vectors reside in memorylong startup latencymemory ports expensiveop:mized for long vectorsFact: most vectors are short EECS 470early machines were memory-memoryTI ASC, CDC STAR-100modern vector machines use vector-registersLecture 20Slide 8

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischSpatial vs. Temporal Vector DiscussionEECS 470Lecture 20Slide 9

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischVectorizing SAXPYldf X(r1),f1mulf f0,f1,f2ldf Y(r1),f3addf f2,f3,f4stf f4,Z(r1)addi r1,4,r1blti r1,400,0ldf.v X(r1),v1mulf.vs v1,f0,v2ldf.v Y(r1),v3addf.vv v2,v3,v4stf.v v4,Z(r1)addi r1,16,r1blti r1,400,0EECS 470ldf X(r1),f1mulf f0,f1,f2ldf Y(r1),f3addf f2,f3,f4stf f4,Z(r1)addi r1,4,r1blti r1,400,0ldf X(r1),f1mulf f0,f1,f2ldf Y(r1),f3addf f2,f3,f4stf f4,Z(r1)addi r1,4,r1blti r1,400,0ldf X(r1),f1mulf f0,f1,f2ldf Y(r1),f3addf f2,f3,f4stf f4,Z(r1)addi r1,4,r1blti r1,400,0Pack loop body into vector insns Horizontal packing changes execu:on orderAggregate loop control Add increment immediatesLecture 20Slide 10

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischA Would-Be MIPS-V ArchitectureStrongly based on CRAY-1Extend MIPS with vector instruc:ons original MIPS is a scalar unit32 integer and 32 FP registersEight vector registers (V0-V7) 64 double-precision FP each (4K bytes total)Five vector func:onal units FP , FP*, FP/, integer and logicalfully-pipeline with 2-20 stagesVector ld/st units EECS 470fully-pipelined with 10-50 stagesLecture 20Slide 11

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischMIPS-V InstructionsVector-vector instruc:ons operate on two vectorsproduce a third vectoraddvv1, v2, v3Vector-scalar instruc:ons operate on one vector and one scalaraddvv1, f0, v3Vector ld/st instruc:ons EECS 470ld/st a vector from memory into a vector registeroperates on con:guous addresseslv [r1], v1; v[I] M[r1 I]sv v1, [r1]; M[r1 I] v[I]Lecture 20Slide 12

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischMIPS-V Load/Storeld/st vector with stride vectors are not always con:guous in memoryadd non-unit stride on each accesslvws [r1,r2], v1; v[I] M[r1 I*r2]svws v1, [r1, r2]; M[r1 I*r2] v[I]ld/st indexed EECS 470indirect accesses through an index vectorlvws [r1,v2], v1; v[I] M[r1 v2[I]]svws v1, [r1, v2]; M[r1 v2[I]] v[I]Lecture 20Slide 13

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischVector Code ExampleDAXPY: double-precision a * x yfor (I 1; I 64;I )y[I] a*x[I] y[I]VLR 64ld [a], f0lv [rx], v1multv v1, f0, v2lv [ry], v3addv v2, v3, v4sv v4, [ry]EECS 4706 instruc:ons total as compared to600 MIPS instruc:ons!Lecture 20Slide 14

Strip Mining Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischNot all vectors are 64 elements longVector length register (VLR) controls length of vector opera:ons0 VLR MVL 64Strip mining EECS 470a loop of vector codeeach itera:on implements the code for 64 elementsLecture 20Slide 15

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischOther Vector OperationsUse masked vector register for vectorizing if statements a mask specifies which vector elements are operated oncan set the mask using logical compares (sltsv v1, f0)Scaber/gather EECS 470used for sparse arrays/matricesusing an index vector, gather elements into a vector registeroperate on the vectorput the vector backLecture 20Slide 16

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischVector PerformanceWhere does it come from? Fewer loop control insns: addi, blt, etc. RAW stalls taken only once, on “first itera:on” EECS 470Vector insns contain implicit loop controlVector pipelines hide stalls of “subsequent itera:ons”Lecture 20Slide 17

Amdahl’s Law Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischAmdahl’s law: the law of diminishing returns speeduptotal 1 / [%vector / speedupvector (1–%vector)]Speedup due to vectoriza:on limited by non-vector por@onIn general: op:miza:on speedup limited by unop:mized por:onExample: %opt 90% EECS 470speedupopt 10 speeduptotal 1 / [0.9/10 0.1] 5.3speedupopt 100 speeduptotal 1 / [0.9/100 0.1] 9.1Speedupopt speeduptotal 1 / [0.9/ 0.1] 10CRAY-1 rocked because it had fastest vector unit and the fastest scalar unitLecture 20Slide 18

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischExamples of SIMD / VectorIntel MMX, SSE, AVXARM NeonEECS 470Lecture 20Slide 19

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischBringing it All TogetherInstruc:on Level Parallelism – Superscalar Pipeline/OoO Have two or more pipelines in same processorpipelines need to work in tandem to improve single programperformanceThread Level Parallelism – Mul:-core Have two or more processors (Independent Pipelines)Need more programs or a parallel programdoes not improve single program performanceData Level Parallelism – Single Inst. Mul:ple Data (SIMD) Have two or more execu:on pipelines (ID- WB)Share the same fetch and control pipeline to save power(IF cont.)Similar to GPU’s20EECS 470Lecture 20Slide 20

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischILP Techniques: 1EECS 470WritebackLecture 20Slide 21

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischOther Techniques for ILP: Out ofOrder ExecutionElimina:ng stall condi:ons decreases CPIReorder instruc:ons to avoid stallsExample (5-stage LC2K pipeline):addlwnandaddEECS 4701233 2 16267451add 1 2 3lw 3 2 16add 4 5 1nand 2 6 7Lecture 20Slide 22

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischTLP Techniques: Instr.MemoryPCEECS ecture 20Slide 23

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischOther Techniques for TLP: ConvertingTLP to ILP through kMUXVirtual Mul:processor (Mul:-Threading or HyperThreading) EECS 470Duplicate the state (PC, Registers) but :me share hardwareUser/Opera:ng system see 2 cores, but only one execu:onUsed to hide long latencies (i.e. memory access to disk)Lecture 20Slide 24

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischDLP Techniques: Vector 5EECS 470Lecture 20Slide 25

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischBuilding a GPUAdd special func. units in EXto create Superscalar VLIWPCRFRFCombine TechniquesPCRFRFRFRFRF Data MemInst. MemRFSIMD MP MT SIMTMT used to hide memorylatenciesSIMD used to decreasepower of fetch/controlMP used to improvethroughput26EECS 470Lecture 20Slide 26

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischFollowing Slides Taken from John Nickolls @ Stanford CS193GEECS 470Lecture 20Slide 27

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischFermi SM increases instructionlevel parallelism512 CUDA Cores24,576 threadsHost CPUSystem MemoryBridgeCUDACoreMemory ControllerMemory ControllerMemory ControllerSMPolymorph EnginePolymorph EngineSMSMPolymorph EnginePolymorph EngineSMSMSMPolymorph EnginePolymorph EnginePolymorph EnginePolymorph EnginePolymorph EnginePolymorph EngineL2 CachePolymorph EngineSMPolymorph EngineSMPolymorph EngineSMGPCPolymorph EngineSMPolymorph EngineSMSMSMGPCRaster EngineRaster EngineSMMemoryPolymorph EngineGPCSMMemoryMemorySMMemoryGPCMemory ControllerMemoryRaster EngineMemory ControllerEECS 470Raster EngineMemory ControllerMemoryHost InterfaceGigaThread EngineSMLecture 20Slide 28

Dreslinski 2010-- Portions Falsafi, Hill, Hoe, Lipasti, Martin,Roth, Shen, Smith, Sohi, Vijaykumar, WenischSM parallel instruction executionInstruction CacheSIMT (Single Instruction Multiple Thread) execution Threads run in groups of 32 called warpsThreads in a warp share instruction unit (IU)HW automatically handles branch divergenceScheduler SchedulerDispatchDispatchRegister FileCore Core Core CoreCore Core Core CoreCore Core Core CoreHardware multithreading HW resource allocation & thread schedulingHW relies on threads to hide latencyCore Core Core CoreCore Core Core CoreCore Core Core CoreCore Core Core CoreCore Core Core CoreThreads have all resources needed to run Any warp not waiting for something can runWarp context switches are zero overheadLoad/Store Units x 16Special Func Units x 4Interconnect Network64K ConfigurableCache/Shared MemUniform CacheEECS 470Lecture 20Slide 29

Thread Level Parallelism - Mul:-core Have two or more processors (Independent Pipelines) Need more programs or a parallel program does not improve single program performance Data Level Parallelism - Single Inst. Mul:ple Data (SIMD) Have two or more execu:on pipelines (ID- WB)