Transcription

Presented bySimon WinbergAttribution-ShareAlike 4.0 International (CC BY-SA 4.0)Planned to be double period lecture

ParallelComputing Fundamentals Large Scale Parallelism Mainstream parallel Classic Parallel approaches Flynn’s Taxonomy Some Calculations Effectiveparallelism Parallel Efficiency Gustafson’s LawReminder: Review Microcoding activity

Y!A?* Parallel SystemsEEE4120F: Digital SystemsC?-X!B?

Anyone elsebeside mefeel thatway?Major softwareProcessor cores

CPUs at idle

this termHardwaree.g. PCBs, ASICsAdvantages: High speed &performance Efficient (possiblylower power than idleprocessors) ParallelizableDrawbacks: Expensive Static (cannot change)ReconfigurableComputere.g. IBM Blade, FPGA-basedcomputing platformAdvantages: Faster than software alone More flexible than software More flexible than hardware ParallelizableDrawbacks: Expensive Complex(both s/w& h/w)SoftwareProcessore.g. PC, embeddedsoftware onmicrocontrollerAdvantages: Flexible Adaptable Can be muchcheaperDrawbacks: The hardware is static Limit of clock speed Sequentialprocessing

�9th Gen Intel CPU Comparison — i5 vs i7 vs i9 Benchmarks”Core i9: “Intel Core i9 Explained“https://youtu.be/suQnh1TvGHw?list TLPQMjIwMjIwMjAkrwJfYkr9qA

SupplementaryreadingExciting Stuff!at a hefty price tagwhich a GPU will probably beat dramatically for most things(but some motivation for OpenCL)https://youtu.be/I0U6ZMeVrB4?list TLPQMjIwMjIwMjAkrwJfYkr9qA

Intel Core i9 and Core i7 i9Enhanced Intel SpeedStep Technology (EIST) i7 Extreme Edition vs. (regular) i7 processor Intel Xeon Processors 4/8cores; 8/16 threads; 40W to 130W(more cores, exponential growth in power) 1.86-3.3 Ghz CPU clock, 1066-1600 Mhz bus Intel Itanium Scalable.1/2/4 cores; hyperthreading* Allows designs up to 512 cores, 32/64bit Power use starts around 75W 1-2.53 Ghz (Itanium 9500 ‘Poulson’); QPI 6.4GT/s bus* Hyperthreading two virtual/logical processorsper core (more: eading-ht)Suggested clip: https://youtu.be/4HukCp3hxsMgigatransfers per second (GT/s)or megatransfers per second (MT/s): (somewhatinformal) number of operations transferring data thatoccurs each second in a given data-transfer channel.Also known as sample rate, i.e. num data samples captured per second,each sample normally occurring at the clock edge. [1]

Startedas 3-month “trial project” in2010 at UC Berkeley Wanted:A simple extensible ISA Problem: Commercial ISAs were toocomplex and presented IP legal issues Solution: Designed their own high-quality,license-free, royalty-free RISC ISARISC-V FoundersThe rest is(a fairly short)history Andrew WatermanYunsup LeeKrste Asanovic

RISC-V Isa high-quality, license-free, royalty-free RISC ISA A standard maintained by the non-profit RISC-VFoundation Suitable for all types of computing systems, frommicrocontrollers to supercomputers! RISC-V is available freely on a ‘permissive license’ RISC-V is not a company or a CPU implementation!Many companies getting involved seems RISC-V will become a serious player!possibly likened to ARM but free! (at least free for many types of uses)If you want to explore more or participate in RISC-V, see: https://riscv.orgIntel plans to manufacture RISC-V,: https://youtu.be/yU2MHsRMtZE (explains manufacturing IP designed elsewhere)Subtext: As much as I’m a fan of RISC-V, and open-hardware and open-source generally, this course isnevertheless following more commonly applied solutions used in industry and academic.

Most server class machines today are: PCclass SMP’s (Symmetric Multi-Processors *) 2, 4, 8 processors - cheap Run Windows & Linux Delux SMP’s 8to 64 processors Expensive:16-way SMP costs 4 x 4-way SMPsSMP offers all processors and memoryon a common front side bus (FSB –busthat connects the CPU andmotherboard subsystems).Applications: Databases, web servers, internetcommerce / OLTP (online transaction processing) Newer applications: technical computing, threatanalysis, credit card fraud. * Also termed “Shared Memory Processor”

Hundreds of processors, typically as SMPs clustersTraditionally Oftencustom built with government funding (costly!10 to 100 million USD) National / international resource Total sales tiny fraction of PC server sales Few independent software developers Programmed by small set ofmajorly smart people Later trends Cloudsystems Users from all over E.g. Amazon EC, Microsoft Azure

Someapplication examples Codebreaking (CIA, FBI) Weather and climate modeling / prediction Pharmaceutical – drug simulation, DNAmodeling and drug design Scientific research (e.g., astronomy, SETI) Defense and weapons development Large-scaleparallel systems are often usedfor modelling

Digital accelerator cards (including GPGPUs) are everincreasing in popularity, including use in data centres.(launchedend 2018)Xilinx Alevo: AdaptableAccelerator Cards for Data its/alveo.html(ended 2019)HP Intel 5110P Xeon Phi Coprocessor KitIntel Xeon PhiWhere and why it’s no longer being made,replaced by Xeon Scalable PlatformProgram with OpenCL or Xilinx’sowns accelerator design suite.And some of this it may involvedesigning specialized computearchitectures for the need (using acombination of languages and toolse.g. OpenCL / Verilog, C, R, etc.)Replaced by (from2020)Intel Ice Lake(10 nm process)10th generationcore, successorfor Xeon Phis.Core i7 1068G7 (2020 debut) 4 CPUcores 64 Iris GPCPU cores* What was it said in the “Berkeley Landscape” “Small is beautiful” and “manycore is the future of Computing”.

Classic ParallelEEE4120F

Single Program Multiple Data (SPMD) Multiple Program Multiple Data (MPMD) Consider it as running the same program, on different datainputs, on different computers (possibly) at the same timeConsider this one as running the same program withdifferent parameters settings, or recompiling the same codewith different sections of code included (e.g., uisng #ifdefand #endif to do this)Following this approach performance statistics can begathered (without necessarily any parallel code beingwritten) and then evaluated after the effect to deemthe feasibility of implementing a parallel (e.g. actualpthreads version) of the program.*Informally know as the lazy parallel programming model.

TermsEEE4120F

Observed speedup Wallclock time initial versionWallclock time refined version Wallclock time sequential (or gold)Wallclock time parallel versionParallel overhead: Amountof time to coordinate parallel tasks(excludes time doing useful work). Parallel overhead includes operations such as:Task/co-processor start-up time,Synchronizations, communications,parallelization libraries (e.g., OpenMP,Pthreads.so), tools, operating system, tasktermination and clean-up timeThe parallel overhead of the lazy parallel model could clearly be extreme, considering that is would rely onmanual intervention to (e.g.) partition and prepare the data before the program runs.

Embarrassingly Parallel Simultaneouslyperforming many similar, independenttasks, with little to no coordination between tasks. Massively Parallel Hardwarethat has very many processors (execution ofparallel tasks). Can consider this classification of 100000 parallel tasks. { Stupidly Parallel } Whilethis isn’t really an official term it typically relatesto instances where a big (and possibly very complex)coding effort is put into developing a solution that inpractice has negligible savings or worse is a whole lotslower (and possibly more erroneous/buggy) than if itwas just left as a simpler sequential implementation.

Introducing to some important termsrelated to shared memoryUsing scalar product pthreads typeimplementation for scenarios

[a1 a2 a1 a2 am-1]main()starts[b1 b2 b1 b2 bm-1]Thread1[am am 1 am 2 an]sum1[bm bm 1 bm 2 bn]Thread2Assuming a 2-core machinesum2Vectors inglobal / sharedmemorymain()sum sum1 sum2a1b122Memory accessby thread

[a1 a3 a5 a7 an-2]main()starts[b1 b3 b5 b7 bn-2]Thread1[a2 a4 a6 an-1]Vectors inglobal / sharedmemorysum1[b2 b4 b6 bn-1]Thread2main()sum sum1 sum2absum2Assuming a 2 core machine* ‘Data striping’, ‘interleaving’ and ‘interlacing’ usually means the same thing.Memory accessby thread

ContiguousPartitioned (or separated or split)Interleaved/interlaced (or alternating or data striping)Interleaved – small stride (e.g. one word stride)Interleaved – large strides (e.g. row of image pixels at a time)(the ‘stride’ in data interleaving or data striping refers to the size of the blocks alternated, generallythis is fixed but could be changeable, e.g. the last stride might be smaller to avoid padding data.)

TypeATypeFType Type TypeBCDTypeETypeGTypeJTypeHTypeIEEE4120FFlynns Taxonomy of ProcessorArchitectures

Flynn’s(1966) taxonomy was developedas a means to classify parallel computerarchitectures Computer system can be fit into one ofthe following four forms:SISDSingle InstructionSingle DataSIMDSingle InstructionMultiple DataMISDMultiple InstructionsSingle DataMIMDMultiple InstructionsMultiple DataMIMDNot to be confused with the terms of “Single Program Multiple Data (SPMD)” and“Multiple Program Multiple Data (MPMD)” mentioned earlier.

This is (obviously) the classic von NeumannComputer: serial (not parallel) computer, e.g.: Old style single core PC CPUs, e.g. i486Single instruction instruction stream acted on bythe CPU during any one clock cycle Onex 2 * (y z);Single data 0x1000 LD A,[0x2002] Onlyone input data stream for anyone clock cycle Deterministic execution0x1003 LD B,[0x2004]0x1006 ADD A,B0x1007 SHL A,10x1008 ST A,[0x2000]

A form of parallel computer Earlysupercomputers used this model first Nowadays it has become common – e.g., used inmodern computers on GPUs Single instruction Allprocessing units execute thesame instruction for any givenclock cycle Multiple data Eachprocessing unit canoperate on a different dataelementy [1 2 3 4]z [2 3 4 5]x 2 * (y z)LD AX,[DX 0]LD AX,[DX 3]LD BX,[EX 0]LD BX,[EX 3]ADD AX,BX ADD AX,BXSHL AX,1SHL AX,1ST AX,[CX 0]ST AX,[CX 3] CPU 1 CPU 4

Runs in lockstep (i.e., all elementssynchronized) Works well for algorithms with a lot ofregularity; e.g. graphics processing. Two main types: Processorarrays Vector pipelines Still highly deterministic (know the sameoperation is applied to specific set of data – butmore data to keep track of per instruction)

Vector pipelines Cray X-MP IBM9000, Cray X-MP,Fujitsu vector processor,NEC SX-2, Hitachi S820, ETA10 Processor arrays MasPar MP-1 ThinkingMachine CM2,MasPar MP-1 & MP-2,ILLIAC IV Graphics processor units usually use SIMD

Singledata stream fed into multipleprocessing units Each processing unit works on dataindependently via independentinstruction streams Few actual examples of this class ofparallel computer have ever existed

Possible uses? Somewhat intellectual? Maybe redundant! (see next slide)Possible example application: Differentset of signal processing operations workingthe same signal streamExample:Simultaneously find the min and max input, and do a sum of inputs.A inputx MAXINTx -MAXINTx 0If A x then x A If A x then x Ax x A CPU 1CPU 2CPU 3

The most common type of parallel computer (mostlate model computers, e.g. Intel Core Duo, in thiscategory)Multiple Instruction Multiple Data Each processor can be executing a different instructionstreamEvery processor may be working with a different data streamExecution can be asynchronous or synchronous; nondeterministic or deterministic

Examples Manyof the current supercomputers Networked parallel computer clusters SMP computers multi-core PCs AMD OpteronMIMD architectures could includeall the other models. e.g., SISD – just one CPU active, others running NOPSIMD – all CPUs load the same instruction butapply to different dataMISD – all CPUs load different instructionsbut apply it to the same dataIBM BlueGene

EEE4120FMaximum Effective Parallelism

Significant Tradeoff:Consideration for- hardware- softwareThis refers to the point of the numberof cores / amount of parallelismbeyond which additional parallelismprovides no further benefit or (worse)may reduce performance.

Significant mms cost and timeHardwarecores / available parallelismstartup timeThis refers to the point of the number ofcores / amount of parallelism beyond whichadditional parallelism provides no furtherbenefit or (worse) may reduce performance.cost of parallelismcores / available parallelismcores / available parallelismcores / available parallelism

Remember S TS / TPfrom Amdahl:(i.e. sequential time over parallel time) Youcan use these equations toapproximate behaviour of modelledsystems. E.g.: to represent speedup, commsoverhead, cost of equipment, etc. and usecalculations to estimate optimal selections.(simple example to follow)

Quickestimation for maximum effectiveparallelismSolution follows shortly!Please try it for your self, or work with a buddy to thinkabout how to respond to this question.

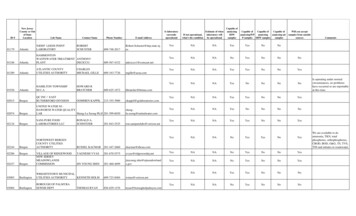

NProc (ms) Comms (ms) Total (ms)1100.0000 00166.2508086.250323.125160 163.125641.563320 321.563Could do some roughcalculations (or usebinary search) check:N 2,N 64,N 8,N 4

2nd part of Amdahl’s law videoUnderstanding Parallel Computing (Part 2): The Lawn Mower Law LinuxMagazineIf you haven’t already watched this please do!Amdahl2.flv

We can think of the efficiency of variousthings, e.g. power systems, motors, heatersetc. We can also think of the efficiency ofparallel computing Parallel Efficiency Definedas the:ratio of speedup (S) to the number of processors (p) Parallel Efficiency measures the fraction of timefor which a processor is usefully used. An efficiency of 1 is ideal ( 1 means you may beharnessing energy from another dimension )

Parallel Efficiency p number processorsSpTs exe time of the seq. alg.Tp exe time of the parallel alg.with p processors usedSp speedup(linear being the realistic ideal)super-linearspeedup(awesome!)linear speedupsub-linear speedupp (number cores)

Gustafson's law The theoretical speedup in latency of the executionof a task at fixed execution time that can beexpected of a system whose resources are improved. A follow-up to Amdahl's Lawformulation:Speedup S gained by N processors (instead of just one) for a task witha serial fraction s (not benefiting from parallelism) is as follows:S speedup, N cores, s serial portionUsing different variables, can be formulated as:Slatecy theoretical speedup in latency of the execution of the whole tasks speedup in latency of part of the task benefiting from improved parallelismp % of workload before the improvement that will be speeded up.* also referred to, more fairly, as the Gustafson–Barsis's law

Speedup in Latency (Slatency)This is a plot of2.5s going from 0.5 to 22speedup in latency (slatency)s 2p going from 10% to 100%As you can see, the latency isreduced (i.e. Slatency increased)as s increases and p increases.But for e.g. a real speedup of 2you actually need p 100% (i.e.the entire workload improved) toachieve it.s 1.51.5s 1.25s 11s 0.50.5s speedup in latency of part of the taskbenefiting from improved parallelisms 0.25000.20.40.60.811.2p % of workload before theimprovement that will be speeded up.percentage of workload (p %)Sl 0.25Sl 0.50Sl 1.00Sl 1.25Sl 1.50Sl 2.00Gustafson’s law can be more useful to real-timeembedded systems because it look more towardsimproving the response time of a system.

Glimpse into Next LectureAmdahl’s Law Revisited:Applying refinements for the heterogeneous,multicore era of computing .The next lecture helps you understand theprescribed reading, a highly influentialpaper, that brings Amdahl’s Law moreaccurately into the 21st century.

FREE Creative Commons LicenseCOUNTRY BOYMusic: https://www.bensound.com

Disclaimers and copyright/licensing detailsI have tried to follow the correct practices concerning copyright and licensing of material,particularly image sources that have been used in this presentation. I have put mucheffort into trying to make this material open access so that it can be of benefit to others intheir teaching and learning practice. Any mistakes or omissions with regards to theseissues I will correct when notified. To the best of my understanding the material in theseslides can be shared according to the Creative Commons “Attribution-ShareAlike 4.0International (CC BY-SA 4.0)” license, and that is why I selected that license to apply tothis presentation (it’s not because I particulate want my slides referenced but more toacknowledge the sources and generosity of others who have provided free material suchas the images I have used).Image sources:Clipart sources – public domain CC0 (http://pixabay.com/)PxFuel – CC0 orgImages from flickr

microcontrollers to supercomputers! RISC-V is available freely on a 'permissive license' RISC-V is nota company or a CPU implementation! If you want to explore more or participate in RISC-V, see: https://riscv.org. Many companies getting involved seems RISC-V will become a serious player! possibly likened to ARM but free!