Transcription

Computer-aided Ontology Development:an integrated environmentManuel Fiorelli, Maria Teresa Pazienza, Steve Petruzza, Armando Stellato, Andrea TurbatiART Research Group, Dept. of Computer Science,Systems and Production (DISP) University of Rome, Tor VergataVia del Politecnico 1, 00133 Rome, Italy{pazienza, stellato, turbati}@info.uniroma2.it{manuel.fiorelli, steve.petruzza}@gmail.comAbstractIn this paper we introduce CODA (Computer-aided Ontology Development Architecture), an Architecture and a Framework for semiautomatic development of ontologies through analysis of heterogeneous information sources. We have been motivated in its design byobserving that several fields of research provided interesting contributions towards the objective of augmenting/enriching ontologycontent, but that they lack a common perspective and a systematic approach.While in the context of Natural Language Processing specific architectures and frameworks have been defined, time is not yetcompletely mature for systems able to reuse the extracted information for ontology enrichment purposes: several examples do exist,though they do not comply with any leading model or architecture. Objective of CODA is to acknowledge and improve existingframeworks to cover the above gaps, by providing: a conceptual systematization of data extracted from unstructured information toenrich ontology content, an architecture defining the components which take part in such a scenario, and a framework supporting all ofthe above through standard implementations.This paper provides a first overview of the whole picture, and introduces UIMAST, an extension for the Knowledge Management andAcquisition Platform Semantic Turkey, that implements CODA principles by allowing reuse of components developed inside UIMAframework to drive semi-automatic Acquisition of Knowledge from Web Content.1. IntroductionA number of tasks focused on ontology development aswell as on augmentation or refinement of their contentthrough reuse of external information has been defined inthe last decade. The nature of these tasks is manifold:from the automation of ontology development processesto their facilitation through innovative and effectivesolutions for human-computer interaction. In some casestheir assessment has produced a plethora of (oftencontrasting) methodologies and approaches (as in the caseof ontology and lexicon integration (Buitelaar, et al.,2006; Cimiano, Haase, Herold, Mantel, & Buitelaar,2007; Pazienza & Stellato, 2006; Pazienza & Stellato,Linguistic Enrichment of Ontologies: a methodologicalframework, 2006)); in other ones, such as ontologylearning, it has lead to founding entire new branches ofresearch (Cimiano, 2006)The “external information” we are interested in, mostlyrefers to diverse forms of “narrative information sources”,such as text documents (or other kind of media, such asaudio and video) or to more structured knowledge content,like the one provided by machine readable linguisticresources. These latter comprise lexical resources (e.g.rich lexical databases such as WordNet (Miller, Beckwith,Fellbaum, Gross, & Miller, 1993), bilingual translationdictionaries or domain thesauri), text corpora (from puredomain-oriented text collections to annotated corpora ofdocuments), or other kind of structured or semi-structuredinformation sources, such as frame-based resources(Baker, Fillmore, & Lowe, 1998; Shi & Mihalcea, 2005).With the intent of providing a definition covering all ofthe previously cited tasks and addressing the interactionthey have with the above resources, we coined ), with this acronym covering all processesfor enriching ontology content through exploitation ofexternal resources, by using (semi)automatic approaches.In this paper, we lay the basis for an architecture (CODA:COD Architecture), supporting Computer-aided OntologyDevelopment, then introduce UIMAST, an extension forthe Knowledge Management and Acquisition PlatformSemantic Turkey, implementing CODA principles byallowing reuse of components developed inside the UIMAframework to drive semi-automatic Acquisition ofKnowledge from Web Content.2. State-of-the-art and MotivationMotivations and ideas for supporting fulfillment of theabove tasks’ objectives, have been often supportedthrough proof-of-concept systems, tools and in some casesopen platforms (Cimiano & Völker, 2005) developedinside the research community, laying the path andshowing the way for future industrial follow-up.Until now basic architectural definitions and interactionmodalities have been defined in detail fulfilling industrystandard level for processes such as:– ontology development with most recent ontologydevelopment tools following the path laid by Protégé(Gennari, et al., 2003)– text analysis starting from the TIPSTER architecture(Harman, 1992), its most notable implementationGATE (Cunningham, Maynard, Bontcheva, &Tablan, 2002) and the recently approved OASISstandard UIMA (Ferrucci & Lally, 2004).On the contrary a comprehensive study and synthesis ofan architecture for supporting ontology developmentdriven by knowledge acquired from external resources,has not been formalized until now.What lacks in all current approaches is an overallperspective on the task and a proposal for an architecture

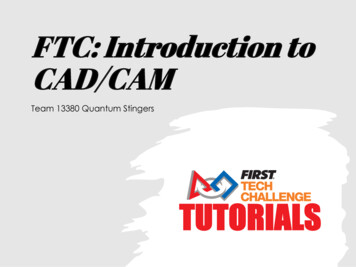

Analysis EnginesCollectionReaderAggregate Analysis EngineAnalysis EngineAnalysis EngineAE DescriptionsAnalysis EngineCASTSCODA Cas ConsumerIdentity ction disambiguationSmart SuggestionRDF Semantic RepositoryOntologyExternalRepositoryFigure 1. CODA Architecture (overview of components related to tasks 1 and 2)providing instruments for supporting the entire flow ofinformation (from acquisition of knowledge from externalresources to its exploitation) to enrich and augmentontology content. Just scoping to ontology learning,OntoLearn (Velardi, Navigli, Cucchiarelli, & Neri, 2005)provides a methodology, algorithms and a system forperforming different ontology learning tasks, OntoLT(Buitelaar, Olejnik, & Sintek, 2004) provides a ready-touse Protégé plugin for adding new ontology resourcesextracted from text, while the sole Text2Onto (Cimiano &Völker, 2005) embodies a first attempt to realize an openarchitecture for management of ontology learningprocesses.If we consider ontology-lexicon integration, previousstudies dealt with how to represent this integratedinformation (Peters, Montiel-Ponsoda, Aguado de Cea, &Gómez-Pérez, 2007; Buitelaar, et al., 2006; Cimiano,Haase, Herold, Mantel, & Buitelaar, 2007), other haveshown useful applications exploiting onto-lexicalresources (Basili, Vindigni, & Zanzotto, 2003; Peter,Sack, & Beckstein, 2006) though only few works(Pazienza, Stellato, & Turbati, 2008) dealt withcomprehensive framework for classifying, supporting,testing and evaluating processes for integration of contentfrom lexical resources with ontological knowledge.3. ObjectivesConsidering these expectations, we worked with theobjective of acknowledging and improving existingframeworks for Unstructured Information Management,thus providing:– a conceptual systematization of the tasks coveringreuse of data extracted from unstructured informationto improve ontology content–an architecture defining the components which takepart in such a scenario– a framework supporting all of the above throughstandard implementationsWe provide here requirements and objectives whichcharacterize COD tasks, the COD Architecture, and aCODA Framework3.1.COD TasksGiven the definition of COD provided at the beginning,we sketch here major related tasks:1. (Traditional) Ontology Learning tasks, devoted toaugmentation of ontology content through discoveryof new resources and axioms. They include discoveryof new concepts, concept inheritance relations,concept instantiation, properties/relations, domainand range restrictions, mereological relations orequivalence relations etc 2. Population of ontologies with new data: a rib of theabove, this focuses on the extraction of new grounddata for a given (ontology) model (or even forspecific concepts belonging to it)3. Linguistic enrichment of ontologies: enrichment ofontological content with linguistic informationcoming from external resources (eg. text, linguisticresources etc )3.2.CODA ArchitectureCOD Architecture (CODA, from now on) defines thecomponents (together with their interaction) which areneeded to support tasks above. This architecture builds ontop of existing standard for Unstructured InformationManagement UIMA (UIM Architecture) (tasks 1&2) and,for task 3, on the Linguistic Watermark (Pazienza,

Stellato, & Turbati, 2008) suite of ontology vocabulariesand software libraries for describing linguistic resourcesand the linguistic aspects of ontologies. Figure 1 depictsthe part of the architecture supporting tasks 1 and 2. Tinyarrows represent the use/depends on relationship, so thatthe Semantic Repository owl:imports the referenceontologies, the projection component invokes servicesfrom the other three components in the CODA CASConsumer as well as is driven by the projection documentand TS and reference ontology. Large arrows representinstead the flow of information.While UIMA already foresees the presence of CASConsumers1 for projecting collected data over any kind ofrepository (ontologies, databases, indices etc ), CODArchitecture expands this concept by providing groundanchors for engineering ontology enrichment tasks,decoupling the several processing steps whichcharacterize development and evolution of ontologies.This is our main original contribution to the framework.Here follows a description of the presented components.Projection ComponentThis is the main component which realizes the projectionof information extracted through traditional UIMcomponents (i.e. UIMA Annotations).The Unstructured Information Management (UIM)standard foresees data structures stored in a CAS(Common Analysis System). CAS data comprises a typesystem, i.e. a description – represented through featurestructures (Carpenter, 1992) – of the kind of entities thatcan be manipulated in the CAS, and the data (modeledafter the above type system) which is produced overprocessed information stream.This component thus takes as input:– A Type System (TS)– A reference ontology (we assume the ontology to bewritten in the RDFS or OWL W3C standard)– A projection document containing projection rulesfrom the TS to the ontology– A CAS containing annotation data representedaccording to the above TSand uses all the above in order to project UIMAannotations as data over a given Ontology Repository.The language for defining projections allows for:– Projecting CAS feature structures (FS) as instancesof a given class. FeaturePaths can be used to projectarbitrary feature values as instance names– Projecting FSs as values of datatype properties. Notethat this requires ontology instances to be elected assubjects for each occurrence of this propertyannotation. The domain class which will be used tolook for instance can be specified in the projectionrule. Note that, by default, the domain of the propertyis inherited from the ontology, though it may befurther restricted for the specific rule. So, forexample, if property date has owl:Thing as its domain1UIMA terminology is widely adopted along the paper: thoughsome explanations are provided here, we refer non-proficientreaders to the UIMA Glossary inside the UIMA Overview &SDK Setup document, which is available html(i.e. no domain restriction), the outcome of a specificAnalysis Engine, which is able to capture dates forconference events (or which is being used in a givensetting for this purpose), can be restricted in theprojection rule to automatically search for instancesof the restricted domain. The use that is made of theabove information is partially demanded to theapplication context, in order to properly select theright instances to be associated to the valuedproperty.– Projecting complex FSs as custom graph patterns.Some TS provide complex extraction patters whichcontains much more than plain text annotations; theypossibly provide facts with explicit semantics whichonly need to be properly imported into the ontology.In this case, custom RDF graph patterns can bedefined to create new complex relations inside theontology. GRAPH Patterns are sets of RDF triples, inthis case enriched by the presence of bindings to TSelements (again, in the form of FeaturePaths). Whenthis projection is being applied, the feature pathbindings are resolved and the ground pattern is usedin a SPARQL CONSTRUCT query to generate newRDF triples in the Semantic Repository).The Projection Component can be used in differentscenarios (from massively automated ontologylearning/population scenarios, to support in humancentered processes for ontology modeling/data entry) andits projection processes can be supported by the followingcomponents.Identity Resolution ComponentWhenever an annotation is projected towards ontologydata, the services of this component are invoked toidentify potential matches between the annotated infowhich is being reified into the semantic repository, andpreviously recognized resources already present inside it.If the Identity Resolution (IR) component discovers amatch, then the new entry is merged into the existing one;that is, any new data is added to the resource descriptionwhile duplicated information (probably the one whichhelped in finding the match) is discarded.The IR component may look up on the same repositorywhich is being fed by CODA though also externalrepositories of LOD (linked open data) can be accessed.Eventually, entity naming resolution provided by externalservices – such as the Entity Naming System (ENS)OKKAM (Bouquet, Stoermer, & Bazzanella, 2008) – maybe combined with internal lookup on the local repository.Input for this component are:– External RDF repositories (providing at least indexedapproximate search over their resources)– Entity Naming Systems access methods– Other parameters needed by specific implementationof the componentProjection Disambiguation Component(s)These components may be invoked by the ProjectionComponent to disambiguate between different possibleprojections. Projection documents may in fact describemore than one projection rule which can be applied togiven types in the TS. These components are thus, bydefinition, associated to entries in Projection Documents

and are automatically invoked when more than a rule ismatched on the incoming CAS data.This component has access by default to the currentSemantic Repository (and any reference ontology for theProjection rules), to obtain a picture of the ongoingprocess which can contribute to the disambiguationprocess.Smart Suggestion Component(s)These components help in proposing suggestions on howto fill empty slots in projection rules (such as subjectinstances in datatype property projections or free variablesin complex FS to graph-pattern projections). As forDisambiguation Components, these components can bewritten for specific Projection Documents and associatedto the rules described inside them, as supportingcomputational objects.3.3.CODA Framework ObjectivesCODA Framework is an effort to facilitate developmentof systems implementing the COD Architecture, byproviding a core platform and highly reusable componentsfor realization of COD tasks.Main objectives of this architectural framework are:1. Orchestration of all processes supporting COD tasks2. Interface-driven development of COD components3. Maximizing reuse of components and code4. Tight integration with available environments, suchas UIMA for management of unstructuredinformation from external resources (e.g. textdocuments) and Linguistic Watermark (Pazienza,Stellato, & Turbati, 2008) for management oflinguistic resources5. Minimizing required LOCs (lines of code) and effortfor specific COD component development, byproviding high level languages for matching/mappingcomponents I/O specifications instead of developingsoftware adapters for their interconnection6. Providing standard implementations for componentsrealizing typical support steps for COD tasks, such asmanagement of corpora, user interaction, validation,evaluation, production of reference data (oracles, goldstandards) for evaluation, identity discoverers etc In the specific, with respect to components described insection 3.2, CODA Framework will provide the mainProjection Component (and its associated projectionlanguage), a basic implementation of an IdentityResolution Component, and all the required business logicto fulfill COD tasks through orchestration of CODcomponents.4. Possible application scenariosWilling to fulfill these objectives, we envision severalapplication scenarios for CODA. We provide here adescription of a few of them.Fast Integration of existing UIMA components forontology populationBy providing projections from CAS type systems toontology vocabularies, one could easily embed standardUIMA AEs (Analysis Engines) and make them able topopulate ontology concepts pointed by the projections,without requiring developing any newsoftwarecomponent. These projections, which are part of objective5 above, will be modeled through a dedicated languagewhich will be part of the CODA framework. Moreover(objective 6 above), standard or customized identitydiscoverers will try to suggest potential matches betweenentities annotated by the AE and already existingresources in the target ontology, to keep identity ofindividual resources and add further description to them.In this scenario, given an ontology and a AE, only theprojection from the CAS type system of the AE to theontology is needed (and optionally, a customized identitydiscoverer).Everything else is assumed to beautomatically embedded and coordinated by theframework.Rapid prototyping of Ontology Learning AlgorithmsThis is the opposite situation of the scenario above.CODA, by reusing the same chaining of UIMAcomponents, ontologies, CAS-to-Ontology projections,identity discoverers etc , will provide:– a preconfigured CAS type system (OntologyLearning CAS Type System) for representinginformation to be extracted under the scope ofstandard ontology learning tasks (i.e. the onesdiscussed in section 3.1)– preconfigured projections from above CAS typesystem to learned ontology triples– extended interface definitions for UIMA analysisengines dedicated to ontology learning tasks:available abstract adapter classes will implement thestandard UIMA AnalysisComponent interface,interacting with the above Ontology Learning CAStype system and exposing specific interface methodsfor the different learning tasksIn this scenario, developers willing to rapidly deployprototypes for new ontology learning algorithms, will beable to focus on algorithm implementation and benefit ofthe whole framework, disburdening them from corporamanagement and generation of ontology data. This levelof abstraction far overtakes the Modeling PrimitiveLibrary of Text2Onto (i.e. a set of generic modelingprimitives abstracting from specific ontology modeladopted and being based on the assumption that theontology exposes at least a traditional object orienteddesign, such as that of OKBC (Chaudhri, Farquhar, Fikes,Karp, & Rice, 1998)). In fact CODA does not even leavesto the developer the task of generating new ontology data,while just asks for specific objects to be associated andthus produced for given ontology learning tasks. Forexample, pairs of terms could be produced by taxonomylearners, which need then to be projected as IS-A ortype-of relationships by the framework.Plugging of algorithms for automatic linguisticenrichment of ontologiesIn such a scenario, the user is interested in enrichingontologies with linguistic content originated from externallexical resources. The Linguistic Watermark library which is already been used in tools for (multilingual)linguistic enrichment of ontologies (Pazienza, Stellato, &Turbati, 2010) and which constitutes a fundamentalmodule of CODA - supports uniform access to

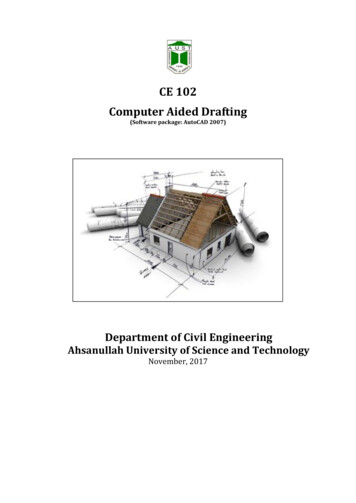

Figure 2. UIMAST Type System Editorheterogeneous resources wrapped upon a common modelfor lexical resource definition, allows for their integrationwith ontologies and for evaluation of the acquiredinformation. Once more, the objective is to relievedevelopers from technical details such as resource access,ontology interaction and update, by providing standardfacilities associated to tasks for ontology-lexiconintegration/enrichment, and thus leaving up to them thesole objective of implementing enrichment algorithms.User Interaction for Knowledge Acquisition andValidationUser interaction is a fundamental aspect when dealingwith decision-support systems. Prompting the user withcompact and easy-to-analyze reports on the application ofautomated processes, and putting at his hands instrumentsfor validating choices made by the system candramatically improve the outcome of processes forknowledge acquisition as well as support supervisedtraining of these same processes. CODA front-end toolsshould thus provide CODA specific applicationssupporting training of learning-based COD components,automatic acquisition of information from web pagesvisualized through the browser (or management of infopreviously extracted from entire corpora of documents)and editing of main CODA data structures (such as UIMACAS types, projection documents and, obviously,ontologies). Interactive tools should support iterativerefinement of massive production of ontology data as evolution.This last important environment is a further very relevantobjective, and motivated us to define and developUIMAST, an extension for Semantic Turkey (Griesi,Pazienza, & Stellato, 2007; Pazienza, Scarpato, Stellato,& Turbati, 2008), - a Semantic Web KnowledgeAcquisition and Management platform2 hosted on theFirefox Web Browser - to act as a CODA front-end fordoing interactive knowledge acquisition from web pages.5. UIMAST: A CODA-based tool supportingdynamic ontology populationThe UIMAST Project3 originated in late 2008, with theintent of realizing a system for bringing UIMA support toSemantic Turkey’s functionalities for KnowledgeAcquisition. The project has been organized around twomain milestones:– Supporting manual production of UIMA CAScompliant annotations– Reuse UIMA annotators to automatically extractinformation from web pages and project them overthe edited ontologyMilestone 1 has been reached in early 2009, with the firstrelease of UIMAST. This release features:1. A UIMA Type System Editor (figure 2 above), moreintuitive to use than the Eclipse-based one bundledwith UIMA, in that it provides a taxonomical view ofedited Feature Structures, showing explicit andinherited attributes for each Type.2. Interactive UIMA annotator: Semantic Annotationstaken through Semantic Turkey can be projected 0 is the officialpage on Firefox add-ons site addressing Semantic Turkeyextension, while http://semanticturkey.uniroma2.it/ provides aninside view about Semantic Turkey project, with updateddownloads, user manuals, developers support and access to tensions/uimast/. The idea forthe project has been awarded with IBM UIMA Innovation tware/dw/university/innovation/2007 uima recipients.pdf

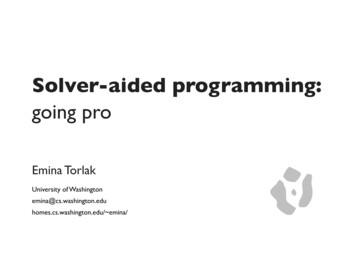

Figure 3. Editing Projections in UIMAST: from simple TS feature EmailAddress to ontology Datatype property emailUIMA Annotations. A xml based projectionlanguage4allows to project standard annotations takenagainst any domain ontology with respect to a givenType System. Currently there is no system supportingmanual production of UIMA annotations from WebPages. Annotations taken by human annotators can bereused to train machine-learning based AEs as well asto evaluate the output of AEs by producing goldenstandard annotated documents.Annotations taken through feature 2 can be exported indifferent formats, providing that their content can beprojected according to begin/end attributes of UIMAAnnotationBase feature. By default, UIMAST exploits xpointer annotations taken through the RangeAnnotator 5extension of Semantic Turkey.During Milestone 2, we produced a cross-SOFA6annotator which is able to parse content of specificdocument formats (such as HTML, PDF etc ) andproduce cross-annotations setting links between pure rawtext surrogates of analyzed documents and their or/6SOFA: Subject OF Analysis, a perspective over a (multimodal)artifact, see UIMA User guidesource formats. An HTML version of this annotator thusaccepts HTML documents, stores their content in adedicated HTML SOFA, then runs an HTML SAX parsererupting raw-text content which is stored in a dedicatedSOFA and cross-linked with the tag elements of theformer one.X-pointer annotations taken over the HTML page can thusbe easily aligned with annotations taken over raw-text.This alignment allows to produce standard char-offsetannotations starting from those manually taken with theinteractive UIMA annotator, as well as to projectautomatically generated annotations produced by UIMAAEs (which usually work over raw text content) overX-Pointer references; as a consequence they can bevisualized inside the same web page under analysis(which is the objective of milestone 2).Currently, the new release of UIMAST provides:1. A projection editor (figure 3: supporting only simpleClass and Property projections)2. A UIMA pear installer, able to load UIMA pearpackages3. The Visual Knowledge Acquisition Tool (KA Tool orsimply KAT).KAT provides visual anchors for users willing to semiautomatically import textual information present inside

Figure 4. Knowledge Acquisition with UIMASTweb pages into the current working ontology of SemanticTurkey. While knowledge acquisition in standardSemantic Turkey requires the user to perform manualwork (discovery of useful info and annotation) anddecision making (produce data from annotated elements),UIMAST KAT heavily exploits the backgroundknowledge available from the Type System of the loadedAEs and the projection document, as well as beneficiatingof support coming from CODA components in the form ofsmart suggestions, resolved identities etc ) thus speedingup the acquisition process in the direction of automatizingthe task.In an ordinary KA session, the user starts by defining thetool setup: this implies loading one or more UIMA pears7through the pear installer and then loading a projectiondocument associated to the currently edited ontology andthe imported pears (all of the above may be stored asdefault settings for the ontology project being edited sothat this process will not need to be repeated each time).After tool setup, the user can immediately inspect webpages containing interesting data which can be extractedby the loaded AEs. The KAT then highlights all the textsections of the web page which have been annotated bythe AEs. Each of these dynamically added highlights isnot a purely visual alteration of the underlying HTML, butan active HTML component providing fast-to-clickacceptance of proposed data acquisitions as well as morein-depth decision making procedures.As an example of integrated process involving differentresources in a user defined application, see figure 4 where7A UIMA components packagea simple Named Entity Recognizer (the one bundled withUIMA sample AEs) has been projected towards ontologyclass Person. The AE has been launched and namedentities discovered over the page have been highlighted.When the user passes with the mouse over one of thesehighlighted textual occurrences, the operation availablefrom the projection doc is shown, and the user can rightaway either authorize its execution, or modify its details.In the example in the figure, the user has been promptedwith the subtree rooted in the projected ontology class,and the user chooses to associate selected name to classResearcher instead of the more general Person. Should anidentity resolution component discover that the given textmay correspond to an existing resource (from the sameedited ontology or from an external ENS), then he maychoose to associate the taken annotation to it or reject itand create a new one.6. ConclusionThe engineering of complex processes involvingmanipulation, elaboration and transformation of data andsynthesis of knowledge is a recognized and widelyaccepted need, which lead in these years to thereformulation of tasks in terms of processing blocks otherthan (more than?) resolution steps. While traditionalresearch fields such as Natural Language Processing andKnowledge Representation/Management have now foundtheir standards, cross-boundary disciplines between thetwo need to find their way towards real applicability ofapproaches and proposed solutions. CODA aims at fillingthis gap by providing on the one hand a common

environment for ontology development throughknowledge acquisition, and on the other one by reusingthe many solutions and technologies which years ofresearch on these fields made easily accessible .We hope that the ongoing realization of CODA will leadto a more mature support for research in the fields of bothontology learning and ontology/lexicon interfaces, .7. ReferencesBaker, C., Fillmore, C., & Lowe, J. (1998). The BerkeleyFrameNet project. COLING-ACL. Montreal, Canada.Basili, R., Vindigni, M., & Zanzotto, F. (2003).Integrating Ontological and Linguistic Knowledge forConceptual Information Extraction.IEEE/WICInternational Conference on Web Intelligence.Washington, DC, USA.Bouquet, P., Stoermer, H., & Bazzanella, B. (2008). AnEntity Naming System for the Semantic Web. InProceedings of the 5th European Se

COD Architecture (CODA, from now on) defines the components (together with their interaction) which are needed to support tasks above. This architecture builds on top of existing standard for Unstructured Information Management UIMA (UIM Architecture) (tasks 1&2) and, for task 3, on the Linguistic Watermark (Pazienza,