Transcription

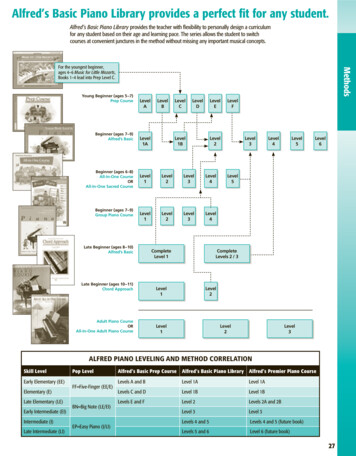

Advanced Operating SystemsMS degree in Computer EngineeringUniversity of Rome Tor VergataLecturer: Francesco QuagliaKernel level memory management1.2.3.4.The very base on boot vs memory managementMemory ‘Nodes’ (UMA vs NUMA)x86 paging supportBoot and steady state behavior of the memorymanagement system in the Linux kernel

Basic terminology firmware: a program coded on a ROM device, which canbe executed when powering a processor on bootsector: predefined device (e.g. disk) sector keepingexecutable code for system startup bootloader: the actual executable code loaded andlaunched right before giving control to the target operatingsystem this code is partially kept within the bootsector, andpartially kept into other sectors It can be used to parameterize the actual operatingsystem boot

Startup tasks The firmware gets executed, which loads in memory andlaunches the bootsector content The loaded bootsector code gets launched, which mayload other bootloader portions The bootloader ultimately loads the actual operatingsystem kernel and gives it control The kernel performs its own startup actions, which mayentail architecture setup, data structures and softwaresetup, and process activations To emulate a steady state unique scenario, at least oneprocess is derived from the boot thread (namely the IDLEPROCESS)

Where is memory management there? All the previously listed steps lead to change the image ofdata that we have in memory These changes need to happen just based on variousmemory handling policies/mechanisms, operating at: Kernel load time Kernel initialization (including kernel level memorymanagement initialization) Kernel common operations (which make large usageof kernel level memory management services thathave been setup along the boot phase)An initially loaded kernel memory-image is not the same as theone that common applications see’’ when they are activated

Traditional firmware on x86 It is called BIOS (Basic I/O System) Interactive mode can be activated via proper interrupts(e.g. the F1 key) Interactive mode can be used to parameterize firmwareexecution (the parameterization is typically kept viaCMOS rewritable memory devices powered by appositetemporary power suppliers) The BIOS parameterization can determine the order forsearching the boot sector on different devices A device boot sector will be searched for only if the deviceis registered in the BIOS list

Bios bootsector The first device sector keeps the so called master bootrecord (MBR) This sector keeps executable code and a 4-entry tables,each one identifying a different device partition (in termsof its positioning on the device) The first sector in each partition can operate as thepartition boot sector (BS) In case the partition is extended, then it can additionallykeep up to 4 sub-partitions (hence the partition bootsector can be structured to keep an additional partitioningtable) Each sub-partition can keep its own boot sector

RAM image of the MBRGrub (if you use it) or others

An example scheme with BiosBoot codePartition tableBootpartitionPartition 1Partition 3 (extended)Boot sectorExtended partition boot recordNowadays huge limitation:the maximum size ofmanageable disks is 2TB

UEFI – Unified Extended Firmware Interface It is the new standard for basic system support (e.g. bootmanagement) It removes several limitations of Bios: We can (theoretically) handle disks up to 9 zettabytes It has a more advanced visual interface It is able to run EFI executables, rather than simplyrunning the MBR code It offers interfaces to the OS for being configured(rather than being exclusively configurable bytriggering its interface with Fn keys at machinestartup)

UEFI device partitioning Based on GPT (GUID Partition Table) GUID Globally Unique Identifier . Theoretically allover the world (if the GPT has in its turn a uniqueidentifier) Theoretically unbounded number of partitions kept in thistable – No longer we need extended partitions forenlarging the partitions’ set GPT are replicated so that if a copy is corrupted thenanother one will work – this breaks the single point offailure represented by MBR and its partition table

Bios/UEFI tasks upon booting the OS kernel The bootloader/EFI-loader, e.g., GRUB loads in memory theinitial image of the operating system kernel This includes a machine setup code’’ that needs to run before theactual kernel code takes control This happens since a kernel configuration needs given setup in thehardware upon being launched The machine setup code ultimately passes control to the initialkernel image In Linux, this kernel image executes starting from thestart kernel() in the init/main.c This kernel image is way different, both in size and structure, fromthe one that will operate at steady state Just to name one reason, boot is typically highly configurable!

What about boot on multi-core machines The start kernel() function is executed along asingle CPU-core (the master) All the other cores (the slaves) only keep waiting that themaster has finished The kernel internal function smp processor id()can be used for retrieving the ID of the current core This function is based on ASM instructions implementing ahardware specific ID detection protocol This function operates correctly either at kernel boot or atsteady state

The actual support for CPU-coreidentification

Actual kernel startup scheme in LinuxCore-0Core-1 Core-2Core-(n-1) .code inhead.S(or variants)start kernelSYNC

An example head.S code snippet:triggering Linux paging (IA32 case)/* * Enable paging */ 3:movl swapper pg dir- PAGE OFFSET,%eaxmovl %eax,%cr3 /* set the page tablepointer. */movl %cr0,%eaxorl 0x80000000,%eaxmovl %eax,%cr0 /* .and set paging (PG) bit*/

Hints on the signature of thestart kernel function (as well as others) init start kernel(void)This only lives in memory during kernel boot (or startup)The reason for this is to recover main memory storage which is relevantbecause of both: Reduced available RAM (older configurations) Increasingly complex (and hence large in size) startup codeRecall that the kernel image is not subject to swap out(conventional kernels are always resident in RAM )

Management of init functions The kernel linking stage locates these functions onspecific logical pages (recall what we told about thefixed positioning of specific kernel level stuff in thekernel layout!!) These logical pages are identified within a “bootmem”subsystem that is used for managing memory when thekernel is not yet at steady state of its memorymanagement operations Essentially the bootmem subsystem keeps a bitmaskwith one bit indicating whether a given page has beenused (at compile time) for specific stuff

still on bootmem The bootmem subsystem also allows for memoryallocation upon the very initial phase of the kernelstartup In fact, the data structures (e.g. the bitmaps) it keepsnot only indicate if some page has been used forspecific data/code They also indicate if some page which is actuallyreachable at the very early phase of boot is not usedfor any stuff These are clearly “free buffers” exploitable upon thevery early phase of boot

An exemplified picture of bootmemDataCompact status ofbusy/free buffers (pages)0x80000000freeLink in a single imagefreeCode.Bootmembitmapfree0xc0800000Logical pages immediately reachable atthe very early phase of kernel startup

The meaning of reachable page’’ The kernel software can access the actual content of the page(in RAM) by simply expressing an address falling into thatpage The point is that the expressed address is typically a virtualone (since kernel code is commonly written using pointer’’abstractions) So the only way we have to make a virtual address correspondto a given page frame in RAM is to rely on at least a pagetable that makes the translation (even at the very early stage ofkernel boot) The initial kernel image has a page table, with a minimumnumber of pages mapped in RAM, those handled by thebootmem subsystem

How is RAM memory organized onmodern (large scale/parallel) machines? In modern chipsets, the CPU-core count continuouslyincreases However, it is increasingly difficult to build architectureswith a flat-latency memory access (historically referred toas UMA) Current machines are typically NUMA Each CPU-core has some RAM banks that are close andother that are far Generally speaking, each memory bank is associated witha so called NUMA-node Modern operating systems are designed to handle NUMAmachines (hence UMA as a special case)

Looking at the Linux NUMA setup viaOperating System facilities A very simple way is the numactl command It allows to discover How many NUMA nodes are present What are the nodes close/far to/from any CPU-core What is the actual distance of the nodes (from theCPU-cores)Let’s see a few ‘live’ examples .

Actual kernel data structures formanaging memory Kernel Page table This is a kind of ‘ancestral’ page table (all the others aresomehow derived from this one) It keeps the memory mapping for kernel level code anddata (thread stack included) Core map The map that keeps status information for any frame(page) of physical memory, and for any NUMA node Free list of physical memory frames, for any NUMA nodeNone of them is already finalized when westartup the kernel

A schemeFree listFree listCoremapFrames’zone xStatus (free/busy)of memory framesmov (%rax), %rbxKernel pagetableTarget frameFrames’zone y

Objectives of the kernel page table setup These are basically two: Allowing the kernel software to use virtual addressed whileexecuting (either at startup or at steady state) Allowing the kernel software (and consequently theapplication software) to reach (in read and/or write mode)the maximum admissible (for the specific machine) oravailable RAM storage The finalized shape of the kernel page table is thereforetypically not setup into the original image of the kernelloaded in memory, e.g., given that the available RAM todrive can be parameterized

A schemeRange of linearaddressesreachable whenswitching toprotected modeplus pagingPassage from the compile-timedefined kernel-page tableto the boot time reshuffled oneRange of linearaddressesreachable whenrunning at steadystateIncreaseof the sizeof reachableRAM locations

Directly mapped memory pages They are kernel level pages whose mapping onto physicalmemory (frames) is based on a simple shift between virtual andphysical address PA (VA) where is (typically) a simple function subtractinga predetermined constant value to VA Not all the kernel level virtual pages are directly mappedKernel s

Virtual memory vs boot sequence (IA32 example) Upon kernel startup addressing relies on a simple single levelpaging mechanism that only maps 2 pages (each of 4 MB) up to8 MB physical addresses The actual paging rule (namely the page granularity and thenumber of paging levels – up to 2 in i386) is identified viaproper bits within the entries of the page table The physical address of the setup page table is kept within theCR3 register The steady state paging scheme used by LINUX will be activatedduring the kernel boot procedure The max size of the address space for LINUX processes on i386machines (or protected mode) is 4 GB 3 GB are within user level segments 1 GB is within kernel level segments

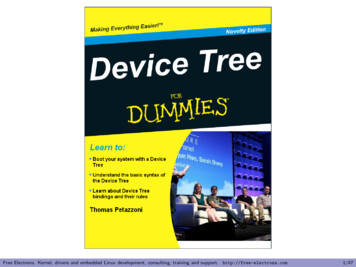

Details on the page table structure in i386 (i)1 Level pagingaddress 10 bits page number,22 bits page offset 2 Levels paging 20 bits page number,12 bits page offset 10 bits page section,10 bits actual page PT(E) physical 4MB frame address PD(E)PT(E) physical 4KB frame address

Details on the page table structure in i386 (ii) It is allocated in physical memory into 4KB blocks, which canbe non-contiguous In typical LINUX configurations, once set-up it maps 4 GBaddresses, of which 3 GB at null reference and (almost) 1 GBonto actual physical memory Such a mapped 1 GB corresponds to kernel level virtualaddressing and allows the kernel to span over 1 GB ofphysical addresses To drive more physical memory, additional configurationmechanisms need to be activated, or more recent processorsneeds to be exploited as we shall see

i386 memory layout at kernel startup for kernel 2.4code8 MB (mapped on VM)dataPage tableWith 2 validentries only(each one for a4 MB page)freeX MB (unmapped on VM)

Actual issues to be tackled1. We need to reach the correct granularity for paging (4KBrather than 4MB)2. We need to span logical to physical address across the whole1GB of kernel-manageable physical memory3. We need to re-organize the page table in two separate levels4. So we need to determine ‘free buffers’ within the alreadyreachable memory segment to initially expand the page table5. We cannot use memory management facilities other thanpaging (since core maps and free lists are not yet at steadystate)

Back to the concept of bootmem1. Memory occupancy and location of the initial kernel image isdetermined by the compile/link process2. A compile/link time memory manager is embedded into thekernel image, which is called bootmem manager3. It relies on bitmaps telling if any 4KB page in the currentlyreachable memory image is busy or free4. It also offers API (to be employed at boot time) in order to getfree buffers5. These buffers are sets of contiguous (or single) page alignedareas6. As hinted, this subsystem is in charge of handling initmarked functions in terms of final release of the correspondingbuffers

Kernel page table collocation within physicalmemoryCodedataPage table(formed by 4 KBnon-contiguousblocks)free1 GB (mapped on VM startingfrom 3 GBwithin virtualaddressing)

Low level “pages”Load undersized page table(kernel page size not finalized: 4MB)Finalize kernel handled- 4 KB (1K entry)page size (4KB)Expand page table viaboot mem low pages(not marked in the page table)- compile time identificationKernel boot

LINUX paging vs i386 LINUX virtual addresses exhibit (at least) 3 indirection levelspgdpmdpteoffsetPhysical(frame) adressPage GeneralDirectoryPage MiddleDirectoryPage TableEntries On i386 machines, paging is supported limitedly to 2 levels (pde,page directory entry – pte, page table entry) Such a dicotomy is solved by setting null the pmd field, which isproper of LINUX, and mapping pgd LINUX on pde i386 pte LINUX on pte i386

i386 page table size Both levels entail 4 KB memory blocks Each block is an array of 4-byte entries Hence we can map 1 K x 1K pages Since each page is 4 KB in sixe, we get a 4 GB virtual addressingspace The following macros define the size of the page tables blocks (theycan be found in the file include/asm-i386/pgtable2level.h) #define PTRS PER PGD #define PTRS PER PMD #define PTRS PER PTE102411024 the value1 for PTRS PER PMD is used to simulate the existenceof the intermediate level such in a way to keep the 3-level orientedsoftware structure to be compliant with the 2-level architecturalsupport

Page table data structures A core structure is represented by the symbol swapper pg dirwhich is defined within the file arch/i386/kernel/head.S This symbol expresses the virtual memory address of the PGD (PDE)portion of the kernel page table This value is initialized at compile time, depending on the memorylayout defined for the kernel bootable image Any entry within the PGD is accessed via displacement starting fromthe initial PGD address The C types for the definition of the content of the page tableentries on i386 are defined in include/asm-i386/page.h They aretypedef struct { unsigned long pte low; } pte t;typedef struct { unsigned long pmd; } pmd t;typedef struct { unsigned long pgd; } pgd t;

Debugging The redefinition of different structured types, which are identical insize and equal to an unsigned long, is done for debuggingpurposes Specifically, in C technology, different aliases for the same typeare considered as identical types For instance, if we definetypedef unsigned long pgd t;typedef unsigned long pte t;pgd t x; pte t y;the compiler enables assignments such as x y and y x Hence, there is the need for defining different structured types whichsimulate the base types that would otherwise give rise to compilerequivalent aliases

i386 PDE entriesNothing tells whether we can fetch (so execute)from there

i386 PTE entriesNothing tells whether we can fetch (so execute)from there

Field semantics Present: indicates whether the page or the pointed page table isloaded in physical memory. This flag is not set by firmware (ratherby the kernel) Read/Write: define the access privilege for a given page or a set ofpages (as for PDE) . Zero means read only access User/Supervisor: defines the privilege level for the page or for thegroup of pages (as for PDE). Zero means supervisor privilege Write Through: indicates the caching policy for the page or the setof pages (as for PDE). Zero means write-back, non-zero meanswrite-through Cache Disabled: indicates whether caching is enabled or disabledfor a page or a group of pages. Non-zero value means disabledcaching (as for the case of memory mapped I/O)

Accessed: indicates whether the page or the set of pages has beenaccessed. This is a sticky flag (no reset by firmware). Reset iscontrolled via software Dirty: indicates whether the page has been write-accessed. This isalso a sticky flag Page Size (PDE only): if set indicates 4 MB paging otherwise 4 KBpaging Page Table Attribute Index: . Do not care Page Global (PTE only): defines the caching policy for TLB entries.Non-zero means that the corresponding TLB entry does notrequire reset upon loading a new value into the page table pointerCR3

Bit masking in include/asm-i386/pgtable.h there exist some macrosdefining the positioning of control bits within the entries of the PDEor PTE There also exist the following macros for masking and setting thosebits #define #define #define #define #define #definePAGE PRESENTPAGE RWPAGE USERPAGE PWTPAGE PCDPAGE ACCESSED #define PAGE DIRTY0x0010x0020x0040x0080x0100x0200x040 /* proper of PTE */ These are all machine dependent macros

An examplepte t x;x ;if ( (x.pte low) & PAGE PRESENT){/* the page is loaded in a frame */}else{/* the page is not loaded in anyframe */} ;

Relations with trap/interrupt events Upon a TLB miss, firmware accesses the page table The first checked bit is typically PAGE PRESENT If this bit is zero, a page fault occurs which gives rise to a trap(with a given displacement within the trap/interrupt table) Hence the instruction that gave rise to the trap can get finallyre-executed Re-execution might give rise to additional traps,depending on firmware checks on the page table As an example, the attempt to access a read only page in writemode will give rise to a trap (which triggers thesegmentation fault handler)

Run time detection of current page size on IA-32 processorsand 2.4 Linux kernel#include kernel.h #define MASK 1 7unsigned long addr 3 30; // fixing a reference on the// kernel boundaryasmlinkage int sys page size(){//addr (unsigned long)sys page size; // moving the referencereturn(swapper pg dir[(int)((unsigned long)addr 22)]&MASK?4 20:4 10);}

Kernel page table initialization details As said, the kernel PDE is accessible at the virtual address keptby swapper pg dir (now init level4 pgt onx86-64/kernel 3 or init top pgt on x86-64/kernel4) The room for PTE tables gets reserved within the 8MB ofRAM that are accessible via the initial paging scheme Reserving takes place via the macroalloc bootmem low pages() which is defined ininclude/linux/bootmem.h (this macro returns avirtual address) Particularly, it returns the pointer to a 4KB (or 4KB x N)buffer which is page aligned This function belongs to the (already hinted) basic memorymanagement subsystem upon which the LINUX memorysystem boot lies on

Kernel 2.4/i386 initialization algorithm we start by the PGD entry which maps the address 3 GB, namelythe entry numbered 768 cyclically1. We determine the virtual address to be memory mapped (thisis kept within the vaddr variable)2. One page for the PTE table gets allocated which is used formapping 4 MB of virtual addresses3. The table entries are populated4. The virtual address to be mapped gets updated by adding 4MB5. We jump to step 1 unless no more virtual addresses or no morephysical memory needs to be dealt with (the ending conditionis recorded by the variable end)

Initialization function pagetable init()for (; i PTRS PER PGD; pgd , i ) {vaddr i*PGDIR SIZE; /* i is set to map from 3 GB */if (end && (vaddr end))break;pmd (pmd t *)pgd;/* pgd initialized to (swapper pg dir i) */ for (j 0; j PTRS PER PMD; pmd , j ) { pte base pte (pte t *) alloc bootmem low pages(PAGE SIZE);for (k 0; k PTRS PER PTE; pte , k ) {vaddr i*PGDIR SIZE j*PMD SIZE k*PAGE SIZE;if (end && (vaddr end)) break; *pte mk pte phys( pa(vaddr), PAGE KERNEL);}set pmd(pmd, pmd( KERNPG TABLE pa(pte base))); }}

Note!!! The final PDE buffer coincides with the initial pagetable that maps 4 MB pages 4KB paging gets activated upon filling the entry of thePDE table (since the Page Size bit gets updated) For this reason the PDE entry is set only after havingpopulated the corresponding PTE table to be pointed Otherwise memory mapping would be lost upon anyTLB miss

The set pmd macro#define set pmd(pmdptr, pmdval) (*(pmdptr) pmdval) Thia macro simply sets the value into one PMD entry Its input parameters are the pmdptr pointer to an entry of PMD (the type is pmd t) The value to be loaded pmdval (of type pmd t, defined viacasting) While setting up the kernel page table, this macro is used incombination with pa() (physical address) which returns anunsigned long The latter macro returns the physical address corresponding to agiven virtual address within kernel space (except for someparticular virtual address ranges, those non-directly mapped) Such a mapping deals with [3,4) GB virtual addressing onto [0,1) GBphysical addressing

The mk pte phys()macromk pte phys(physpage, pgprot) The input parameters are A frame physical address physpage, of typeunsigned long A bit string pgprot for a PTE, of type pgprot t The macro builds a complete PTE entry, which includes thephysical address of the target frame The result type is pte t The result value can be then assigned to one PTE entry

PAE (Physical address extension) increase of the bits used for physical addressing offered by more recent x86 processors (e.g. Intel Pentium Pro)which provide up to 36 bits for physical addressing we can drive up to 64 GB of RAM memory paging gets operated at 3 levels (instead of 2) the traditional page tables get modified by extending the entries at64-bits and reducing their number by a half (hence we can support¼ of the address space) an additional top level table gets included called “page directorypointer table” which entails 4 entries, pointed by CR3 CR4 indicates whether PAE mode is activated or not (which is donevia bit 5 – PAE-bit)

x86-64 architectures They extend the PAE scheme via a so called “long addressingmode” Theoretically they allow addressing 2 64 bytes of logical memory In actual implementations we reach up to 2 48 canonical formaddresses (lower/upper half within a total address space of 2 48) The total allows addressing to span over 256 TB Not all operating systems allow exploiting the whole range up to256 TB of logical/physical memory LINUX currently allows for 128 TB for logical addressing ofindividual processes and 64 TB for physical addressing

Addressing scheme64-bit48 out of 64-bit

textdataHeapStackDLLkernelNon-allowedlogical addresses(canonical 64-bitaddressing)

48-bit addressing: page tables Page directory pointer has been expanded from 4to 512 entries An additional paging level has been added thusreaching 4 levels, this is called “Page-Map level” Each Page-Map level table has 512 entries Hence we get 512 4 pages of size 4 KB that areaddressable (namely, a total of 256 TB)

also referred to as PGD(Page General Directory)

Direct vs non-direct page mapping In long mode x86 processors allow one entry of the PML4to be associated with 2 27 frames This amounts to 2 29 KB 2 9 GB 512 GB Clearly, we have plenty of room in virtual addressing fordirectly mapping all the available RAM into kernel pages onmost common chipsets This is the typical approach taken by Linux, where wedirectly map all the RAM memory However we also remap the same RAM memory in nondirect manner whenever required

Huge pages Ideally x86-64 processors support them starting from PDPT Linux typically offers the support for huge pages pointed toby the PDE (page size 512*4KB) See: /proc/meminfo and/proc/sys/vm/nr hugepages These can be “mmaped” via file descriptors and/or mmapparameters (e.g. MAP HUGETLB flag) They can also be requested via the madvise(void*,size t, int) system call (with MADV HUGEPAGEflag)

Back to speculation in the hardware From Meldown we already know that a page table entry plays acentral role in hardware side effects with speculative execution The page table entry provides the physical address of some“non-accessible” byte, which is still accessible in speculativemode This byte can flow into speculative incarnations of registers andcan be used for cache side effects . but, what about a page table entry with “presence bit”not set? . is there any speculative action that is still performed bythe hardware with the content of that page table entry?

The L1 Terminal Fault (L1TF) attack It is based on the exploitation of data cached into L1 More in detail: A page table entry with presence bit set to 0 propagates the value of thetarget physical memory location (the TAG) upon loads if that memorylocation is already cached into L1 If we use the value of that memory location as an index (Meltdown style)we can grub it via side effects on cache latency Overall, we can be able to indirectly read the content of anyphysical memory location if the same location has already beenread, e.g., in the context of another process on the same CPUcore Affected CPUs: AMD, Intel ATOM, Intel Xeon PHI

The schemeSpeculativelypropagate to CPUL1Tag presentPage tableVirtual address“invalid” physical address

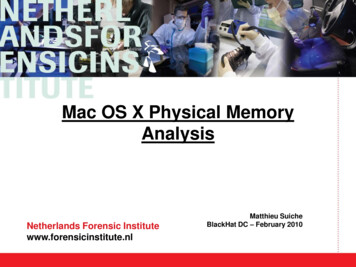

L1TF big issues To exploit L1TF we must drive page table entries A kernel typically does not allow it (in fact kernelmitigation of this attack simply relies on having “invalid”page table entries set to proper values not mappingcacheable data) But what about a guest kernel? It can attack physical memory of the underlying host So it can also attack memory of co-located guests/VMs It is as simple as hacking the guest level page tables, onwhich an attacker that drives the guest may have full control

Hardware supported “virtual memory”virtualization Intel Extended Page Tables (EPT) AMD Nested Page Tables (NPT) A scheme:Keeps track ofthe physicalmemory locationof the page framesused for activatedVM

Attacking the host physical memoryChange this towhateverphysical address andmake the entry invalid

Reaching vs allocating/deallocating memoryAllocation onlyLoad undersized page table(kernel page size not finalized)Finalize kernelhandled pagesize (4KB)Expand page table viaboot mem low pages(not marked in the page table)- compile time identificationKernel bootAllocation deallocationExpand/modify datastructures via pages(marked in the page table)- run time identification

Core map It is an array of mem map t (also known as struct page)structures defined in include/linux/mm.h The actual type definition is as follows (or similar along kerneladvancement):typedef struct page {struct list head list;struct address space *mapping;unsigned long index;struct page *next hash;/*/*/*/*- mapping has some page lists. */The inode (or .) we belong to. */Our offset within mapping. */Next page sharing our hash bucket inthe pagecache hash table. */atomic t count;/* Usage count, see below. */unsigned long flags;/* atomic flags, some possiblyupdated asynchronously */struct list head lru;/* Pageout list, eg. active list;protected by pagemap lru lock !! */struct page **pprev hash; /* Complement to *next hash. */struct buffer head * buffers;/* Buffer maps us to a disk block. */#if defined(CONFIG HIGHMEM) defined(WANT PAGE VIRTUAL)void *virtual;/* Kernel virtual address (NULL ifnot kmapped, ie. highmem) */#endif /* CONFIG HIGMEM WANT PAGE VIRTUAL */} mem map t;

Fields Most of the fields are used to keep track of the interactions betweenmemory management and other kernel sub-systems (such as I/O) Memory management proper fields are struct list head list (whose type is defined ininclude/linux/lvm.h), which is used to organize theframes into free lists atomic t count, which counts the virtual references mappedonto the frame (it is managed via atomic updates, such as withLOCK directives) unsigned long flags, this field keeps the status bits for theframe, such as:#define#define#define#define#define#definePG locked0PG referenced 2PG uptodate3PG dirty4PG lru6PG reserved 14

Core map initialization (i386/kernel 2.4 example) Initially we only have the core map pointer This is mem map and is declared in mm/memory.c Pointer initialization and corresponding memory allocationoccur within free area init() After initializing, each entry will keep the value 0 within thecount field and the value 1 into the PG reserved flagwithin the flags field Hence no virtual reference exists for that frame and the frame isreserved Frame un-reserving will take place later via the functionmem init() in arch/i386/mm/init.c (by

Kernel level memory management 1. The very base on boot vs memory management 2. Memory 'Nodes' (UMA vs NUMA) 3. x86 paging support 4. Boot and steady state behavior of the memory management system in the Linux kernel Advanced Operating Systems MS degree in Computer Engineering University of Rome Tor Vergata Lecturer: Francesco Quaglia