Transcription

Machine learning applications in biotechnology research
UC Davis Biotechnology Program 2012, Davis, CA
Tobias Kind, UC Davis Genome Center, FiehnLab - Metabolomics

Machine learning
- Machine learning is commonly used for prediction of future values
- Complex models (black box) do not always provide causal insight
- Predictive power is most important
[Picture: Artificial Intelligence. Picture source: Wikipedia]

Why Machine Learning?
- People cost money, are slow, and don't have time
- Let the machine (computer) do it
- Replace people with computers
[Picture captions: (1984); ?: 42; The Terminator (2029)]
Picture source: Wikipedia; Office; RottenTomatoes; Wikimedia-Commons

Machine Learning Personalities
- Epicurus (341 BC): Principle of multiple explanations: “All consistent models should be retained”.
- Ockham (1288): Occam's Razor: Of two equivalent theories or explanations, all other things being equal, the simpler one is to be preferred.
- Lenin*: Trust is good, control is better
- Ronald Reagan*: Trust, but verify
- Alan Turing (1912): Turing Test: Can machines think?
- Marvin Minsky (1927): Artificial intelligence, neural networks
(*) In principle
Sources: Wikipedia

Machine Learning Algorithms
Unsupervised learning:
- Clustering methods
Supervised learning:
- Support vector machines
- MARS (multivariate adaptive regression splines)
- Neural networks
- Naive Bayes classifier
- Random Forest, Boosting trees, Honest trees, Decision trees
- CART (Classification and regression trees)
- Genetic programming
Transduction:
- Bayesian Committee Machine
- Transductive Support Vector Machine
Thanks to WIKI and Stuart Gansky

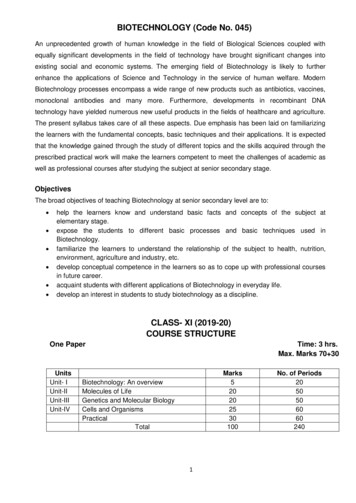

Concept of predictive data mining for classification
- Data Preparation: basic statistics, remove extreme outliers, transform or normalize datasets, mark sets with zero variances
- Feature Selection: predict important features with MARS, PLS, NN, SVM, GDA, GA; apply voting or meta-learning
- Model Training + Cross Validation: use only important features, apply bootstrapping if only few datasets; use GDA, CART, CHAID, MARS, NN, SVM, Naive Bayes, kNN for prediction
- Model Testing: calculate performance with percent disagreement and chi-square statistics
- Model Deployment: deploy model for unknown data; use PMML, VB, C++, JAVA
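
The data preparation step can be illustrated with a minimal Python sketch. It assumes a small numeric table with one row per sample; the function name `prepare` and the example values are hypothetical, and z-scoring is only one of several possible normalizations.

```python
import statistics

def prepare(rows):
    """Drop zero-variance columns, then z-score the remaining ones."""
    columns = list(zip(*rows))
    # Mark columns whose values never change (zero variance).
    kept = [i for i, col in enumerate(columns) if statistics.pvariance(col) > 0]
    scaled_columns = []
    for i in kept:
        mean = statistics.mean(columns[i])
        std = statistics.pstdev(columns[i])
        scaled_columns.append([(value - mean) / std for value in columns[i]])
    # Transpose back to one row per sample.
    scaled_rows = [list(row) for row in zip(*scaled_columns)]
    return kept, scaled_rows

# Hypothetical data: 3 samples x 3 features; the middle feature is constant.
raw = [[1.0, 5.0, 10.0], [2.0, 5.0, 20.0], [3.0, 5.0, 30.0]]
kept_columns, prepared = prepare(raw)
```

Outlier removal is left out here because the slide does not state a threshold.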

Automated machine learning workflows – tools of the trade
- Statistica Dataminer workflow
- WEKA KnowledgeFlow workflow
Statistica: academic license (www.onthehub.com/statsoft/); WEKA: open source (www.cs.waikato.ac.nz/ml/weka/)

Massive parallel computing
Free lunch is over – concurrency in machine learning
- Modern workstation (with 4-64 CPUs)
- GPU computing (with 1000 stream processors)
- Cloud computing (with 10,000 CPUs/GPUs): Google Prediction API, Amazon Elastic MapReduce
Picture source: ThinkMate Workstation; Wikipedia; Office; Google; Amazon

Common ML applications in biotechnology
Classification: genotype/wildtype, sick/healthy, cancer grading, toxicity prediction and evaluations (FDA, EPA)
[Figure: score plot (t1 vs. t2) separating SICK and Healthy samples]
Regression: predicting biological activities, toxicity evaluations, prediction of molecular properties of unknown substances (QSAR and QSPR)
[Figure: scatterplot of Var2 vs. Var1; fit Var2 = 3.3686 + 1.0028*x with 0.95 prediction interval]

Supervised learning with categorical data
[Table: samples (Sample12 to Sample16 shown) with a Category column (here: green) and columns Value x1, Value x2, Value x3]
Good solution: Category y = sin((40.579*Value x3 + cos(sin(4.2372*Value x1)) + 3.25702)/(1.43018 + sin(Value x2)))
Perfect solution: Category y = round(0.1219*Value x2)
y = function (x values), where y are discrete categories such as text
Multiple categories (here colors) are possible
Picture source: Nutonian Formulize

Figures of merit for classifications
A) Calculate prediction and true/false
[Table: samples with Category, Value x1, Value x2, ..., predicted category and TRUE/FALSE flag]
Example is a special case of binary classification; multiple categories are possible
Source: http://en.wikipedia.org/wiki/Sensitivity_and_specificity
B) Confusion matrix: true positives, true negatives, false positives, false negatives
C) Figures of merit
- True positive rate or sensitivity or recall = TP/(TP+FN)
- False positive rate = FP/(FP+TN)
- Accuracy = (TP+TN)/(TP+TN+FP+FN)
- Specificity = TN/(FP+TN)
- Precision = TP/(TP+FP)
- Negative predictive value = TN/(TN+FN)
- False discovery rate = FP/(FP+TP)
D) ROC curves
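
As a rough illustration, the figures of merit can be computed from the four confusion-matrix counts in a few lines of Python. This sketch uses the standard definitions (precision is TP/(TP+FP)); the function names and the green/red example labels are hypothetical and not taken from the slide's table.

```python
def confusion_counts(true_labels, predicted_labels, positive):
    """Count TP, TN, FP, FN for one category treated as 'positive'."""
    tp = tn = fp = fn = 0
    for truth, predicted in zip(true_labels, predicted_labels):
        if predicted == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        else:
            if truth == positive:
                fn += 1
            else:
                tn += 1
    return tp, tn, fp, fn

def figures_of_merit(tp, tn, fp, fn):
    """Standard binary-classification figures of merit."""
    return {
        "sensitivity": tp / (tp + fn),  # true positive rate, recall
        "false_positive_rate": fp / (fp + tn),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "specificity": tn / (fp + tn),
        "precision": tp / (tp + fp),
        "negative_predictive_value": tn / (tn + fn),
        "false_discovery_rate": fp / (fp + tp),
    }

# Hypothetical labels: 'green' is the positive category.
truth = ["green", "green", "green", "green", "red", "red", "red", "red"]
predicted = ["green", "green", "green", "red", "red", "red", "red", "green"]
counts = confusion_counts(truth, predicted, positive="green")
merit = figures_of_merit(*counts)
```

With several categories, the same counts can be taken once per category (one versus the rest).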

Supervised learning with continuous data
[Table: samples with continuous y values and columns Value x1, Value x2, ...]
Good solution: y = mod(Value x2*Value x2, 6.61)*tan(0.2688*sin(5.225*Value x2*Value x2))*max(Value x2 - 25.59/(Value x2*Value x2) + cos(42.32*Value x2), floor(mod(Value x2*Value x2, 6.61))) + round(4.918*Value x2) - 3.945 + 3.672*ceil(sin(5.225*Value x2*Value x2))
Reported for the solution: R^2 goodness of fit, correlation coefficient, maximum error, mean squared error, mean absolute error
y = function (x values), where y are continuous values such as numbers
Picture source: Nutonian Formulize

Figures of merit for regressions
- R^2 goodness of fit
- Correlation coefficient
- Maximum error
- Mean squared error
- Mean absolute error
Figures of merit are also calculated for external test and validation sets, such as the predictive squared correlation coefficient Q^2
Picture source: Nutonian Formulize
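
A minimal Python sketch of these figures of merit follows. The function names and example numbers are hypothetical. Q^2 has several definitions in the literature; the one used here (residual sum of squares on the external set, relative to the spread around the training-set mean) is an assumption, not necessarily the definition behind the slide.

```python
import math

def regression_figures_of_merit(observed, predicted):
    """R^2, correlation coefficient, maximum error, MSE and MAE."""
    n = len(observed)
    mean_obs = sum(observed) / n
    mean_pred = sum(predicted) / n
    residuals = [o - p for o, p in zip(observed, predicted)]
    ss_res = sum(r * r for r in residuals)
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    ss_pred = sum((p - mean_pred) ** 2 for p in predicted)
    covariance = sum((o - mean_obs) * (p - mean_pred)
                     for o, p in zip(observed, predicted))
    return {
        "r_squared": 1 - ss_res / ss_tot,
        "correlation": covariance / math.sqrt(ss_tot * ss_pred),
        "max_error": max(abs(r) for r in residuals),
        "mean_squared_error": ss_res / n,
        "mean_absolute_error": sum(abs(r) for r in residuals) / n,
    }

def q_squared(observed_ext, predicted_ext, training_mean):
    """Predictive squared correlation coefficient on an external set (assumed definition)."""
    ss_res = sum((o - p) ** 2 for o, p in zip(observed_ext, predicted_ext))
    ss_tot = sum((o - training_mean) ** 2 for o in observed_ext)
    return 1 - ss_res / ss_tot

# Hypothetical values for illustration only.
fit = regression_figures_of_merit([1.0, 2.0, 3.0, 4.0], [1.5, 2.0, 2.5, 4.0])
q2 = q_squared([2.0, 4.0], [2.5, 3.0], training_mean=2.5)
```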

Overfitting – trust but verify
[Figure: y (observed) vs. y (predicted) for the training set and the external validation set; old model applied to new data]
R^2 = 0.995, Q^2 = 0.7227
External validation failed
Prediction power is most important
Reference: The problem of overfitting; DM Hawkins; J. Chem. Inf. Comput. Sci.; 2004; 44(1) pp 1-12
[Picture: Mechanical Turk. Picture source: Wikipedia]

Overfitting – avoid the unexpected
[Pictures: Dogs; Cats; New kid on the block]
Picture source: Wikipedia; Icanhzcheesburger.com

Avoid overfitting
- Internal cross-validation (weak): n-fold CV
- 70/30 split development/test set (good): Training (70%), Test (30%)
- External validation set or blind hold-out (best): Training (70%), Test (30%), External validation set (30%)
- TOP: combine all three methods: Training (70%) with CV, Test (30%), External validation set (30%)
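
The combined scheme might be sketched in Python as follows. The slide does not say how the fractions are nested, so this sketch assumes the external validation set is held out first and the remaining samples are then split 70/30; the function names and the seed are hypothetical.

```python
import random

def three_way_split(samples, external_fraction=0.3, test_fraction=0.3, seed=0):
    """Randomly split samples into training, test and external validation sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    # Assumption: hold out the external (blind) set before anything else.
    n_external = round(len(shuffled) * external_fraction)
    external, development = shuffled[:n_external], shuffled[n_external:]
    # Split the remaining development data 70/30 into training and test.
    n_test = round(len(development) * test_fraction)
    test, training = development[:n_test], development[n_test:]
    return training, test, external

def n_fold_indices(n_samples, n_folds):
    """Yield (train_indices, validation_indices) for n-fold cross-validation."""
    folds = [list(range(i, n_samples, n_folds)) for i in range(n_folds)]
    for i, validation in enumerate(folds):
        train = [j for k, fold in enumerate(folds) if k != i for j in fold]
        yield train, validation

training, test, external = three_way_split(range(100))
cv_folds = list(n_fold_indices(len(training), n_folds=5))
```

The cross-validation folds are built only from the training portion, so the test and external sets stay untouched during model development.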

Sample selection for testing and validation
[Figure: training set vs. test/validation set; bad selection vs. good selection]
- Sample selection for the test and validation set split should be truly randomized
- The range of the y-coordinate (activity or response) should be completely covered
- Training and test set variables should not overlap
Picture source: Nutonian Formulize

Why do we need feature selection?
- Reduces computational complexity
- Curse of dimensionality is avoided
- Improves accuracy
- The selected features can provide insights about the nature of the problem*
[Figure: importance plot of the features for the dependent variable; axis: Importance (F-value)]
* Margin Based Feature Selection Theory and Algorithms; Amir Navot
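
One simple way to obtain an importance ranking like the one in the plot is a one-way ANOVA F-value per feature. The Python sketch below assumes this is what "Importance (F-value)" refers to; the feature names, class labels and values are hypothetical.

```python
def f_value(values, labels):
    """One-way ANOVA F statistic of one feature across the classes."""
    groups = {}
    for value, label in zip(values, labels):
        groups.setdefault(label, []).append(value)
    n_total, n_groups = len(values), len(groups)
    grand_mean = sum(values) / n_total
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2
                     for g in groups.values())
    ss_within = sum((x - sum(g) / len(g)) ** 2
                    for g in groups.values() for x in g)
    if ss_within == 0:
        return float("inf")  # classes are perfectly separated by this feature
    return (ss_between / (n_groups - 1)) / (ss_within / (n_total - n_groups))

def rank_features(features, labels):
    """Return (name, F-value) pairs, most important feature first."""
    scores = {name: f_value(values, labels) for name, values in features.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical data: f1 separates the classes, f2 does not.
labels = ["sick", "sick", "sick", "healthy", "healthy", "healthy"]
features = {
    "f1": [1.0, 1.2, 0.8, 3.0, 3.2, 2.8],
    "f2": [1.0, 2.0, 3.0, 1.1, 2.1, 2.9],
}
ranking = rank_features(features, labels)
```

Features with a low F-value would be candidates for removal before model training.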

Feature selection example
Principal component analysis (PCA) of microarray data
[Figure: two score scatterplots (t1 vs. t2) of ALL (B-cell, T-cell) and AML samples]
- NO feature selection: no separation
- With feature selection: separation
Data: Golub, 1999, Science Mag

Approach: Automated substructure detection
Aim 1: take an unknown mass spectrum and predict all substructures
Aim 2: classification into common compound classes (sugar, amino acid, sterol)
[Figure: mass spectrum (m/z 100-290) annotated with substructures s1 to s5]
Pioneers:
- Dendral project at Stanford University in the 1970s
- Varmuza at University of Vienna
- Steve Stein at NIST
- MOLGEN-MS team at University Bayreuth

Principle of mass spectral features
Mass spectral features:
- m/z value
- m/z intensity
- delta (m/z)
- delta (m/z) x intensity
- non linear functions
- intensity series
[Figure: mass spectrum (m/z 100-290) with features f1, f2, f3 marked]
MS feature matrix:
MS Spectrum   f1    f2   f3   f4 f5 fn
MS1           100   20   50   600 0
MS2           100   20   50   600 20
MS3           100   20   60   500 0
MS4           0     40   20   500 40
MS5           0     40   20   500 40
Substructure matrix: substructures s1 to s5
[Figure: structure drawings of substructures s1 to s5]
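
How a spectrum might be turned into one row of such a feature matrix can be sketched in Python. Everything here is an illustrative assumption: the peak list, the chosen m/z values and deltas, and the rule that a delta feature takes the smaller intensity of the two peaks separated by that mass difference. The slide does not specify how its features were actually computed.

```python
def spectral_features(peaks, mz_values, deltas):
    """Build a feature dict from a spectrum given as {m/z: relative intensity}."""
    features = {}
    # Intensity features: intensity at selected m/z values (0 if no peak).
    for mz in mz_values:
        features[f"int_{mz}"] = peaks.get(mz, 0)
    # Delta features: evidence for a mass difference between two peaks.
    for delta in deltas:
        best = 0
        for mz, intensity in peaks.items():
            partner = peaks.get(mz + delta)
            if partner is not None:
                best = max(best, min(intensity, partner))
        features[f"delta_{delta}"] = best
    return features

# Hypothetical spectrum with four peaks.
spectrum = {192: 100, 222: 20, 266: 50, 281: 10}
row = spectral_features(spectrum, mz_values=[192, 222, 250], deltas=[15, 30, 74])
```

Applying the same function to every spectrum gives rows with identical columns, which is the shape a classifier needs as input.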

Application - Substructure detection and prediction
Generalized Linear Models (GLM):
- General Discriminant Analysis
- Binary logit (logistic) regression
- Binary probit regression
Nonlinear models:
- Multivariate adaptive regression splines (MARS)
Tree models:
- Standard Classification Trees (CART)
- Standard General Chi-square Automatic Interaction Detector (CHAID)
- Exhaustive CHAID
- Boosting classification trees
- M5 regression trees
Neural Networks:
- Multilayer Perceptron neural network (MLP)
- Radial Basis Function neural network (RBF)
Machine Learning:
- Support Vector Machines (SVM)
- Naive Bayes classifier
- k-Nearest Neighbors (KNN)
Meta Learning
Presented at ASMS Conference 2007, Indianapolis

Strategy - let all machine learning algorithms compete
[Figure: comparison of the algorithms; lower is better]
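
The competition idea can be sketched in Python with three toy learners scored on the same held-out data. This is only an illustration under assumptions: it uses a one-dimensional regression problem rather than substructure classification, mean squared error as the "lower is better" score, and hypothetical function names and data.

```python
def fit_mean(xs, ys):
    """Baseline: always predict the training mean."""
    mean = sum(ys) / len(ys)
    return lambda x: mean

def fit_line(xs, ys):
    """Ordinary least-squares straight line."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def fit_nearest_neighbor(xs, ys):
    """1-nearest-neighbor: return the y of the closest training x."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda pair: abs(pair[0] - x))[1]

def compete(learners, train, test):
    """Train every learner, score on the test set, return best (lowest MSE) first."""
    test_x, test_y = test
    scores = {}
    for name, fit in learners.items():
        model = fit(*train)
        errors = [(model(x) - y) ** 2 for x, y in zip(test_x, test_y)]
        scores[name] = sum(errors) / len(errors)
    return sorted(scores.items(), key=lambda item: item[1])

# Hypothetical data following roughly y = 2x.
train = ([0, 1, 2, 3, 4], [0.1, 2.0, 3.9, 6.1, 8.0])
test = ([0.5, 1.5, 2.5, 3.5], [1.0, 3.0, 5.0, 7.0])
learners = {"mean": fit_mean, "linear": fit_line, "nearest": fit_nearest_neighbor}
leaderboard = compete(learners, train, test)
```

Scoring all learners on data they were not trained on keeps the comparison in line with the validation advice elsewhere in the talk.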

Application: Retention time prediction for liquid chromatography
[Figure: retention time [min] vs. logP (lipophilicity), with markers at logP 2, logP 4 and logP 8]
Calibration using the logP concept for reversed phase liquid chromatography data
- Very simplistic and coarse filter, for RP only
- Problematic with multi ionizable compounds
- logD (includes pKa) is better than logP
- Possible use as time segment filter
[Figure: % species vs. pH for Deoxyguanosine]

Application: Retention time prediction for liquid chromatography
[Figure: predicted RT [min] vs. experimental RT [min]; fit y = 1.0191x + 0.5298, R^2 = 0.8744; labeled compounds include Riboflavin]
- Based on logD, pKa, logP and Kier & Hall atomic descriptors; 90 compounds (ndev = 48, ntest = 32); Std error 3.7 min
- Good models need a development set with n > 500 that is highly diverse
- Prediction power is most important
QSRR Model: Tobias Kind (FiehnLab) using ChemAxon Marvin and WEKA
Data Source: Lu W, Kimball E, Rabinowitz JD. J Am Soc Mass Spectrom. 2006 Jan;17(1):37-50; LC method using 90 nitrogen metabolites on RP-18

Application: Decision tree supported substructure prediction of metabolites from GC-MS profiles
[Figure: Spectrum, Decision tree, Compound structure]
Source: Hummel J, Strehmel N, Selbig J, Walther D, Kopka J. Decision tree supported substructure prediction of metabolites from GC-MS profiles. Metabolomics. 2010 Jun;6(2):322-333. Epub 2010 Feb 16.

Toxicity and carcinogenicity predictions with ToxTree
Source: Patlewicz G, Jeliazkova N, Safford RJ, Worth AP, Aleksiev B. (2008) An evaluation of the implementation of the Cramer classification scheme in the Toxtree software. SAR QSAR Environ Res.; http://toxtree.sourceforge.net

Conclusions – Machine Learning
- Classification (categorical data) and regression (continuous data) for prediction of future values
- Let algorithms compete for the best solution (voting, boosting, bagging)
- Validation (trust but verify) is the cornerstone of machine learning to avoid false results and wishful thinking
- Modern algorithms do not necessarily provide direct causal insight; they rather provide the best statistical solution or equation
- Domain knowledge of the learning problem is important and helpful for artifact removal and final interpretation
- Prediction power is most important

Thank you!
