Transcription

How Apache Spark fits into the Big Data landscape

Licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License

What is Spark?

What is Spark?

Developed in 2009 at UC Berkeley AMPLab, then open sourced in 2010, Spark has since become one of the largest OSS communities in big data, with over 200 contributors in 50 organizations

“Organizations that are looking at big data challenges – including collection, ETL, storage, exploration and analytics – should consider Spark for its in-memory performance and the breadth of its model. It supports advanced analytics solutions on Hadoop clusters, including the iterative model required for machine learning and graph analysis.”
Gartner, Advanced Analytics and Data Science (2014)

spark.apache.org

What is Spark?

Spark Core is the general execution engine for the Spark platform that other functionality is built atop:

- in-memory computing capabilities deliver speed
- ease of development: native APIs in Java, Scala, Python (plus SQL, Clojure, R)
- general execution model supports wide variety of use cases

What is Spark?

WordCount in 3 lines of Spark
WordCount in 50 lines of Java MR
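To give a feel for the three-line version, here is a minimal sketch of WordCount written against plain Scala collections, so it runs without a Spark dependency. The object name `WordCountSketch`, the whitespace tokenizer, and the sample input are assumptions for illustration; in Spark the same shape would typically be expressed with RDD operations such as `flatMap`, `map`, and `reduceByKey`.

```scala
object WordCountSketch {
  // Split each line into words, then count occurrences of each word.
  // Plain Scala collections stand in for RDDs here (an assumption, not Spark itself).
  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split("\\s+"))   // one element per token
      .filter(_.nonEmpty)         // drop empty tokens caused by leading whitespace
      .groupBy(identity)          // word -> all of its occurrences
      .map { case (word, occurrences) => (word, occurrences.size) }
}
```

For example, `WordCountSketch.wordCount(Seq("to be or", "not to be"))` counts "to" and "be" twice each, and "or" and "not" once each.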

What is Spark?

Sustained exponential growth, as one of the most active Apache projects

ohloh.net/orgs/apache

A Brief History

A Brief History: Functional Programming for Big Data

Theory, Eight Decades Ago: what can be computed?
Alonzo Church (wikipedia.org), Haskell Curry (haskell.org)

Praxis, Four Decades Ago: algebra for applicative systems
John Backus (acm.org), David Turner (wikipedia.org)

Reality, Two Decades Ago: machine data from web apps
Pattie Maes (MIT Media Lab)

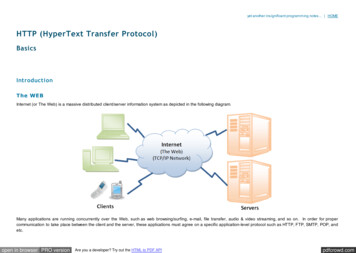

A Brief History: Functional Programming for Big Data

circa late 1990s: explosive growth of e-commerce and machine data implied that workloads could not fit on a single computer anymore

notable firms led the shift to horizontal scale-out on clusters of commodity hardware, especially for machine learning use cases at scale

[Diagram: Customers, Web Apps, Middleware, RDBMS (customer transactions, SQL query and result sets), Logs (event history), ETL, DW, aggregation, Algorithmic Modeling (recommenders, classifiers)]

Amazon
“Early Amazon: Splitting the website” – Greg itting-website.html

eBay
“The eBay Architecture” – Randy Shoup, Dan Pritchett
addsimplicity.com/adding simplicity an engi/2006/11/you scaled /eBaySDForum2006-11-29.pdf

Inktomi (YHOO Search)
“Inktomi’s Wild Ride” – Eric Brewer (0:05:31 ff)
youtu.be/E91oEn1bnXM

Google
“Underneath the Covers at Google” – Jeff Dean (0:06:54 008/06/11/JeffDeanOnGoogleInfrastructure.aspx

MIT Media Lab
“Social Information Filtering for Music Recommendation” – Pattie om/speakers/pattie maes.html

A Brief History: Functional Programming for Big Data

circa 2002: mitigate risk of large distributed workloads lost due to disk failures on commodity hardware

Google File System
Sanjay Ghemawat, Howard Gobioff, Shun-Tak e: Simplified Data Processing on Large Clusters
Jeffrey Dean, Sanjay Ghemawat
research.google.com/archive/mapreduce.html

A Brief History: Functional Programming for Big Data

[Timeline, 2002 to 2014]
2002: MapReduce @ Google
2004: MapReduce paper
2006: Hadoop @ Yahoo!
2008: Hadoop Summit
2010: Spark paper
2014: Apache Spark top-level

A Brief History: Functional Programming for Big Data

[Diagram: MapReduce, Pregel, Dremel, Drill, F1, MillWheel; General Batch d Systems: iterative, interactive, streaming, graph, etc.]

MR doesn’t compose well for large applications, and so specialized systems emerged as workarounds

A Brief History: Functional Programming for Big Data

circa 2010: a unified engine for enterprise data workflows, based on commodity hardware a decade later

Spark: Cluster Computing with Working Sets
Matei Zaharia, Mosharaf Chowdhury, Michael Franklin, Scott Shenker, Ion oud spark.pdf

Resilient Distributed Datasets: A Fault-Tolerant Abstraction for In-Memory Cluster Computing
Matei Zaharia, Mosharaf Chowdhury, Tathagata Das, Ankur Dave, Justin Ma, Murphy McCauley, Michael Franklin, Scott Shenker, Ion di12-final138.pdf

A Brief History: Functional Programming for Big Data

In addition to simple map and reduce operations, Spark supports SQL queries, streaming data, and complex analytics such as machine learning and graph algorithms out-of-the-box.

Better yet, combine these capabilities seamlessly into one integrated workflow

TL;DR: Generational trade-offs for handling Big Compute

Photo from John Wilkes’ keynote talk @ #MesosCon 2014

TL;DR: Generational trade-offs for handling Big ate(DFS)

Cheap Network: reference (URI)

TL;DR: Applicative Systems and Functional Programming – RDDs

[Diagram: transformations lead from RDD to RDD to RDD to RDD, then an action produces a value]

    // transformed RDDs
    val errors = lines.filter(_.startsWith("ERROR"))
    val messages = errors.map(_.split("\t")).map(r => r(1))
    messages.cache()

    // action 1
    messages.filter(_.contains("mysql")).count()
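The split between transformations and actions can be illustrated without a cluster. The sketch below uses a lazy Scala collection view as a stand-in for an RDD (an assumption for illustration, not Spark's implementation): the filter and map steps only describe work, and nothing is evaluated until the final count. The object name `LazyLineageSketch` and the call counter are hypothetical.

```scala
object LazyLineageSketch {
  // Returns (filter calls before the action, filter calls after the action, action result).
  def run(lines: Seq[String]): (Int, Int, Int) = {
    var filterCalls = 0

    // "Transformations": a view only records what to do; nothing is evaluated yet.
    val errors = lines.view.filter { line =>
      filterCalls += 1
      line.startsWith("ERROR")
    }
    val messages = errors.map(_.split("\t")).map(fields => fields(1))

    val callsBeforeAction = filterCalls

    // "Action": counting forces the whole chain to be evaluated.
    val mysqlCount = messages.count(_.contains("mysql"))

    (callsBeforeAction, filterCalls, mysqlCount)
  }
}
```

With three tab-separated log lines as input, the filter has run zero times before the count and three times after it, which is the behavior the transformation/action distinction describes.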

TL;DR: Big Compute in Applicative Systems, by the numbers

1. Express business logic in a preferred native language (Scala, Java, Python, Clojure, SQL, R, etc.) leveraging FP/closures
2. Build a graph of what must be computed
3. Rewrite graph into stages using graph reduction to determine how to move/combine predicates, where synchronization barriers are required, what can be computed in parallel, etc. (Wadsworth, Henderson, Turner, et al.)
4. Handle synchronization using Akka and reactive programming, with an LRU to manage in-memory working sets (RDDs)
5. Profit
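Step 4 mentions an LRU for managing in-memory working sets. As a rough illustration of that eviction policy only, here is a minimal LRU cache sketch built on `java.util.LinkedHashMap` in access order. The class name `LruCache` and the entry-count capacity are assumptions; Spark's actual memory management is more involved than this.

```scala
import java.util.{LinkedHashMap => JLinkedHashMap, Map => JMap}

// Minimal LRU cache sketch; `capacity` counts entries, not bytes (an assumption).
final class LruCache[K, V](capacity: Int) {
  // accessOrder = true keeps iteration order from least to most recently used.
  private val entries = new JLinkedHashMap[K, V](16, 0.75f, true) {
    override protected def removeEldestEntry(eldest: JMap.Entry[K, V]): Boolean =
      size() > capacity
  }

  // Insert or update an entry; the least recently used entry is evicted when over capacity.
  def put(key: K, value: V): Unit = { entries.put(key, value); () }

  // Look up an entry; a hit marks the key as most recently used.
  def get(key: K): Option[V] =
    if (entries.containsKey(key)) Some(entries.get(key)) else None
}
```

With a capacity of two, inserting "a" and "b", reading "a", and then inserting "c" evicts "b", because "b" is then the least recently used entry.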

TL;DR: Big Compute Implications

Of course, if you can define the structure of workloads in terms of abstract algebra, this all becomes much more interesting – having vast implications on machine learning at scale, IoT, industrial applications, optimization in general, etc., as we retool the industrial plant

However, we’ll leave that for another talk
http://justenoughmath.com/

Spark Deconstructed

Spark Deconstructed: Log Mining Example

    // load error messages from a log into memory
    // then interactively search for various patterns
    // 2

    // base RDD
    val lines = sc.textFile("hdfs://.")

    // transformed RDDs
    val errors = lines.filter(_.startsWith("ERROR"))
    val messages = errors.map(_.split("\t")).map(r => r(1))
    messages.cache()

    // action 1
    messages.filter(_.contains("mysql")).count()

    // action 2
    messages.filter(_.contains("php")).count()

Spark Deconstructed: Log Mining Example

We start with Spark running on a cluster, submitting code to be evaluated on it:

[Diagram: a Driver connected to three Workers]

Spark Deconstructed: Log Mining Example

[Same code listing, with action 2 marked: discussing the other part]

Spark Deconstructed: Log Mining Example

At this point, take a look at the transformed RDD operator graph:

    scala> messages.toDebugString
    res5: String =
    MappedRDD[4] at map at <console>:16 (3 partitions)
    MappedRDD[3] at map at <console>:16 (3 partitions)
    FilteredRDD[2] at filter at <console>:14 (3 partitions)
    MappedRDD[1] at textFile at <console>:12 (3 partitions)
    HadoopRDD[0] at textFile at <console>:12 (3 partitions)

Spark Deconstructed: Log Mining Example

[Diagram sequence, shown beside the same code listing:]

- Driver and three Workers
- each Worker holds one block: block 1, block 2, block 3
- action 1: each Worker reads its HDFS block
- each Worker processes and caches data: cache 1, cache 2, cache 3
- action 2: each Worker processes from cache
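The benefit of caching between action 1 and action 2 can be sketched in plain Scala. In the sketch below a lazy view plays the role of an uncached RDD and `toVector` plays the role of the cache. Both are stand-ins chosen for illustration, and unlike Spark's `cache()`, which fills the cache on the first action, `toVector` materializes immediately. The object name `CacheSketch` and the parse counter are hypothetical.

```scala
object CacheSketch {
  // Returns (parse calls without caching, parse calls with caching) across two "actions".
  def parseCalls(lines: Seq[String]): (Int, Int) = {
    var calls = 0
    def parse(line: String): String = { calls += 1; line.split("\t")(1) }

    // Without caching: each action re-runs the lazy pipeline from the source.
    val uncached = lines.view.filter(_.startsWith("ERROR")).map(parse)
    uncached.count(_.contains("mysql"))
    uncached.count(_.contains("php"))
    val withoutCache = calls

    // With caching: materialize once, then both actions reuse the stored result.
    calls = 0
    val cached = lines.view.filter(_.startsWith("ERROR")).map(parse).toVector
    cached.count(_.contains("mysql"))
    cached.count(_.contains("php"))
    val withCache = calls

    (withoutCache, withCache)
  }
}
```

For an input with two ERROR lines, the uncached pipeline parses four times across the two actions, while the cached one parses only twice.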

Spark Deconstructed:

Looking at the RDD transformations and actions from another perspective

[Same code listing as the Log Mining Example, annotated with a diagram: transformations lead from RDD to RDD to RDD to RDD, then an action produces a value]

Spark Deconstructed:

[Diagram: RDD]

    // base RDD
    val lines = sc.textFile("hdfs://.")

Spark Deconstructed:

[Diagram: transformations, RDD to RDD to RDD to RDD]

    // transformed RDDs
    val errors = lines.filter(_.startsWith("ERROR"))
    val messages = errors.map(_.split("\t")).map(r => r(1))
    messages.cache()

Spark Deconstructed:

[Diagram: transformations, RDD to RDD to RDD to RDD, then action, then value]

    // action 1
    messages.filter(_.contains("mysql")).count()

Unifying the Pieces

Unifying the Pieces: Spark SQL

    // g-guide.html

    val sqlContext = new org.apache.spark.sql.SQLContext(sc)
    import sqlContext._

    // define the schema using a case class
    case class Person(name: String, age: Int)

    // create an RDD of Person objects and register it as a table
    val people t").map(_.split(",")).map(p => Person(p(0), )

    // SQL statements can be run using the SQL methods provided by sqlContext
    val teenagers = sql("SELECT name FROM people WHERE age >= 13 AND age <= 19")

    // results of SQL queries are SchemaRDDs and support all the
    // normal RDD operations
    // columns of a row in the result can be accessed by ordinal
    teenagers.map(t => "Name: " + t(0)).collect().foreach(println)

Unifying the Pieces: Spark Streaming

    // ramming-guide.html

    import org.apache.spark.streaming._
    import org.apache.spark.streaming.StreamingContext._

    // create a StreamingContext with a SparkConf configuration
    val ssc = new StreamingContext(sparkConf, Seconds(10))

    // create a DStream that will connect to serverIP:serverPort
    val lines = ssc.socketTextStream(serverIP, serverPort)

    // split each line into words
    val words = lines.flatMap(_.split(" "))

    // count each word in each batch
    val pairs = words.map(word => (word, 1))
    val wordCounts = pairs.reduceByKey(_ + _)

    // print a few of the counts to the rmination()
    // start the computation
    // wait for the computation to terminate

Unifying the Pieces: MLlib

    // ml

    val train_data = // RDD of Vector
    val model = KMeans.train(train_data, k=10)

    // evaluate the model
    val test_data = // RDD of Vector
    test_data.map(t => model.predict(t)).collect().foreach(println)

MLI: An API for Distributed Machine Learning
Evan Sparks, Ameet Talwalkar, et al.
International Conference on Data Mining (2013)
http://arxiv.org/abs/1310.5426

Unifying the Pieces: GraphX

    // ming-guide.html

    import org.apache.spark.graphx._
    import org.apache.spark.rdd.RDD

    case class Peep(name: String, age: Int)

    val vertexArray = Array(
      (1L, Peep("Kim", 23)), (2L, Peep("Pat", 31)),
      (3L, Peep("Chris", 52)), (4L, Peep("Kelly", 39)),
      (5L, Peep("Leslie", 45))
    )
    val edgeArray = Array(
      Edge(2L, 1L, 7), Edge(2L, 4L, 2),
      Edge(3L, 2L, 4), Edge(3L, 5L, 3),
      Edge(4L, 1L, 1), Edge(5L, 3L, 9)
    )

    val vertexRDD: RDD[(Long, Peep)] = sc.parallelize(vertexArray)
    val edgeRDD: RDD[Edge[Int]] = sc.parallelize(edgeArray)
    val g: Graph[Peep, Int] = Graph(vertexRDD, edgeRDD)

    val results = g.triplets.filter(t => t.attr > 7)

    for (triplet <- results.collect) {
      println(s"${triplet.srcAttr.name} loves ${triplet.dstAttr.name}")
    }
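To make the idea of a triplet concrete, the sketch below joins each edge with the attributes of its two endpoints using plain Scala collections. The `Edge` and `Triplet` case classes here are local stand-ins defined only for this illustration and are not the GraphX types; the `> 7` threshold in the usage note is likewise an assumption.

```scala
object TripletSketch {
  final case class Peep(name: String, age: Int)
  final case class Edge(srcId: Long, dstId: Long, attr: Int)
  final case class Triplet(srcAttr: Peep, dstAttr: Peep, attr: Int)

  // Join every edge with the attributes of its source and destination vertices.
  // Edges that reference an unknown vertex id are dropped.
  def triplets(vertices: Map[Long, Peep], edges: Seq[Edge]): Seq[Triplet] =
    for {
      e   <- edges
      src <- vertices.get(e.srcId).toSeq
      dst <- vertices.get(e.dstId).toSeq
    } yield Triplet(src, dst, e.attr)
}
```

Applied to the five vertices and six edges from the slide, `triplets(...).filter(_.attr > 7)` keeps only the edge with weight 9, which links Leslie to Chris.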

Unifying the Pieces: Summary

Demo, if time permits (perhaps in the hallway):
Twitter Streaming Language reference-applications/twitter classifier/README.html

For many more Spark resources online, check:
databricks.com/spark-training-resources

TL;DR: Engineering is about costs

Sure, maybe you’ll squeeze slightly better performance by using many specialized systems

However, putting on an Eng Director hat, would you also be prepared to pay the corresponding costs of:

- learning curves for your developers across several different frameworks
- ops for several different kinds of clusters
- tech-debt for OSS that ignores the math (80 yrs!) plus the fundamental h/w trade-offs
- maintenance and troubleshooting of mission-critical apps across several systems

Integrations

Spark Integrations:

Use Lots of Different Data Sources
Clean Up Your rate With Many Other Systems

- cloud-based notebooks
- ETL
- the Hadoop ecosystem
- widespread use of PyData
- advanced analytics in streaming
- rich custom search
- web apps for data APIs
- low-latency multi-tenancy

Spark Integrations: Unified platform for building Big Data pipelines

Databricks udmaking-big-data-easy.html
youtube.com/watch?v=dJQ5lV5Tldw#t=883

Spark Integrations: The proverbial Hadoop ecosystem

Spark Hadoop HBase ark-stack.html
[Diagram: unified compute, hadoop ecosystem]

Spark Integrations: Leverage widespread use of Python

Spark display/SPARK/PySpark Internals
[Diagram: unified compute, Py Data]

Spark Integrations: Advanced analytics for streaming use cases

Kafka Spark Cassandra
datastax.com/documentation/datastax enterprise/4.5/datastax on.com/?p thub.com/dibbhatt/kafka-spark-consumer
[Diagram: unified compute, data streams, columnar key-value]

Spark Integrations: Rich search, immediate insights

Spark ing-using-elastic-search-and-spark
[Diagram: unified compute, document search]

Spark Integrations: Building data APIs with web apps

Spark ereactive-platform-a-match-made-in-heaven
[Diagram: unified compute, web apps]

Spark Integrations: The case for multi-tenancy

Spark .html
Mesosphere Google Cloud os-on-google-cloud.html
[Diagram: unified compute, cluster resources]

Advanced Topics

Advanced Topics:

Other BDAS projects running atop Spark for graphs, sampling, and memory sharing:

- BlinkDB
- Tachyon

Advanced Topics: BlinkDB

BlinkDB
blinkdb.org/

massively parallel, approximate query engine for running interactive SQL queries on large volumes of data

- allows users to trade-off query accuracy for response time
- enables interactive queries over massive data by running queries on data samples
- presents results annotated with meaningful error bars
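The statistical idea behind answering from a sample with error bars can be sketched in a few lines. This is not BlinkDB's method, only a simple illustration: it estimates a mean from a uniform random sample and reports a normal-approximation 95% margin of error. The names `SampleQuerySketch`, `Estimate`, and `approxMean`, and the explicit `seed` parameter, are assumptions.

```scala
import scala.util.Random

object SampleQuerySketch {
  final case class Estimate(mean: Double, marginOfError: Double)

  // Estimate the mean of `data` from a uniform random sample of `sampleSize` rows.
  // The margin is 1.96 standard errors, i.e. a normal-approximation 95% interval.
  def approxMean(data: IndexedSeq[Double], sampleSize: Int, seed: Long): Estimate = {
    require(sampleSize >= 2 && sampleSize <= data.size, "need 2 <= sampleSize <= data.size")
    val rng = new Random(seed)
    val sample = rng.shuffle(data).take(sampleSize)
    val mean = sample.sum / sampleSize
    // Unbiased sample variance (divides by n - 1).
    val variance = sample.map(x => (x - mean) * (x - mean)).sum / (sampleSize - 1)
    Estimate(mean, 1.96 * math.sqrt(variance / sampleSize))
  }
}
```

A larger `sampleSize` shrinks the margin of error at the cost of touching more data, which is the accuracy versus response time trade-off described above. Shuffling the full dataset is done here only for simplicity; a real system would avoid scanning everything.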

Advanced Topics: BlinkDB

“Our experiments on a 100 node cluster show that BlinkDB can answer queries on up to 17 TBs of data in less than 2 seconds (over 200 x faster than Hive), within an error of 2-10%.”

BlinkDB: Queries with Bounded Errors and Bounded Response Times on Very Large Data
Sameer Agarwal, Barzan Mozafari, Aurojit Panda, Henry Milner, Samuel Madden, Ion Stoica
EuroSys (2013)
dl.acm.org/citation.cfm?id=2465355

Advanced Topics: BlinkDB

Deep Dive into BlinkDB
Sameer Agarwal
youtu.be/WoTTbdk0kCA

Introduction to using BlinkDB
Sameer Agarwal
youtu.be/Pc8 EM9PKqY

Advanced Topics: Tachyon

Tachyon
tachyon-project.org/

- fault tolerant distributed file system enabling reliable file sharing at memory-speed across cluster frameworks
- achieves high performance by leveraging lineage information and using memory aggressively
- caches working set files in memory thereby avoiding going to disk to load datasets that are frequently read
- enables different jobs/queries and frameworks to access cached files at memory speed

Advanced Topics: Tachyon

More tachyon/

Advanced Topics: Tachyon

Introduction to Tachyon
Haoyuan Li
youtu.be/4lMAsd2LNEE

Case Studies

Summary: Case Studies

Spark at Twitter: Evaluation & Lessons Learnt
Sriram tupspark-at-twitter

- Spark can be more interactive, efficient than MR
- Why is Spark faster than Hadoop MapReduce?
- Support for iterative algorithms and caching
- More generic than traditional MapReduce
- Fewer I/O synchronization barriers
- Less expensive shuffle
- More complex the DAG, greater the performance improvement

Summary: Case Studies

Using Spark to Ignite Data oignite-data-analytics/

Summary: Case Studies

Hadoop and Spark Join Forces in Yahoo
Andy n-forces-at-yahoo/

Summary: Case Studies

Collaborative Filtering with Spark
Chris filtering-with-spark

- collab filter (ALS) for music recommendation
- Hadoop suffers from I/O overhead
- show a progression of code rewrites, converting a Hadoop-based app into efficient use of Spark

Summary: Case Studies

Why Spark is the Next Top (Compute) Model
Dean p-compute-model

- Hadoop: most algorithms are much harder to implement in this restrictive map-then-reduce model
- Spark: fine-grained “combinators” for composing algorithms
- slide #67, any questions?

Summary: Case Studies

Open Sourcing Our Spark Job Server
Evan park-job-server
github.com/ooyala/spark-jobserver

- REST server for submitting, running, managing Spark jobs and contexts
- company vision for Spark is as a multi-team big data service
- shares Spark RDDs in one SparkContext among multiple jobs

Summary: Case Studies

Beyond Word Count: Productionalizing Spark Streaming
Ryan /

- overcoming 3 major challenges encountered while developing production streaming jobs
- write streaming applications the same way you write batch jobs, reusing code
- stateful, exactly-once semantics out of the box
- integration of Algebird

Summary: Case Studies

Installing the Cassandra / Spark OSS Stack
Al ssandra-spark-stack.html

- install config for Cassandra and Spark together
- spark-cassandra-connector integration
- examples show a Spark shell that can access tables in Cassandra as RDDs with types premapped and ready to go

Summary: Case Studies

One platform for all: real-time, near-real-time, and offline video analytics on Spark
Davis Shepherd, Xi spark

Resources

certification:

Apache Spark developer certificate program
http://oreilly.com/go/sparkcert

- defined by Spark experts @Databricks
- assessed by O’Reilly Media
- preview @Strata NY

community:

spark.apache.org/community.html
video slide archives: spark-summit.org
local events: Spark Meetups Worldwide
resources: databricks.com/spark-training-resources
workshops: databricks.com/spark-training

- Intro to Spark
- Spark DataSci
- Spark AppDev
- Spark DevOps
- Distributed ML on Spark
- Streaming Apps on Spark
- Spark Cassandra

books:

Learning Spark
Holden Karau, Andy Konwinski, Matei Zaharia
O’Reilly (2015*)

Fast Data Processing with Spark
Holden Karau
Packt p.oreilly.com/product/0636920028512.do

Spark in Action
Chris Fregly
Manning (2015*)
sparkinaction.com/

events:

- Strata NY Hadoop World: NYC, Oct 15-17, strataconf.com/stratany2014
- Big Data TechCon: SF, Oct 27, bigdatatechcon.com
- Strata EU: Barcelona, Nov 19-21, strataconf.com/strataeu2014
- Data Day Texas: Austin, Jan 10, datadaytexas.com
- Strata CA: San Jose, Feb 18-20, strataconf.com/strata2015
- Spark Summit East: NYC, Mar 18-19, spark-summit.org/east
- Spark Summit 2015: SF, Jun 15-17, spark-summit.org

presenter:

monthly newsletter for updates, events, conf summaries, etc.:
liber118.com/pxn/

Just Enough Math
O’Reilly, 2014
justenoughmath.com
preview: youtu.be/TQ58cWgdCpA

Enterprise Data Workflows with Cascading
O’Reilly, 2013
shop.oreilly.com/product/0636920028536.do
