Transcription

Braindrop: A Mixed-SignalNeuromorphic ArchitectureWith a DynamicalSystems-BasedProgramming ModelThis paper provides an overview of a current approach for the construction of aprogrammable computing machine inspired by the human brain.By A LEXANDER N ECKAR , S AM F OK , B EN V. B ENJAMIN , T ERRENCE C. S TEWART, N ICK N. O ZA ,A ARON R. VOELKER , C HRIS E LIASMITH , R AJIT M ANOHAR , Senior Member IEEE,K WABENA B OAHEN , Fellow IEEEANDBraindrop is the first neuromorphic systema clean abstraction is presented to the user. Fabricated indesigned to be programmed at a high level of abstrac-a 28-nm FDSOI process, Braindrop integrates 4096 neuronstion. Previous neuromorphic systems were programmed atin 0.65 mm2 . Two innovations—sparse encoding through ana-the neurosynaptic level and required expert knowledge oflog spatial convolution and weighted spike-rate summationthe hardware to use. In stark contrast, Braindrop’s computa-though digital accumulative thinning—cut digital traffic drasti-tions are specified as coupled nonlinear dynamical systemscally, reducing the energy Braindrop consumes per equivalentand synthesized to the hardware by an automated proce-synaptic operation to 381 fJ for typical network configurations.ABSTRACT dure. This procedure not only leverages Braindrop’s fabricof subthreshold analog circuits as dynamic computationalprimitives but also compensates for their mismatched andtemperature-sensitive responses at the network level. Thus,Manuscript received April 24, 2018; revised October 12, 2018 and November 12,2018; accepted November 12, 2018. Date of current version December 21,2018. (Corresponding author: Alexander Neckar.)This work was supported in part by the ONR under Grants N000141310419 andN000141512827. The work of A. Voelker was supported by OGS and NSERC CGSD. The work of C. Eliasmith was supported by the Canada Research ChairsProgram under NSERC Discovery Grant 261453.A. Neckar, S. Fok, and B. V. Benjamin, were with the Department of ElectricalEngineering, Stanford University, Stanford, CA 94305 USA (e-mail:aneckar@gmail.com).T. C. Stewart, A. R. Voelker, and C. Eliasmith are with the Centre forTheoretical Neuroscience, University of Waterloo, Waterloo, ON N2L 3G1,Canada.N. N. Oza was with the Department of Bioengineering, Stanford University,Stanford, CA 94305 USA.R. Manohar is with the Department of Electrical Engineering, Yale University,New Haven, CT 06520 USA.K. Boahen is with the Department of Bioengineering, Stanford University,Stanford, CA 94305 USA, and also with the Department of Electrical Engineering,Stanford University, Stanford, CA 94305 USA.Digital Object Identifier 10.1109/JPROC.2018.2881432KEYWORDS Analog circuits; artificial neural networks; asyn-chronous circuits; neuromorphics.I. INTRODUCTIONBy emulating the brain’s harnessing of analog signalsto efficiently compute and communicate, we can buildartificial neural networks (ANNs) that perform dynamiccomputations—tasks involving time—much more energyefficiently.Harnessing analog signals in two important waysenables biological neural networks (BNNs) to save energyby using much more energetically expensive digital communication sparingly [1]. First, BNNs exploit the nervemembrane’s local capacitance to continuously and dynamically update their analog somatic potentials, sparsifyingtheir digital axonal signaling in time. Second, BNNs exploitlocal fan-out to reduce long-range communication by propagating their analog dendritic signals across O(n) distanceto O(n2 ) somas,1 sparsifying their digital axonal signal1 The cortical sheet’s third dimension is much shorter than its firsttwo (2–3 mm versus tens of centimeters).0018-9219 2018 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission.See http://www.ieee.org/publications standards/publications/rights/index.html for more information.144P ROCEEDINGS OF THE IEEE Vol. 107, No. 1, January 2019

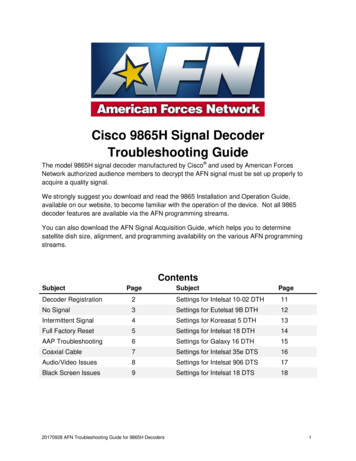

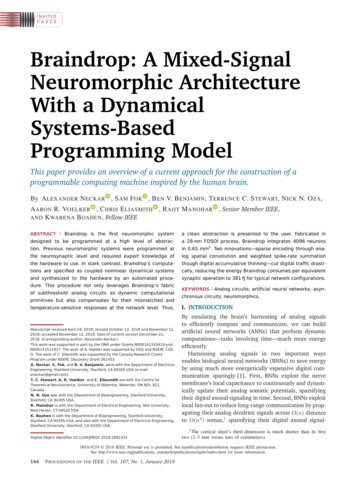

Neckar et al.: Braindrop: A Mixed-Signal Neuromorphic Architectureing in space. This complementary relationship betweenanalog dendritic signaling and digital axonal signaling iscompletely lost in traditional ANN implementations thatreplace discrete spike trains by continuous rates, dynamicneuronal behavior with static point nonlinearities, andspatially organized neuron arrays mimicking BNNs’ localconnectivity with globally connected neurons.The price to be paid for mimicking the BNNs’energy-efficient mixed-signal approach with modernCMOS process technology is the uncertainty in manufacturing. This uncertainty results in exponentially mismatched responses and thermal variability in analog (i.e.,physically realized) neuron circuits. Because these circuitsare not time-multiplexed, they must be sized as small aspossible to maximize neuron count, and because thesecircuits constantly conduct their bias current, they must bebiased with as little current as possible to minimize (static) power consumption. However, these two requirementsmake designing analog circuits in modern CMOS processeseven more challenging; smaller transistors have more mismatched threshold voltages, and minuscule currents areexponentially sensitive to this mismatch [2] as well as tothe ambient temperature [3].Thus, while using analog signaling promises energyefficiency because of its potential to sparsify the digitalcommunication in space and time, analog circuits’ inherent heterogeneity and variability impede programmability and reproducibility. This heterogeneity and variabilityare directly exposed to the user when the mixed-signalneuromorphic systems are programmed at the level ofindividual neuronal biases and synaptic weights [4]–[6].Because each chip is different, for a given computation,each must be configured differently. In addition, the siliconneurons inherit their transistors’ thermal variation, requiring further fine-tuning of programming parameters. Thislack of abstraction and reproducibility limits adoption toexperts who understand the hardware at the circuit level.To ease programmability and guarantee reproducibility,some recent large-scale neuromorphic systems adopt anall-digital approach [7], [8].This paper presents Braindrop (see Fig. 1), the firstmixed-signal neuromorphic system designed with a cleanset of mismatch- and temperature-invariant abstractions in mind. Unlike previous approaches for analogcomputation [9]–[12], which use fewer, bigger analog circuits biased with large currents to minimize mismatch (andits associated thermal variation), Braindrop’s hardwareand software embrace mismatch, working in concert toharness the inherent variability in its analog electronics toperform computation, thereby presenting a clean abstraction to the user. Orchestrating hardware and softwareautomatically is enabled by raising the level of abstractionat which the user interacts with the neuromorphic system.The user describes their computation as a system ofnonlinear differential equations, agnostic to the underlying hardware. Automated synthesis proceeds by characterizing the hardware and implementing each equationusing a group of neurons that are physically colocatedFig. 1.Mapping a computation onto Braindrop. (a) Desiredcomputation is described as a system of coupled dynamicalequations. (b) NEF describes how to synthesize eachsubcomputation using a pool of dynamical neurons. (c) User usesNengo, the NEF’s Python-based programming environment,to translate the equations into a network of pools. (d) Computationis implemented on Braindrop (blue outer outline indicates thepackage; inner outline indicates the die’s core circuitry). Nengocommunicates with Braindrop through its driver software to providea real-time interface.(called a pool). This computing paradigm, theoreticallyunderpinned by the Neural Engineering Framework(NEF) [13], is not only tolerant of, but also reliant on,mismatch; neuron responses form a set of basis functionsthat must be dissimilar and overcomplete. Dissimilarityenables arbitrary functions of the input space to be approximated by a linear transform. Overcompleteness ensuresthat the solutions exist in the null-space of the set’s thermalvariation. Thus, these two properties enable us to abstractthe analog soma and synapse circuits’ idiosyncrasies away.Section II briefly reviews the NEF, the level of abstraction at which a user interacts with the Braindrop system. Section III highlights accumulative thinning andsparse encoding, novel hardware implementations ofthe NEF’s linear decoding and encoding that sparsifydigital communication in time and space, respectively.Section IV describes Braindrop’s architecture and discusses its hardware implementation and software support. Section V characterizes and validates the hardware’sdecoding and encoding operations. Section VI demonstrates the performance of several example applicationscurrently running on the Braindrop. Section VII introduces an energy-efficient metric for spiking neural network (SNN) architectures with different connectivities—energy per equivalent synaptic operation—and determinesit for Braindrop over varying operating configurations.Section VIII compares Braindrop’s energy and area efficiencies with other SNN architectures. Section IX presents ourconclusions.II. N E U R A L E N G I N E E R I N G F R A M E W O R KThe NEF provides a way to translate a computation specified as a differential equation into a network of somas andVol. 107, No. 1, January 2019 P ROCEEDINGS OF THE IEEE145

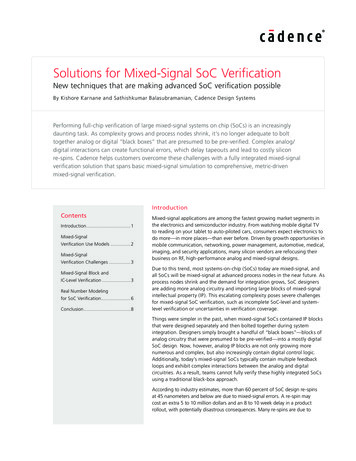

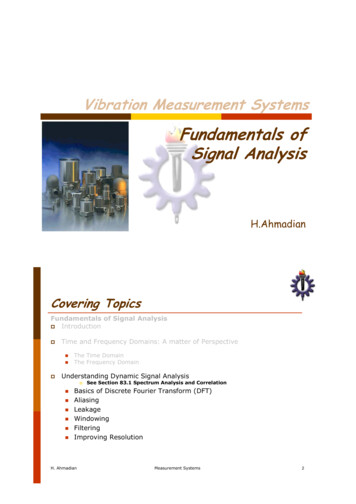

Neckar et al.: Braindrop: A Mixed-Signal Neuromorphic ArchitectureFig. 2.Emulating the nonlinear dynamical system ẋ t f x u t with a SNN. This system’s dynamics arematched by the SNN’s state vector, x(t), when Di and c(t) areassigned such thatD δx τ /τ f x x (after synapticτ iifiltering) and c t τ /τi u t . Branches of a soma’s dendrite andaxon realize weighting. Line thickness depicts weight magnitude.Sign may be positive (green line), negative (purple line), or zero(gray line).synapses interconnected via dendrites and axons. A somais viewed as implementing a static nonlinear function,whose argument is a continuous current and whose valueis the soma’s spike train. A synapse is viewed as theimplementing leaky integration (i.e., low-pass temporalfiltering), thereby converting these spike trains back into acontinuous current. A differential equation’s state variable(x), which may be multidimensional, is represented bya vector of d current signals. The equation specifies atransformation [f (x)] of this vector of d input currentsignals into another vector of d output current signals. Thistransformation is realized—and temporally integrated—bya collection (or pool) of N somas and d synaptic filters infour steps (see Fig. 2).First, differently weighted sums of the d input currentsare fed into each of the N somas (one per soma), a linearmapping known as encoding. Based on its particularweighting, each soma in the pool will provide a strongerresponse for a particular set of input vectors. A somais excited (receives positive current) when the vectorpoints in its preferred direction, and it is inhibited(receives negative current) when it points away. The NEFchooses these directions—specified by encoding vectors—randomly to ensure that all directions are representedwith equal probability.Second, these N current inputs are transformed by thesomas’ static nonlinearities into N spike trains, a pointwise146P ROCEEDINGS OF THE IEEE Vol. 107, No. 1, January 2019nonlinear mapping. Before passing the input currentthrough its static nonlinearity, each soma scales it by again and adds a bias current. The NEF assigns somas gainsand biases drawn from a wide distribution, resulting ina heterogenous set of nonlinearities. Compounded withtheir randomly drawn encoding vectors, the somas’ nonlinear responses—called tuning-curves—form a dissimilarand overcomplete basis set for approximating the arbitrarymultidimensional transformations of the input vector.Third, these N spike trains are converted into dweighted sums, another linear mapping known as decoding. For the weights, a decoding vector is assigned to eachsoma by solving a least-squares optimization problem (seeFig. 3). When each spike is viewed as a delta function withunit area, replacing it with a delta function with area equalto a component of the decoding vector and merging theresulting delta trains together yields the desired weightedsum for one of the d dimensions.Finally, the synaptic filters’ leaky integration is cleverlyexploited to integrate the transformed vector, a criticaldifference between the NEF, and other random-projectionnetworks, such as Extreme Learning Machines [14]–[16],which cannot realize a dynamic transformation. This operation is accomplished by feeding the merged, scaled deltatrains to the synaptic filters and adding their d outputcurrents to the d input currents through (recurrent) feedback connections (see Fig. 2). Thus, nonlinear differentialequations of arbitrary order may be implemented by asingle pool [13].More elaborate computations are first decomposed intoa coupled system of differential equations, and then, eachone is implemented by one of an interconnected set ofpools. These pools are interconnected by linking one pool’sdecoder to another pool’s encoder to form large networkgraphs. Linear transforms may be placed between decodersand encoders (see Fig. 4). The resulting SNN’s connectivityis defined by encoding vectors, decoding vectors, andFig. 3.Transformation y / πx (black curve) isapproximated by y AD (yellow curve), where each of A’s columnsrepresent a single neuron’s spike rates over x’s range (blue curvefor negative- and red curve for positive preffered directions) and Dis a vector of decoding weights, or, more generally, a matrix ofdecoding vectors. D is obtained by solving for argminD AD y λ D . To produce each panel, a basis pursuit isfirst performed on 1024 neurons’ tuning curves collected fromBraindrop to select the best sets of 3, 8, and 30 neurons’ responsesto form A, thereby demonstrating the effect of using more neuronson performance. Error bars represent 10th and 90th percentileswhen sampling for 0.3 s/point.

Neckar et al.: Braindrop: A Mixed-Signal Neuromorphic ArchitectureFig. 4.Operations and signal representations for an NEF network with two pools of neurons connected by decode-transform-encodeweights. Unlike Fig. 2, filtering takes place after encoding, leveraging these two linear operations’ commutativity. Three neurons emit threedeltas each. Their areas are equal to the weights: / , / , and / , from top to bottom. Merges ( ) conserve deltas and fan-out replicatesthem, and thus, the original nine deltas turn into 36 deltas by the time they reach the four synaptic filters.transform matrices, which occupy much less memory thanfully connected synaptic weight matrices, an appealingfeature of the NEF.The following five features make the NEF a particularlyappealing synthesis framework for mixed-signal neuromorphic hardware.1) Heterogeneous neuronal gains and biases—dueto transistor threshold-voltage mismatch—naturallyyield a diverse set of basis functions, which is desirable for function approximation.2) This set’s overcompleteness may be exploited to mitigate the tuning curves’ thermal variation by findingsolutions in the null-space [17], [18].3) Analog synaptic filtering provides a native dynamicalprimitive, which is necessary for emulating arbitrarydynamical systems.4) Theoretical extensions enable mismatch in synapticfilters’ time constants as well as their higher orderdynamics to be accurately accounted for [19], [20].5) Communicating between neurons using spikesincreases scalability because digital signaling isresilient to noise—unlike analog signaling.III. M A P P I N G T H E N E F T ON E U R O M O R P H I C H A R D WA R EIn a large-scale neuromorphic system, digital communication constitutes the largest component of the totalsystem’s power budget. Therefore, to improve energy efficiency, we have focused on reducing the digital communication in both time and space (i.e., making it more sparse,spatiotemporally). For temporal sparsity, we invented accumulative thinning, and for spatial sparsity, we inventedsparse encoding (see Fig. 5).Accumulative thinning is a digitally implemented,linear-weighted-sum operation that sparsifies digitalcommunication in time. This operation is performed inthree steps: 1) translate spikes into deltas with area equalto their weights; 2) merge these weight-area deltas intoa single train; and 3) convert this train into a unit-areadelta train (i.e., back to spikes),2 whose rate equals theweighted sum of input-spike rates. For the usual case ofweights smaller than 1, this method reduces total deltacounts through layers, communicating more sparsely thanprior approaches, which used probabilistic thinning, whileachieving the same signal-to-noise ratio (SNR).Sparse encoding represents the encoder not as a densematrix but as a sparse set of digitally programmed locations in a 2-D array of analog neurons. Each location,called a tap point,3 is assigned a particular preferreddirection, called an anchor-encoding vector. The diffusor—atransistor-based implementation of a resistive mesh—convolves the output of these tap points with its kernel torealize well-distributed preferred directions. Thus, neuronscan be assigned with encoding vectors that tile the multidimensional state space fairly uniformly using tap points assparse as one per several dozen neurons.Using digital accumulators for decoding and analogconvolution for encoding supports the NEF’s abstractionswhile sparsifying digital communication spatiotemporally,thereby improving Braindrop’s energy efficiency.A. Decoding by Accumulative ThinningMatrix-multiply operations lead to the traffic explosionwhen the weighted spike trains—represented by deltatrains—are combined by merging. One merge is associatedwith each output dimension. When a spike associated2 Only a spike’s time of occurrence carries information, whereas adelta’s area carries information as well as its timing.3 Meant to evoke the taproot of some plants, a thick central root fromwhich smaller roots spread.Vol. 107, No. 1, January 2019 P ROCEEDINGS OF THE IEEE147

Neckar et al.: Braindrop: A Mixed-Signal Neuromorphic ArchitectureFig. 5.Braindrop’s computational model replaces merges in Fig. 4 with accumulators ( ) and its encoding with sparse encoding followedby convolution. The nine original deltas are thinned to two before being sparsely fanned out, delivering four deltas to the synaptic filtersinstead of 36, while still reaching all the somas.Algorithm 1 Accumulator UpdateRequire: input w [ 1, 1]x : x wif x 1 thenemit 1 outputx : x 1else if x 1 thenemit 1 outputx : x 1end ifwith a particular input dimension occurs, the matrix’scorresponding column is retrieved and deltas with areaequal to each of the column’s components are mergedonto the output for each corresponding dimension,multiplying the traffic. As a result, O(din ) spikes enteringa matrix M Rdin dout result in O(din dout ) deltas beingthe output. This multiplication of traffic compounds witheach additional weight-matrix layer. Thus, O(N ) spikesentering a N d d N decode-transform-encodemultiply to O(N 2 d2 ) deltas (see Fig. 4).Accumulative thinning computes a linearly weightedsum of spike rates while reducing the number of deltasthat must be fanned out. The deltas’ areas—set equal to theweight—are summed into a two-sided thresholding accumulator to produce a train of signed unit-area deltas (seeAlgorithm 1). Because this operation is area-conserving,the synaptic filter’s leaky integration produces virtuallythe same result as it does for the merged weight-areadelta trains. For the usual case of weights smaller thanone, however, the accumulator’s state variable x (seeAlgorithm 1) spaces output deltas further apart (and more148P ROCEEDINGS OF THE IEEE Vol. 107, No. 1, January 2019evenly).4 Thus, it can cut a N d d N decodetransform-encode network’s delta traffic from O(N 2 d2 ) toO(N d) (compare Figs. 4 and 5), sparsifying its digitalcommunication in time by a factor N d.In practice, the total traffic is dictated by point-processstatistics of delta trains fed to synaptic filters and desiredSNR of their outputs. Traditionally, spike-train thinningas-weighting for neuromorphic chips has been performedprobabilistically as Bernoulli trials [21], [22], which alsoproduce unit-area-delta trains, but with Poisson statistics, whereas the accumulator operates as a deterministicthinning process (i.e., decimation), which produces trainswith periodic statistics (when the weights are small andassumed to be equal). With the Poisson statistics, SNRscales as λ, where λ is the rate of deltas, whereas withperiodic statistics, it scales as λ. Thus, the accumulatorcan preserve most of its input’s SNR while outputtingmuch fewer deltas than prior probabilistic approaches(see Fig. 6), thereby sparsifying digital communication intime. (For a mathematical analysis, see Appendix A.)Replacing the merging of inhomogeneous-area deltaswith the accumulation of their areas not only avoids trafficexplosion but also enables us to use a much simpleranalog synaptic filter circuit. For 8-bit weights, delta’sareas are represented by 8-bit integers. If these multibitvalues serve as input, a digital-to-analog converter (DAC)is needed to produce a proportional amount of current. This analog operation must be performed with therequisite precision, making the DAC extremely costly in4 For an output-delta rate of Fout , the accumulator’s step responseis jittered in time by up to 1/Fout due to variation in its initial state.This jitter is negligible if it is much shorter than the synaptic filter’stime constant, τ , because the filter’s delay is of the order of τ . Its stepresponse rises to 63% (1 1/e) of its final value in τ s.

Neckar et al.: Braindrop: A Mixed-Signal Neuromorphic ArchitectureFig. 6.Standard Decoding versus Accumulator versus BernoulliTrials. Top: deltas (vertical lines) generated by an inhomogeneousPoisson process are synaptically filtered (orange curve); the ideal var Z , calculated for theoutput is also shown (green curve). Middle: accumulator fed thesame deltas yields a similar SNR E Z / filtered train Z over a longer run. Its state (first inset) increments byw . with each input delta and thresholds at 1, triggering anoutput delta. Bottom: biased coin (p . ) is flipped for each delta.When the coin returns heads (second inset), an output delta isgenerated. This method accomplishes the same weighting but yieldspoor SNR.area. To avoid using it, compact silicon-synapse circuitdesigns take in unit-area deltas with signs denoting excitatory and inhibitory inputs [23]. The accumulator producesthe requisite unit-area deltas—modulating only their signand rate to convey the decoded quantity.In summary, the accumulator produces a unit-areadelta train with statistics approaching a periodic process,yielding higher SNR—for the same output delta rate—than Bernoulli weighting, which only produces Poissonstatistics.filter’s output currents with the somas’ input currents. Theoutput currents decay exponentially as they spread amongnearby somas [24]. The space constant of decay is tunedby adjusting the gate biases of the diffusor’s transistors.We leverage the commutativity of synaptic filtering andconvolution operations in our circuit design. By performingsynaptic filtering before convolution, and recognizing thata kernel need not be centered over every single neuron,we may reduce the number of (relatively large) synapticfilter circuits. Hence, the diffusor acts on temporally filtered subthreshold currents, and there is only one synapticfilter circuit for every four neurons. Through these twooperations’ commutativity, this solution is equivalent to theNEF’s usual formulation (compare Figs. 4 and 5).By choosing the adjacent tap points’ anchor-encodingvectors to be orthogonal, it is possible to assign variedencoding vectors to all neurons without encoding eachone digitally. The diffusor’s action implies that nearbyneurons receive similar input currents and, therefore,have similar encoding vectors (i.e., relatively small anglesapart). Hence, neighboring anchor-encoding vectors thatare aligned with each other yield similarly aligned encoding vectors for the neurons in between. This redundancy does not contribute to tiling the d-sphere’s surface.Conversely, vectors between tap points with orthogonalanchors span a 90 arc, boosting coverage.For 2-D and 3-D input spaces, just four and ninetap points, respectively, provide near-uniform tiling(see Fig. 8). Coverage may be quantified by plotting thedistribution of angles to the nearest encoding vector forpoints randomly chosen on the unit d-sphere’s surfaceB. Sparse Encoding by Spatial ConvolutionWe use convolution, implemented by analog circuits,to efficiently fan-out and mix outputs from a sparse setof tap points, leveraging the inherent redundancy of NEFencoding vectors. In the NEF, encoding vectors that uniformly tile a d-sphere’s surface are generally desirable.Because these encoding vectors form an overcompletebasis for the d-D input space, the greatest fan-out takesplace during encoding (see Fig. 4). Performing this highfan-out efficiently as well as reducing the associated largenumber of digitally stored encoding weights motivated usto use convolution (see Fig. 5).The diffusor—a hexagonal resistive mesh implementedwith transistors—convolves synaptic filter outputs with aradially symmetric (2-D) kernel and projects the resultsto soma inputs (see Fig. 7). It interfaces the synapticFig. 7.Diffusor operation. Somas are colored according to theproportion of input received from the red or green delta trains andshaded according to the total input magnitude received.Vol. 107, No. 1, January 2019 P ROCEEDINGS OF THE IEEE149

Neckar et al.: Braindrop: A Mixed-Signal Neuromorphic Architecture2-D metric space without distorting the relative distances.Furthermore, the number of neurons a single-pool networkneeds to approximate arbitrary functions of d dimensions isexponential in d [25]. Therefore, we simply make adjacentanchor encoders as orthogonal as possible and increasetheir number exponentially. (For empirical results, seeSection V-B.)In summary, using the diffusor to implement encoding isa desirable tradeoff. Encoding vectors are typically chosenrandomly, so the precise control of the original RN dmatrix is not missed. In exchange, we sparsify digitalcommunication in space—and reduce memory footprint—by a factor equal to the number of neurons per tap point.IV. B U I L D I N G B R A I N D R O PFig. 8.Encoding 2-D (left) and 3-D (right) spaces in a arrayof neurons. Top: for 2-D and 3-D, encoding vectors are generatedusing four and nine tap points (black dots, labeled with theirBraindrop implements a single core of the plannedBrainstorm chip, architected to support building amillion-neuron multicore system. As a target applicationto guide our design, we chose the Spaun brain model [26],which has an average decode dimensionality of eight.Thus, we provisioned the core with 16 8-bit weights perneuron, leaving ample space for transforms. Braindrop’sdigital logic was designed in a quasidelay-insensitiveasynchronous style [27], whereas its analog circuitswere designed using subthreshold current-modetechniques [28]. Automated synthesis software supportstranslating an abstract description of a dynamic nonlineartransformation into its robust implementation onBraindrop’s mismatched, temperature-variable analogcircuits.anchor-encoding vector), respectively. For 2-D, vector directionmaps to hue. For 3-D, the first dimension maps to luminance (whiteto black) and the other two to hue. Shorter vectors are moretransparent. Middle: resulting vectors are plotted. Bottom: vectorsare normalized, showing that they achieve reasonable radialcoverage.(see Fig. 9). By doing digital fan-out to four or ninetap points and leveraging analog convolution, we achieveperformance nearing that of the reference approach thatdigitally fans out to all 256 neurons.5 It is not surprisingthat the diffusor’s 2-D metric space efficiently tiles 2-Dand 3-D encoders that sit on 1-D and 2-D surfaces and,thus, can be embedded with little distortion of relativedistances.6Beyond 3-D, there are no longer clever arrangements ofa constant number of tap points that yields good coverage.Achieving good coverage becomes challenging because theencoders can no longer be embedded in the diffusor’s5 Alldistributions are approximated by the Monte Carlo method:generate a large number of random unit vectors (max(1000, 100 · 2d ))and find each vector’s largest innerproduct among the normalizedencoding vectors.6 In general, a d-D encoder sits on a (d 1)-D surface. Thus,the easily embeddable spaces could be extended to 4-D by buildinga 3-D diffusor, for instance, by stacking the thinned die and interconnecting them using dense thru-silicon vias.150P ROCEEDINGS OF THE IEEE Vol. 107, No. 1, January 2019A. ArchitectureTo maximize utilization of the core’s resources,4096 neurons, 64 KB of weight memory (WM), 1024accumulator buckets, and 1024 synaptic filters, two smallFig. 9.Angle-to-nearest-encoder CDFs, evaluated over the surfaceof the d-sphere (orange curve) for the 2-D (left) and 3-D (right)tap-point-and-diffusor encoders in Fig. 8. The 90th percentiles are0.07 and 0.20 rad, respectively, implying that 90% of the space hasgaps in coverage no bigger than these angles. For reference, CDFsfor an equal number (256) of encoding vectors distributed uniformlyrandomly on the d-sphere’s surface (green curve) and for four (2-D)or six (3-D) vectors aligned with the positive- ornegative-dimensional axes (red curve) are also shown.

Neckar et al.: Braindrop: A Mixed-Signal

efficiency because of its potential to sparsify the digital communication in space and time, analog circuits' inher-ent heterogeneity and variability impede programmabil-ity and reproducibility. This heterogeneity and variability are directly exposed to the user when the mixed-signal neuromorphic systems are programmed at the level of