Transcription

In "Proceedings of the International Conference on Cybernetics and Society",IEEE Systemsl Man and Cybernetics Societyl Atlanta, GAS/Octoberl 1981, pgs.393-397.THE INTELLIGENT EARA GRAPHICAL INTERFACE TO DIGITAL AUDIOChristopher SchmandtArohitecture Machine GroupMassachusetts Institute of TechnologyABSTRACTThe "Intelligent Ear" is a digitaldictaphone driven by an interactivegraphical interface. This systemexperiments in the use of cross mediamapping, between audio and its visualrepresentation, to facilitate manmachine interaction in sound editingtasks.The Ear's intelligence includes limitedkeyword recognition and display ofamplitude, i.e. phrashing data. A colorvideo display is capable of communicatingthis content simply but meaningfully,by mapping temporal audio events intospatial visual cues. The experienceof another area of information processing,screen oriented text editors, contributesto an easily used interface throughwhich the user can always visualizethe current state of the edited sound,The "Intelligent Ear" is an interactivegraphical interface to a digital audiorecording and playback system. The"Ear" is both a display oriented,content sensitive listening device anda "screen editor" for recorded sounds.It consists of a digital audio recordingand playback system coupled to a speechrecognizer; display and control is viaa color raster scan television monitoroverlayed with a touch sensitive surface.This system attempts to facilitate audiorelated tasks by providing a humaninterface to digitized sound using computer graphic techniques.The Graphical Audio InterfaceThe design emphasis of the IntelligentEar is on the interface, via graphics,to audio communications. We are attempting to show that a smart, andparticularly a highly interactive, display has the potential to revolutionizecontrol of otherwise non-graphical media.A key point is the Ear's intelligence;not only does it allow access to sounddata, it also attempts to understand it.As a listening device, the Ear digitizesa conversation or dictation and stores iton magnetic disk. It later scans therecording for the occurance of selectedkeywords. The recorded audio is thendisplayed graphically via a standardraster-scan color frame buffer. Soundamplitude modulates both height and colorof a waveform representation a the recording drawn on the display. The keywords which have been recognized in thespeech are written on the monitor belowthe appropriate 1.ocation in the waveformrepresentation of the sound.Interacting With Digital AudioThe Intelligent Ear can be used in avariety of ways depending on the particular application. In the most generalcase, we assume that a conversation ordictation has been pre-recorded at sometime and is now to be either reviewed oredited, perhaps by the same speaker,perhaps by another. At one extreme theEar can be used as a "minute taker" at ameeting; some time later, it displaysgraphically the conversations of themeeting, with different speakers shownin different colors, and keywords notedin the meeting highlighted textually.At the other extreme, the Ear is a dictation device which allows easy and cleanediting of memos, letters, etc; again,keywords can be displayed for more intuitive understanding of the speechwaveform displayed by the editor.The Ear's interface corisists of a representation of the recorded speech, andtouchable "buttons" similar to those ofa conventional tape recorder, but withpowerful additional editing functions.The sound is displayed much as on awaveform monitor or graph; lines acrossthe screen vary in height as well asintensity as a function of the amplitudeof the audio signal. Horizontal (i,e.time) resolution is adjusted so thatpauses between sentences and in somecases between words are clearly visible,allowing easy access to clean editpoints.The work r e p o r t e d h e r e i n was supported by t h e C y b e r n e t i c s TechnologyD i v i s i o n o f t h e Defense Advanced Research P r o j e c t s Agency, underC o n t r a c t No. MDA-903-77-c-00370360-8913/8I /OWl-O393 00.75 O 1981 IEEE

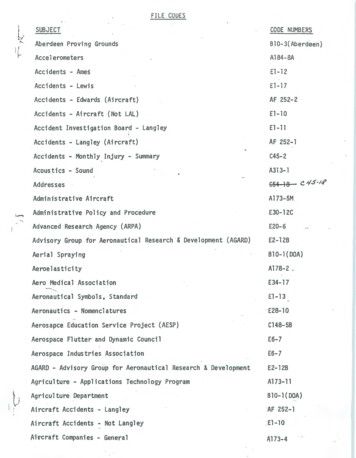

Figure I. The 1ntell.igent Ear. This photograph was taken from a standardtelevision monitor; the graphics use color in several areas. The screen istouch sensitive, and the words across the bottom are "buttons" which controlediting.A "sound cursor", a bright colored rectangle, is positioned by touching thedesired point on the sound waveform; itindicates where to make an edit or thepoint from which to start playing asound (see figure I),By touching a "play" button at the bottomof the screen, the sound starts playingfrom the current position of the soundcursor. While the sound is playing, theassociated waveform changes color insync with the audio, i.e. the user canvisually identify which part of the recording is playi.ng,a useful editing aid.The sound plays until a "stop" button istouched, or the end of the sound isreached,Note that there is a direct relation be-tween the (x, y) point touched on thescreen and a time offset into the recording, The waveform changing color inspace while, and quite in sync with, theaudio playing in time creates a powerfulspatial association between the two media.This cross-media link is a prerequisitefor an interface which allows immediateand intuitive interaction (1, 2).Selected keywords which have been detected are written under the appropriatepoint on the sound waveform displ.ay.Again, this allows a higher degree ofvisual perception of the content of therecording. Although userx%nteractionwith the graphical interface bringsnearly immediate response, keyword recognition is not a real. t.ime process, taki.ng

up to twelve times the length of a conversation to analyze it. The graphicalinterface communicates the degree ofcertainty for recognition of each wordin the intensity with which the word iswritten; the Ear displays words it ismore confident of brightly, and oneswith less confidence in less visiblegreys.AS an editor, the Ear allows both insertion and deletion of audio material. A"record" button allows the user to speakinto the sound document at the currentposition of the sound buffer. Similarly,a "delete" button proceeds to erasewhatever sound lies between the next twopoints on the sound waveform depictionthe user touches. The editor analyzesthe audio signal around the various editpoints to make a smooth edit; it's intelligence includes finding sentence orphrase boundaries.Whenever an edit is made, either a recorded insertion or deletion, the graphical display is updated to show thelatest change. Although keyword recognition cannot be done on insertions inreal time, the waveform representationchanges quickly, and the new version ofthe sound remains editable. Since editing is buffered (see below), extra redand green buttons are provided to saveor restore the edit buffers.11Display Oriented EditorAs an editor, the Intelligent Ear makesuse of many concepts which have gainedpopularity, with good reason, among usersof computer text editing systems. Withthe advent of inexpensive computer terminals with display capabilities, anumber of highly interactive editors havebeen written, and often are one of themost widely used programs on a computersystem (3, for example). Two specificfeatures of such editors have been deliberately incorporated, display orientationand buffered edit operations.A display oriented text editor is one inwhich a text file is continuously displayed in its current state. Typing control functions which inserts, delete, oralter text update the terminal screenimmediately. This instant feedback is invaluable for keeping track of the currentstate of the document being edited, and ofthe editor's recognition of the typist'sintent. The same idea is incorporatedinto the Ear for identical reasons, Whenever the sound is edited, the display ofthe sound amplitude, keywords, etc. isquickly updated, Cursor positioning,again as with a text editor, shows wherein the sound document the edit is tooccur. This makes learning the operationof the Ear a quick, intuitive process, andinsures that the user is more likely tomake the edits he/she actually wants.In addition, all edits are buffered. Twocopies of the sound are maintained, anda single edit is effected to only one ofthem. The sound can be thought of as alist of buffer offsets to play sequentially. In the case of a deletion, the soundis played up the beginning of the deletion,then skips to the audio section followingthe edit. In a recorded insert, thesound is played up to the insertion, thena separate record buffer is played, thenback to the original sound. Touchablebuttons then copy the edited sound intothe second edit buffer, so it will playas edited in a single linear playing.This feature gives a chance to reviewevery edit by playing it before any audiodate is permanently changed.Keyword RecognitionThe main barrier to speech recognitionfrom normal conversational speech (asopposed to the clearer enunciation andpauses between words usually associatedwith speech input devices) is the blurringtogether of a number of words into coarticulated phrases (4). Although theNippon Electron Company (NEC) DP-100 isremarkably successful analyzing connectedwords, it can process a maximum of 2.4seconds of continuous input, withoutpauses. These pauses tend to be absent inordinary conversational English,Using the flexibilty of a computer contolled digital audio system we devised ascheme to overcome the continuous inputlimitations of recognition hardware byplaying back small segments of the recorded sound with waits between eachsegment for the DP-100 to perform itsanalysis. The recording is divided intosections which are played sequentially.Since words may be chopped at the segmentboundaries, successive audio windows mustoverlap. What one actually hears duringthe keyword analysis of the recordingis a moving window of sound, with pausesbetween each play, and overlap betweeneach segment.Performance falls within a wide rangedepending on whether the recording is ofa single person dictating or a multispeaker conversation. Performance tendsto be a trade off; as we lower the levelof confidence required for acceptance,the number of correct recognitionsincreases, but so do the false guesses.Allowing one false guess per minute(reasonable in examples where keywordsoccur quite a bit more often) we havebeen able to recognize 25% to 75% of thekeywords in our experiments.

Several schemes have been developed togive higher recognition. A mu1t.i-passanalysis is used; the same recording isplayed to the speech recognizer threetimes, with varying window lengths andoverlaps. A majority poll is takenbetween the passes to determine whatcounts as a detection. This reducesfalse guesses as the signal (correctrecognitions) tends to remain morecoherrent across passes then the noise(false guesses on different passes tendto chose different words). This multipass algorithm roughly doubles keyworddetection performance, and has yieldedresults of between 40 and 100% of correct recognitions at the one false guessper minute rate.It is important to consider the impactof imperfect keyword recognition on thetask at hand. In fact, several graphical techniques increase the utility ofour detection algorithm. The firsttakes advantage of the fact that ourkeyword detection algorithm associatesa confidence value with each recognition,The text of keywords is written belowthe sound waveform display in a mannerwhich both indicates the confidence ofrecognition and simultaneously allowsuser filtering of these results. Themore confident we are of a keyword'sexistence, the brighter it is displayed in a greyscale of possible textintensities. Since the less confidentguesses are in fact more likely to beincorrect, they are written with fainttext so they can be easily ignored. Auser can quickly associate a trait suchas brightness with the Ear's certaintythat the word really exists.The second approach allows easy userfeedback into the keyword discriminationprocess. Two touch sensitive "buttons"are labelled "verify" and "erase".Touching either button, then the textfor a keyword, causes that keyword toeither turn full white (verify) orvanish (delete). From this point on,the Ear notes either 100% certainty ofthe existence of a keyword, or ceasesto display it; as this modifies a database associated with the sound recording,future uses will reflect this modifiedconfidence value. Thus the effectiveintelligence of the Ear can be boostedby allowing the user to contribute his/her own keyword detection.Hardware ConfigurationUser interaction with the Ear is via atouch sensitive color display. Graphicimages are drawn on a Ramtek 9300 framebuffer; this provides 9 bits per pixelof conventional raster scan video. Themonitor screen is covered with a clearf i l e diskdiskPerkin ElmerprocessorIIIIIIIIIIIIIII1. - - - -- ,-color monitor withtouch sensitivesurfaceIFigure 2. The hardware configuration.Solid lines indicate digital data paths;broken lines are analog audio or video.touch digitizing plastic surface manufactured by Elographics. All softwareand device interfaces inhabit a PerkinElmer 7/32 minicomputer with 512 Kbyte'sof memory. (see figure 2)At the heart of the Intelligent Ear isthe Laboratory's own design digital audiorecording and playback system, calledthe Soundbox (5,6), The Soundbox recordsand plays audio with a useable bandwidthof approximately 3.8 KHz, at eight bitsof resolution; this produces audio ofapproximately telephone quality. Suchrecording fidelity is quite sufficientfor experimental work involving voicebandwidth audio even though the humanvoice contains frequencies up to about8 KHz.Software drivers for the Soundbox moveblocks of digitized audio data between adedicated 20 megabyte magnetic disk andrecord and playback buffers. Sounds arestcred on a disk in a file system whichallows conventional data managementoperations: deletion, concatenation,copying, etc. Up to four sounds("voices") may be played simultaneously.Voices may also be preloaded with audiodata from disk so they may be playedsequentially with no audible pause between them, a feature we use for smoothlyplaying sounds Located in several editingbuffersA speech recognition system is connected.

to the audio output of the soundbox aswell as interfaced digitally to the Ear'scomputer. Recognition is accomplishedby a Nippon Electric Company DP-100Connected Speech Recognition System,(7).This unit is capable of recognizing upto 120 selectable words from continuoushuman speech. Connected speech is animportant requirement in this applicationsince we are analyzing normally spokenconversations without pauses betweenwords. The DP-100 will process up tofive words without pauses; for our keyword recognition operation the Soundboxbreaks the speech into arbitrary phrasesrather than trying to search for wordboundaries, a formidable task. Anotherimportant software feature of the Laboratory's version of the DP-100 is thatin addition to returning to the hostcomputer the word it has recognized, italso returns a "confidence value" indicating how close a rnatch was found withthat detection.SummaryThe Intelligent Ear concentrates ongraphic techniques at the merger ofseveral disciplines. An interactivecolor display is a highly useable interface to perusing and editing digital audiodata. The Ear's intelligence includeslimited keyword recognition and displayof amplitude, i.e. phrasing data, aboutthe sound. A color video display iscapable of communicating this intelligence simply but meaningfully. Finally,the experience of another area of information display, screen oriented texteditors, contributes to an easily usedand very practical editing system.AcknowledgementsBoth Eric Hulteen and Mark Vershel, graduate students at the Architecture Machine,have aided this project immensly, bothin sharing the programming tasks and incontributing creative ideas into the Ear'sdesign and keyword recognition algorithms.Their assistance is more than appreciated.References1.Negroponte, N. Augmentation of humanresources in command and controlthrough multiple media man-machineinteraction. MIT ArchitectureMachine Group, ARPA Report, 1976.2.Bolt, R.Ae Spatial-data-management,WIT Architecture Machine Group,DARPA Report, 1979.3.Ciccarelli, E. An Introduction to theEMACS Editor, MIT ArtificialIntelligence Laboratory Memo #447,January 1978.4. Reddy, D.R. Speech recognition bymachine: a review. Proceedingof the IEEE, 64, 4 (April l976),501-531.5. Hurd, Jonathan A.An InteractiveDigital Sound System for Multimedia Databases. S.B. Thesis,MIT, June 1979.6. Vershel, Mark. The Contribution of3-D sound to the Human-ComputerInterface. M.S. Thesis, MIT,June 1981.7. Kato, Yasuo. Words into action 111:a commercial system. ZEEE Spectrum(June 1980), 29.

IEEE Systemsl Man and Cybernetics Societyl Atlanta, GAS/ Octoberl 1981, pgs.393-397. THE INTELLIGENT EAR A GRAPHICAL INTERFACE TO DIGITAL AUDIO Christopher Schmandt Arohitecture Machine Group Massachusetts Institute of Technology ABSTRACT The "Intelligent Ear" is a digital dictaphone