
SOFTWARE TESTING
May/June 2009, Volume 2

Testing strategies for complex environments: Agile, SOA

Chapter 1: A SOA view of testing
Chapter 2: Agile testing strategies: Evolving with the product

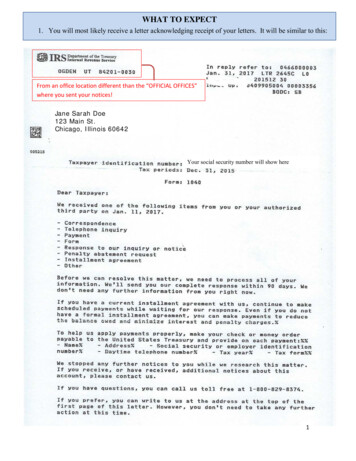

EDITOR’S LETTER

Testing in the new worlds of SOA and agile

UNLESS YOU LIVE in a sci-fi novel, there’s one rule of thumb for any new place you go: some things are different; some are the same. Usually, success, fun or survival in that new place depends upon how well you handle the differences. In this issue of SearchSoftwareQuality.com’s Software Testing E-Zine, the new “places” are a service-oriented architecture (SOA) and an agile software development environment.

Examining the ins and outs of software testing in SOA environments in “A SOA view of testing,” consultant Mike Kelly focuses on the “subtle differences.” What’s the same is that testers here must start with the basics. In SOA, the basics are connectivity, basic capacity, and authorization and authentication, which is not that different from other environments. The level of complexity of data models, however, is very different from that of other architectures and requires some new methods of testing.

While agile development requires different testing methods than the waterfall model, those differences lie as much in human behavior as in technology, according to consultant Karen N. Johnson in “Agile testing strategies: Evolving with the product.” Agile is a more collaborative process and calls for seizing “iterations as a chance to evolve test ideas,” Johnson writes. Fundamental testing tasks, like exploratory and investigative testing, stay the same, but the productivity of completing those jobs can increase.

Johnson and Kelly discuss the finer points of testing in agile and SOA, respectively, in this issue’s articles. They also describe revelations they’ve had while working in those environments and best practices they’ve learned and continue to use.

The theme of connectivity runs through these discussions of SOA and agile testing, as the former focuses on system connectivity and the latter on human collaboration. Both approaches, when done well, can help development and IT organizations achieve lower costs and better software.

JAN STAFFORD
Executive Editor
jstafford@techtarget.com

CHAPTER 1

A SOA view of testing

The complexity of a service-oriented architecture can complicate testing and make choosing and implementing the right testing tools more difficult.

BY MICHAEL KELLY

SERVICE-ORIENTED architectures (SOAs) are stealthily becoming ubiquitous. Large and small enterprises leverage them to integrate wide arrays of disparate systems into a cohesive whole. Most companies and project teams that implement SOA do so to reduce the cost, risk and difficulty of replacing legacy systems, acquiring new businesses or extending the life of existing systems.

Testing a SOA requires a solid understanding of the SOA design and underlying technology. Yet SOA’s complexity makes it difficult to figure out the functional purpose of the SOA, and more difficult still to choose and implement the right testing tools and techniques for it.

SOA is not simply Web services. According to iTKO (the makers of the TechTarget award-winning SOA testing tool LISA), nine out of 10 Web services also involve some other type of technology. In addition, they state that most testing for SOA isn’t done at the user interface level. It’s done either with specialized tools or as part of an integration or end-to-end test. This means people testing SOA need to be comfortable with varied and changing technology, need to understand the various models for SOA, and should be familiar with the risks common to SOA.

WHY SERVICE-ORIENTED ARCHITECTURES?

Large companies typically turn to SOA because their existing systems can’t change fast enough to keep up with the business. Because business operations don’t exist in discrete or finite units, there are a lot of dependencies built into existing systems. SOA is an attempt to separate the system from the business operations it supports.
This allows companies to incrementally build or decommission systems and minimizes the impact that changes in one system have on another.

Implementing a SOA removes the requirement of direct integration, which results in business operations that are easier to understand. That translates into a more testable system overall. Over time, this makes it easier to support a broader technology base, which in turn makes it easier to acquire and integrate new systems, extend the life of existing systems and replace legacy systems.

There are a number of different model architectures for implementing SOA, including publish and subscribe (often called “pub/sub”), request and reply, and synchronous versus asynchronous. In addition, they can be implemented in a broad range of technologies, including message-oriented middleware (MOM), simple HTTP posts with DNS routing, Web services, MQ series, JMS (or some other type of queuing system) and even files passed in batch processes.

If you’re testing a SOA you need to understand the implementation model and technologies involved, because these typically combine to give you some common features of a SOA. These features often become a focal point for your testing. They commonly include:

Transformation and mapping: As data moves through a SOA, it’s often transformed between data formats and mapped to various standard or custom data schemes.

Routing: The path information takes as it moves through a SOA is often based on business rules embedded at different levels of the architecture.

Security: SOAs often use standards such as application-oriented networking (AON), WS-Security, SAML, WS-Trust, WS-SecurityPolicy, or other custom security schemes for data security as well as authorization and authentication.

Load balancing: SOAs are often designed to spread work between resources to optimize resource utilization, availability, reliability and performance.

Translation: As data moves through a SOA, it’s often converted or changed based on business rules or reference data.

Logging: For monitoring and auditing, SOAs often implement multiple forms of logging.

Notification: Based on the model of SOA implemented, different notifications may happen at different times.
Adapters: Adapters (both custom and commercial) provide APIs to access data and common functions within a SOA.

FIGURING OUT WHAT TO TEST

The first thing you need to do when you start figuring out what to test for your SOA is to develop (or start developing) your test strategy. When I’m faced with a new project and I’m not sure where to start, I normally pull out the Satisfice Heuristic Test Strategy Model and start there. Each section of the Satisfice Heuristic Test Strategy Model contains things you’ll need to consider as you think about your testing:

Test techniques: What types of testing techniques will you be doing? What would you need to support those types of testing in terms of people, processes, tools, environments or data? Are there specific things you need to test (like security, transformation and mapping, or load balancing) that may require specialized techniques?

Product elements: What product elements will you be covering in your testing? What’s your scope? To what extent will different data elements be covered in each stage? Which business rules? Will you be testing the target implementation environment? How much testing will you be doing around timing issues? How will you measure your coverage against those elements, track it and manage it from a documentation and configuration management perspective?

Quality criteria: What types of risks will you be looking for while testing? Will you focus more on the business problem being solved or the risks related to the implementation model or technology being used? Will you look at performance at each level? What about security? How will you need to build environments, integrate with service providers, or find tools to do all the different types of testing you’ll need to do?

Project environment: What factors will be critical to your success and the success of the in-house team as you take over this work? How will you take in new work, structure your release schedules, or move code between teams, phases or environments? What are the business drivers to moving to a SOA and how will those come into play while you’re testing?

Recognize that as you think of these questions, it’s a matrix of concerns. A decision (or lack of decision) in each of these categories affects the scope of the decisions in the other three.
Therefore, you will most likely find yourself approaching the problem from different perspectives at different times.

Once you have an understanding of what features you’ll be testing and you know which quality criteria you’ll be focused on, you’re ready to dig in. Don’t be surprised if in many ways your testing looks like it does on a non-SOA project. SOA isn’t magic, and it doesn’t change things all that much. However, I’ve found that there are some subtle differences in where my focus is when I’m working on a SOA project.

COVER THE BASICS AS SOON (AND AS OFTEN) AS YOU CAN

The first area to focus on is typically connectivity. Establishing a successful round-trip test is a big step early in a SOA implementation. The first time connecting is often the most difficult.
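A round-trip check of this kind can be kept as a small script and rerun as a regression test. The following is only a minimal sketch in Python, under stated assumptions: the article does not name a transport or a tool, so the example assumes a plain HTTP endpoint that accepts JSON, and `EchoHandler`, `round_trip` and `check_connectivity` are hypothetical names invented for illustration. The echo server is a local stand-in so the sketch runs on its own; on a real project you would point `round_trip` at your actual service.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class EchoHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for a service endpoint: echoes the posted body."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # suppress per-request logging so test output stays quiet


def round_trip(url, payload, timeout=5.0):
    """POST the payload as JSON and return (HTTP status, decoded reply)."""
    data = json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    # An empty ProxyHandler bypasses any proxy configured in the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(request, timeout=timeout) as response:
        return response.status, json.loads(response.read().decode("utf-8"))


def check_connectivity():
    """Start the stub on a free local port, run one round trip, shut it down."""
    server = HTTPServer(("127.0.0.1", 0), EchoHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        url = "http://127.0.0.1:%d/ping" % server.server_address[1]
        return round_trip(url, {"ping": "hello"})
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    status, reply = check_connectivity()
    print(status, reply)
```

The point of the design is that the check is cheap enough to run on every build, so a broken connection shows up close to the change that caused it.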

Once you have that connectivity, don’t lose it. Build out regression tests for basic connectivity that you can run on a regular basis. While a SOA evolves, it is easy to break connectivity without knowing it. If you break it, you’ll want to find out as soon as possible so it’s easier to debug any issues.

Next you may want to look at basic capacity. Capacity at this level can be anything from basic storage to connection pooling to service throughput. I’ve been on a couple of projects where these elements weren’t looked at until the end, only to find that we had to requisition new hardware at the last minute or end up spending days changing configuration settings on network devices and Web servers. Figure out what you think you’ll need early on, and run tests to confirm those numbers are accurate and consistent throughout the project.

In addition, don’t put off testing for authorization and authentication until you get into the target environment. I’ve worked on several teams where development and test environments had different security controls than production, which led to confusion and rework once we deployed and tried to run using an untested permissions scheme. If you use LDAP in production, use it when testing. If you’re handling authorization in your SOAP request, do it consistently through your testing, even if you think it’s easier to disable it while doing “functional” testing.

FOCUS ON THE DATA

Service-oriented architectures aren’t just cleaner interfaces between systems; they are also complex data models. As data moves through a SOA it’s translated, transformed, reformatted and processed. That means you’ll have a lot of tests focused on testing data.
These tests typically make up the bulk of the regression test beds I’ve seen on projects implementing a SOA.

Tests focused on data in a SOA typically happen at the component level and leverage stubs and harnesses to allow for testing as close as possible to where the change takes place. This simplifies debugging and allows for more granular tracking of test coverage (based on a schema, mapping document or set of business rules). SOA teams are constantly thinking about regression testing for these tests. Small changes can have a large impact on the data, so these tests become critical when refactoring or adding new features.

For many teams, these tests can be part of the continuous integration process. While building these regression test beds can be very easy, once you have libraries of XML test cases lying around, maintaining them can be very time consuming. That makes a team’s unit testing strategy a critical part of the overall testing strategy. What will be tested at the unit level, component level, integration level and end-to-end? Starting as close to the code as possible, automating there, and running those tests as often as possible is often the best choice for data-focused testing.

UNDERSTAND YOUR USAGE MODELS

When I test a SOA, I develop detailed usage models. I originally used usage models solely when doing performance testing, but I’ve also found them helpful when testing SOAs. When I build usage models, I use the user community modeling language (UCML) developed by Scott Barber. UCML allows you to visually depict complex workloads and performance scenarios. I’ve used UCML diagrams to help me plan my SOA testing, to document the tests I executed, and to help elicit various performance, security and capacity requirements. UCML isn’t the only way you can do that; if you have another modeling technique you prefer, use that one.

Usage models allow us to create realistic (or reasonably realistic) testing scenarios. The power behind a modeling approach like this is that it’s intuitive to developers, users, managers and testers alike. That means faster communication, clearer requirements and better tests. At some level, every SOA implementation needs to think about performance, availability, reliability and capacity. Usage models help focus the testing dialogue on those topics (as opposed to just focusing on functionality and data).

When testing for SOA performance, I focus on speed, load, concurrency and latency.
My availability and reliability scenarios for SOA often focus on failover, application and infrastructure stress, fault tolerance and operations scenarios. And conversations around capacity often begin with a basic scaling strategy and spiral out from there (taking in hardware, software, licensing and network concerns as appropriate). Because the scope is so broad, effective usage modeling requires balancing creativity and reality.

DEMONSTRATING YOU CAN IMPLEMENT BUSINESS PROCESSES

At the end of your SOA implementation, you will have delivered something that implements or supports a business process. If the bulk of your testing up to that point has focused on various combinations of service-level processes and data functions, then your end-to-end acceptance tests will focus on those final implemented business processes. These tests are often manual, utilize the end systems (often user interface systems), and are slow and costly. All the testing outlined up to this point has been focused on making this testing more efficient and effective.

If you’ve successfully covered the basics, early and often, then you should have few integration surprises while doing your end-to-end testing. When you deploy the end-to-end solution, you don’t want to be debugging basic connectivity or authorization/authentication issues. If you’ve successfully covered the data at the unit and service level, then you won’t have thousands of tests focused on subtle variations of similar data. Instead, you’ll have tests targeted at high-risk transactions, or tests based on sampling strategies of what you’ve already tested. If you’ve done a good job of facilitating discussions around performance, availability, reliability and capacity, then you likely already reduced most of the risk around those quality criteria and have clear plans for migration to production.

If you’re testing your first SOA implementation, take some time to learn more about the underlying technologies and implementation models. Once you’re comfortable with what’s being done, start looking at the tools that are available to support your testing needs. There are many great commercial and open source tools available, and there is always the option to create your own tools as needed. Just make sure you’re selecting tools to fit the types of testing you want to do. Many tools will do more than you need, or may force you to do something in a way that’s not optimal for your project.

Finally, remember that whatever you’re building now will change over time.
Make sure you’re working closely with the rest of the team to ensure regression capabilities have been implemented in the most effective and cost-effective ways possible. When you come back a year later to make a small change, you don’t want to have to re-create all the work you did the first time around.

ABOUT THE AUTHOR: MICHAEL KELLY is currently the director of application development for Interactions. He also writes and speaks about topics in software testing. Kelly is a board member for the Association for Software Testing and a co-founder of the Indianapolis Workshops on Software Testing, a series of ongoing meetings on topics in software testing. You can find most of his articles and blog on his website, www.MichaelDKelly.com.


CHAPTER 2

Agile testing strategies: Evolving with the product

Software testing in an agile environment is the art of seizing iterations as a chance to learn and evolve test ideas.

BY KAREN JOHNSON

YOU CAN ACHIEVE successful testing in agile if you seize upon iterations as a chance to learn and evolve test ideas. This is the art of software testing strategy in agile. The challenge: How can testing be planned and executed in the ever-evolving world of the iterative agile methodology? This article discusses some of the philosophies, pros, cons and realities of software testing in the agile iterative process.

One aspect of an agile software development project that I believe benefits testers is working with iterations. Iterations provide a preview opportunity to see new features and a chance to watch features evolve. The early iterations are such sweet times to see functionality flourish. I feel as though I’m able to watch the process, to see the growth and the maturity of a feature and a product. I feel more a part of the process of development with an iterative approach to building than I do when I’m forced to wait through a full waterfall lifecycle to see an allegedly more polished version of an application.

But there is an opposing point of view that sees the iterative approach as frustrating. Early iterations could be viewed as a hassle; the fact that features will evolve and morph is potentially maddening if a tester thinks their test planning could become obsolete. What if the features morph in such a way as to crush the test planning and even crush the spirit of a waterfall-oriented tester? I think it’s a matter of making a mind shift.

Early iterations of functionality provide time to learn and explore. Learning is opportunity. While I’m learning the product, I’m devising more ideas of how to test and what to test. Cycles of continuous integration are chances to pick up speed with manual testing and fine-tune test automation.

The iterative process also gives me time to build test data. I’m able to take advantage of the time. I also see my learning grow in parallel to the maturity of a feature. As I’m learning, and the functionality is settling down, I can’t help but feel that both the product as a whole and I as a tester are becoming more mature, robust and effective. I’m learning and devising more ideas of how to test and what to test and potentially building test data to test. We’re growing together.

Cem Kaner outlined the approach of investigatory testing, and Scott Ambler discussed it in an article called “Agile Testing Strategies.” Having been through a couple of agile projects before reading Scott’s article, I found myself nodding in agreement throughout. One of Kaner’s ideas on investigative testing that I especially like and have experienced is finding both “big picture” and “little picture” issues.

I enjoy the early iterations to stomp about an application so that I can understand it. I like seeing my knowledge escalate to the point where I’m asking good, solid questions that help the development process move along. I especially enjoy asking development a question and receiving a pensive look from a developer, telling me I’ve just found a bug in code that I’ve not even seen or that hasn’t even been built. Talk about finding your bugs upstream.
Frankly, there’s also a greater chance that my own ideas will be incorporated, since agile gives me the opportunity to provide feedback about features early enough that my ideas can actually make it into a product. This is far more likely with agile than if the product was fully baked before I had my first whiff.

I’ve long loathed the idea that a fat stack of requirements could give me everything I needed. “Here you go,” I’ve been told. “Now you can write those test cases you testers build, because if you only test to requirements, the requirements and specifications are all you need.” I want to holler, “No, it isn’t so!” An hour at the keyboard has more value to me than a day reading specifications. I want time with an application, I want time to play, and I want time to learn. And the agile iterative process gives me that.

PUTTING STRATEGY INTO PRACTICE: OUTLINE TEST IDEAS

Review the user stories created for upcoming features. Read each story closely, envisioning the functionality. Write questions alongside the user stories; if possible, record your questions on a wiki where your comments and the user stories can be viewed by the team. Sharing your early thoughts and questions on a team wiki gives the entire team a chance to see the questions being asked. Raising more questions and conversations helps the entire team’s product knowledge evolve. Also record your test ideas. This gives the developers a chance to see the test ideas that you have. Miscommunications have an early chance for clarification.

When I write my early test ideas, they’re usually formatted as a bulleted list, nothing glamorous. I raise my questions with the team and the developers on team calls or at times I arrange for that purpose. I openly share my test ideas and hunches at any possible time that I can. I tell every developer I work with that my work is not and does not need to be mysterious to them. I share as much as anyone is willing to listen to or review as early and as often as I can. To be honest, often the developers are busy and ask for less than I would prefer, but that’s OK.

I sometimes mix how I record my test ideas, but the recording isn’t the point. The idea is to be constantly thinking of new and inventive ways to find a break in the product. Find a way to record test ideas. Be prepared to record an idea at any time by having a notebook, index cards or a voice recorder. Whatever the medium, make the process easy.

GAIN A SENSE OF PRIORITY

After reviewing all of the user stories, develop a sense of priority within each story and then across all the stories. What do you want to test first? What have you been waiting to see? And what test idea is most likely to evoke the greatest issue?
These are the first tests.

The theory behind the first-strike attack is the old informal risk analysis. Risk analysis doesn’t go away with agile.

When the next iteration comes, I’m not in pause mode. I know where I want to be, I know which tests I want to run. I don’t have any long test scripts to rework because of changes. I expect change. As I test these early releases, I take notes. I adjust my ideas as I learn the application. I find that some early test ideas no longer make sense, no longer apply. I generate new ideas as I learn with each iteration.

USE SWEEP TESTING

In 2007, I blogged about my approach to exploratory testing through a concept I call sweep testing. The practice is one of accumulating test ideas, often using Excel. I could say I build test ideas into a central repository, but the term “repository” has such a heavy sound to it, when what I really mean is that I take the time to collect those scraps of test ideas and early hunches and pool them together in one place. As the early iterations continue and my test ideas come to me at random, unplanned times, I’ve jotted down ideas on index cards in my car, as voice recordings on my BlackBerry or as notes on the team wiki.

As the project continues, early test ideas fall by the wayside and new ideas come into place. Taking the time to list my ideas in one place helps me rethink ideas and gain that revised, better informed sense of order and importance. Once I’ve built my list and my list sweeps across all the functionality, I’m ready. I feel prepared, and I can launch into testing rapidly once the build hits.

I function as the only tester on projects fairly often, so I’m able to use my concept of sweep testing without needing to share, do version control or address the issues that come into play when I’m not testing alone. On projects where I have had other testers, I have used the concept of sweep testing to assign different worksheets or sections of a sheet from the same Excel file and had no issues with the divide-and-conquer approach. I write in bulleted lists. The lists are ideas, not steps or details.

Now it’s not just the product that has evolved through the iterations. I have evolved as a tester as well.

COLLABORATIVE SPIRIT: PEOPLE WORKING TOGETHER

The shortage of people, money and time, and the twisted situations each of these can present: agile doesn’t cure all the blues. So it’s best not to be too naïve, to think a process change or a change in approach is going to suddenly resolve all the murky events and behaviors that can happen on a project.

I still believe that the most powerful advantage on any project is people working together who want to work together.
This is one of the core principles of the context-driven school of testing.

Bret Pettichord discusses several ways to build a collaborative spirit in his presentation “Agile Testing Practices.” The concepts of pairing together and no one developing specialized knowledge are two constructs that I find especially insightful. I just seem to work alone more often than not and have had fewer ch
