
Backup Compression and Storage Deduplication: A perfect match?
Hosted by David Gugick & David Swanson, Dell Software
June 27, 2013

Agenda
- Speaker Introductions
- Deduplication Explained
- Deduplication and Backup Compression Benefits
- Ingest Rates
- Backup Recommendations
- Real-World Performance
- Takeaways
- Q&A
- Resources

Your Hosts
- David Gugick, Product Management, Data Protection, Dell Software
  – david.gugick@software.dell.com
  – @davidgugick
- David Swanson, Database Systems Consultant, Dell Software
  – david.swanson@software.dell.com

Deduplication Explained
- Eliminates the need to save duplicate data
- Connections: CIFS, NFS, proprietary (DD Boost, Dell RDA)
- Inline vs. post-process
  – Max ingest rate (single stream vs. aggregate)
- Find matches
  – Chunking: sliding windows / variable block size
- Compress
- Target vs. source-side deduplication
- Software vs. hardware solutions
- Read speed (rehydration)
  – Overhead varies by vendor
- Replication
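The "sliding windows / variable block size" bullet is the key to why deduplication survives small edits. As a hedged sketch (not any vendor's actual algorithm), content-defined chunking runs a polynomial rolling hash over a small window and cuts a chunk wherever the low bits of the hash are zero, so boundaries follow the content rather than byte offsets. An insertion then disturbs only the nearby chunks, whereas fixed-size blocks shift every boundary after it. The 48-byte window, the 12-bit mask, the 1 KiB/16 KiB chunk limits, SHA-256 fingerprints, and the names `cdc_chunks`, `fixed_chunks`, and `dedupe_ratio` are all illustrative assumptions.

```python
import hashlib
import random

# Illustrative parameters only (assumptions); real appliances choose their own
# window sizes, boundary masks, and min/max chunk limits.
WINDOW = 48                 # bytes covered by the sliding window
MASK = (1 << 12) - 1        # cut when the low 12 hash bits are zero (~1 in 4096 positions)
MIN_CHUNK = 1024
MAX_CHUNK = 16384
BASE = 257
MOD = (1 << 61) - 1


def cdc_chunks(data: bytes) -> list[bytes]:
    """Variable-size chunks whose boundaries depend on content, not offsets."""
    drop = pow(BASE, WINDOW - 1, MOD)  # weight of the byte leaving the window
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        if i - start >= WINDOW:            # window is full: slide it forward
            h = (h - data[i - WINDOW] * drop) % MOD
        h = (h * BASE + byte) % MOD
        size = i - start + 1
        if (size >= MIN_CHUNK and (h & MASK) == 0) or size >= MAX_CHUNK:
            chunks.append(data[start:i + 1])
            start, h = i + 1, 0            # restart the window at the new chunk
    if start < len(data):
        chunks.append(data[start:])
    return chunks


def fixed_chunks(data: bytes, size: int = 4096) -> list[bytes]:
    """Fixed-size blocks, for comparison."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def dedupe_ratio(backups: list[bytes], chunker) -> float:
    """Logical bytes / physical bytes when each unique chunk is stored once."""
    store: dict[bytes, int] = {}
    logical = 0
    for backup in backups:
        logical += len(backup)
        for c in chunker(backup):
            store.setdefault(hashlib.sha256(c).digest(), len(c))
    return logical / sum(store.values())


rng = random.Random(42)
base = rng.randbytes(100_000)                             # stand-in for one backup
edited = base[:1000] + rng.randbytes(100) + base[1000:]   # same data, 100 bytes inserted

print(f"variable-size: {dedupe_ratio([base, edited], cdc_chunks):.2f}:1")
print(f"fixed-size:    {dedupe_ratio([base, edited], fixed_chunks):.2f}:1")
```

With one random 100 KB "backup" and a copy that has 100 bytes inserted near the start, the variable-size chunker should store little more than one copy of the data (a ratio near 2:1), while the fixed-size chunker finds essentially no matches (about 1:1). Real appliances also compress the unique chunks, which this sketch leaves out.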

Deduplication Effectiveness Varies
- Variables that influence the dedupe ratio for a given workload include:
  – The type of data being backed up: not all data sets have the same amount of duplicate data or compressibility
  – The frequency of backups: more frequent backups build the dedupe dictionary more quickly
  – The retention period for backup jobs: longer retention yields higher ratios
  – The types of backups: full backups dedupe better than differential or transaction log backups
- Estimated deduplication ratio
  – Ratio estimates range from 9:1 to 12:1 for databases, equivalent to roughly 89-92% compression
  – Not a lot of duplicate data between databases
  – Benefits with databases are largely due to chunk matches within a single database
- Retention recommendations
  – Keep only what you need: don’t keep more backups simply to raise the ratios
- Full or differential backups?
  – Most vendors estimate logical storage (dedupe ratios) based on whether the customer performs full backups or leverages differential/incremental backups
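A dedupe ratio of N:1 means 1/N of the logical data is physically stored, so the space saved is 1 - 1/N. A small helper (the name `ratio_to_savings` is hypothetical) makes the conversion for the quoted 9:1 to 12:1 range explicit:

```python
def ratio_to_savings(ratio: float) -> float:
    """Fraction of space saved for a dedupe ratio given as N (meaning N:1)."""
    if ratio < 1:
        raise ValueError("ratio must be at least 1 (1:1 means no savings)")
    return 1 - 1 / ratio


for r in (9, 10, 12):
    print(f"{r}:1 -> {ratio_to_savings(r):.1%} of logical size saved")
# 9:1 -> 88.9% of logical size saved
# 10:1 -> 90.0% of logical size saved
# 12:1 -> 91.7% of logical size saved
```

Note how flat the curve is at this end: going from 9:1 to 12:1 saves only about three more percentage points of the logical size.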

Deduplication Benefits
- Storage is reduced
- Replication speeds improve
- Processing is moved from servers to storage

Backup Compression Benefits
- Reduces or eliminates the disparity between source and target disk speeds
  – Backup speeds improve
  – Restore speeds improve
- Storage is reduced
- Network utilization is reduced
- Replication speeds improve
- Dump-to-tape and restore-from-tape speeds improve
- Helps with initializing Log Shipping / Mirroring / AlwaysOn Availability Groups

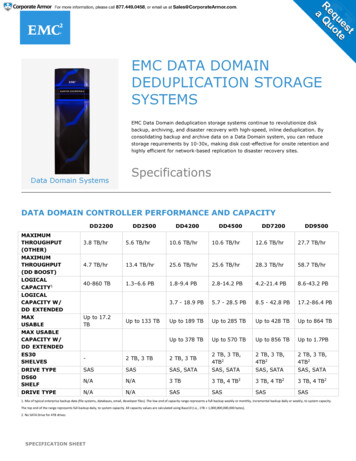

Ingest Rates
- Max ingest rate determines how fast the device can consume data
  – Many times, stats are based on multiple backup streams
  – Single-stream performance may be lower
  – Varies widely by how much you spend
- Network plays an important part (theoretical maximums; in practice, limits are lower):
  – 1 Gb: 125 MB/sec
  – 10 Gb: 1.25 GB/sec
  – Fibre Channel (8 GFC): 1.6 GB/sec full duplex (about 800 MB/sec in each direction)
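The gap between single-stream and aggregate ingest, and the network ceiling, can be reasoned about with a deliberately simple model: each of n parallel streams gets an equal share of the tighter of the appliance's aggregate limit and the network line rate, capped at the appliance's single-stream limit. The appliance figures below (300 MB/s per stream, 1000 MB/s aggregate), the 500 GB database, and all function names are hypothetical. The bits-to-bytes conversion reproduces the 1 Gb and 10 Gb rows; Fibre Channel rates are quoted directly rather than derived this way.

```python
def line_rate_mb_per_s(gigabits_per_s: float) -> float:
    """Theoretical line rate: 1 Gb/s = 1000 Mb/s, 8 bits per byte, no protocol overhead."""
    return gigabits_per_s * 1000 / 8


def per_stream_mb_per_s(streams: int, single_stream_cap: float,
                        aggregate_cap: float, network_cap: float) -> float:
    """Each stream gets an equal share of the tighter shared limit,
    but never more than the appliance's single-stream limit."""
    shared = min(aggregate_cap, network_cap) / streams
    return min(single_stream_cap, shared)


def backup_minutes(size_gb: float, rate_mb_per_s: float) -> float:
    """Lower bound on transfer time for size_gb at a steady rate."""
    return size_gb * 1000 / rate_mb_per_s / 60


# Hypothetical appliance: 300 MB/s per stream, 1000 MB/s aggregate.
for gbits in (1, 10):
    net = line_rate_mb_per_s(gbits)
    for n in (1, 2, 3, 4):
        rate = per_stream_mb_per_s(n, 300, 1000, net)
        print(f"{gbits:>2} Gb, {n} stream(s): {rate:6.1f} MB/s each, "
              f"500 GB backup >= {backup_minutes(500, rate):5.1f} min")
```

On 1 Gb the network is the bottleneck from the first stream onward, so every added parallel backup slows the others. On 10 Gb the hypothetical appliance's single-stream limit dominates until about three streams, after which the aggregate limit takes over. Real throughput will be lower once protocol overhead and contention from other teams' backups are included.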

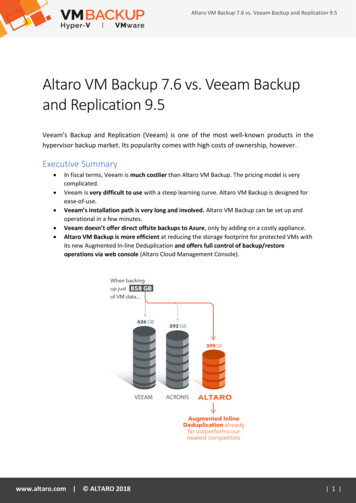

Backup Recommendations
- Offset backups to avoid network and ingest rate contention
- Test with and without compression
  – Try a low-CPU compressor
  – 85% compression gives you close to 7X the write bandwidth (1 / 0.15 ≈ 6.7X)
- Consider using differential backups to reduce storage and backup time
  – A 70% reduction in data backed up means backups run, on average, 3.3X faster (1 / 0.3 ≈ 3.3X)
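Both multipliers come from the same arithmetic: if the bytes that actually cross the wire or hit the target shrink by a fraction r, a fixed-bandwidth pipe moves 1 / (1 - r) times as much logical data. A minimal sketch, assuming compression CPU cost is not the bottleneck; the 600 MB/s source read rate and 125 MB/s target rate are hypothetical, and the function names are illustrative:

```python
def speedup_factor(reduction: float) -> float:
    """How many times more logical data fits through a fixed pipe when the
    bytes actually sent shrink by `reduction` (0.85 means 85% smaller)."""
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be in [0, 1)")
    return 1 / (1 - reduction)


def effective_backup_mb_per_s(read_mb_s: float, write_mb_s: float,
                              reduction: float) -> float:
    """Logical backup rate limited by the slower of source reads and
    compressed target writes. Assumes the CPU keeps up with compression."""
    return min(read_mb_s, write_mb_s * speedup_factor(reduction))


print(f"85% compression: {speedup_factor(0.85):.2f}X write bandwidth")
print(f"70% less data (differential): {speedup_factor(0.70):.2f}X faster")

# Hypothetical server reading at 600 MB/s, target limited to 125 MB/s (1 Gb).
print(effective_backup_mb_per_s(600, 125, 0.0))   # uncompressed: target-bound
print(effective_backup_mb_per_s(600, 125, 0.85))  # compressed: source-bound
```

In the hypothetical case, uncompressed backups run at the 125 MB/s target limit, while 85% compression lifts the target's effective capacity above what the source disks can read, so the disparity between source and target speeds disappears.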

Backup Speed – 1 Backup

Backup Speed – 2 Parallel Backups

Backup Speed – 3 Parallel Backups

Restore Speed

Storage Footprint

Takeaways
- Backup compression and deduplication are a good match
- Test your environment
  – Your results will vary based on many factors, including the rated speed of the appliance, network design, backup job coordination, compressibility of the database, and the database data change rate
  – Don’t expect much deduplication between different databases: most of the benefits are gained from backups of the same database
- Deduplication storage appliances are almost always shared in an environment
  – A single test on a single database in the lab is not representative of production
  – Furthermore, running full backups on the same database 30 times in a row as a test is not representative of production either
  – Even with exclusive access to deduplication storage by the DBA team, there will usually be contention from parallel backup streams
- You won’t know the full effect of performing uncompressed backups until you test
  – Maintenance windows and RTOs may be affected

Takeaways
- Test using lightweight backup compression
  – Keeps CPU load on the database server low
  – Gives the deduplication storage the opportunity for some extra dedupe
  – Avoid Adaptive Compression to maximize deduplication
- If backup and restore times are most important, don’t be concerned with actual storage consumed
  – At worst, it’s a wash; at best, you’re saving space with compression
  – Don’t be overly concerned with final deduplication ratios: don’t keep 30 days of backups for each database just to get better deduplication ratios if you only need 14 days
- Consider reducing data backed up using differential backups
  – Reduces the data read from SQL Server, sent over the network, and processed by the storage
  – Shortens backup windows
  – Can be compressed just the same
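To see why a higher dedupe ratio is not a goal in itself, consider a deliberately simple model (an assumption, not measured data): one full backup per day of a 100 GB database in which 5% of the chunks change each day. Stretching retention from 14 to 30 days raises the ratio from about 8.5:1 to about 12.2:1, but physical storage also grows, from 165 GB to 245 GB. The function name and all parameters are hypothetical.

```python
def retention_model(days: int, db_gb: float, daily_change: float):
    """Hypothetical model: one retained full backup per day; each new full
    shares all but `daily_change` of its chunks with earlier backups.
    Ignores compression and chunk-boundary effects."""
    logical = days * db_gb
    physical = db_gb * (1 + (days - 1) * daily_change)
    return logical, physical, logical / physical


for days in (14, 30):
    logical, physical, ratio = retention_model(days, db_gb=100, daily_change=0.05)
    print(f"{days} days: {logical:.0f} GB logical, {physical:.0f} GB physical, "
          f"{ratio:.1f}:1")
```

The ratio improves only because the logical total in the numerator grows faster than the physical footprint; the appliance still stores more bytes.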

Q&A

Resources - References
- Some Deduplication Resources
  – Demystifying Deduplication White Paper: .pdf
  – Why Dedupe is a Bad Idea for SQL Server Backups: e-is-a-bad-idea-for-sql-serverbackups/
  – Backup Compression and Deduplication blog posts: cation-good-or-bad
- LiteSpeed
  – LiteSpeed Landing Page: http://www.quest.com/litespeed-for-sql-server/
  – Tech Brief: Top 7 LiteSpeed Features DBAs Should Know About: tures-dbas-should-knowabout815805.aspx
  – Webcasts and Events: d 15&prod 192
- Dell DR 4100
  – http://www.dell.com/us/business/p/dell-dr4100/pd

Thanks
