Transcription

Spec Sheet

Cisco HyperFlex HX220c M5 Node (HYBRID)

CISCO SYSTEMS
170 WEST TASMAN DR
SAN JOSE, CA, 95134
WWW.CISCO.COM

PUBLICATION HISTORY
REV C.10, AUGUST 16, 2022

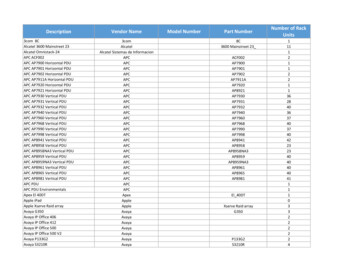

- OVERVIEW
- DETAILED VIEWS
  - Chassis Front View
  - Chassis Rear View
- BASE NODE STANDARD CAPABILITIES and FEATURES
- CONFIGURING the HyperFlex HX220c M5 Node
  - STEP 1 VERIFY SERVER SKU
  - STEP 2 SELECT DEPLOYMENT MODE (OPTIONAL)
  - STEP 3 SELECT CPU(s)
  - STEP 4 SELECT MEMORY
    - CPU DIMM Configuration Table
  - STEP 5 SELECT RAID CONTROLLER
    - SAS HBA (internal HDD/SSD/JBOD support)
  - STEP 6 SELECT DRIVES
  - STEP 7 SELECT PCIe OPTION CARD(s)
  - STEP 8 ORDER GPU CARDS (OPTIONAL)
  - STEP 9 ORDER POWER SUPPLY
  - STEP 10 SELECT POWER CORD(s)
  - STEP 11 SELECT ACCESSORIES
  - STEP 12 ORDER SECURITY DEVICES (OPTIONAL)
  - STEP 13 ORDER TOOL-LESS RAIL KIT AND OPTIONAL REVERSIBLE CABLE MANAGEMENT ARM
  - STEP 14 SELECT HYPERVISOR / HOST OPERATING SYSTEM / INTERSIGHT WORKLOAD ENGINE (IWE)
    - Intersight Workload Engine (IWE)
  - STEP 15 SELECT HX DATA PLATFORM SOFTWARE
  - STEP 16 SELECT INSTALLATION SERVICE
  - STEP 17 SELECT SERVICE and SUPPORT LEVEL
- SUPPLEMENTAL MATERIAL
  - Hyperconverged Systems
  - 10 or 25 Gigabit Ethernet Dual Switch Topology
  - CHASSIS
  - Block Diagram
  - Serial Port Details
  - Upgrade and Servicing-Related Parts
  - KVM CABLE
- DISCONTINUED EOL PRODUCTS
- TECHNICAL SPECIFICATIONS
  - Dimensions and Weight
  - Power Specifications
  - Environmental Specifications
  - Extended Operating Temperature Hardware Configuration Limits
  - Compliance Requirements

OVERVIEW

Cisco HyperFlex Systems unlock the full potential of hyperconvergence. The systems are based on an end-to-end software-defined infrastructure, combining software-defined computing in the form of Cisco Unified Computing System (Cisco UCS) servers, software-defined storage with the powerful Cisco HX Data Platform, and software-defined networking with the Cisco UCS fabric that will integrate smoothly with Cisco Application Centric Infrastructure (Cisco ACI). Together with a single point of connectivity and hardware management, these technologies deliver a preintegrated and adaptable cluster that is ready to provide a unified pool of resources to power applications as your business needs dictate.

The HX220c M5 servers extend the capabilities of Cisco’s HyperFlex portfolio in a 1RU form factor with the addition of the 2nd Generation Intel Xeon Scalable Processors, 2933-MHz DDR4 memory, and an all flash footprint of cache and capacity drives for highly available, high performance storage.

Deployment Options

Starting with HyperFlex 4.5(2a), the following two deployment options are supported:

- HX Data Center with Fabric Interconnect: This deployment option connects the server to Cisco Fabric Interconnect. The installation for this type of deployment can be done using the standalone installer or from Intersight.
- HX Data Center without Fabric Interconnect: This deployment option allows server nodes to be directly connected to existing switches. The installation for this type of deployment can be done from Intersight only.

The Cisco HyperFlex HX220c M5 Node is shown in Figure 1.

Figure 1 Cisco HyperFlex HX220c M5 Node

- Front View with Bezel Attached
- Front View with Bezel Removed
- Rear View (no VIC or PCIe adapters installed)

DETAILED VIEWS

Chassis Front View

Figure 2 shows the front view of the Cisco HyperFlex HX220c M5 Node.

Figure 2 Chassis Front View

| Callout | Description |
|---|---|
| 1 | Drive Slots. Slot 01 (for HyperFlex System drive/Log drive): 1 x 2.5 inch SATA SSD. Slot 02 (for Cache drive): 1 x 2.5 inch SATA SSD or 1 x 2.5 inch SED SAS SSD. Slots 03 through 10 (for Capacity drives): up to 8 x 2.5 inch SAS HDD or up to 8 x 2.5 inch SED SAS HDD. |
| 2 | N/A |
| 3 | Power button/Power status LED |
| 4 | Unit identification button/LED |
| 5 | System status LED |
| 6 | Power supply status LED |
| 7 | Fan status LED |
| 8 | Network link activity LED |
| 9 | Temperature status LED |
| 10 | Pull-out asset tag |
| 11 | KVM connector (used with KVM cable that provides two USB 2.0, one VGA, and one serial connector) |

Chassis Rear View

Figure 3 shows the external features of the rear panel.

Figure 3 Chassis Rear View

| Callout | Description |
|---|---|
| 1 | Modular LAN-on-motherboard (mLOM) card bay (x16) |
| 2 | USB 3.0 ports (two) |
| 3 | Dual 1/10GE ports (LAN1 and LAN2). LAN1 is the left connector; LAN2 is the right connector. |
| 4 | VGA video port (DB-15) |
| 5 | 1GE dedicated management port |
| 6 | Serial port (RJ-45 connector) |
| 7 | Rear unit identification button/LED |
| 8 | Power supplies (two, redundant as 1+1) |
| 9 | PCIe riser 2 (slot 2) (half-height, x16) |
| 10 | PCIe riser 1 (slot 1) (full-height, x16) |
| 11 | Threaded holes for dual-hole grounding lug |

NOTE: Use of PCIe riser 2 requires a dual CPU configuration.

BASE NODE STANDARD CAPABILITIES and FEATURES

Table 1 lists the capabilities and features of the base server. Details about how to configure the server for a particular feature or capability (for example, number of processors, disk drives, or amount of memory) are provided in CONFIGURING the HyperFlex HX220c M5 Node.

Table 1 Capabilities and Features

- Chassis: One rack unit (1RU) chassis
- CPU: One or two 2nd Generation Intel Xeon scalable family CPUs
- Chipset: Intel C621 series chipset
- Memory: 24 slots for registered DIMMs (RDIMMs) or load-reduced DIMMs (LRDIMMs)
- Multi-bit Error Protection: This server supports multi-bit error protection.
- Video: The Cisco Integrated Management Controller (CIMC) provides video using the Matrox G200e video/graphics controller:
  - Integrated 2D graphics core with hardware acceleration
  - 512MB total DDR4 memory, with 16MB dedicated to Matrox video memory
  - Supports all display resolutions up to 1920 x 1200 x 32bpp at 60Hz
  - High-speed integrated 24-bit RAMDAC
  - Single lane PCI-Express host interface
  - eSPI processor to BMC support
- Power subsystem: One or two of the following hot-swappable power supplies:
  - 770 W (AC)
  - 1050 W (AC)
  - 1050 W (DC)
  - 1600 W (AC)
  - 1050 W (AC) ELV

  One power supply is mandatory; one more can be added for 1+1 redundancy.
- Front Panel: A front panel controller provides status indications and control buttons.
- ACPI: This server supports the advanced configuration and power interface (ACPI) 6.2 standard.
- Fans: Seven hot-swappable fans for front-to-rear cooling

Table 1 Capabilities and Features (continued)

- Expansion slots:
  - Riser 1 (controlled by CPU 1): One full-height profile, 3/4-length slot with x24 connector and x16 lane.
  - Riser 2 (controlled by CPU 2): One half-height profile, half-length slot with x24 connector and x16 lane. NOTE: Use of PCIe riser 2 requires a dual CPU configuration.
  - Dedicated SAS HBA slot (see Figure 8 on page 45): An internal slot is reserved for use by the Cisco 12G SAS HBA.
- Interfaces:
  - Rear panel:
    - One 1Gbase-T RJ-45 management port (Marvell 88E6176)
    - Two 1/10GBase-T LOM ports (Intel X550 controller embedded on the motherboard)
    - One RS-232 serial port (RJ45 connector)
    - One DB15 VGA connector
    - Two USB 3.0 port connectors
    - One flexible modular LAN on motherboard (mLOM) slot that can accommodate various interface cards
  - Front panel:
    - One KVM console connector (supplies two USB 2.0 connectors, one VGA DB15 video connector, and one serial port (RS232) RJ45 connector)
- Internal storage devices:
  - Up to 10 drives are installed into front-panel drive bays that provide hot-swappable access for SAS/SATA drives. The 10 drives are used as follows:
    - Six to eight SAS HDD or six to eight SED SAS HDD (for capacity)
    - One SATA/SAS SSD or one SED SATA/SAS SSD (for caching)
    - One SATA/SAS SSD (system drive for HyperFlex operations)
  - A mini-storage module connector on the motherboard for an M.2 module with one M.2 SATA SSD, used for ESXi boot and the HyperFlex storage controller VM
  - One slot for a micro-SD card on PCIe Riser 1 (Option 1 and 1B). The micro-SD card serves as a dedicated local resource for utilities such as the host upgrade utility (HUU). Images can be pulled from a file share (NFS/CIFS) and uploaded to the card for future use. Cisco Intersight leverages this card for advanced server management.
- Integrated management processor: Baseboard Management Controller (BMC) running Cisco Integrated Management Controller (CIMC) firmware. Depending on your CIMC settings, the CIMC can be accessed through the 1GE dedicated management port, the 1GE/10GE LOM ports, or a Cisco virtual interface card (VIC). CIMC manages certain components within the server, such as the Cisco 12G SAS HBA.

Table 1 Capabilities and Features (continued)

- Storage controller: Cisco 12G SAS HBA (JBOD/Pass-through Mode)
  - Supports up to 10 SAS/SATA internal drives
  - Plugs into the dedicated RAID controller slot
- mLOM Slot: The mLOM slot on the motherboard can flexibly accommodate the following cards:
  - Cisco VIC 1387 Dual Port 40Gb QSFP CNA MLOM
  - Cisco UCS VIC 1457 Quad Port 10/25G SFP28 CNA MLOM

  Note:
  - 1387 VIC natively supports 6300 series FIs.
  - To support 6200 series FIs with 1387, 10G QSAs compatible with 1387 are available for purchase.
  - Breakout cables are not supported with 1387.
  - Use of 10GbE is not allowed when used with 6300 series FI.
- PCIe options: PCIe slots on Riser 1 and 2 can flexibly accommodate the following cards:
  - Network Interface Cards (NICs):
    - Intel X550-T2 dual port 10Gbase-T
    - Intel XXV710-DA2 dual port 25GE NIC
    - Intel i350 quad port 1Gbase-T
    - Intel X710-DA2 dual port 10GE NIC
  - Virtual Interface Cards (VICs):
    - Cisco VIC 1385 Dual Port 40Gb QSFP CNA w/RDMA
    - Cisco UCS VIC 1455 Quad Port 10/25G SFP28 CNA PCIE
- UCSM: Unified Computing System Manager (UCSM) runs in the Fabric Interconnect and automatically discovers and provisions some of the server components.

CONFIGURING the HyperFlex HX220c M5 Node

For the most part, this system comes with a fixed configuration. Use these steps to see or change the configuration of the Cisco HX220c M5 Node:

- STEP 1 VERIFY SERVER SKU
- STEP 2 SELECT DEPLOYMENT MODE (OPTIONAL)
- STEP 3 SELECT CPU(s)
- STEP 4 SELECT MEMORY
- STEP 5 SELECT RAID CONTROLLER
- STEP 6 SELECT DRIVES
- STEP 7 SELECT PCIe OPTION CARD(s)
- STEP 8 ORDER GPU CARDS (OPTIONAL)
- STEP 9 ORDER POWER SUPPLY
- STEP 10 SELECT POWER CORD(s)
- STEP 11 SELECT ACCESSORIES
- STEP 12 ORDER SECURITY DEVICES (OPTIONAL)
- STEP 13 ORDER TOOL-LESS RAIL KIT AND OPTIONAL REVERSIBLE CABLE MANAGEMENT ARM
- STEP 14 SELECT HYPERVISOR / HOST OPERATING SYSTEM / INTERSIGHT WORKLOAD ENGINE (IWE)
- STEP 15 SELECT HX DATA PLATFORM SOFTWARE
- STEP 16 SELECT INSTALLATION SERVICE
- STEP 17 SELECT SERVICE and SUPPORT LEVEL

STEP 1 VERIFY SERVER SKU

Verify the product ID (PID) of the server as shown in Table 2.

Table 2 PID of the HX220c M5 Node

| Product ID (PID) | Description |
|---|---|
| HX-M5S-HXDP | This major line bundle (MLB) consists of the Server Nodes (HX220C-M5SX and HX240C-M5SX) with HXDP software spare PIDs. Use this PID for creating estimates and placing orders. NOTE: For the HyperFlex data center no fabric interconnect deployment mode, this PID must be used. |
| HX220C-M5SX (see note 1) | HX220c M5 Node, with one or two CPUs, memory, eight HDDs for data storage, one SSD (HyperFlex system drive), one SSD for caching, two power supplies, one M.2 SATA SSD, one micro-SD card, ESXi boot, one VIC 1387 mLOM card, no PCIe cards, and no rail kit. |
| HX2X0C-M5S | This major line bundle (MLB) consists of the Server Nodes (HX220C-M5SX and HX240C-M5SX), Fabric Interconnects (HX-FI-6248UP, HX-FI-6296UP, HX-FI-6332, HX-FI-6332-16UP) and HXDP software spare PIDs. |

Notes:
1. This product may not be purchased outside of the approved bundles (must be ordered under the MLB).

The HX220c M5 Node:

- Requires configuration of one or two power supplies, one or two CPUs, recommended memory sizes, 1 SSD for caching, 1 SSD for system logs, up to 8 data HDDs, 1 VIC mLOM card, 1 M.2 SATA SSD and 1 micro-SD card.
- Provides option to choose 10G QSAs to connect with HX-FI-6248UP and HX-FI-6296UP.
- Provides option to choose rail kits.

Intersight Workload Engine (IWE)

NOTE: All-NVMe and LFF nodes are not supported with IWE.

NOTE: Use the steps on the following pages to configure the server with the components that you want to include.

STEP 2 SELECT DEPLOYMENT MODE (OPTIONAL)

Starting with HyperFlex 4.5(2a), the following two deployment options are supported.

Select deployment mode

The available deployment modes are listed in Table 3.

Table 3 Deployment Modes

| Product ID (PID) | Description |
|---|---|
| HX-DC-FI | Deployment mode selection PID to use HyperFlex with FI |
| HX-DC-NO-FI | Deployment mode selection PID to use HyperFlex without FI |

- HX Data Center with Fabric Interconnect: This deployment option connects the server to Cisco Fabric Interconnect. The installation for this type of deployment can be done using the standalone installer or from Intersight. This deployment mode has been supported since the launch of HyperFlex.
- HX Data Center without Fabric Interconnect: This deployment option allows server nodes to be directly connected to existing switches. The installation for this type of deployment can be done from Intersight only. Note that the following apply:
  - No support for SED drives
  - No Hyper-V support
  - No support for PMem
  - No support for additional PCIe Cisco VIC
  - No support for stretch cluster
  - No support for Application acceleration engine
  - No support for IWE

  Selecting this option therefore greys out the unsupported options during ordering.

NOTE: If no selection is made, the deployment mode is assumed to be DC with FI.
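The restrictions for the deployment mode without Fabric Interconnect can be sketched as a small check. This is an illustration only: the feature labels and the function name `unsupported_without_fi` are hypothetical and are not identifiers from any Cisco ordering tool. Only the two PIDs, the default mode, and the list of restrictions come from this step. The sketch checks the no-FI restrictions and nothing else.

```python
# Features this step lists as unsupported with HX-DC-NO-FI.
# The string labels are hypothetical names chosen for this sketch.
NO_FI_UNSUPPORTED = frozenset({
    "sed_drives",
    "hyper_v",
    "pmem",
    "additional_pcie_cisco_vic",
    "stretch_cluster",
    "application_acceleration_engine",
    "iwe",
})

DEFAULT_MODE = "HX-DC-FI"  # assumed when no deployment mode is selected


def unsupported_without_fi(requested_features, mode=None):
    """Return the requested features that the no-FI restrictions rule out."""
    mode = mode or DEFAULT_MODE
    if mode == "HX-DC-FI":
        return set()
    if mode == "HX-DC-NO-FI":
        return set(requested_features) & NO_FI_UNSUPPORTED
    raise ValueError(f"unknown deployment mode PID: {mode}")
```

Calling `unsupported_without_fi({"sed_drives", "stretch_cluster"}, "HX-DC-NO-FI")` returns both labels, which mirrors how the ordering tool is described as greying out unsupported options.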

STEP 3 SELECT CPU(s)

The standard CPU features are:

- 2nd Generation Intel Xeon scalable family CPUs
- From 8 cores up to 28 cores per CPU
- Intel C621 series chipset
- Cache size of up to 38.5 MB

Select CPUs

The available CPUs are listed in Table 4.

Table 4 Available CPUs

| Product ID (PID) | Clock Freq (GHz) | Power (W) | Cache Size (MB) | Cores | UPI Links (GT/s) (note 1) | Highest DDR4 DIMM Clock Support (MHz) | Workload/Processor type (note 2) |
|---|---|---|---|---|---|---|---|
| Cisco Recommended CPUs (2nd Generation Intel Xeon Processors) | | | | | | | |
| HX-CPU-I8276 | 2.2 | 165 | 38.50 | 28 | 3 x 10.4 | 2933 | Oracle, SAP |
| HX-CPU-I8260 | 2.4 | 165 | 35.75 | 24 | 3 x 10.4 | 2933 | Microsoft Azure Stack |
| HX-CPU-I6262V | 1.9 | 135 | 33.00 | 24 | 3 x 10.4 | 2400 | Virtual Server infrastructure or VSI |
| HX-CPU-I6248R | 3.0 | 205 | 35.75 | 24 | 2 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6248 | 2.5 | 150 | 27.50 | 20 | 3 x 10.4 | 2933 | VDI, Oracle, SQL, Microsoft Azure Stack |
| HX-CPU-I6238R | 2.2 | 165 | 38.50 | 28 | 2 x 10.4 | 2933 | Oracle, SAP (2-Socket TDI only), Microsoft Azure Stack |
| HX-CPU-I6238 | 2.1 | 140 | 30.25 | 22 | 3 x 10.4 | 2933 | SAP |
| HX-CPU-I6230R | 2.1 | 150 | 35.75 | 26 | 2 x 10.4 | 2933 | Virtual Server Infrastructure, Data Protection, Big Data, Splunk, Microsoft Azure Stack |
| HX-CPU-I6230 | 2.1 | 125 | 27.50 | 20 | 3 x 10.4 | 2933 | Big Data, Virtualization |
| HX-CPU-I5220R | 2.2 | 125 | 35.75 | 24 | 2 x 10.4 | 2666 | Virtual Server Infrastructure, Splunk, Microsoft Azure Stack |
| HX-CPU-I5220 | 2.2 | 125 | 24.75 | 18 | 2 x 10.4 | 2666 | HCI |
| HX-CPU-I5218R | 2.1 | 125 | 27.50 | 20 | 2 x 10.4 | 2666 | Virtual Server Infrastructure, Data Protection, Big Data, Splunk, Scale-out Object Storage, Microsoft Azure Stack |
| HX-CPU-I5218 | 2.3 | 125 | 22.00 | 16 | 2 x 10.4 | 2666 | Virtualization, Microsoft Azure Stack, Splunk, Data Protection |
| HX-CPU-I4216 | 2.1 | 100 | 22.00 | 16 | 2 x 9.6 | 2400 | Data Protection, Scale Out Storage |
| HX-CPU-I4214R | 2.4 | 100 | 16.50 | 12 | 2 x 9.6 | 2400 | Data Protection, Splunk, Scale-out Object Storage, Microsoft Azure Stack |

Table 4 Available CPUs (continued)

| Product ID (PID) | Clock Freq (GHz) | Power (W) | Cache Size (MB) | Cores | UPI Links (GT/s) (note 1) | Highest DDR4 DIMM Clock Support (MHz) | Workload/Processor type (note 2) |
|---|---|---|---|---|---|---|---|
| HX-CPU-I4214 | 2.2 | 85 | 16.50 | 12 | 2 x 9.6 | 2400 | Data Protection, Scale Out Storage |
| HX-CPU-I4210R | 2.4 | 100 | 13.75 | 10 | 2 x 9.6 | 2400 | Virtual Server Infrastructure, Data Protection, Big Data, Splunk |
| HX-CPU-I4210 | 2.2 | 85 | 13.75 | 10 | 2 x 9.6 | 2400 | Virtualization, Big Data, Splunk |
| 8000 Series Processor | | | | | | | |
| HX-CPU-I8280L | 2.7 | 205 | 38.50 | 28 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I8280 | 2.7 | 205 | 38.50 | 28 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I8276L | 2.2 | 165 | 38.50 | 28 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I8276 | 2.2 | 165 | 38.50 | 28 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I8270 | 2.7 | 205 | 35.75 | 26 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I8268 | 2.9 | 205 | 35.75 | 24 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I8260Y | 2.4 | 165 | 35.75 | 24/20/16 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I8260L | 2.3 | 165 | 35.75 | 24 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I8260 | 2.4 | 165 | 35.75 | 24 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| 6000 Series Processor | | | | | | | |
| HX-CPU-I6262V | 1.9 | 135 | 33.00 | 24 | 3 x 10.4 | 2400 | 2nd Gen Intel Xeon |
| HX-CPU-I6258R | 2.7 | 205 | 35.75 | 28 | 2 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6254 | 3.1 | 200 | 24.75 | 18 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6252N | 2.3 | 150 | 35.75 | 24 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6252 | 2.1 | 150 | 35.75 | 24 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6248R | 3.0 | 205 | 35.75 | 24 | 2 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6248 | 2.5 | 150 | 27.50 | 20 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6246R | 3.4 | 205 | 35.75 | 16 | 2 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6246 | 3.3 | 165 | 24.75 | 12 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6244 | 3.6 | 150 | 24.75 | 8 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6242R | 3.1 | 205 | 35.75 | 20 | 2 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6242 | 2.8 | 150 | 22.00 | 16 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |

Table 4 Available CPUs (continued)

| Product ID (PID) | Clock Freq (GHz) | Power (W) | Cache Size (MB) | Cores | UPI Links (GT/s) (note 1) | Highest DDR4 DIMM Clock Support (MHz) | Workload/Processor type (note 2) |
|---|---|---|---|---|---|---|---|
| HX-CPU-I6240R | 2.4 | 165 | 35.75 | 24 | 2 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6240Y | 2.6 | 150 | 24.75 | 18/14/8 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6240L | 2.6 | 150 | 24.75 | 18 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6240 | 2.6 | 150 | 24.75 | 18 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6238R | 2.2 | 165 | 38.50 | 28 | 2 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6238L | 2.1 | 140 | 30.25 | 22 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6238 | 2.1 | 140 | 30.25 | 22 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6234 | 3.3 | 130 | 24.75 | 8 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6230R | 2.1 | 150 | 35.75 | 26 | 2 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6230N | 2.3 | 125 | 27.50 | 20 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6230 | 2.1 | 125 | 27.50 | 20 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6226R | 2.9 | 150 | 22.00 | 16 | 2 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6226 | 2.7 | 125 | 19.25 | 12 | 3 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I6222V | 1.8 | 115 | 27.50 | 20 | 3 x 10.4 | 2400 | 2nd Gen Intel Xeon |
| 5000 Series Processor | | | | | | | |
| HX-CPU-I5220S | 2.6 | 125 | 19.25 | 18 | 2 x 10.4 | 2666 | 2nd Gen Intel Xeon |
| HX-CPU-I5220R | 2.2 | 150 | 35.75 | 24 | 2 x 10.4 | 2666 | 2nd Gen Intel Xeon |
| HX-CPU-I5220 | 2.2 | 125 | 24.75 | 18 | 2 x 10.4 | 2666 | 2nd Gen Intel Xeon |
| HX-CPU-I5218R | 2.1 | 125 | 27.50 | 20 | 2 x 10.4 | 2666 | 2nd Gen Intel Xeon |
| HX-CPU-I5218B | 2.3 | 125 | 22.00 | 16 | 2 x 10.4 | 2933 | 2nd Gen Intel Xeon |
| HX-CPU-I5218N | 2.3 | 105 | 22.00 | 16 | 2 x 10.4 | 2666 | 2nd Gen Intel Xeon |
| HX-CPU-I5218 | 2.3 | 125 | 22.00 | 16 | 2 x 10.4 | 2666 | 2nd Gen Intel Xeon |
| HX-CPU-I5217 | 3.0 | 115 | 11.00 | 8 | 2 x 10.4 | 2666 | 2nd Gen Intel Xeon |
| HX-CPU-I5215L | 2.5 | 85 | 13.75 | 10 | 2 x 10.4 | 2666 | 2nd Gen Intel Xeon |
| HX-CPU-I5215 | 2.5 | 85 | 13.75 | 10 | 2 x 10.4 | 2666 | 2nd Gen Intel Xeon |
| 4000 Series Processor | | | | | | | |
| HX-CPU-I4216 | 2.1 | 100 | 22.00 | 16 | 2 x 9.6 | 2400 | 2nd Gen Intel Xeon |
| HX-CPU-I4215R | 3.2 | 130 | 11.00 | 8 | 2 x 9.6 | 2400 | 2nd Gen Intel Xeon |
| HX-CPU-I4215 | 2.5 | 85 | 11.00 | 8 | 2 x 9.6 | 2400 | 2nd Gen Intel Xeon |
| HX-CPU-I4214R | 2.4 | 100 | 16.50 | 12 | 2 x 9.6 | 2400 | 2nd Gen Intel Xeon |
| HX-CPU-I4214Y | 2.2 | 85 | 16.50 | 12/10/8 | 2 x 9.6 | 2400 | 2nd Gen Intel Xeon |
| HX-CPU-I4214 | 2.2 | 85 | 16.50 | 12 | 2 x 9.6 | 2400 | 2nd Gen Intel Xeon |
| HX-CPU-I4210R | 2.4 | 100 | 13.75 | 10 | 2 x 9.6 | 2400 | 2nd Gen Intel Xeon |

Table 4 Available CPUs (continued)

| Product ID (PID) | Clock Freq (GHz) | Power (W) | Cache Size (MB) | Cores | UPI Links (GT/s) (note 1) | Highest DDR4 DIMM Clock Support (MHz) | Workload/Processor type (note 2) |
|---|---|---|---|---|---|---|---|
| HX-CPU-I4210 | 2.2 | 85 | 13.75 | 10 | 2 x 9.6 | 2400 | 2nd Gen Intel Xeon |
| HX-CPU-I4208 | 2.1 | 85 | 11.00 | 8 | 2 x 9.6 | 2400 | 2nd Gen Intel Xeon |
| 3000 Series Processor | | | | | | | |
| HX-CPU-I3206R | 1.9 | 85 | 11.00 | 8 | 2 x 9.6 | 2133 | 2nd Gen Intel Xeon |

Notes:
1. UPI is the Ultra Path Interconnect. 2-socket servers support only 2 UPI performance, even if the CPU supports 3 UPI.
2. HyperFlex Data Platform reserves CPU cycles for each controller VM. Refer to the Install Guide for reservation details.

CAUTION: For systems configured with 2nd Gen Intel Xeon 205W R-series processors, operating above 30°C [86°F], a fan fault, or executing workloads with extensive use of heavy instruction sets like Intel Advanced Vector Extensions 512 (Intel AVX-512) may assert thermal and/or performance faults with an associated event recorded in the System Event Log (SEL). The affected processors are:

- HX-CPU-I6258R (28C/35.75MB, DDR4 2933MHz)
- HX-CPU-I6248R (24C/35.75MB, DDR4 2933MHz)
- HX-CPU-I6246R (16C/35.75MB, DDR4 2933MHz)
- HX-CPU-I6242R (20C/35.75MB, DDR4 2933MHz)

Approved Configurations

(1) 1-CPU Configuration: Select any one CPU listed in Table 4. Requires a CPU with 12 or more cores.

(2) 2-CPU Configuration: Select two identical CPUs from any one of the rows of Table 4.
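The two approved CPU configurations can be expressed as a short validation routine. The sketch below is an illustration under stated assumptions: the function name `validate_cpu_selection` and the `(pid, cores)` tuple shape are hypothetical, and it assumes the 12-core minimum applies to the 1-CPU configuration, as the placement of that sentence suggests. Core counts are taken from Table 4.

```python
def validate_cpu_selection(cpus):
    """Check a CPU selection against the approved configurations.

    cpus: list of (pid, cores) tuples, one entry per populated socket.
    Returns (ok, message).
    """
    if len(cpus) == 1:
        pid, cores = cpus[0]
        if cores < 12:
            return False, f"{pid}: a 1-CPU configuration requires 12 or more cores"
        return True, "ok"
    if len(cpus) == 2:
        if cpus[0] != cpus[1]:
            return False, "a 2-CPU configuration requires two identical CPUs"
        return True, "ok"
    return False, "the node takes one or two CPUs"
```

For example, a single HX-CPU-I6248 (20 cores) passes, a single HX-CPU-I4210 (10 cores) is rejected, and pairing an HX-CPU-I6248 with an HX-CPU-I6238 is rejected because the two CPUs differ.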

STEP 4 SELECT MEMORY

The standard memory features are:

- Clock speed: Up to 2933 MHz; see available CPUs and their associated DDR4 DIMM maximum clock support in Table 4.
- Rank per DIMM: 1, 2, 4, or 8
- Operational voltage: 1.2 V
- Registered ECC DDR4 DIMMs (RDIMMs), Load-reduced DIMMs (LRDIMMs)

Memory is organized with six memory channels per CPU, with up to two DIMMs per channel, as shown in Figure 4.

Figure 4 HX220c M5 Node Memory Organization

[Figure: CPU 1 serves channels A through F (slots A1/A2 through F1/F2); CPU 2 serves channels G, H, J, K, L, and M (slots G1/G2 through M1/M2). 24 DIMMs; 3072 GB maximum memory (with 128 GB DIMMs); 6 memory channels per CPU, up to 2 DIMMs per channel.]
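The slot count and maximum capacity quoted for Figure 4 follow directly from the channel layout. The short sketch below reproduces that arithmetic; the function names are hypothetical and exist only for this illustration.

```python
CHANNELS_PER_CPU = 6   # six memory channels per CPU
DIMMS_PER_CHANNEL = 2  # up to two DIMMs per channel


def dimm_slots_total(cpus):
    """Number of DIMM slots available with the given number of CPUs."""
    return cpus * CHANNELS_PER_CPU * DIMMS_PER_CHANNEL


def max_memory_gb(cpus, dimm_gb):
    """Capacity with every slot filled with DIMMs of the given size."""
    return dimm_slots_total(cpus) * dimm_gb
```

With two CPUs this gives 24 slots, and filling them with 128 GB DIMMs gives 24 x 128 = 3072 GB, matching the figure.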

Select DIMMs

Select the memory configuration. The available memory DIMMs are listed in Table 5.

NOTE: The memory mirroring feature is not supported with HyperFlex nodes.

Table 5 Available DDR4 DIMMs

| Product ID (PID) | PID Description | Voltage | Ranks/DIMM |
|---|---|---|---|
| HX-ML-128G4RT-H (see note 1) | 128 GB DDR4-2933MHz LRDIMM/4Rx4 (16Gb) | 1.2 V | 4 |
| HX-ML-X64G4RT-H (see note 1) | 64 GB DDR4-2933MHz LRDIMM/4Rx4 (8Gb) | 1.2 V | 4 |
| HX-MR-X64G2RT-H (see note 1) | 64 GB DDR4-2933MHz RDIMM/2Rx4 (16Gb) | 1.2 V | 2 |
| HX-MR-X32G2RT-H (see note 1) | 32GB DDR4-2933MHz RDIMM/2Rx4 (8Gb) | 1.2 V | 2 |
| HX-MR-X16G1RT-H (see note 1) | 16 GB DDR4-2933MHz RDIMM/1Rx4 (8Gb) | 1.2 V | 1 |
| HX-ML-128G4RW (see note 2) | 128GB DDR4-3200MHz LRDIMM 4Rx4 (16Gb) | 1.2 V | 1 |
| HX-MR-X64G2RW (see note 2) | 64GB DDR4-3200MHz RDIMM 2Rx4 (16Gb) | 1.2 V | 1 |
| HX-MR-X32G2RW (see note 2) | 32GB DDR4-3200MHz RDIMM 2Rx4 (8Gb) | 1.2 V | 1 |
| HX-MR-X16G1RW (see note 2) | 16GB DDR4-3200MHz RDIMM 1Rx4 (8Gb) | 1.2 V | 1 |

Notes:
1. Cisco announced the End-of-sale of the DDR4-2933MHz Memory DIMM products: EOL14611 lists the product part numbers affected by this announcement. Table 6 describes the replacement Memory DIMM product Part Numbers.
2. DDR4-3200MHz replacement part numbers will operate at the maximum speed of the Intel 2nd generation Xeon Scalable processor memory interface, ranging from 2133 MHz to 2933 MHz.

Intersight Workload Engine (IWE)

NOTE: Even though 128GB of DRAM is supported with IWE, it is recommended to have a minimum of 192GB of DRAM configured for maximum performance.
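Note 2 can be read as a minimum rule: a DDR4-3200 DIMM runs no faster than the memory interface of the CPU it is paired with. The sketch below shows that reading. The function name is hypothetical, and it deliberately ignores other factors that can lower memory speed (such as DIMM mixing), which this section does not describe.

```python
def effective_memory_speed_mhz(dimm_rated_mhz, cpu_max_mhz):
    """Return the slower of the DIMM rating and the CPU memory-interface limit.

    cpu_max_mhz is the "Highest DDR4 DIMM Clock Support" value from Table 4.
    """
    return min(dimm_rated_mhz, cpu_max_mhz)
```

Under this reading, a DDR4-3200 replacement DIMM paired with an HX-CPU-I6248 (2933 MHz in Table 4) runs at 2933 MHz, and paired with an HX-CPU-I4214 (2400 MHz) it runs at 2400 MHz.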

Table 6 lists the EOL Memory DIMM product part numbers and their replacement PIDs.

Table 6 EOL14611 Memory DIMM Product Part Numbers and their replacement PIDs

| EOS Product Part Number (PID) | PID Description | Replacement Product PID | Replacement Product Description |
|---|---|---|---|
| HX-MR-X16G1RT-H | 16GB DDR4-2933MHz RDIMM 1Rx4 (8Gb)/1.2v | HX-MR-X16G1RW | 16GB DDR4-3200MHz RDIMM 1Rx4 (8Gb)/1.2v |
| HX-MR-X32G2RT-H | 32GB DDR4-2933MHz RDIMM 2Rx4 (8Gb)/1.2v | HX-MR-X32G2RW | 32GB DDR4-3200MHz RDIMM 2Rx4 (8Gb)/1.2v |
| HX-MR-X64G2RT-H | 64GB DDR4-2933MHz RDIMM 2Rx4 (16Gb)/1.2v | HX-MR-X64G2RW | 64GB DDR4-3200MHz RDIMM 2Rx4 (16Gb)/1.2v |
| HX-ML-X64G4RT-H | 64GB DDR4-2933MHz LRDIMM 4Rx4 (8Gb)/1.2v | HX-MR-X64G2RW (see note 1) | 64GB DDR4-3200MHz RDIMM 2Rx4 (16Gb)/1.2v |
| HX-ML-128G4RT-H | 128GB DDR4-2933MHz LRDIMM 4Rx4 (16Gb)/1.2v | HX-ML-128G4RW | 128GB DDR4-3200MHz LRDIMM 4Rx4 (16Gb)/1.2v |

Notes:
1. Cisco doesn't support a Load Reduce DIMM (LRDIMM) 64GB Memory PID as a replacement PID of existing UCS-ML-x64G4RT-H and recommends migrating to the Registered DIMM (RDIMM) instead, delivering the best balance in performance and price.

CPU DIMM Configuration Table

Approved Configurations

(1) 1-CPU configuration

Select from 1 to 12 DIMMs.

| Number of DIMMs | CPU 1 DIMM Placement in Channels (for identically ranked DIMMs) |
|---|---|
| 1 | (A1) |
| 2 | (A1, B1) |
| 3 | (A1, B1, C1) |
| 4 | (A1, B1); (D1, E1) |
| 6 | (A1, B1); (C1, D1); (E1, F1) |
| 8 | (A1, B1); (D1, E1); (A2, B2); (D2, E2) |
| 12 | (A1, B1); (C1, D1); (E1, F1); (A2, B2); (C2, D2); (E2, F2) |

(2) 2-CPU configuration

Select from 1 to 12 DIMMs per CPU.

| DIMMs per CPU | CPU 1 DIMM Placement in Channels (for identically ranked DIMMs) | CPU 2 DIMM Placement in Channels (for identically ranked DIMMs) |
|---|---|---|
| 1 | (A1) | (G1) |
| 2 | (A1, B1) | (G1, H1) |
| 3 | (A1, B1, C1) | (G1, H1, J1) |
| 4 | (A1, B1); (D1, E1) | (G1, H1); (K1, L1) |
| 6 | (A1, B1); (C1, D1); (E1, F1) | (G1, H1); (J1, K1); (L1, M1) |
| 8 | (A1, B1); (D1, E1); (A2, B2); (D2, E2) | (G1, H1); (K1, L1); (G2, H2); (K2, L2) |
| 12 | (A1, B1); (C1, D1); (E1, F1); (A2, B2); (C2, D2); (E2, F2) | (G1, H1); (J1, K1); (L1, M1); (G2, H2); (J2, K2); (L2, M2) |
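The placement table above lends itself to a lookup. The following sketch encodes the CPU 1 column and derives the CPU 2 column by renaming channels A through F to G, H, J, K, L, and M, which is the pattern the table shows. The names `CPU1_PLACEMENT` and `dimm_slots` are hypothetical and chosen for this illustration.

```python
# CPU 1 slot order per approved DIMM count, copied from the table above.
CPU1_PLACEMENT = {
    1: ["A1"],
    2: ["A1", "B1"],
    3: ["A1", "B1", "C1"],
    4: ["A1", "B1", "D1", "E1"],
    6: ["A1", "B1", "C1", "D1", "E1", "F1"],
    8: ["A1", "B1", "D1", "E1", "A2", "B2", "D2", "E2"],
    12: ["A1", "B1", "C1", "D1", "E1", "F1",
         "A2", "B2", "C2", "D2", "E2", "F2"],
}

# Channel letters of CPU 1 mapped to the matching channels of CPU 2.
CPU1_TO_CPU2 = str.maketrans("ABCDEF", "GHJKLM")


def dimm_slots(dimms_per_cpu, cpu=1):
    """Return the slots to populate on one CPU for an approved DIMM count."""
    if dimms_per_cpu not in CPU1_PLACEMENT:
        raise ValueError(f"{dimms_per_cpu} DIMMs per CPU is not an approved count")
    slots = CPU1_PLACEMENT[dimms_per_cpu]
    if cpu == 1:
        return list(slots)
    if cpu == 2:
        return [slot.translate(CPU1_TO_CPU2) for slot in slots]
    raise ValueError("cpu must be 1 or 2")
```

For instance, `dimm_slots(4, cpu=2)` yields G1, H1, K1, and L1, the same slots the table lists for four DIMMs on CPU 2.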

NOTE:

- The selected DIMMs must all be of the same type, and the number of DIMMs must be equal for both CPUs.
- Even though 128GB of DRAM is supported, it is recommended to have a minimum of 192GB of DRAM configured for maximum performance.
- HyperFlex Data Platform reserves memory for each controller VM. Refer to the Install Guide for reservation details.
- Recommended 6 or 12 DIMMs per CPU.
- Refer to the “CPU DIMM Configuration Table” for the configuration details.

System Speed

Memory will operate at the maximum speed of the Intel Xeon Scalable processor memory controller, ranging from 2133 MHz to 2933 MHz for M5 servers. Check CPU specifications for supported speeds.

NOTE: Detailed mixing DIMM configurations are described in the Cisco UCS M5 Memory Guide.

STEP 5 SELECT RAID CONTROLLER

SAS HBA (internal HDD/SSD/JBOD support)

Choose the following SAS HBA for internal drive connectivity (non-RAID):

- The Cisco 12G SAS HBA, which plugs into a dedicated RAID controller slot.

Select Controller Options

Select the following:

- Cisco 12 Gbps Modular SAS HBA (see Table 7)

Table 7 Hardware Controller Options

Controllers for Internal Drives. Note that the following Cisco 12G SAS HBA controller is factory-installed in the dedicated internal slot.

| Product ID (PID) | PID Description |
|---|---|
| HX-SAS-M5 | Cisco 12G Modular SAS HBA (max 16 drives) |

Approved Configurations

The Cisco 12 Gbps Modular SAS HBA supports up to 10 internal drives.
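The 10-drive limit lines up with the front-bay layout described earlier in this spec sheet: slot 01 for the system SSD, slot 02 for the cache SSD, and slots 03 through 10 for six to eight capacity HDDs. The sketch below illustrates that accounting. The function name is hypothetical, and filling capacity slots contiguously from slot 03 is an assumption made for the example, not a stated population rule.

```python
MAX_INTERNAL_DRIVES = 10  # limit stated for the SAS HBA in this node
MIN_CAPACITY_DRIVES = 6   # "six to eight" capacity HDDs per Table 1


def front_bay_layout(capacity_drives):
    """Map front slot numbers to drive roles for a hybrid node."""
    # Two slots are always taken by the system and cache SSDs.
    max_capacity = MAX_INTERNAL_DRIVES - 2
    if not MIN_CAPACITY_DRIVES <= capacity_drives <= max_capacity:
        raise ValueError("capacity drive count must be between 6 and 8")
    layout = {1: "system SSD", 2: "cache SSD"}
    # Assumption: capacity drives fill slots in order starting at slot 03.
    for slot in range(3, 3 + capacity_drives):
        layout[slot] = "capacity HDD"
    return layout
```

With eight capacity drives the layout uses all 10 slots, which is exactly the number of internal drives the HBA supports in this node.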

STEP 6 SELECT DRIVES

The stand
