
Wilhelm, A., Takhteyev, Y., Sarvas, R., Van House, N., and Davis, M. 2004. Photo annotation on a camera phone. In Extended Abstracts of the 2004 Conference on Human Factors in Computing Systems (CHI 2004). Vienna, Austria, April 24-29, 2004. ACM Press, New York, NY, 1403-1406. 2004 by authors

CHI 2004 | Late Breaking Results Paper | 24-29 April | Vienna, Austria

Photo Annotation on a Camera Phone

Anita Wilhelm¹, Yuri Takhteyev¹, Risto Sarvas², Nancy Van House¹, Marc Davis¹

¹ School of Information Management and Systems, University of California Berkeley, 102 South Hall, Berkeley, CA 94720-4600, USA
{awilhelm, yuri, vanhouse, marc}@sims.berkeley.edu

² Helsinki Institute for Information Technology (HIIT), PO Box 9800, 02015 HUT, Finland
risto.sarvas@hiit.fi

Abstract

In this paper we describe a system that allows users to annotate digital photos at the time of capture. The system uses camera phones with a lightweight client application and a server to store the images and metadata, and it assists the user in annotation on the camera phone by providing guesses about the content of the photos. By conducting user interface testing, surveys, and focus groups we were able to evaluate the usability of this system and the user motivations that will inform our development of future mobile media annotation applications. We present usability issues encountered in using a camera phone as an image annotation device immediately after image capture, and users’ responses to use of such a system.

Categories & Subject Descriptors: H.5.1 [Information interfaces and presentation (e.g., HCI)]: Multimedia; H.4.3 [Information systems applications]: Communications Applications; H.3.m [Information storage and retrieval]: Information Search and Retrieval

General Terms: Design, Human Factors

Keywords: Mobile Camera Phones, Automated Content Metadata, User Experience, User Motivation, Digital Image Management, Wireless Multimedia Applications

Copyright is held by the author/owner(s). CHI 2004, April 24–29, 2004, Vienna, Austria. ACM 1-58113-703-6/04/0004.

INTRODUCTION

With the number and adoption of consumer digital media capture devices increasing, more personal digital media is being produced, especially digital photos. As consumers produce more and more digital images, finding a specific image becomes more difficult. Often, images are effectively lost within thousands that are only demarcated by sequential file names. One solution to this image management problem is to enable users to create annotations of image content (i.e., “metadata” about media), thereby allowing consumers to find their photos by searching on information, instead of simply filenames.

Previous research in personal image management (surveyed in [5]) has facilitated annotation by using free-text, hierarchical and faceted metadata structures both textual [7] and iconic [1], drop down menus, drag and drop interfaces, and audio annotation with automated text transcription. Researchers have also sought to leverage the underlying temporal structure of photographed events to support browsing and retrieval. Consumer products are now beginning to appear which utilize metadata for image management, such as Adobe Photoshop Album 2.0, ACDSee, Apple iPhoto, and Adobe Photoshop CS.

However, the vast majority of prior work on personal image management has assumed that image annotation occurs well after image capture in a desktop context. Time lag and context change then reduce the likelihood that users will perform the task, as well as their accurate recall of the content to be assigned to the photograph.

Mobile devices, however, are designed to take into account the users’ physical environment and usage situations and can ultimately enable us to infer image content from mobile use context. Furthermore, by utilizing networked devices, collaborative, co-operative applications are possible. If we can take advantage of the affordances of mobile imaging, we can overcome the loss of metadata in current digital photography due to time lapse and context change between image capture and image annotation, as well as use mobile contextual information to help to automate the image annotation process.

Networked mobile camera phones offer a good platform to apply these principles by providing us with a networked image capture device. While others have written about their effect on the content of photos (e.g., [3, 4]), we were interested in how they might be used to facilitate the annotation process. The purpose of our project was to create an infrastructure for networked cameras to allow users to assign metadata at the point of capture and to utilize a collaborative network, along with automatically captured environmental cues, to aid in automating the annotation process, thus reducing the effort required of the user.

METHODOLOGY

We built a framework (“MMM” for “Mobile Media Metadata”) that enables image annotation at the point of capture using Nokia 3650 camera phones over the AT&T Wireless GSM/GPRS service [6]. We then gave 40 first year graduate students and 15 researchers camera phones to test our system for four months. We asked the students to brainstorm applications to build on top of this framework.

Our evaluation consisted of three investigations. First, we performed user interface testing with five participants, giving them three scenarios each for phone use, videotaped their actions, and interviewed them afterwards about the use

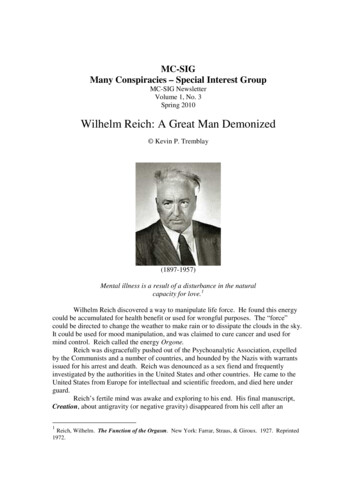

scenarios and their current habits of image capture, storage, sharing, and retrieval. Second, all 55 participants were administered a weekly survey for seven weeks, inquiring about their use of the phones and the implemented image annotation system. Third, two focus groups discussed their image capture, storage, sharing, and retrieval habits. One group (eight subjects) consisted of users of this system and the other (seven subjects) was a general group of students. The former group additionally discussed their use of image annotation systems and this one in particular.

SYSTEM OVERVIEW

Utilizing the camera phone’s hardware, network access, and software programmability, we built a client-server architecture. The client side software consisted of two components. The first component implemented the picture taking functionality and automatically gathered available contextual metadata before users uploaded the captured images to a remote server over the GPRS connection. The second component was the phone’s built-in XHTML browser. It was used for all subsequent user interaction between the client and the remote server, which communicated with a collaborative repository of annotated images in order to help automate and facilitate the annotation process.

The first component, named Image-Gallery, was developed in co-operation with Futurice¹ for the Symbian 6.1 operating system on the phone. Image-Gallery automatically captured location metadata by storing the GSM network cell ID. Then, utilizing the username associated with each phone, it automatically captured the user’s identification, as well as time and date at the moment of capture. This information was sent with the photograph, via the GSM/GPRS network, to our server, where it was matched against a repository of annotated images. After Image-Gallery launched the phone’s web browser, annotation “guesses” generated by a server side program were returned to the user through the XHTML browser on the phone to await confirmation or correction.

¹ http://www.futurice.fi

Keeping the human-in-the-loop, the XHTML browser presented the user with a series of screens suggesting metadata about the photograph. For each screen the server side program would try to “guess” each answer by matching any previously submitted information against the collaborative repository of annotated images. The “guesses” were presented as drop down lists of prepopulated answers. The choice at the top of each list was deemed the most probable based on server-side matching algorithms. The user could then confirm the suggested annotation with one simple click, or correct the annotation by selecting a different option, including inputting new annotation text altogether (see Figure 1).

Figure 1. Mobile Media Metadata (MMM) User Interaction

The remote repository of metadata possessed a faceted hierarchical structure [1, 7]. By utilizing orthogonal hierarchies of descriptors that can be combined to make more complete descriptions, faceted classification structures enable rich description in ways that overcome the limitations of strictly hierarchical metadata structures and keyword based approaches used in most prior image annotation systems (e.g. FotoFile). One problem we faced, as we will discuss later, was the display and navigation of the faceted metadata structure on the limited screen size of the mobile phone.

USER INTERACTION CHALLENGES

By formally testing our infrastructure and by ongoing interaction with the 40 student subjects and 15 researchers we identified a set of challenges.

Network Unpredictability

The primary user difficulty was the unpredictability and limited availability of the GSM/GPRS network. The network often failed to transmit the image and/or metadata (both automated and user-assisted) to the server and was often very slow. Interactions that we had predicted would take 30 to 45 seconds per session often took 3 to 5 minutes. Users became very frustrated as the delay distracted their attention from their ongoing tasks and provided little feedback in the process. Users commented during testing:

“I have to keep staring at the screen to check for change even though I would rather pay attention to other things around me.”

XHTML Browser Interaction

In an effort to keep our prototype thin and simple [2], considering the large learning curve of the Symbian OS, we utilized the camera phone’s XHTML browser as our principal user interface. The XHTML browser interaction, however, presented the users with interesting usability problems. Once the browser is launched, the form buttons

contained within the XHTML page do not correlate with the hardware buttons (called “softkeys”) located on the phone client. Instead, these two softkeys, located just below the screen, contain hard-coded browser functions. To customize these softkeys, client-side programming is necessary. To avoid excessive client-side programming, all of our navigational options were contained within a form, inside the XHTML pages. Therefore, unlike full-client programs such as Futurice’s Image-Gallery, which interact with the user through both softkeys and a central scroll key, all interaction within the browser necessary for our application (including all navigational and annotation options) was navigated by one central scroll key. Consequently, the interaction more closely resembled that of a desktop web application, with the center key substituted for the mouse. This presented problems for our users:

“It was confusing to alternate from using the two big buttons under the screen (options) [i.e., softkeys] because once you are in MMM you should never use them or else you’ll get kicked off site.”

Metadata Hierarchy Display

Finally, the small screen of the mobile phone presented challenges for traversing a large faceted hierarchical metadata display. Neither the breadth nor depth of the classification structure could be displayed easily, as the former encountered screen real-estate limitations and the latter sequential page load latencies. We therefore decided to carefully select key nodes of the hierarchy and present the user with a limited user-focused hierarchy. This hierarchy contained approximately three depth levels across four facets (Person, Location, Object, and Activity). Each descriptor exposed in the interface was carefully selected to correlate with its node in the larger backend hierarchy. Therefore users could select one salient descriptor on the interface, but actually annotate many more. For example, the user could specify a location as “South Hall”, but the image would be annotated as: US > California > Alameda County > Berkeley > UC Berkeley > South Hall. Any implied metadata stored in the backend hierarchy would automatically be added to the user’s annotation.

This user-focused hierarchy was traversed as a series of screens containing drop-down lists. The users reported that they understood the interaction; however, because of the limited choices they were often unsure where to categorize their photograph.

Furthermore, because we allowed users to add new items to the hierarchy, the drop-down selections became very long. Users seemed to tolerate scrolling through 12-15 items, but once the list exceeded that length they complained that no “jumping” or “short-cut” mechanisms were available to help quickly traverse the long list. One user requested:

“I would like menus that wrapped or some ability to jump down on a menu (alphabetically?)”

Lessons Learned

Though we did in many ways reduce the cost of annotation to users by allowing them to remain in their environment while annotating, as well as reducing keystroke entry by allowing drop-down selection from a repository of inferred metadata, the network and user interface issues we encountered severely limited usability. Most participants only used the annotation system to complete required classroom assignments and even then, only annotated one to two facets per photo.

However, we feel that we did make some progress in reducing annotation cost, as users ultimately noted:

“For the most part, it’s fairly easy to select items and click ‘Next.’ The annotation process isn’t that hard.”

We feel that by taking into account these lessons learned, future versions will only improve:

- Design for network unpredictability and errors. One possible solution is to limit continual network interaction by creating a full-client application. (The network should also improve over time.)
- Web applications run through the XHTML browser on mobile phones do not simulate full-client application navigation well. Use a prototyping methodology that simulates the specific mobile interaction better to test user experience.
- Presentation of a limited version of the faceted hierarchical structure must not be so limited as to overly constrain or confuse the user. Use of a different display metaphor may be a better approach, and lists of 12-15 items should not be exceeded.

USE PATTERNS AND MOTIVATIONS

To design systems to support image capture and re-use in general, to improve this infrastructure, and to create useful applications using it, we need to understand how camera phones affect users’ photographic habits, as well as their motivations for annotation. We addressed these questions in our focus groups, interviews, and surveys.

Digital Images: The Funnel Effect

When we asked users about digital images in general, participants in the focus groups described a funnel effect in digital picture taking, sharing, and printing. They took many pictures, kept some of them, shared a selected group of those, and printed an even smaller subset. Digital imaging was for many a key element in the large volume of "throwaway" pictures, since, unlike "regular" photos, the marginal cost of each photo was zero. They reported that they would like to annotate only a subset of those taken, mostly only those good enough to share.

Additionally, student subjects were generally unconcerned about metadata for future use, for example for identifying people in photos: they said that they already knew these people. We suspect that this short-term perspective may be

due in part to the relative youth and childless status of most of our subjects. They did not seem concerned, for example, with sharing images with future generations.

Camera Phone Photos: The Power of Now

Because users were able to carry their camera phones much of the time, they reported taking more humorous or ad-hoc images than they would with their “normal” cameras, which they often only carried to specific events or for specific purposes. One user reported taking an image of a rather sad (droopy) palm tree outside of the school building because he wanted to capture its melancholy that day; another took pictures of students filling a water fountain with bubble bath. In such cases, the “power of now” was apparent. They were able to capture unique or funny moments in their daily lives and communicate them to others via images. This is consistent with other researchers’ findings that people take different kinds of photos with camera phones [3, 4].

Like Ito and Okabe's [3] users, our users reported a short-term orientation toward the photos taken with the camera phones, with more interest in sharing than in searching or retrieving their photos. One group wrote a script that would automatically publish selected photos to a personal web page, with an attached caption (moblogging). Other users shared their photos by using the imaging device itself: showing people images on the camera phone. Still others used email, Bluetooth, and infrared capabilities to share images with others. Near the end of the semester, we supplied the students with a web-based browsing tool to view images and their annotations via the desktop. Users reported that this made sharing easier and added great value to the application. Furthermore, users preferred searching and browsing based on input metadata via the desktop rather than the phones and suggested further desktop-based annotation capabilities.

Selective Metadata Annotation

To our surprise, our subjects were generally not interested in fully annotating photos by keywords. They simply wanted to attach one or two salient identifiers. Annotations often took the form of captions rather than standard metadata: the reason why they took the picture, a witty remark, or something personal shared with the observer. This was true of photos taken with the camera phones and other cameras, but the immediacy of camera phone photos seemed particularly well suited to this kind of annotation.

Lessons Learned

From the above, we conclude:

- Mobile camera phone use highlights the “power of now” in always being available for ad-hoc picture taking.
- For our camera phone users, sharing and browsing are more important than searching or retrieval.
- A desktop component adds great value to the mobile application by easing search, sharing, and quick browsing.
- User preferences for annotation are generally limited to a few favored images, and some key information for each photograph.

CONCLUSIONS

From this group of users, we conclude that mobile camera phones enable a new approach to annotating media that can reduce user effort by (1) facilitating metadata capture at the time of image capture, (2) adding some metadata automatically, and (3) leveraging networked collaborative metadata resources. As networks improve, our problems with network latency and unreliability will be reduced. However, user interface and system designs for mobile image annotation need to overcome the challenges of text entry and hierarchical display and navigation on mobile devices. We also need to develop hybrid solutions that integrate desktop and mobile application components into more complete and appropriate solutions than either can offer alone.

More generally, we need to understand and design for the emergent behavior resulting from changes in technology. Digital imaging in general, and camera phones in particular, make new kinds of imaging behavior possible. The ready availability (and current low image quality) of camera phones encourages the capture of images for short-term uses, affecting the kind of annotation currently desired. As image quality improves, we expect that users will add to these ad hoc uses more traditional (long-term) imaging behavior with more need for metadata.

REFERENCES

1. Davis, M. Media Streams: An Iconic Visual Language for Video Representation. In Baecker, R.M., Grudin, J., Buxton, W.A.S. and Greenberg, S. eds. Readings in Human-Computer Interaction: Toward the Year 2000, Morgan Kaufmann, San Francisco (1995), 854-866.
2. Edwards, W.K., Bellotti, V., Dey, A.K. and Newman, M.W., Stuck in the Middle: The Challenges of User-Centered Design and Evaluation for Infrastructure. Proc. CHI2003, ACM Press (2003), 297-304.
3. Ito, M. and Okabe, D., "Camera phones changing the definition of picture-worthy," Japan Media Review 4.php
4. Koskinen, I., Kurvinen, E. and Lehtonen, T.-K. Professional Mobile Image. IT Press, Helsinki (2002).
5. Rodden, K. and Wood, K.R., How Do People Manage Their Digital Photographs? Proc. CHI2003, ACM Press (2003), 409-416.
6. Sarvas, R., Herrarte, E., Wilhelm, A., and Davis, M., Metadata Creation System for Mobile Images. Proc. MobiSys2004, ACM Press (Forthcoming, 2004).
7. Yee, P., Swearingen, K., Li, K. and Hearst, M., Faceted Metadata for Image Search and Browsing. Proc. CHI2003, ACM Press (2003), 401-408.
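
As an illustration of two server-side behaviors described in the paper (expanding a selected descriptor such as “South Hall” into its full path in the backend hierarchy, and ordering annotation “guesses” so that the most probable one is at the top of the drop-down list), the following Python sketch may help. It is not the MMM implementation: the paper does not specify its matching algorithms, so the frequency-count heuristic, the data layout, and every name here (`LOCATION_PARENT`, `expand_descriptor`, `AnnotatedPhoto`, `rank_location_guesses`) are assumptions made for this example.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical fragment of the backend Location facet, stored as
# child -> parent. The node names follow the paper's "South Hall"
# example; the dictionary layout is an assumption for illustration.
LOCATION_PARENT = {
    "South Hall": "UC Berkeley",
    "UC Berkeley": "Berkeley",
    "Berkeley": "Alameda County",
    "Alameda County": "California",
    "California": "US",
    "US": None,
}


def expand_descriptor(descriptor, parent_of):
    """Return the path from the facet root down to `descriptor`."""
    path = []
    node = descriptor
    while node is not None:
        path.append(node)
        node = parent_of[node]
    path.reverse()
    return path


@dataclass(frozen=True)
class AnnotatedPhoto:
    """One previously annotated photo in the shared repository."""
    cell_id: str    # GSM cell ID captured by the client
    username: str   # user associated with the phone
    location: str   # Location descriptor the user confirmed


def rank_location_guesses(cell_id, username, repository):
    """Order candidate locations for a new photo, most probable first.

    Assumed heuristic (not taken from the paper): count earlier photos
    taken in the same GSM cell, weighting the same user's photos double.
    """
    votes = Counter()
    for photo in repository:
        if photo.cell_id == cell_id:
            votes[photo.location] += 2 if photo.username == username else 1
    return [location for location, _ in votes.most_common()]
```

Under these assumptions, `expand_descriptor("South Hall", LOCATION_PARENT)` yields the full US-to-South-Hall path that would be attached to the image, and the first element returned by `rank_location_guesses` would be the preselected entry of the drop-down list, with the remaining elements offered as corrections.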
