
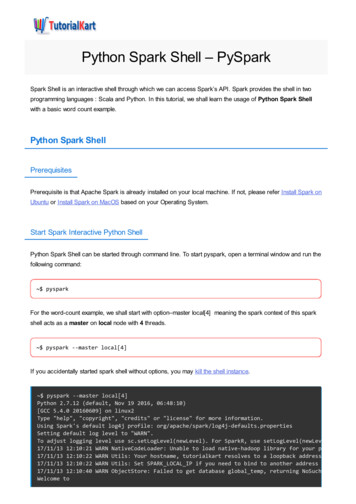

Python Spark Shell – PySpark

Spark Shell is an interactive shell through which we can access Spark's API. Spark provides the shell in two programming languages: Scala and Python. In this tutorial, we shall learn the usage of Python Spark Shell with a basic word count example.

Python Spark Shell

Prerequisites

Apache Spark must already be installed on your local machine. If it is not, please refer to Install Spark on Ubuntu or Install Spark on MacOS, based on your operating system.

Start Spark Interactive Python Shell

Python Spark Shell can be started from the command line. To start pyspark, open a terminal window and run the following command:

    pyspark

For the word-count example, we shall start with the option --master local[4]. This means the Spark context of this Spark shell acts as a master on the local node with 4 threads.

    pyspark --master local[4]

If you accidentally started the Spark shell without options, you may kill the shell instance and start it again with the option above. The shell prints the following on startup:

    pyspark --master local[4]
    Python 2.7.12 (default, Nov 19 2016, 06:48:10)
    [GCC 5.4.0 20160609] on linux2
    Type "help", "copyright", "credits" or "license" for more information.
    Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
    Setting default log level to "WARN".
    To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
    17/11/13 12:10:21 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform.
    17/11/13 12:10:22 WARN Utils: Your hostname, tutorialkart resolves to a loopback address: 127.0.0.1
    17/11/13 12:10:22 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address
    17/11/13 12:10:40 WARN ObjectStore: Failed to get database global_temp, returning NoSuchObjectException
    Welcome to
    [Spark ASCII-art logo] version 2.2.0

    Using Python version 2.7.12 (default, Nov 19 2016 06:48:10)
    SparkSession available as 'spark'.

The Spark context Web UI would be available at http://192.168.0.104:4040 (the default port is 4040). Open a browser and go to http://192.168.0.104:4040.

Spark context: You can access the Spark context in the shell as the variable named sc.

Spark session: You can access the Spark session in the shell as the variable named spark.

Word-Count Example with PySpark

We shall use the following Python statements in PySpark Shell, in the order shown.

    input_file = sc.textFile("/path/to/text/file")
    map = input_file.flatMap(lambda line: line.split(" ")).map(lambda word: (word, 1))
    counts = map.reduceByKey(lambda a, b: a + b)
    counts.saveAsTextFile("/path/to/output/")

Input

In this step, we read a text file using the Spark context variable sc.

    input_file = sc.textFile("/path/to/text/file")

Map

We split each line of input using a space " " as the separator:

    flatMap(lambda line: line.split(" "))

Then we map each word to a tuple (word, 1), where 1 is the number of occurrences of the word:

    map(lambda word: (word, 1))

We use the tuple (word, 1) as a (key, value) pair in the reduce stage.

Reduce

Reduce all the words based on the key. Here a and b are values. For the same key, the values are reduced to a + b.

    counts = map.reduceByKey(lambda a, b: a + b)

Save counts to local file

At the end, counts could be saved to a local file.

    counts.saveAsTextFile("/path/to/output/")

When all the commands are run in the terminal, the session looks as follows:

    input_file = sc.textFile("/home/arjun/data.txt")
    map = input_file.flatMap(lambda line: line.split(" ")).map(lambda word: (word, 1))
    counts = map.reduceByKey(lambda a, b: a + b)
    counts.saveAsTextFile("/home/arjun/output/")

The output can be verified by checking the save location. Spark writes one part-NNNNN file per partition of counts. It also writes an empty _SUCCESS marker file when the job completes.

    /home/arjun/output$ ls
    part-00000  part-00001  _SUCCESS
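You can check the Input, Map and Reduce steps above without a Spark installation. The following is a minimal plain-Python sketch, not PySpark. The hypothetical helpers flat_map and reduce_by_key imitate what RDD.flatMap and RDD.reduceByKey compute, but sequentially and in memory on one machine. Unlike RDD.reduceByKey, the sketch returns a dict rather than an RDD. The list lines stands in for the file read by sc.textFile. The sketch shows the data at each stage; it does not show how Spark distributes the work.

```python
from collections import defaultdict
from functools import reduce


def flat_map(func, items):
    """Apply func to every item and flatten the results, like RDD.flatMap."""
    return [out for item in items for out in func(item)]


def reduce_by_key(func, pairs):
    """Group (key, value) pairs by key and fold each group's values with func.
    Similar to RDD.reduceByKey, but sequential, in memory, and returns a dict."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return {key: reduce(func, values) for key, values in groups.items()}


# Hypothetical stand-in for sc.textFile(...): one string per line of the input file.
lines = ["hello spark", "hello python spark"]

# Map: split lines into words, then pair each word with a count of 1.
words = flat_map(lambda line: line.split(" "), lines)
pairs = [(word, 1) for word in words]

# Reduce: add up the 1s for each word.
counts = reduce_by_key(lambda a, b: a + b, pairs)

print(sorted(counts.items()))  # [('hello', 2), ('python', 1), ('spark', 2)]
```

line.split(" ") splits on every single space, so two consecutive spaces produce an empty string that gets counted as a word. The PySpark statements above behave the same way. Calling line.split() with no argument splits on runs of whitespace and avoids this.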

A sample of the contents of the output file part-00000 is shown below:

    /home/arjun/output$ cat part-00000
    ...

We have successfully counted the unique words in a file with the help of Python Spark Shell – PySpark.

You can use the Spark context Web UI to check the details of the job (Word Count) we have just run. Navigate through the other tabs to get an idea of the Spark Web UI and the details of the Word Count job.

Conclusion

In this Apache Spark tutorial, we have learnt the usage of Spark Shell with the Python programming language, with the help of a word count example.
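To inspect the saved counts outside the shell, you can read the part files back with plain Python. The sketch below assumes each line of a part-* file is the string form of a (word, count) tuple. This would look like ('spark', 2), or (u'spark', 2) when the shell runs Python 2 as in the startup output above. load_counts and parse_counts are hypothetical helper names. Only files matching part-* are read, so the _SUCCESS marker is skipped.

```python
import ast
import glob
import os


def parse_counts(lines):
    """Turn lines like "('spark', 2)" into a {word: count} dict."""
    counts = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        # literal_eval safely parses the tuple literal (it also accepts u'...' strings)
        word, count = ast.literal_eval(line)
        counts[word] = count
    return counts


def load_counts(output_dir):
    """Read every part-* file in output_dir; other files such as _SUCCESS are skipped."""
    lines = []
    for path in sorted(glob.glob(os.path.join(output_dir, "part-*"))):
        with open(path) as f:
            lines.extend(f)
    return parse_counts(lines)


# Example, assuming the output directory from the session above:
# print(load_counts("/home/arjun/output"))
```

reduceByKey leaves exactly one (word, count) pair per word, so each word appears in only one part file. Merging all the files into a single dict therefore loses nothing.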

Title: Python Spark Shell - PySpark - Word Count Example. Created Date: 5/24/2021 2:31:23 PM.