Transcription

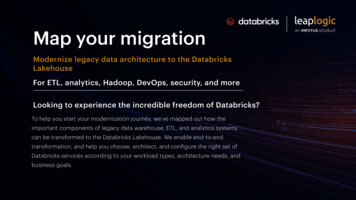

Map your migration

Modernize legacy data architecture to the Databricks Lakehouse

For ETL, analytics, Hadoop, DevOps, security, and more

Looking to experience the incredible freedom of Databricks?

To help you start your modernization journey, we've mapped out how the important components of legacy data warehouse, ETL, and analytics systems can be transformed to the Databricks Lakehouse. We enable end-to-end transformation, and help you choose, architect, and configure the right set of Databricks services according to your workload types, architecture needs, and business goals.

#1 Workloads

| Legacy component/feature | Databricks equivalent |
| --- | --- |
| DML scripts | Databricks SQL, Spark SQL |
| DDL scripts | Delta Tables |
| Procedural code | Databricks Lakehouse, PySpark |
| Data store (proprietary formats) | Databricks Lakehouse, PySpark |
| User-defined functions | Lakehouse-native/custom functions, Spark functions on Databricks |
| Orchestration and scheduler scripts | Databricks jobs, Airflow, Databricks Runtime |
| ETL (Informatica/DataStage/Ab Initio, etc.) | Databricks Lakehouse-native, PySpark, Spark Scala |
| Schema conversion | Delta Tables |
| Data migration | Spark JDBC |
| Reporting (repointing) | BI Reporting on Delta Lake |
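As an illustration of the "Data migration: Spark JDBC" mapping above, the following is a minimal sketch of how the options for a Spark JDBC read of one legacy table might be assembled. The helper name `jdbc_read_options`, the host, and the table names are hypothetical and do not come from LeapLogic or Databricks; only the option keys (`url`, `dbtable`, `user`, `password`, `partitionColumn`, `lowerBound`, `upperBound`, `numPartitions`) are standard Spark JDBC data source options.

```python
from typing import Dict, Optional


def jdbc_read_options(
    url: str,
    table: str,
    user: str,
    password: str,
    partition_column: Optional[str] = None,
    lower_bound: Optional[int] = None,
    upper_bound: Optional[int] = None,
    num_partitions: Optional[int] = None,
) -> Dict[str, str]:
    """Build the option map for a Spark JDBC read of one legacy table.

    Hypothetical helper for illustration; not part of any product API.
    """
    options = {"url": url, "dbtable": table, "user": user, "password": password}
    partitioning = (partition_column, lower_bound, upper_bound, num_partitions)
    if any(value is not None for value in partitioning):
        # Spark expects the partitioning options to be supplied together.
        if any(value is None for value in partitioning):
            raise ValueError(
                "partition_column, lower_bound, upper_bound and "
                "num_partitions must be set together"
            )
        options.update(
            {
                "partitionColumn": str(partition_column),
                "lowerBound": str(lower_bound),
                "upperBound": str(upper_bound),
                "numPartitions": str(num_partitions),
            }
        )
    return options


# Hypothetical source system and table, for illustration only.
opts = jdbc_read_options(
    url="jdbc:postgresql://legacy-host:5432/edw",
    table="sales.orders",
    user="migration_user",
    password="change-me",
    partition_column="order_id",
    lower_bound=1,
    upper_bound=1_000_000,
    num_partitions=8,
)

# On a cluster with a SparkSession named `spark` and the source's JDBC
# driver installed, the options could then be used like this:
#   df = spark.read.format("jdbc").options(**opts).load()
#   df.write.format("delta").mode("overwrite").saveAsTable("sales.orders")
```

Splitting the read on a numeric column lets Spark pull the table in parallel; in practice the credentials would come from a secret store rather than literals.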

#2 Data warehousing

| Legacy component/feature | Databricks equivalent |
| --- | --- |
| Data warehouse | Databricks Lakehouse |
| Event-driven compute | Databricks Unit (DBU) |
| Data storage | Delta Tables |
| Metadata management | Delta Lake |
| Lineage | Databricks Lakehouse (Spark-based solution) |
| JDBC client | Databricks SQL |

#3 DevOps, security, and governance

| Legacy component/feature | Databricks equivalent |
| --- | --- |
| Security | SSO, Token Management API, and third-party integrations |
| CI/CD (DevOps) | Databricks Repos, Jenkins, CloudBees (third-party) |
| Governance | Databricks SCIM, Dataguise/Zaloni (third-party) |
| Infrastructure as code | Terraform (third-party) |
| Defect management system | Jira (third-party) |
| Row-level security | Ranger (third-party) |
| Universal artifact management | JFrog (third-party) |

Accelerate migration to Databricks with LeapLogic

Convert or rearchitect legacy code, workflows, and analytics

Migrating legacy data workloads to Databricks can be complex and risky. LeapLogic automates the end-to-end modernization journey with lower cost and risk.

[Figure: Auto-transform any legacy source to Databricks Lakehouse. Diagram labels: Any Legacy Source; EDW/Hadoop/Databases; ETL and Orchestration; Assessment; Transformation; Operationalization; notebooks; workflows; live tables*; Cloud-native workflows; Cloud Data Warehouse/Data Lake; Delta Lake; Consumption/Reporting/Analytics; Photon; SQL; BI engines integration.]

\* Under development, working with the Databricks product team

Watch LeapLogic in action!

- Teradata to Databricks
- Informatica to Databricks
- SAS to Databricks

To start your end-to-end modernization journey, write to us at info@leaplogic.io today.

LeapLogic automates the transformation of legacy data warehouse, ETL, analytics, and Hadoop to native cloud platforms. Owned by Impetus Technologies Inc., LeapLogic partners with AWS, Azure, Databricks, GCP, and Snowflake to de-risk migrations. For over a decade, Impetus Technologies has been the 'Partner of Choice' for several Fortune 500 enterprises in transforming their data and analytics lifecycle, including modernization to the cloud, data lake creation, advanced analytics, and BI consumption. The company brings together a unique mix of engineering services, technology expertise, and software products.

To learn more, visit www.leaplogic.io or write to info@leaplogic.io

© 2022 Impetus Technologies, Inc. All rights reserved. Product and company names mentioned herein may be trademarks of their respective companies. May 2022
