Transcription

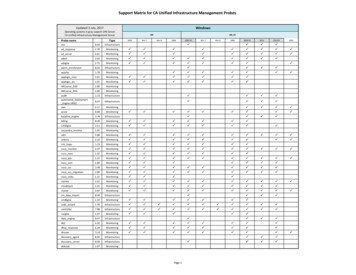

A Working Theory-of-Monitoring
LISA 2013
Caskey L. Dickson (caskey@google.com)
Site Reliability Engineer, Google, Inc.

Metrics
"the assignment of numerals to things so as to represent facts and conventions about them"
– S. S. Stevens, 1946

Why a “theory”
Monitoring seems easy. It’s not. Why?
If successful, we should be able to sensibly map many monitoring methods/modes into a good model with fidelity.

What do we monitor? (What’s a metric?)
Named value at some time.
Metric identity/name: k-tuple within an identity space attached to each value
  hostname, process, vhost, name
  www-1.na-east.example.com, httpd(3321), foo.example.com, 200-ok-count
Metric values (overlapping)
  Counters, Gauges, Percentiles
  Nominal, Ordinal, Interval, Ratio
  Derived
count@20131005T142155.867Z 8505936
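A minimal sketch of the "named value at some time" idea, assuming the four-field identity shown on the slide. The class names `MetricId` and `Sample` are hypothetical, and pairing the `count@...` value with the `200-ok-count` identity is an assumption made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class MetricId:
    """Hypothetical k-tuple identity: (hostname, process, vhost, name)."""
    hostname: str
    process: str
    vhost: str
    name: str


@dataclass(frozen=True)
class Sample:
    """One named value at some time."""
    metric: MetricId
    timestamp: datetime
    value: int


def parse_timestamp(text: str) -> datetime:
    """Parse the compact UTC form used on the slide, e.g. 20131005T142155.867Z."""
    parsed = datetime.strptime(text, "%Y%m%dT%H%M%S.%fZ")
    return parsed.replace(tzinfo=timezone.utc)


sample = Sample(
    metric=MetricId(
        "www-1.na-east.example.com", "httpd(3321)", "foo.example.com", "200-ok-count"
    ),
    timestamp=parse_timestamp("20131005T142155.867Z"),
    value=8505936,
)
```

Freezing the identity makes it hashable, so it can key a dictionary of time series without losing any of its fields.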

How can we monitor? (What’s a metric?)
Resolution: How frequently are you reading a metric? Every 6 seconds? 6 minutes?
Latency: After reading, how long before we act on them? Seconds, minutes, hours?
Diversity: Are you collecting many different metrics? 10, 25, 50, 100, 10K, 10M?

Why do we monitor?
Operational Health/Response (R+,L+,D+): High Resolution, Low Latency, High Diversity
Quality Assurance/SLA (R+,L-,D+): High Resolution, High Latency, High Diversity
Capacity Planning (R-,L-,D+): Low Resolution, High Latency, High Diversity
Product Management (R-,L-,D-): Low Resolution, High Latency, Low Diversity
What about these?
(R-,L+,D-) LB
(R-,L+,D+)
(R+,L+,D-)
(R+,L-,D-)
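The four use cases occupy four of the eight possible resolution/latency/diversity combinations. The sketch below enumerates the rest, assuming "+" stands for high resolution, low latency and high diversity, and "-" for the opposite; the names `USE_CASES`, `unassigned_profiles` and `label` are illustrative only.

```python
from itertools import product

# (resolution, latency, diversity) -> use case named on the slide.
USE_CASES = {
    ("+", "+", "+"): "Operational Health/Response",
    ("+", "-", "+"): "Quality Assurance/SLA",
    ("-", "-", "+"): "Capacity Planning",
    ("-", "-", "-"): "Product Management",
}


def unassigned_profiles():
    """Return the (R, L, D) combinations no listed use case occupies."""
    return [p for p in product("+-", repeat=3) if p not in USE_CASES]


def label(profile):
    """Format a profile the way the slide writes it, e.g. (R+,L-,D+)."""
    r, l, d = profile
    return f"(R{r},L{l},D{d})"


for profile in unassigned_profiles():
    print(label(profile))
```

The four profiles it prints are the same ones the slide asks about under "What about these?".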

Monitoring at scale
Diagram: web server, database, monitoring
25 metrics/server
50 metrics
0.16 metrics/second

Monitoring at scale
Diagram: web server, database, monitoring
25 metrics/server
50 metrics
100M active daily users
200K peak QPS
0.16 metrics/second

Monitoring at scale
Diagram: web servers, databases, monitoring
25 metrics/server
50 metrics
100M active daily users
200K peak QPS
@ 20QPS/server
10,000 servers
25,000 metrics
166 metrics/second

Monitoring at scale
Diagram: DNS server, load balancer, monitoring
25 metrics/server
50 metrics
100M active daily users
200K peak QPS
@ 20QPS/server
10,000 servers
25,000 metrics
166 metrics/second

Monitoring at scale
Diagram: DNS server, load balancer, monitoring
25 metrics/server
50 metrics
100M active daily users
200K peak QPS
@ 20QPS/server
10,000 servers
25,000 metrics
X 12 ‘types’ of servers
3,000,000 metrics
10,000 metrics/second

Monitoring at scale
25 metrics/server
50 metrics
100M active daily users
200K peak QPS
@ 20QPS/server
10,000 servers
25,000 metrics
X 12 ‘types’ of servers
3,000,000 metrics
X 8/6 sites (N+2)
4,000,000 metrics
13,333 metrics/second

Monitoring at scale
25 metrics/server
50 metrics
100M active daily users
200K peak QPS
@ 20QPS/server
10,000 servers
25,000 metrics
X 12 ‘types’ of servers
3,000,000 metrics
X 8/6 sites (N+2)
4,000,000 metrics
13,333 metrics/second
O(10K) metrics/second
O(32MB) data / sweep

Monitoring at scale
25 metrics/server
50 metrics
100M active daily users
200K peak QPS
@ 20QPS/server
10,000 servers
25,000 metrics
X 12 ‘types’ of servers
3,000,000 metrics
X 8/6 sites (N+2)
4,000,000 metrics
13,333 metrics/second
O(10K) metrics/second
O(32MB)/sweep
Ops @ 1 minute
O(50K) metrics/second
O(320MB)/sweep
O(460GB)/24 hours
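The chain of estimates can be replayed as arithmetic. This is a sketch under two assumptions the slides do not state: a 5-minute (300 s) sweep, which is what makes 3,000,000 metrics come out at 10,000 metrics/second, and 8 bytes per stored value, which is what makes 4,000,000 metrics come out at 32MB. Note that 10,000 servers at 25 metrics each is 250,000 metrics per server type, the figure the 3,000,000 total implies.

```python
PEAK_QPS = 200_000
QPS_PER_SERVER = 20
METRICS_PER_SERVER = 25
SERVER_TYPES = 12
SITES_BUILT, SITES_NEEDED = 8, 6   # N+2 redundancy
SWEEP_SECONDS = 300                # assumption: one sweep every 5 minutes
BYTES_PER_VALUE = 8                # assumption: one 64-bit value per metric

servers = PEAK_QPS // QPS_PER_SERVER                              # 10,000
metrics_per_type = servers * METRICS_PER_SERVER                   # 250,000
metrics_all_types = metrics_per_type * SERVER_TYPES               # 3,000,000
metrics_total = metrics_all_types * SITES_BUILT // SITES_NEEDED   # 4,000,000

rate_5min = metrics_total / SWEEP_SECONDS          # about 13,333 metrics/second
bytes_per_sweep = metrics_total * BYTES_PER_VALUE  # 32,000,000 bytes
rate_1min = metrics_total / 60                     # about 66,667 metrics/second

# The slide's O(320MB)/sweep figure, taken as given, over 1,440 one-minute sweeps.
bytes_per_day = 320_000_000 * 24 * 60              # 460,800,000,000 bytes

print(servers, metrics_total, round(rate_5min), bytes_per_sweep, bytes_per_day)
```

The one-minute rate lands in the same order of magnitude as the slide's O(50K) metrics/second.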

What do we monitor? (recap)
Named, timestamped values of differing types
Gathered at high resolution
Large quantities
Many different consumers (downsampling, filtering, aggregation)
Reliably
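As one illustration of a consumer that wants downsampled data, the sketch below averages high-resolution gauge samples into fixed-width buckets. The function name `downsample` and the sample values are made up, and averaging is only one possible policy (a counter would want last-value or rate instead).

```python
from collections import defaultdict
from statistics import mean


def downsample(samples, bucket_seconds):
    """Average (timestamp, value) gauge samples into fixed-width time buckets."""
    buckets = defaultdict(list)
    for ts, value in samples:
        # Key each sample by the start of the bucket it falls into.
        buckets[ts - ts % bucket_seconds].append(value)
    return [(start, mean(values)) for start, values in sorted(buckets.items())]


# Hypothetical 6-second readings reduced to one point per minute.
raw = [(0, 10), (6, 14), (12, 12), (60, 20), (66, 30)]
per_minute = downsample(raw, 60)
print(per_minute)
```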

Diagram of the monitoring layers: Sensing / Measurement, Collection, Analysis / Computation, Alerting / Escalation, Visualization, Storage, Configuration

Sensing / Measurement
The creation of metrics at some minimum level of abstraction. Generally raw counters plus some attributes.
Different systems gather data at different speeds. top/ps/netstat are very immediate, sar somewhat less so, nagios much less so.
Different systems have different concepts of an individual unit for metric identity.
No consistent interface.

Storage
Placing of time series in a (readily?) accessible format
Raw, aggregated and post-computation metrics
Occurs in different formats at different stages
/var/log/syslog, /var/log/apache/access log, /var/www/mrtg/*, /var/lib/rrdb/*.rrd, mysql/postgresql
I/O throughput
Structure limits analysis/visualization options

Collection
Bringing together many individual metrics in one place to support analysis.
Metric identity needs to remain meaningful after aggregation.
Key for scalability
Many transports, smart and dumb: multicast, TCP, rrdcached, SFTP, rsync

Analysis
Extraction of meaning from the raw data.
Often focused upon finding and detecting features or anomalies.
Some anomalies are important, others are merely interesting.
CPU constrained for throughput/depth
RAM constrained for metric volume
Lots of interesting research in autocorrelation

Alerting & Escalation
When anomalies are detected, something has to deal with promulgation of those conditions to interested parties.
Some anomalies are urgent (short-term SLO critical), others are merely important.
“Urgent” anomalies reflect conditions that without immediate operator intervention will lead to an outage or SLO excursion. Something is responsible for being noisy until someone comes to help.
Ideally this happens as infrequently as possible.

Visualization
Meaningful visualization of the raw data can be the difference between staying within or exceeding your SLO.
Viewing more than 3 dimensions can be problematic for those of us who are still human.
Goal-oriented
Read and apply your Tufte/Few

Visualization and Actionability
Some visualizations are less than useful.
Disk space is a commonly graphed metric which is un-actionable without derivatives.
Not all views have the same taxonomy.
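To make the point about derivatives concrete: a disk at 81% full says little on its own, but the rate of change turns it into a time-to-full estimate. A minimal sketch, assuming linear growth between two samples; the function name and the sample figures are hypothetical.

```python
def seconds_until_full(t0, used0, t1, used1, capacity):
    """Extrapolate linearly from two (time, used) samples.

    Returns None when usage is flat or shrinking, since there is
    nothing to act on in that case.
    """
    rate = (used1 - used0) / (t1 - t0)   # first derivative, units per second
    if rate <= 0:
        return None
    return (capacity - used1) / rate


# Hypothetical 100 GB disk that grew from 80 GB to 81 GB in one hour.
eta = seconds_until_full(0, 80, 3600, 81, 100)
print(f"about {eta / 3600:.0f} hours until full")
```

A real system would fit over more than two points, but even this crude slope is something an operator can act on.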

Configuration
Affects every layer
Needs configuration management
Complicates distributed systems
Limits change velocity

Why do we monitor? (repeat)
Operational Health/Response (R+,L+,D+): High Resolution, Low Latency, High Diversity
Quality Assurance/SLA (R+,L-,D+): High Resolution, High Latency, High Diversity
Capacity Planning (R-,L-,D+): Low Resolution, High Latency, High Diversity
Product Management (R-,L-,D-): Low Resolution, High Latency, Low Diversity

Product Management (R-,L-,D-)
Mostly synthesized/reprocessed metrics (KPIs vs. SLIs)
Lots of historic data in storage for long-term views
Analysis of synthesized metrics from concrete metrics
  7-day actives
  Conversion rates
Easy to understand visualizations of resulting metrics

Capacity Planning (R-,L-,D+)
Evaluation of current serving capacity
Calculation of proxy metrics
Impact of changes to serving capacity
Cost per user
Efficiency
Alerting when capacity limits approaching

Quality Assurance/SLA (R+,L-,D+)
Includes developer support
Collect data from both narrow and wide views (Sensing high resolution process behavior and system-metrics)
Offline and real-time performance analysis, tracing (Collection and storage of data from diverse runs)
Not necessarily real-time
Useful visualizations to aid understanding

Operational Health/Response (R+,L+,D+)
The hardest use case
Immediate, up to date metrics (low latency collection)
Encompassing the entire fleet (broad collection coverage, many sensors incorporated)
Real-time computation of thresholds and alerts (high speed analysis)
Reliable and flexible alerting
Storage of enough timeseries at high enough resolution for comparison (XXXGB/day * 730 days)
Simple configuration of global monitoring perspective

A moment please.
All the systems to be discussed have inherent, undeniable value. I have personally used and benefited from them and mean no disrespect to their implementers and maintainers.
I use these systems myself, and in the past I have relied upon them for production services I was responsible for.
This is NOT a criticism of those products, rather an indication of where they stop short of one particular hypothetical ideal.

/bin/top (host process health)
Sensing: /proc, /sys, syscalls(1)
Collection: while(true);
Analysis: Summing and sorting
Alerting: Sort to top
Visualization: ordered lists, dynamic sorting
Storage: none
Configuration: runtime shortcuts

    top - 18:54:30 up 67 days, 3:05, 2 users, load average: 1.60, 1.03, 0.48
    Tasks: 113 total, 1 running, 112 sleeping, 0 stopped, 0 zombie
    Cpu0 : 0.0%us, 0.7%sy, 0.0%ni, 99.3%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st
    Cpu1 : 0.7%us, 1.3%sy, 0.0%ni, 98.0%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st
    Cpu2 : 2.7%us, 7.7%sy, 0.0%ni, 5.0%id, 84.6%wa, 0.0%hi, 0.0%si, 0.0%st
    Cpu3 : 0.0%us, 1.3%sy, 0.0%ni, 97.3%id, 1.3%wa, 0.0%hi, 0.0%si, 0.0%st
    Mem: 503132k total, 496152k used, 6980k free, 41340k buffers
    Swap: 0k total, 0k used, 0k free, 195772k cached

      PID USER      PR  NI  VIRT   RES   SHR S %CPU %MEM     TIME COMMAND
    15070 httpd     20   0  105m   87m  1144 D    8 17.8  0:06.18 httpd
     1032 mediatom  20   0 1160m   47m   816 S    0  9.6 38:51.31 mediatomb
     6521 root      20   0 83476   46m   36m S    0  9.5  0:04.65 apt-get
     6643 caskey    20   0 26840  8000  1616 S    0  1.6  0:00.54 bash
     6236 root      20   0  107m  4236  3156 S    0  0.8  0:00.06 sshd
      456 syslog    20   0  244m  3280   460 S    0  0.7  4:46.73 rsyslogd
     1303 root      20   0  743m  3080   284 S    0  0.6 14:36.75 ushare
    31304 root      20   0 2042m  2584  1492 S    0  0.5  0:00.14 console-kit-dae
        1 root      20   0 24432  1768   696 S    0  0.4  0:22.14 init

/bin/sar (host health)
Basically some of top + timeseries
Sample sar output (Linux 2.6.18-194.el5PAE, host dev-db, 03/26/2011): per-second CPU %user and %system readings from 07:28:06 to 07:28:09 with an Average row, plus memory kbmemfree and %memused columns.

*trace (process behavior)
Sensing: dtrace/strace/ltrace process wrapper
Collection: single instance
Analysis: None
Alerting: N/A
Visualization: None
Storage: None
Configuration: command

Sample ltrace output ([pid 11783] prefixes omitted):

    __libc_start_main(0x407420, 1, 0x7fff75b6aad8, 0x443cc0, 0x443d50 <unfinished ...>
    geteuid() = 1000
    getegid() = 1000
    getuid() = 1000
    getgid() = 1000
    setuid(1000) = 0
    malloc(91) = 0x00cf8010
    XtSetLanguageProc(0, 0, 0, 0x7f968c9a3740, 1) = 0x7f968bc16220
    ioctl(0, 21505, 0x7fff75b6a960) = 0
    XtSetErrorHandler(0x42bbb0, 0x44f99c, 0x669f80, 146, 0x7fff75b6a72c) = 0
    XtOpenApplication(0x670260, 0x44f99c, 0x669f80, 146, 0x7fff75b6a72c) = 0xd219a0
    IceAddConnectionWatch(0x42adc0, 0, 0, 0x7f968c9a3748, 0 <unfinished ...>
    IceConnectionNumber(0xd17ec0, 0, 1, 0xcfb138, 0xd17c00) = 4
    <... IceAddConnectionWatch resumed> ) = 1
    XtSetErrorHandler(0, 0, 1, 0xcfb138, 0xd17c00) = 0
    XtGetApplicationResources(0xd219a0, 0x6701c0, 0x66b220, 34, 0) = 0
    strlen("off") = 3

Sensing: SNMP, subprocess, 2 metrics max
Collection: Centralized scraping over SNMP, local processes
Analysis: Basic math
Alerting: None
Visualization: day/week/month/year graphs, 2 variables

Operations: Ideal for netops, no alerting though
Product Management: None
Capacity Planning: Ideal for network ops and host health
Q/A, SLA: None

Sensing: Subprocesses and plugins, LOTS of plugins
Collection: Centralized scraping, support for forwarding metrics
Analysis: At sensing time
Alerting: Configurable alarms and emails
Visualization: Basic graphs of check results, dependency chains

Operations: Good for simple operations, basic alert support. Redundant (N+M) configurations more difficult
Product Management: N/A, heavily focused on up/down checks
Capacity Planning: N/A
Q/A, SLA: N/A, poor/no timeseries visualization

Ganglia
Sensing: gmond on nodes, extensions/plugins
Collection: multicast, UDP, TCP polls
Analysis: value threshold, external (nagios)
Storage: rrdtool/rrdcached
Alerting: N/A
Visualization: ganglia-web

Ganglia
Operations: Unsuited, no alerting built in. Can feed nagios/other
Product Management: Cluster ops focus
Capacity Planning: Well suited
Q/A, SLA: Historic views

Cacti (MRTG )
Sensing: Poller, cron based
Collection: Primarily SNMP
Analysis: Basic summing
Storage: rrdtool, MySQL
Alerting: N/A
Visualization: Static graphs

Cacti (MRTG )
Operations: No alerts limits utility to diagnostics
Product Management: Well suited
Capacity Planning: Well suited
Q/A, SLA: Well suited

Sensing: Arbitrary JSON emitters, “Checkers”
Collection: RabbitMQ JSON event bus
Analysis: Handlers
Storage: N/A
Alerting: Handlers
Visualization: N/A

Operations: Configurable collection layer, handlers and checkers
Product Management: N/A
Capacity Planning: N/A
Q/A, SLA: Can feed live data to other technologies

Logstash
Sensing: Deployable log thrower
Collection: MQ […]
Alerting: N/A
Visualization: Kibana (ES)

Logstash
Operations: Historical view of systems, searching for incident info
Product Management: N/A
Capacity Planning: N/A
Q/A, SLA: Tracing of individual problem cases, cross correlation among different log sets

OpenTSDB
Sensing: Custom clients
Collection: TSD RPC
Analysis: External
Storage: Complete storage layer
Alerting: N/A
Visualization: N/A

OpenTSDB
Operations: Can handle the volume
Product Management: N/A
Capacity Planning: N/A
Q/A, SLA: N/A

    mysql.bytes_received 1287333217 327810227706 schema=foo host=db1
    mysql.bytes_sent 1287333217 6604859181710 schema=foo host=db1
    mysql.bytes_received 1287333232 327812421706 schema=foo host=db1
    mysql.bytes_sent 1287333232 6604901075387 schema=foo host=db1
    mysql.bytes_received 1287333321 340899533915 schema=foo host=db2
    mysql.bytes_sent 1287333321 5506469130707 schema=foo host=db2
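These samples are raw counters, so a consumer has to derive a rate before the numbers mean much. The sketch below parses two of the `db1` lines and computes bytes per second, assuming OpenTSDB's `metric timestamp value tag=value` line format (the underscores and `=` signs are taken from that format). The helper names are hypothetical.

```python
def parse_line(line):
    """Split 'metric timestamp value tag=value ...' into its parts."""
    metric, ts, value, *tags = line.split()
    return metric, int(ts), int(value), dict(t.split("=", 1) for t in tags)


def rate_per_second(earlier, later):
    """Derive a rate from two readings of the same monotonic counter."""
    (_, t0, v0, _), (_, t1, v1, _) = earlier, later
    return (v1 - v0) / (t1 - t0)


first = parse_line("mysql.bytes_received 1287333217 327810227706 schema=foo host=db1")
second = parse_line("mysql.bytes_received 1287333232 327812421706 schema=foo host=db1")

rate = rate_per_second(first, second)
print(f"{first[0]} on {first[3]['host']}: {rate:.0f} bytes/second")
```

The two readings are 15 seconds apart and differ by 2,194,000 bytes, so the derived rate is roughly 146 KB/s.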

Sensing: N/A
Collection: N/A
Analysis: N/A
Storage: N/A
Alerting: N/A
Visualization: Very nice interactive charts of prepared data sets

Operations: Data exploration of limited value
Product Management: Good discovery and goal seeking
Capacity Planning: Interactive searching for hidden dependencies
Q/A, SLA: Great potential for exploring traces and dependencies

Graphite
Sensing: DIY, name value
Collection: Custom messaging protocol
Analysis: N/A
Storage: Carbon, Whisper file-per-metric
Alerting: N/A
Visualization: Static config of complex graphs
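For a sense of how little a "DIY, name value" sensor needs to do: Carbon's plaintext protocol takes one line per sample, in the form `path value timestamp`. The sketch below only formats such a line and sends nothing; the function name, metric path and timestamp are illustrative assumptions.

```python
import time


def carbon_line(path, value, timestamp=None):
    """Format one sample as 'path value timestamp' plus a newline."""
    ts = int(time.time() if timestamp is None else timestamp)
    return f"{path} {value} {ts}\n"


# Hypothetical metric path and timestamp, for illustration only.
line = carbon_line("www-1.httpd.200-ok-count", 8505936, 1380982915)
print(line, end="")
```

A sensor would write lines like this to a TCP socket on the Carbon listener, which is why almost anything that can open a socket can act as a sensor.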

Graphite
Operations: Command-line graph creation, limited interactive web
Product Management: Great for visualization
Capacity Planning: Also good for visualization
Q/A, SLA: Can visualize, but lacks interactivity

Shinken (Nagios + Graphite + CM)
Sensing: Nagios […]er
Analysis: Reactioner/Broker
Storage: RRDtool
Alerting: Reactioner
Visualization: Sadly not much better than Nagios

Shinken
Operations: Much better CM than Nagios
Product Management: N/A
Capacity Planning: N/A
Q/A, SLA: N/A

“Cloud Monitoring”
Lots and lots of vendors
AlertSite, Bijk, CopperEgg, Dotcom Monitor, GFI Cloud, Kaseya, LogicMonitor, Monitis, MonitorGrid, Nimsoft, ManageEngine, Panopta, Pingdom, Scout, ServerDensity, Shalb SPAE, CloudTest, ...
SaaS offerings
Remote collection, local agents, push and pull
Implementation black boxes

In the Real World
All of the above.
Nagios + Graphite + Sensu + Logstash + Ganglia
Interoperability is limited at the interface layer.
MQ based solutions are promising glue.
Interactive graphs are inspiring.

Diagram: the monitoring layers (Sensing / Measurement, Collection, Analysis, Alerting / Escalation, Visualization) overlaid with the use cases (Operations, QA/SLA, Capacity Planning)

Thanks!
…er,sf,github,.}
Join us! go to …
Questions, Comments, Feedback, Hate Mail

Appendix
Extra stuff, just in case.
Here, have a sleepy cat.

100M users explained
100M users
each user uses the app 10 times a day
1 billion user accesses per day (amortized; some use more, some use less)
each user access causes 10 requests (HTML page, images, dynamic requests, query flow)
so 10 billion requests a day
means an average of about 100000 queries a second
actually not, because the internet users are not distributed equally around the world and don't use the app at the same times equally
so more like 200000 queries a second
let's say each query requires 10 disk seeks
what do we need to serve that?

10K servers explained
let's say a disk does about 100 disk seeks per second
2000000 seeks per second mean 20000 disks
we could try cramming 20000 disks into one server, but that'd be a very large and expensive server
and we found out a while ago that it's more economical to use lots of small servers rather than one big one (also called "warehouse scale computing")
at 2 disks per server, 10000 servers
40 per rack fills 250 racks
about 150 meters of rack space
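The two appendix slides form one chain of back-of-the-envelope estimates, which can be replayed directly. All inputs below come from the slides; only the variable names are invented.

```python
USERS = 100_000_000
VISITS_PER_USER_PER_DAY = 10
REQUESTS_PER_VISIT = 10
SECONDS_PER_DAY = 86_400

requests_per_day = USERS * VISITS_PER_USER_PER_DAY * REQUESTS_PER_VISIT  # 10 billion
average_qps = requests_per_day / SECONDS_PER_DAY   # about 115,741, "about 100000"

PEAK_QPS = 200_000           # the slide's allowance for uneven usage
SEEKS_PER_QUERY = 10
SEEKS_PER_DISK_PER_SECOND = 100
DISKS_PER_SERVER = 2
SERVERS_PER_RACK = 40

seeks_per_second = PEAK_QPS * SEEKS_PER_QUERY           # 2,000,000
disks = seeks_per_second // SEEKS_PER_DISK_PER_SECOND   # 20,000
servers = disks // DISKS_PER_SERVER                     # 10,000
racks = servers // SERVERS_PER_RACK                     # 250

print(requests_per_day, round(average_qps), disks, servers, racks)
```

At 250 racks in about 150 meters, the slide is implicitly assuming a rack roughly 0.6 meters wide.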

Monitoring at scale
Diagram: DNS server, load balancer, monitoring
25 metrics/server
50 metrics
100M active daily users
200K peak QPS
@ 20QPS/server
10,000 servers
25,000 metrics
X 12 'types' of servers
3,000,000 metrics
10,000 metrics/second