Transcription

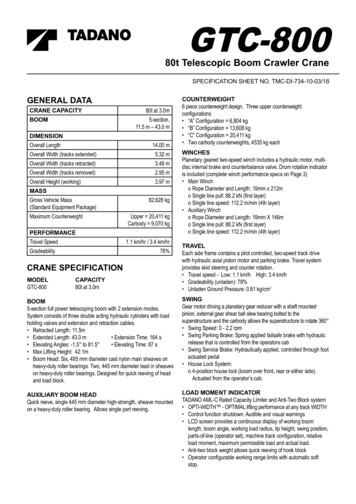

GTC S41755 FAST, SCALABLE,STANDARDIZED AI INFERENCEDEPLOYMENTMarch 2022Shankar Chandrasekaran, Triton Product Marketing ManagerMahan Salehi, Triton Product Manager

AI INFERENCE WORKFLOWTwo Part Process Implemented by Multiple PersonasData Scientist MLEngineerML EngineerMLOps, DevOpsApp ultTrainedModelsOptimize For MultipleConstraints For High Perf.InferenceModelRepoAIApplicationScaled Multi-frameworkInference Serving For High Perf.& Utilization On GPU/CPU2

AI INFERENCE IS HARDReal TSFRAMEWORKSMODELSGNNDECISION TREESAI INFERENCEAzureAmazonGoogleMachineSageMaker Vertex PUA30GPUA100GPUx86CPUArmCPUDataCenterEdgeEmbedded3

TRITON INFERENCE SERVEROpen-Source Software For Fast, Scalable, Simplified Inference ServingAny FrameworkSupports MultipleFramework BackendsNatively e.g.,TensorFlow, PyTorch,TensorRT, XGBoost,ONNX, Python & MoreAny Query TypeAny PlatformX86 CPU Arm CPU NVIDIA GPUs MIGOptimized for Real Time,Batch, Streaming,Ensemble InferencingLinux Windows VirtualizationPublic Cloud, DataCenter andEdge/Embedded (Jetson)DevOps & MLOpsIntegration WithKubernetes, KServe,Prometheus & GrafanaAvailable Across AllMajor Cloud AI n-inference-serverPerformance &UtilizationModel Analyzer forOptimal ConfigurationOptimized for HighGPU/CPU Utilization,High Throughput & LowLatency4

NATIVELY SUPPORTED EXECUTION BACKENDSTensorFlow 1.x/2.xAny ModelSavedModel GraphDefFILPyTorchAny modelJIT/Torchscript PythonONNX RTTree based models (e.g. XgBoost,Scikit-learn RandomForest, LightGBM)ONNX converted modelsOpenVINOCustom C BackendOpenVINO optimized models on IntelarchitectureCustom framework in C TensorRTTF-TensorRT & TorchTRTPythonFaster Transformer Backend(Alpha)All TensorRT optimizedmodelsCustom code in Python e.g.pre/post processing, any Pythonmodel.DALIPre-processing logic using DALIoperatorsAny TensorFlow and PyTorch modelMulti-GPU, multi-node inferencing forlarge transformer models (GPT and T5)NVTabularFeature engineering and preprocessinglibrary for tabular data.HugeCTRRecommender model with largeembeddings5

MODEL ANALYZEROptimal Triton ConfigurationTriton Model AnalyzerQoS constraints(Throughput,Latency, Memory)Evaluate MultipleConfigs(Precision X Batch Size XConcurrent Instances)Optimal ModelConfigurationModelsGitHub repo and docs: https://github.com/triton-inference-server/model analyzer6

MODEL NAVIGATORAccelerate optimized model er/model navigator7

TRITON FOREST INFERENCE LIBRARY (FIL)Illustration of Fraud detection with Triton FILModel Explainability With Shapley ValuesTimeTransactionValueProductTypeZip Code GBDT ModelContribution to Prediction(Shapley Value12%Time8%Zip CodeLatency(ms)FeaturesXGBooston CPUTriton FILon GPUTargetLatency1.5 ms0%-15%Triton FILon CPU20%40%60%80%Fraud Detection Rate 88

LATEST TRITON FEATURESMLFlow Integration Plugin (POC)Plugin to convert MLFlow Model Repo to Triton model repoformatFleet Command IntegrationHelm chart to deploy Triton in Fleet CommandImplicit State Management for TensorRT BackendNative support for stateful models (e.g. ASR). Also improvesperformance for autoregressive models. Support forTF/PyT/ORT backends on roadmapEmbedded DevicesTriton for Jetpack for Xavier, Nano, and TX2Now supports PyTorch and Python backendsBusiness Logic ScriptingEnables users to programmatically run “business logic”(control flow, conditionals, etc) in ensemble pipelinesFIL Backend- CPU Performance OptimizationsEnable multi-threading on CPU and other optimizations, resultingin 10x throughput speedupInferentia SupportRun models on AWS InferentiaRate LimiterEnables cross model prioritization. Controls how manyrequests are fed into each model simultaneouslyJava HTTP Client (Alpha)HTTP Java client available in TritonContainer Composition Utility ToolTooling to automate process of customizing Triton containerand reducing size by adding/removing backends9

Triton FasterTransformer BackendInference on Giant Multi-GPU, Multi-Node NLP Models with Billions of ParametersGoal: Serve giant transformer models and accelerate inferenceperformanceCurrent Capabilities: Written in C /CUDA and relies on cuBLAS, cuBLASlt, cuSPARSELtOptimize kernels to accelerate inference for encoder/decoderlayers of transformer modelsIntegrated as a backend in Triton Inference ServerUses tensor/pipeline parallelism for multi-GPU, multi-nodeinferenceFP16, FP32 supportedPOC of Post-training weight-only INT8 quantization for GPT Only for BS 1-2Supports sparsity for BERTUses MPI and NCCL to enable inter/intra node communicationExceptions/Limitations: Supports only GPT and T5 style models currently for multi-node Model must be converted to FasterTransformer format Megatron and HuggingFace converters provided POC of Tensorflow/ONNX converters Currently beta releaseNemo-Megatron EA -early-accessTriton FasterTransformer Open Source Github stertransformer backend10

UPCOMING FEATURE – TRITON nTriton Management Service (TMS) Deploys Triton with requestedmodels Load models on demand, unloadsmodels when not in use Helps group models from differentframeworks together to ensurethey co-exist efficiently Spins up new Triton pods onincreasing inference load Health check w/ restarts of eServer TritonInferenceServerModel RepoKubernetes 11

ECOSYSTEM INTEGRATIONSAmazon SageMakerAmazon ElasticAmazon ElasticKubernetes Service (EKS) Container Service(ECS)Azure Machine LearningAzureKubernetesService (AKS)Google Vertex AI(*New* Native Integration)Google KubernetesEngine (GKE)Kubeflow/KServeAlibaba CloudPAI-EASPrometheusSHAKUDO12

TRITON ADOPTION ACROSS USE CASESFraud DetectionFraud DetectionSearch & AdsFraud DetectionServing platformGrammar CheckMeeting TranscriptionDocument TranslationImageSegmentationPreventive maintenanceDefect DetectionPackage AnalyticsClinical NotesAnalyticsTranslatorImageClassification &RecommendationVideo ContentAuditProduct Identification13

AMAZON ADOPTS NVIDIA AI FOR REAL TIME SPELL CHECKFOR PRODUCT SEARCHInference Latency250msReal Time Spelling Correction Of Search TextTriton TensorRT Meets Latency Target WhileOptimizing for ThroughputTriton Model Analyzer Reduced Time to FindOptimal Configuration from Weeks to HoursOptimizeModel w/TensorRTChoose BestConfig w/Triton ModelAnalyzerDeploy w/TritonInferenceServerUnoptimizedModelOptimized w/Triton TensorRT40ms14

MICROSOFT ADOPTS TRITON FOR DOCUMENTTRANSLATION IN TRANSLATOR SERVICE30 language pairs w/ 1s latency on GPU100X throughput vs CPU (154 sentences/s vs 1sentence/s)Cost effectively scales to thousands/millions of users(Few GPU servers vs. 100’s of CPU servers)New GPU model replaces 20 separate CPU models15

EASILY GET TCO AND LATENCY ADVANTAGES WITHTRITONBERT-Large on A100 vs. CPUCPU 319.75CPU371100x less97x lessNVIDIA A100 3.30 0NVIDIA A100 100 200 300Cost / (1M Inference)NVIDIA A100 97x better TCOcompared to CPU 40040100200300400Latency (s)NVIDIA A100 100x lower latencymeeting real-time threshold16

NVIDIA AI ENTERPRISE SOFTWARE SUITEEnabling AI and Data Analytics on VMware vSphereNVIDIA AI EnterpriseNVIDIA Enterprise SupportTensorFlow*AI and DataScience Toolsand mizationPyTorch*NVIDIA RAPIDS NVIDIA TensorRT NVIDIA Triton InferenceServerNVIDIA GPU OperatorNVIDIA Network OperatorNVIDIAvGPUNVIDIAMagnum IO NVIDIACUDA-X AI Optimized forPerformanceCertified forVMware vSphereBare-metal performance acrossmultiple nodes to power large, complextraining and machine learningworkloads virtualizedReduce deployment risks with acomplete suite of NVIDIA AI softwarecertified for the VMware data centerVMware vSphere with TanzuEnsure mission-critical AI projects stayon track with access to NVIDIA expertsAccelerated Mainstream ServersNVIDIA SmartNIC / DPUNVIDIA GPUNetwork and Infrastructure AccelerationApplication AccelerationNVIDIA EnterpriseSupport17

TRITON: BRINGING THE 3 TEAMS TOGETHERInference Serving: Simplified, Highly Performant & FlexibleApps withtrained ModelsTrained ModelsData scientistsDevelopers, ML EngineersDevOps, MLOps, IT OperatorsRetrainingAny frameworkTensorFlow TensorRT Plan PyTorch ONNX RT OpenVINO CustomAny platformCloud (AWS, GCP, Azure) Datacenter Edge GPU CPU18

LEARN MORE AND DOWNLOADFor more ton-inference-serverGet the ready-to-deploy container with monthly updates from the NGC /containers/tritonserverOpen-source GitHub nce-serverLatest release ver/server/releasesQuick start rver/blob/main/docs/quickstart.md19

EASILY GET TCO AND LATENCY ADVANTAGES WITH TRITON BERT-Large on A100 vs. CPU 3.30 319.75 0 100 200 300 400 NVIDIA A100 CPU Cost / (1M Inference) . certified for the VMware data center Ensure mission-critical AI projects stay on track with access to NVIDIA experts. 18 TRITON: BRINGING THE 3 TEAMS TOGETHER .