Transcription

International Journal of Computer Applications (0975 – 8887)Volume 15– No.4, February 2011Handling Duplicate Data in Data Warehouse for DataMiningDr. J. Jebamalar TamilselviC. Brilly GiftaAssistant ProfessorDept of Computer ApplicationsJaya Eng College, ChennaiMphil (Research Scholar)Dept of computer ApplicationsBharathiyar UniversityABSTRACTThe problem of detecting and eliminating duplicated data isone of the major problems in the broad area of data cleaningand data quality in data warehouse. Many times, the samelogical real world entity may have multiple representations inthe data warehouse. Duplicate elimination is hard because it iscaused by several types of errors like typographical errors,and different representations of the same logical value. Also,it is important to detect and clean equivalence errors becausean equivalence error may result in several duplicate tuples.Recent research efforts have focused on the issue of duplicateelimination in data warehouses. This entails trying to matchinexact duplicate records, which are records that refer to thesame real-world entity while not being syntacticallyequivalent. This paper mainly focuses on efficient detectionand elimination of duplicate data. The main objective of thisresearch work is to detect exact and inexact duplicates byusing duplicate detection and elimination rules. This approachis used to improve the efficiency of the data.Keywordselimination. But the speed of the data cleaning process is veryslow and the time taken for the cleaning process is high withlarge amount of data. So, there is a need to reduce time andincrease speed of the data cleaning process as well as need toimprove the quality of the data.There are two issues to be considered for duplicatedetection: Accuracy and Speed. The measure of accuracy induplicate detection depends on the number of false negatives(duplicates you did not classify as such) and false positives(non-duplicates which were classified as duplicates) [12].In this research work, a duplicate detection and eliminationrule is developed to handle any duplicate data in a datawarehouse. Duplicate elimination is very important to identifywhich duplicate to retain and duplicate is to be removed. Themain objective of this research work is to reduce the numberof false positives, to speed up the data cleaning process reducethe complexity and to improve the quality of data. A highquality, scalable duplicate elimination algorithm is used andevaluated it on real datasets from an operational datawarehouse to achieve objective.Data Cleaning, Duplicate Data, Data Warehouse, Data Mining1. INTRODUCTIONData warehouse contains large amounts of data for datamining to analyze the data for decision making process. Dataminers do not simply analyze data, they have to bring the datain a format and state that allows for this analysis. It has beenestimated that the actual mining of data only makes up 10% ofthe time required for the complete knowledge discoveryprocess [3]. According to Jiawei, the precedent timeconsuming step of preprocessing is of essential important fordata mining. It is more than a tedious necessity: Thetechniques used in the preprocessing step can deeply influencethe results of the following step, the actual application of adata mining algorithm [6]. Hans-peter stated as the role of theimpact on the link of data preprocessing to data mining willgain steadily more interest over the coming years.Preprocessing is one of the fourth future trend and majorissues in data mining over the next years [7].In data warehouse, data is integrated or collected frommultiple sources. While integrating data from multiplesources, the amount of the data increases and as well as data isduplicated. Data warehouse may have terabyte of data for themining process. The preprocessing of data is the initial andoften crucial step of the data mining process. To increase theaccuracy of the mining result one has to perform datapreprocessing because 80% of mining efforts often spend theirtime on data quality. So, data cleaning is very much importantin data warehouse before the mining process. The result of thedata mining process will not be accurate because of the dataduplication and poor quality of data. There are many existingmethods available for duplicate data detection and2. DUPLICATEELIMINATIONDETECTIONANDIn the duplicate elimination step, only one copy of exactduplicated records are retained and eliminated other duplicaterecords [4]. The elimination process is very important toproduce a cleaned data. Before the elimination process, thesimilarity threshold values are calculated for all the recordswhich are available in the data set. The similarity thresholdvalues are important for the elimination process. In theelimination process, select all possible pairs from each clusterand compare records within the cluster using the selectedattributes. Most of the elimination processes compare recordswithin the cluster only. Sometimes other clusters may haveduplicate records, same value as of other clusters. Thisapproach can substantially reduce the probability of falsemismatches, with a relatively small increase in the runningtime.The following procedures are used to identify or detectduplicates and eliminate duplicates.i.ii.iii.iv.v.vi.Get threshold value from LOG tableCalculate certainty factorCalculate data quality factor for each recordDetect or identify duplicates using certainty factor,threshold value and data quality factorEliminate duplicate record based on data quality,threshold value, number of missing value and rangeof each field valueRetain only one duplicate record which is havinghigh data quality, high threshold value and highcertainty factor7

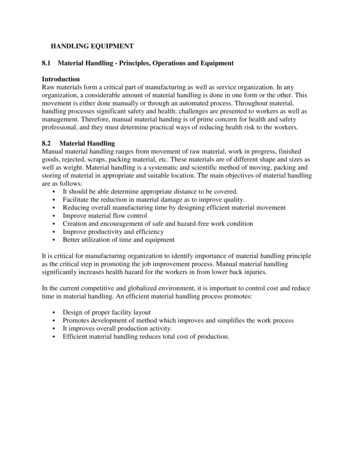

International Journal of Computer Applications (0975 – 8887)Volume 15– No.4, February 2011C1C2CnDirty data withduplicate recordsDirty data withduplicate recordsDirty data withduplicate recordsCertainty FactorRule basedduplicate dataidentificationThreshold valueThreshold valueMissing valuesTypes of dataDistinct valuesRange ofvaluesRule basedduplicate dataeliminationLack of qualityCleaning data withdefined ruleCleaned dataFigure 1. Framework for duplicate identification andeliminationFigure 1 shows the framework for duplicate data detectionand duplicate elimination. There are two kinds of rules used inthis framework. i) duplicate data identification rule ii)duplicate data elimination rule. Duplicate data identificationrule is used to identify or detect duplicate using certaintyfactor and threshold value. Duplicate data elimination rule isused to eliminate duplicate data using certain parameters andused to retain only one exact duplicate data.3. DUPLICATEDATAIDENTIFICATION / DETECTION RULEDuplicate record detection is the process of identifyingdifferent or multiple records that refer to one unique realworld entity or object if their similarity exceeds a certaincutoff value. However, the records consist of multiple fields,making the duplicate detection problem much morecomplicated [13]. A rule-based approach is proposed forthe duplicate detection problem. This rule is developed withthe extra restriction to obtain good result of the rules. Theserules specify the conditions and criteria for two records to beclassified as duplicates. A general if then else rule is used inthis research work for the duplicate data identification andduplicate data elimination. A rule will generally be of theform:if condition then action The action part of the rule is activated or fired when theconditions are satisfied. The complex predicates and externalfunction references may be contained in both the conditionand action parts of the rule [10]. In existing duplicatedetection and elimination method, the rules are defined for thespecific subject data set only. These rules are not applicablefor another subject data set. Anyone with subject matterexpertise can be able to understand the business logic of thedata and can develop the appropriate conditions and actions,which will then form the rule set. In this research work, the8

International Journal of Computer Applications (0975 – 8887)Volume 15– No.4, February 2011rules are formed automatically based on the specific criteriaand formed rules are applicable for any subject dataset. Induplicate data detection rule, threshold values of record pairsand certainty factors are very important.which is presented in Table 6a. Certainty factor is calculatedbased on the types of the attributes and calculation of certaintyfactor is listed below.Rule 1: certainty factor 0.95 (No. 1 and No. 4)3.1 Threshold valueThreshold value is calculated by identifying similaritybetween records and field values; that is, similarity value isused for calculating threshold value. In the similaritycomputation step, the threshold value is calculated and storedin the LOG table. In data elimination and identification step,the threshold value is extracted from the LOG table to identifyand eliminate duplicate records. The threshold value iscalculated for each field as well as for each record in eachcluster. The cutoff threshold values are assigned for eachattribute based on the types and importance of the attribute inthe data warehouse. This cutoff threshold is used foridentifying whether record is duplicated or not by usingcalculated threshold values of each record pairs.3.2 Certainty factorIn the existing method of duplicate data elimination [10],certainty factor (CF) is calculated by classifying attributeswith distinct and missing value, type and size of the attribute.These attributes are identified manually based on the type ofthe data and the most important of data in that datawarehouse. For example, if name, telephone and fax field areused for matching then high value is assigned for certaintyfactor. In this research work, best attributes are identified inthe early stages of data cleaning. The attributes are selectedbased on the specific criteria and quality of the data. Attributethreshold value is calculated based on the measurement typeand size of the data. These selected attributes are well suitedfor the data cleaning process. Certainty factor is assignedbased on the attribute types.Table 1: Classification of attribute zeofdataTypesof data 1 2 3 4 5 6 7 8 9 10 Table1 produces variety of attribute types. Forexample, an attribute may be a key attribute or a normalattribute. If normal attribute, attributes are classified with highdistinct values, low missing values, high data type and highrange of values. Each attribute can have any one of the typeMatching key field with high type and highsize and matching field with high distinct value,low missing value, high value data type andmatching field with high range valueRule 2: certainty factor 0.9 (No. 2 and No. 4) Matching key field with high range valueand matching field with high distinct value,low missing value, and matching field withhigh range valueRule 3: certainty factor 0.9 (No. 3 and No. 4) Matching key field with high typeand matching field with high distinct value,low missing value, high value data type andmatching field with high range valueRule 4: certainty factor 0.85 (No. 1 and No. 5) Matching key field with high type and highsize and matching field with high distinct value,low missing value and high range valueRule 5: certainty factor 0.85 (No. 1 and No. 5) Matching key field and high sizeand matching field with high distinct value,low missing value and high range valueRule 6: certainty factor 0.85 (No. 2 and No. 5) Matching key field with high typeand matching field with high distinct value,low missing value and high range valueRule 7: certainty factor 0.85 (No. 1 and No. 6) Matching key field with high size and hightype and matching field with high distinct value,low missing value and high value data typeRule 8: certainty factor 0.85(No. 2 and No. 6) Matching key field with high sizeand matching field with high distinct value,low missing value and high value data typeRule 9: certainty factor 0.85 (No. 3 and No. 6) Matching key field with high typeand matching field with high distinct value,low missing value and high value data typeRule 10: certainty factor 0.8 (No. 1 and No. 7) Matching key field with high type and highsize and matching field with high distinct value,high value data type and high range valueRule 11: certainty factor 0.8 (No. 2 and No. 7) Matching key field with high sizeand matching field with high distinct value,high value data type and high range value9

International Journal of Computer Applications (0975 – 8887)Volume 15– No.4, February 2011Rule 12: certainty factor 0.8 (No. 3 and No. 7)Matching key field with high typeand matching field with high distinct value,high value data type and high range valueRule 13: certainty factor 0.75 (No. 1 and No. 8) Matching key field with high type and highsize and matching field with high distinct value andlow missing valueRule 14: certainty factor 0.75 (No. 2 and No. 8)Certainty factor is calculated based on the quality of data. Ifan attribute has high certainty factor value, then less thresholdvalue is assigned in record comparison. If then else conditionis used to identify duplicate value. For example,If CF 0.95 and TH 0.75 thenRecords are duplicates. Matching key field with high sizeand matching field with high distinct value andlow missing valueRule 15: certainty factor 0.75 (No. 3 and No. 8) Matching key field with high typeand matching field with high distinct value andlow missing valueRule 16: certainty factor 0.7 (No. 1 and No. 9) Matching key field with high type and highsize and matching field with high distinct value andhigh value data typeRule 17: certainty factor 0.7 (No. 2 and No. 9) Matching key field with high sizeand matching field with high distinct value andhigh value data typeRule 18: certainty factor 0.7 (No. 3 and No. 9) Matching key field with high typeand matching field with high distinct value andhigh value data typeRule 19: certainty factor 0.7 (No. 1 and No. 10) EndTable 2: Values of Certainty factor and Threshold valueS.NoRulesCertaintyFactor (CF)Thresholdvalue (TH)1{TS}, {D, M,DT, DS}0.950.752{T, S}, {D, M,DT, DS}0.90.803{TS, T, S}, {D,M, DT}, {D, M,DS}0.850.854{TS, T, S}, {D,DT, DS}0.80.95{TS, T, S}, {D,M}0.750.956{TS, T, S}, {D,DT}, {D, DS}0.70.95TS – Type and Size of key attributeMatching key field with high type and highsize and matching field with high distinct value andhigh range valueRule 20: certainty factor 0.7 (No. 2 and No. 10)T – Type of key attributeMatching key field with high sizeand matching field with high distinct value andhigh range valueRule 21: certainty factor 0.7 (No. 3 and No. 10)M – Missing value of attributes S – Size of key attributesD – Distinct value of attributesDT – Data type of attributesDS – Data size of attributes Matching key field with high typeand matching field with high distinct value andhigh range valueDuplicate data is identified based on the certainty factor,threshold value and quality data. Quality data is combined inthe certainty factor. So, certainty factor and threshold valuesare very important in data elimination. Certainty factor andthreshold value are calculated based on the defined rule listedin Table 2. In rule 1, if the attribute has high distinct values(D), low missing values (M), high measurement type (DT)and high data range, then the highest certainty factor (0.95) isassigned for attributes and less threshold value (0.75) isenough for comparing records. Because comparing attributesshould have high quality of values for duplicate detection.Duplicate records are identified in each cluster to identifyexact and inexact duplicate records. The duplicate records canbe categorized as match, may be match and no-match. Matchand may be match duplicate records are used in the duplicatedata elimination rule. Duplicate data elimination rule willidentify the quality of the each duplicate record to eliminatepoor quality duplicate records.3.3 Duplicate data elimination ruleTypically duplicate data elimination is performed as the laststep and this step has to take place while integrating twosources or performed on an already integrated source. Thecombination of attributes can be used to identify duplicaterecords. In the duplicate elimination, only one best copy of10

International Journal of Computer Applications (0975 – 8887)Volume 15– No.4, February 2011duplicate record has to be retained and remaining duplicaterecords should be eliminated. Correct duplicate records areidentified using certainty factor and threshold value. Duplicatedata is eliminated based on the number of missing value,range of each field value, data quality of each field value andrepresentation of data. Each duplicate data or overallsimilarities of two records are determined from the similaritiesof selected record fields. An example of duplicate dataelimination is the rule that two records with (i) identical fieldvalue (ie) high threshold value (ii) are of the same length, and(iii) belong to the same type of data, are duplicates. The rulecan be represented as: high threshold value same length offield value same representation of data with slight changes not duplicateThe following factors are used in the duplicate dataelimination rule.i.Number of missing valuesii.Range of valuesiii.Data representationiv.Lack of qualityv.Threshold valueDuplicate records are identified by using specific andhigh discrimination power attributes. In general, duplicaterecords can have so many missing fields. Hence, records canbe eliminated based on the number of missing values in eachduplicate record. Duplicate record is eliminated if theduplicate record is has more missing values than otherduplicate records. The size and range of each field value iscalculated and compared with other duplicate data field toeliminate poor quality duplicate data. For example, sometimesduplicate data can have shortcut form or abbreviation. So, therange of each field value is calculated to remove duplicatedata which have low range than other duplicate records. Mostof the time record is duplicated because of the different formatused for data representation. For example, ‘M’ and ‘F’ areused for male and female but ‘1’ and ‘0’ are used for genderrepresentation. So, there is a need to identify exact format foreach field representation. Wrong format of duplicate data canbe eliminated to retain best and high quality duplicate data.Data warehouse may contain data with poor quality. Data withpoor quality must be removed or to be changed whileeliminating duplicate data. Mostly, duplicate records areidentified and eliminated based on the threshold value of eachduplicate record. Highest threshold value duplicate record isretained and lowest threshold value duplicate records areeliminated.In this research work, rules are developed to eliminatepoor quality duplicate data based on the missing values,length, format, quality of the data and threshold value. Forexample, two records are identified as duplicates. In these tworecords, one record has higher missing values than the otherrecord. Hence, duplicate record with high number of missingvalue is eliminated and other duplicate record is retained. Ifboth duplicate records have the same number of missingvalues, then it checks length, format and quality of the datafor duplicate data elimination.Rule 1Missing(high) 1.0Rule 2Size or length(low) 1.0Rule 3Format(low) 1.0Rule 4Quality(low) 1.0Rule 5Threshold(low) 1.0Rule 6Missing(high) 1.0 Size or length(low) 1.0Rule 7Missing(high) 1.0 Format(low) 1.0Rule 8Missing(high) 1.0 Quality(low) 1.0Rule 9Missing(high) 1.0 Threshold(low) 1.0Rule 10Size or length(low) 1.0 Format(low) 1.0Rule 11Size or length(low) 1.0 Quality(low) 1.0Rule 12Size or length(low) 1.0 Threshold(low) 1.0Rule 13Format(low) 1.0 Quality(low) 1.0Rule 14Format(low) 1.0 Threshold(low) 1.0Rule 15Threshold(low) 1.0 Quality(low) 1.0Rule 16Missing(high) 1.0 Length(low) 1.0 Format(low) 1.0Rule 17Missing(high) 1.0 Length(low) 1.0 Quality(low) 1.0Rule 18Missing(high) 1.0 Length(low) 1.0 Threshold(low) 1.0Rule 19Missing(high) 1.0 Length(low) 1.0 Size orlength(low) 1.0Rule 20Missing(high) 1.0 Format(low) 1.0 Quality(low) 1.0Rule 21Missing(high) 1.0 Format(low) 1.0 Length(low) 1.0Rule 22Missing(high) 1.0 Format(low) 1.0 Threshold(low) 1.0Rule 23Missing(high) 1.0 Threshold(low) 1.0 Quality(low) 1.0Rule 24Missing(high) 1.0 Threshold(low) 1.0 Length(low) 1.0Rule 25Missing(high) 1.0 Quality(low) 1.0 Length(low) 1.0Rule 26Missing(high) 1.0 Length(low) 1.0 Format(low) 1.0 Length(low) 1.0Rule 27Missing(high) 1.0 Length(low) 1.0 Format(low) 1.0 Threshold(low) 1.0Rule 28Missing(high) 1.0 Length(low) 1.0 Format(low) 1.0 Quality(low) 1.0Rule 29Missing(high) 1.0 Format(low) 1.0 Quality(low) 1.0 Threshold(low) 1.011

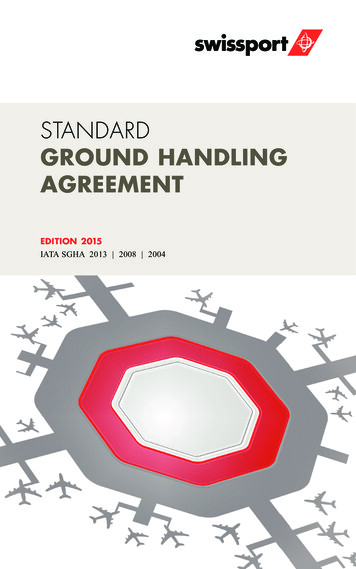

International Journal of Computer Applications (0975 – 8887)Volume 15– No.4, February 2011Rule 31Rule 32Missing(high) 1.0 Format(low) 1.0 Quality(low) 1.0 Length(low) 1.0Missing(high) 1.0 Threshold(low) 1.0 Quality(low) 1.0 Length(low) 1.0Missing(high) 1.0 Length(low) 1.0 Threshold(low) 1.0 Quality(low) 1.0 Format(low) 1.0Table 3: Rules for duplicate data eliminationTable 3 produces rules for duplicate data elimination.Different criteria are assigned for each rule. Each rule has itshighest and lowest priority than other rules. Based on thepriority of the rule, the duplicate records are eliminated. Therules are used to delete duplicate records and to retain onlyone copy of exact duplicate record based on the definedfactors. The above factors are used in a set of restricted valueson each of the matching criteria, which can be calculated fordata elimination. After data elimination, the quality data aremerged in the next stage.4. DATASET ANALYSISTwo datasets are used namely Customer dataset andStudent dataset in this research work for result analysis. Thecustomer data set is a synthetic data set containing 720customer-like Records and 17 attributes with 287 duplicates.The attributes are CUSTOMER CREDIT ID, CUSTOMERNAME, CONTACT FIRST NAME, CONTACT LASTNAME, CONTACT TITLE, CONTACT POSITION, LASTYEAR'S SALES, ADDRESS1, ADDRESS2, CITY,REGION, COUNTRY, POSTAL CODE, E-MAIL, WEBSITE, PHONE and FAX.Also, for this research work a real world 10, 00,000student’s records from college data set are collected frommultiple departments having same schema definition forexecuting duplicate detection process. The data set usesnumeric, categorical and date data type. Address table consistof total 22 attributes. The attributes are ID, ADD MNAME,ADD LNAME,ADD DISTRICT,ADD ADDRESS3,ADD ADDRTYPE,ADD CUSER,ADD MDATE,ADD MUSER,ADD ADDRESS4,ADD EMAIL,ADD FAX,ADD MOBILE,ADD ADDRESS1,ADD PHONE2,ADD NAME,ADD ADDRESS2,ADD PHONE1,ADD CDATE,ADD DEL,ADD PARENTTYPE and ADD PINCODE. Out of that 6important attributes ADD FNAME, ADD ADDRESS1,ADD ADDRESS2, ADD PINCODE, ADD PHONE, andADD CDATE are used for duplicate detection process.Efficiency of duplicate detection and elimination is largelydepends on the selection of attributes.5. EXPERIMENTAL RESULTSTo evaluate the performance of this research work, the errorsintroduced in duplicate records range from smalltypographical changes to large changes of some fields.Generally, the database duplicate ratio is the ratio of thenumber of duplicate records to the number of records of thedatabase. To analyze the efficiency of this research work,proposed approach is applied on a selected data warehousewith variant window size, database duplicate ratios anddatabase sizes. In all test, the time taken for duplicate datadetection and elimination process is analyzed to evaluate theefficiency of time saved in this research work.5.1 Duplicates Vs Threshold Values – Student Dataset70000No. of Duplicate RecordsRule hreshold ValueFigure 2: Duplicate detected Vs varying threshold valueATTRIBUTES0.50.60.70.80.91ADD ADDRESS1401533851137462344233104830988ADD ADDRESS1 ADD 0160572135413653874523454289840172ADD ADDRESS1 ADD NAMEADD PHONE1ADD ADDRESS1 ADD NAMEADD PHONE1ADD CDATEADD ADDRESS1 ADD NAMEADD PHONE1ADD CDATEADD DELADD ADDRESS1 ADD NAMEADD PHONE1ADD CDATEADD DELADD ADDRESS2ADD ADDRESS1 ADD NAMEADD PHONE1ADD CDATEADD DELADD ADDRESS2ADD PINCODETable 4: Attributes and threshold value12

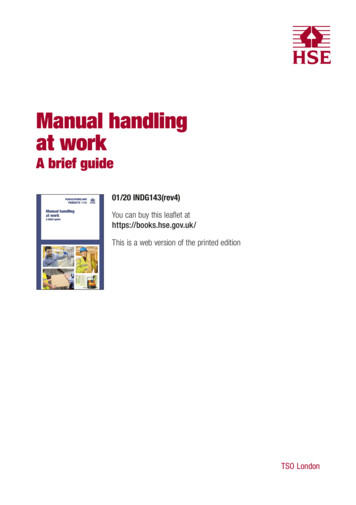

International Journal of Computer Applications (0975 – 8887)Volume 15– No.4, February 2011Figure 2 shows the performance of duplicate detectionwith false mismatches by varying the threshold value. Table 4contains number of duplicates detected by each combinationof attributes for particular threshold value. The data valuesshown in bold letters represent the total number of duplicaterecords detected at optimum threshold value. The optimumthreshold values are 0.6, 0.7 and 0.8. The results are notaccurate if the threshold value is greater than or less than theoptimum threshold value. The number of mismatches andfalse mismatches are increased and it is not possible to detectexact and inexact duplicates. Hence, the accuracy of theduplicate detection is not exact. The threshold value is animportant parameter for duplicate detection process.PHONE5.2 Duplicates Vs Threshold Values – Customer DatasetE MAILCONTACT LAST NAMECITYFAXPOSTAL CODECUSTOMER NAME291287287254215294286286247231287285283238216E MAILCONTACT LAST NAMEPHONEFAXPOSTAL CODECUSTOMER NAME350PHONENo. of Duplicates300FAX250200POSTAL CODECUSTOMER NAME150E MAIL100CONTACT LAST NAMECITY ADDRESS150Table 5: Attributes and threshold value00.70.80.91Threshold ValuesFigure 3: Duplicate detected Vs varying threshold 7287287275252287287267241232PHONEFAXPHONEFAXPOSTAL CODE5.3 Percent of incorrectly detected duplicated pairs VsAttributesPHONEFAX0.1286287263257190POSTAL CODECUSTOMER NAMEPHONE0.08ADD ADDRESS10.06ADD NAME0.04ADD PHONE10.02ADD ADDRESS2ADD CDATE0FAXPOSTAL CODECUSTOMER NAMEFigure 3 shows the performance of exact duplicatedetection by varying the threshold value. Table 5 containsnumber of duplicates detected by each combination ofattributes for particular threshold value. The exact duplicatevalue is 287 in selected customer dataset. Figure 6c showsthat threshold values 0.6, 0.7 and 0.8 are optimum thresholdvalues for duplicate detection. The results are not accurate ifthe threshold value is greater than or lesser than the optimumthreshold value. Generally, data warehouse contains inexactduplicates with slight changes because of the typographicalerrors. Hence, the threshold value is an important parameterfor duplicate detection process. If the threshold value is 1,then the detection of duplicates is very low because it takesonly exact duplicate values. If the threshold value is less than0.5, then the detection of duplicates is very high but the ratioof false mismatches is increased.False Positives (%)0.6ADD PINCODE292287274261225ADD ADDRESS3Window SizeE MAILFigure 4: False Positives Vs Window size and Attributes13

International Journal of Computer Applications (0975 – 8887)Volume 15– No.4, February 2011Figure 4 shows that false positive ratio is increased asincreases the window sizes. Identification of duplicatesdepends on keys selected and size of the window. This figureshows the percent of those records incorrectly marked asduplicates as a function of the window size. The percent offalse positive is almost insignificant for each independent runand grows slowly as the window size increases. Thepercentage of false positives is very slow if the window size isdynamic. This suggests that the dynamic window size is moreaccurate than fixed size window in duplicate detection.5.4 Time taken on databases with different dataset size140Time(Seconds)120Table Definition100Token Creation80Blocking60Similarity Computation40Duplicate Detection20Duplicate Elimination050,00075,000 1,00,000 1,25,000 1,50,000Total TimeDatabase(Size)Figure 5: Time taken Vs Database sizeFigure 5 shows variations of time taken in differentdatabase sizes. The result on time taken by each step of thisresearch work is shown in figure 5. The time taken byproposed research work increases as database size increases:the time increases when the duplicate ratio increases indataset. The time taken for duplicate data detection andelimination is mainly dependent upon size of the dataset andduplicate ratio in dataset. The efficiency of time saved ismuch larger than existing work because token based approachis implemented to reduce the time taken for cleaning processand improves the quality of the data.5.5 Time performance Vs Different size databases andPercentage of created duplicates1401201008010% duplicates6030% duplicates4050% duplicates20050,000 75,000 100,000 1,25,000 1,50,00010%, 30% and 50% duplicates are created in the selecteddatasets for result analysis. The results are shown in Figure 6.The time taken for duplicate detection and elimination isvaried based on the size of the data and percentage ofduplicates available in the dataset. For these relatively largesize databases, the time seems to increase linearly as the sizeof the databases increase is independent of the duplicatefactor.6. CONCLUSIONDeduplication and data linkage are important tasks in the preprocessing step for many data mining projects. It is importantto improve data quality before data is loaded into datawarehouse. Locating approximate duplicates in large datawarehouse is an important part of data management and playsa critical role in the data cleaning process. In this researchwok, a framework is designed to clean duplicate data forimproving data quality and also to support any subjectoriented data.In this research work, efficient duplicate detection andduplicate elimination approach is developed to obtain goo

Data warehouse may have terabyte of data for the mining process. The preprocessing of data is the initial and often crucial step of the data mining process. To increase the accuracy of the mining result one has to perform data preprocessing because 80% of mining efforts often spend their time on data quality. So, data cleaning is very much .

![Duplicate Poker Guide[1] - Weblogs at Harvard](/img/66/duplicate-poker-guide1.jpg)