Transcription

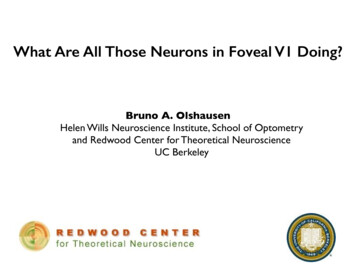

What Are All Those Neurons in Foveal V1 Doing?

Bruno A. Olshausen
Helen Wills Neuroscience Institute, School of Optometry
and Redwood Center for Theoretical Neuroscience
UC Berkeley

Fibers of Henle

[Figure labels: fovea, ganglion cells, amacrine cells, bipolar cells, horizontal cells, photoreceptors]

[Background page: "Revealing Henle’s Fiber Layer using SD-OCT", Vol. 52, No. 3, p. 1487. Legible excerpt: "… 20,000 A-scans per second. Forty B-scans centered on the fovea, each consisting of 1000 A-scans, were registered and averaged as previously described. A headrest was used to stabilize subjects, and deviation of the entry position from where the B-scan appeared flat was measured using markings on the stage. Additionally, video was recorded of live vertical translation of the stage while vertically oriented B-scans were …"]

Midget ganglion cells receive input from midget bipolar cells. Ratio is 1:1 in fovea.

Retinal ganglion cell sampling array (shown at one dot for every 20 ganglion cells) (from Anderson & Van Essen, 1995)

Letter size vs. eccentricity (Anstis, 1974)

‘Crowding’ (panels a and b; from Whitney & Levi, 2011)

Foveal oversampling in LGN and Cortex (Connolly & Van Essen, 1984)

“despite the fact that the estimated total number of LGN cells is similar to the total number of retinal ganglion cells, their ratio must vary from many LGN cells per retinal ganglion cell for the fovea to fewer than one LGN cell per retinal ganglion cell in the periphery.”

[Figure: Fig. 14 from "Visual Topography in Macaque LGN", p. 561. The visual field (A) and its representations in LGN layer 6 (B) … represents the perimeter of the macaque visual field. (Note inverted cortical … Panel labels: LGN, V1.]

Cortex:LGN cell ratio ranges from 1000:1 in fovea to 100:1 in periphery (Connolly & Van Essen, 1984)

[Fig. 15: the anisotropic layer-5 and layer-6 LGN representations of small squares in the visual field near the horizontal meridian (A) send anisotropic inputs (B) to striate cortex, where they converge on layer 4C to produce an isotropic visual representation in cortical "modules" (D, E).]

[Fig. 16: cell ratios as a function of eccentricity in the geniculocortical pathway. The total number of striate neurons per LGN cell was calculated as Ma (cortex) × (cells/mm² surface area) / M (LGN), where Ma (cortex) is the areal magnification factor for a standardized striate cortex (equation 8 from Van Essen et al., '84), cells/mm² was taken from O'Kusky and Colonnier ('82), and M (LGN) is the total LGN cellular magnification factor from Table 1. Separate curves show the number of 4A and 4Cβ cells per parvicellular cell and the number of 4Cα cells per magnocellular cell.]

Fixational eye movements (drift) (from Austin Roorda, UC Berkeley)

PNAS, November 9, 2010, vol. 107, no. 45, 19525–19530

Bayesian model of dynamic image stabilization in the visual system
Yoram Burak (a), Uri Rokni (a), Markus Meister (a,b), and Haim Sompolinsky (a,c,1)
(a) Center for Brain Science, Harvard University, Cambridge, MA 02138; (b) Department of Molecular and Cellular Biology, Harvard University, Cambridge, MA 02138; and (c) Interdisciplinary Center for Neural Computation, Hebrew University, Jerusalem 91904, Israel
Edited by William T. Newsome, Stanford University, Stanford, CA, and approved September 17, 2010 (received for review May 8, 2010)

Humans can resolve the fine details of visual stimuli although the image projected on the retina is constantly drifting relative to the photoreceptor array. Here we demonstrate that the brain must take this drift into account when performing high acuity visual tasks. Further, we propose a decoding strategy for interpreting the spikes emitted by the retina, which takes into account the ambiguity caused by retinal noise and the unknown trajectory of the projected image on the retina. A main difficulty, addressed in our proposal, is the exponentially large number of possible stimuli, which renders the ideal Bayesian solution to the problem computationally intractable. In contrast, the strategy that we propose suggests a realistic implementation in the visual cortex. The implementation involves two populations of cells, one that tracks the position of the image and another that represents a stabilized estimate of the image itself. Spikes from the retina are dynamically routed to the two populations and are interpreted in a probabilistic manner. We consider the architecture of neural circuitry that could implement this strategy and its performance under measured statistics of human fixational eye motion. A salient prediction is that in high acuity tasks, fixed features within the visual scene are beneficial because they provide information about the drifting position of the image. Therefore, complete elimination of peripheral features in the visual scene should degrade performance on high acuity tasks involving very small stimuli.

Keywords: computation, fixational eye motion, neural network, retina, cortex

… spikes, it would seem that downstream visual areas require knowledge of the image trajectory. The image jitter on the retina during fixation is a combined effect of body, head, and eye movements (6, 7). Whereas the brain can often estimate the sensory effects of self-generated movement using proprioceptive or efference copy signals, such information is not available for the net eye movement at the required accuracy (8–10) (reviewed in ref. 11). Thus the image trajectory must be inferred from the incoming retinal spikes, along with the image itself. In so doing, an ideal decoder based on the Bayesian framework would keep track of the joint probability for each possible trajectory and image, updating this probability distribution in response to the incoming spikes (5, 11). However, the images encountered during natural vision are drawn from a huge ensemble. For example, there are 2^900 possible black-and-white images with 30 × 30 pixels, which covers only a portion of the fovea. Clearly the brain cannot represent a distinct likelihood for each of these scenes, calling into question the practicality of a Bayesian estimator in the visual system.

Here we propose a solution to this problem, based on a factorized approximation of the probability distribution. This approximation introduces a dramatic simplification, and yet the emerging decoding scheme is useful for coping with the fixational image drift. We present a neural network that executes this dynamic algorithm and could realistically be implemented in the visual cortex. It is based on reciprocal connections between two populations of …

[Figure labels: Retina, Poisson spikes, What, Where; panels "No Drift" and "Drift"]

Traditional models compute motion and form independently

[Diagram: the time-varying image feeds two separate pathways. Motion pathway: motion energy and pooling, then optic flow. Form pathway: feature extraction and pooling, then invariant pattern recognition.]

Motion and form must be estimated simultaneously

[Diagram, panels a and b. Labels: time-varying image; estimate motion (optic flow, regularization (smoothness)); motion natural scene statistics prior; estimate pattern form; pattern form natural scene statistics prior.]

Retinal image motion helps pattern discrimination

Ratnam, K., Domdei, N., Harmening, W. M., & Roorda, A. (2017). Benefits of retinal image motion at the limits of spatial vision. Journal of Vision, 17, 1–11.

[Figure 3. Stimulus motion improves acuity at the resolution limit. (A) In natural viewing, the stimulus (‘‘E’’) is fixed in space …]

Graphical model for separating form and motion (Alex Anderson, Ph.D. thesis)

[Graph nodes: Eye position (X), Pattern (S), Spikes (R, from LGN afferents)]

$$\hat{S} = \arg\max_S \log P(R \mid S) = \arg\max_S \log \sum_X P(R \mid X, S)\, P(X)$$

Given current estimate of position (X), update S

[Diagram labels: Retina; Internal Position Estimate, X; Internal Pattern Estimate, S]
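The pattern update can be illustrated with a toy sketch. Everything specific here is an assumption made for illustration and is not taken from the slides or the thesis: a 1-D circular retina, one cell per pattern pixel, Poisson spiking with rate equal to the pixel intensity, and the hypothetical names `route_spikes` and `pattern_estimate`. Under those assumptions, each spike count is routed to pattern coordinates in proportion to the current belief about eye position, and the pattern estimate is the routed spike total divided by the routed exposure time.

```python
def route_spikes(counts, pos_posterior, spike_sum, exposure, dt):
    """Accumulate one time step of retinal spike counts into pattern coordinates.

    counts[i]        spike count of retinal cell i during this time step
    pos_posterior[x] current belief that the image is shifted by x pixels
    spike_sum[j]     running belief-weighted spike total for pattern pixel j
    exposure[j]      running belief-weighted viewing time for pattern pixel j
    """
    n = len(counts)
    for x, p in enumerate(pos_posterior):
        if p == 0.0:
            continue
        for i, r in enumerate(counts):
            j = (i + x) % n  # assumed geometry: cell i sees pattern pixel i + x
            spike_sum[j] += p * r
            exposure[j] += p * dt


def pattern_estimate(spike_sum, exposure):
    """Rate estimate per pattern pixel: routed spikes per unit routed time."""
    return [s / e if e > 0.0 else 0.0 for s, e in zip(spike_sum, exposure)]


# Noise-free toy run: expected counts stand in for Poisson draws, and the
# position belief is a delta at the true shift.
pattern = [0.0, 10.0, 0.0, 0.0]
dt = 0.1
spike_sum = [0.0] * 4
exposure = [0.0] * 4
for x_true in (0, 1, 3):
    counts = [pattern[(i + x_true) % 4] * dt for i in range(4)]
    belief = [1.0 if x == x_true else 0.0 for x in range(4)]
    route_spikes(counts, belief, spike_sum, exposure, dt)
s_hat = pattern_estimate(spike_sum, exposure)
```

With a broader position belief the same routing blurs the estimate, which is one way to see why the position estimate matters for acuity.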

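Given current estimate of pattern (S), update X

[Diagram: inputs $R_{t+1}$ and $S_t$. The position belief $P(X_t \mid R_{0:t})$ is propagated to $P(X_{t+1} \mid R_{0:t})$, then combined with the likelihood $P(R_{t+1} \mid X_{t+1}, S = S_t)$ to give $P(X_{t+1} \mid R_{0:t+1})$.]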
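The position update has the shape of a standard predict-then-correct Bayesian filter, and a minimal sketch may make the two steps concrete. The specifics are assumptions for illustration only: a 1-D circular retina, a nearest-neighbour random walk for drift with per-step probability `p_step`, Poisson spike counts with a small `baseline` rate so that logarithms stay finite, and the hypothetical function names `predict` and `update`.

```python
import math


def predict(posterior, p_step):
    """P(X_t | R_0:t) -> P(X_t+1 | R_0:t) under an assumed random-walk drift."""
    n = len(posterior)
    stay = 1.0 - 2.0 * p_step
    return [
        stay * posterior[x]
        + p_step * (posterior[(x - 1) % n] + posterior[(x + 1) % n])
        for x in range(n)
    ]


def update(prior, counts, pattern, dt, baseline=1e-3):
    """Combine the predicted belief with P(R_t+1 | X_t+1, S = S_t)."""
    n = len(prior)
    log_post = []
    for x in range(n):
        if prior[x] <= 0.0:
            log_post.append(-math.inf)
            continue
        ll = 0.0
        for i, r in enumerate(counts):
            # Assumed geometry: cell i sees pattern pixel i + x.
            lam = (pattern[(i + x) % n] + baseline) * dt
            # Poisson log-likelihood; the log(r!) term does not depend on x.
            ll += r * math.log(lam) - lam
        log_post.append(ll + math.log(prior[x]))
    m = max(log_post)
    weights = [math.exp(v - m) for v in log_post]  # subtract max for stability
    z = sum(weights)
    return [w / z for w in weights]


# Toy run: a single bright pixel viewed at shift 2, starting from a flat belief.
pattern = [0.0, 0.0, 8.0, 0.0, 0.0, 0.0]
counts = [4, 0, 0, 0, 0, 0]  # cell 0 sees pattern pixel 2 when the shift is 2
belief = update(predict([1.0 / 6.0] * 6, p_step=0.1), counts, pattern, dt=0.5)
```

Working in log space and subtracting the maximum before exponentiating keeps the normalization stable when many cells contribute to the likelihood.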

Joint estimation of form and motion (Alex Anderson, Ph.D. thesis)

Motion helps estimation of pattern S

Motion restores acuity in the case of cone loss

Figure 3: Motion benefit during cone loss. a, Tumbling E with a retinal cone lattice that has 30 percent …

Including a prior over S

[Graph nodes: Eye position, Pattern, Spikes (from LGN afferents), Sparse prior]

$$S = DA$$

$$\hat{A} = \arg\max_A \left[ \log P(R \mid A) + \log P(A) \right]$$

where $P(A)$ is the sparse prior.
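To show what MAP inference under a sparse prior looks like in practice, here is a small sketch with a deliberately swapped-in likelihood. The slide's likelihood is over spikes R, whereas this sketch assumes a Gaussian likelihood on an already stabilized signal `y` and a Laplacian prior on A. That turns the arg max into an L1-regularized least-squares problem, solved here with iterative soft thresholding (ISTA). The names `ista`, `reconstruct`, `lam`, and `step` are hypothetical, and `step` is assumed to be no larger than the reciprocal of the largest eigenvalue of DᵀD.

```python
import math


def soft_threshold(v, t):
    """Shrink v toward zero by t; this is the proximal step for an L1 penalty."""
    return math.copysign(max(abs(v) - t, 0.0), v)


def reconstruct(D, a):
    """S = D A, with D given as a list of rows (pixels x basis functions)."""
    return [sum(row[k] * a[k] for k in range(len(a))) for row in D]


def ista(D, y, lam, step, n_iter=200):
    """Minimize 0.5 * ||y - D a||^2 + lam * ||a||_1 over coefficients a.

    This equals the negative log posterior (up to constants) under the assumed
    Gaussian likelihood and Laplacian prior, not the spike likelihood itself.
    """
    n_pix, n_atoms = len(D), len(D[0])
    a = [0.0] * n_atoms
    for _ in range(n_iter):
        resid = [s - t for s, t in zip(reconstruct(D, a), y)]
        grad = [sum(D[i][k] * resid[i] for i in range(n_pix))
                for k in range(n_atoms)]
        a = [soft_threshold(a[k] - step * grad[k], step * lam)
             for k in range(n_atoms)]
    return a
```

With an orthonormal D the solution is just the soft-thresholded projection of `y`, so small coefficients are set exactly to zero. With an overcomplete Gabor-like D the same iteration selects a few basis functions to explain the signal.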

Natural image pattern may be inferred with a sparse prior using a Gabor-like basis similar to V1 receptive fields

Figure 4: Neurons with structured receptive fields improve inference: a, A natural scene patch pro-

Main points

- The foveal representation in LGN, and again in cortex, is highly oversampled, in terms of number of neurons per ganglion cell, with respect to the periphery.
- Phenomena such as crowding and shape adaptation suggest a looser representation of shape in the periphery that is more subject to grouping or contextual influences than in the fovea.
- Neural circuits in the foveal portion of V1 must take into account estimates of eye position or motion in order to properly integrate spatial information.
- One possibility is separate populations of neurons that interact multiplicatively in order to explicitly disentangle form and motion.

The Cirrus HD-OCT is an SD-OCT device that uses a 50-nm bandwidth light source centered at 840 nm, has a 5-µm axial resolution, and obtains 27,000 axially oriented scans (A-scans) per second. Cirrus data are acquired over a 20° field (6 mm for an emmetropic eye) and have a scan depth of 2 mm. Two standard Cirrus scan protocols were used to