
White paper
Software & Data

Building a competitive advantage through data maturity

By Jeremy Peters: GISP, CIMP – Master Data Management & Data Quality Solutions Architect, Principal Consultant, Distinguished Engineer, Pitney Bowes Customer Information Management

Abstract

Data Maturity considers where data lives, how it is managed, data quality and the type of questions being answered with data. As organizations advance through the stages of Data Maturity, they can achieve a comprehensive analytics environment for effectively analyzing information to make decisions about future products, markets, and customers. A comprehensive analytics environment often includes an integrated, accurate, consistent, consolidated and enriched view of core data assets across the entire enterprise. This data environment can be provided by automating effective data integration, data cleaning, data enrichment, consolidation/entity resolution, and Master Data Management, as well as descriptive, predictive and prescriptive data analysis. In this paper, we describe six stages of Data Maturity and what organizations can achieve at each stage to enable effective decision making and gain a competitive advantage through a true single view of the business.

A Pitney Bowes white paper

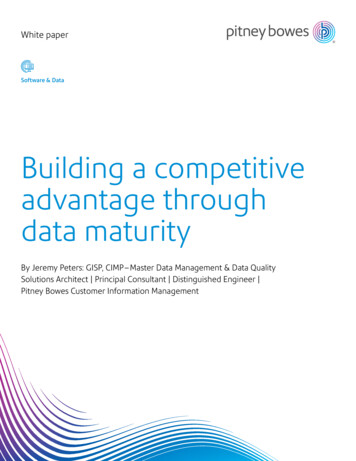

Data is evolving into a powerful resource for making insightful, forward-looking predictions and recommendations. This evolution is happening as compute and storage technology is improving, enabling more powerful analytical tools that are being used as a competitive advantage by more skilled data management professionals. The size, variety and update rate of data are growing fast, and quality is often an issue, as data comes from databases, web application logs, industry-specific transaction data and location-aware devices like mobile phones and many kinds of sensors. Today, full-time data management teams, including data scientists, analysts, and engineers, are responsible for creating and maintaining a single source of truth for the company. These teams are finding and fixing data quality issues, performing exploratory, predictive and prescriptive analyses to answer the tough questions, and enabling other analysts in individual business units.

Global data growth is characterized by variety, volume, velocity, and veracity. A new complexity is caused by many new data types that are now being collected in addition to master and transactional data, such as semi-structured data (e.g., email, electronic forms, etc.), unstructured data (e.g., text, images, video, social media, etc.), as well as sensor and machine-generated data. The volume of data that companies and governments are collecting is growing rapidly. They must now deal with data sources that are hundreds of terabytes or more that need to be stored and analyzed. The rate/velocity at which data needs to be created, processed and analyzed, often in real-time, is increasing in many applications. The new variety, great volume, and rate at which data needs to be processed and analyzed are creating even greater data veracity/data quality challenges. Data needs to be cleaned and data quality needs to be implemented before confidence can be established in the use of any analytics. Data needs to be properly managed, protected and used to effectively understand and improve marketing/sales, customer experience, and operations.

Data Maturity looks at how companies manage their data to answer the important questions that will have a significant impact on the business, including improving marketing effectiveness, lowering operating costs, increasing revenue, optimizing the supply chain, and improving the product mix and customer service. Data Maturity stages are a way to measure how advanced a company’s data management and analysis is. Data Maturity considers where data lives, how it is managed, data quality and the type of questions being answered with data.

In this paper, we describe the following six stages of Data Maturity and what organizations can achieve at each stage to enable effective decision making and gain a competitive advantage through a true single view of the business:

- Stage 1: Manual data collection and management
- Stage 2: Automated data collection, integration and management
- Stage 3: Data quality
- Stage 4: Master data consolidation
- Stage 5: Single view of the enterprise
- Stage 6: Getting results: data analytics to improve decision making

Stage 1: Manual data collection and management

In this stage, an organization has not fully committed to the importance of data management and how it applies that data to drive decision making across functions and use cases. Data ownership is fragmented across multiple departments with little governance and accountability. A complete inventory of data assets (Data Dictionary) does not exist. Data management staff have a limited range of skills and are responsible for a limited range of tasks such as modeling predictable data for sales or marketing. The way data is collected, managed, integrated, and prepared for use across the organization is often ad-hoc and uncoordinated, using multiple systems, with significant data quality issues. There is no information security for key information assets. Reporting is limited to tasks that are critical for business operations, with no formal Enterprise Information Management (EIM) tools or standards in place to support this, and spreadsheets are used as a primary means of reporting. The tools that are leveraged to help integrate and activate diverse data and insights are less than optimal. The methods by which an organization applies analytical methodologies to data are in their early stages of implementation. The beginnings of a data-driven culture may exist.

Stage 2: Automated data collection, integration and management

In this stage, data warehouse and data lake systems are well-defined, managed and governed. The planning, development, and execution of security policies and procedures to provide authentication, authorization, access, integrity, and auditing of data and information assets are established. In this and subsequent stages, the controls and processes of a robust data governance program are implemented to ensure that important data assets are formally managed throughout the enterprise.

A foundational Enterprise Information Management (EIM) system is also established to provide the basis for an extended analytical environment. One of the strengths of EIM is its ability to define data integration, data quality and data analysis transforms in graphical workflows. These transforms can now be pushed down into analytical databases and Hadoop, and analytics, rules, decisions, and actions can now be added into information management workflows to create automated analytical processes. Workflows can be built and re-used regularly for common analytical processing of both structured and unstructured data to speed up the rate at which organizations can consume, analyze and act on data. Workflows can be implemented as batch jobs and real-time web services.

EIM Data Discovery tools are used to scan an organization’s data resources to get a complete inventory of its data landscape. Structured data, unstructured data, and semi-structured data can be scanned to generate a library of documentation describing a company’s data assets and to create a metadata repository.

EIM Data Integration tools are used to integrate (join, filter and map) multiple different data sources. Different data sources containing the same entity (e.g. product) information can be mapped to the same logical model so the same EIM Consolidation (entity resolution) process can master data from multiple existing sources based on a common input data schema. This logical model represents the business entities and relationships that the business wants to understand for each physical model/data source. These data source technologies can include relational and analytical DBMS (e.g. Oracle and data warehouse appliances, respectively), big data non-relational data management platforms (e.g. Hadoop platform), NoSQL data stores (e.g., a graph database such as Neo4j), applications (e.g. SAP), cloud (e.g. Azure) and text-based sources (e.g. XML). These data sources can be integrated into a single logical model via batch ETL, real-time web service requests and/or Data Federation/Virtualization, among other integration methodologies.

Better customer experience and provider operations efficiency are achieved through automated Data Collection, Integration, and Management. However, data quality still needs to be implemented before confidence can be established in the use of any analytics.
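The mapping of several physical sources onto one logical model, described above for EIM Data Integration, can be illustrated with a minimal sketch. This is not a Pitney Bowes API: the `Product` model, the two source names (`erp`, `webshop`) and their column names are hypothetical, chosen only to show how a shared input schema lets one downstream consolidation process handle records from different systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    """Hypothetical logical model shared by every physical source."""
    source: str
    product_id: str
    name: str
    description: str


# Hypothetical mappings: logical field -> physical column, per source system.
FIELD_MAPS = {
    "erp": {"product_id": "ITEM_NO", "name": "ITEM_NAME", "description": "ITEM_DESC"},
    "webshop": {"product_id": "sku", "name": "title", "description": "body"},
}


def to_logical(source, record):
    """Map one physical record onto the logical Product model."""
    mapping = FIELD_MAPS[source]
    fields = {field: str(record[column]).strip() for field, column in mapping.items()}
    return Product(source=source, **fields)


def integrate(batches):
    """Flatten {source: [records]} into one list that follows the common schema."""
    return [
        to_logical(source, record)
        for source, records in batches.items()
        for record in records
    ]
```

Calling `integrate({"erp": erp_rows, "webshop": web_rows})` would yield a single list of `Product` objects, so any later matching step only has to understand one schema.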

Stage 3: Data quality

In this stage, organizations ensure the accuracy, timeliness, completeness, and consistency of their data so that the data is fit for use. Here, automated Data Profiling is used by organizations to identify any data quality issues related to core data duplication, accuracy, timeliness, completeness, and consistency. Data Quality Assessments (DQA) are prepared, using the data profiling results, to outline the data quality issues, the data quality rules to address each issue and the implementation of data quality rules. EIM Data Quality transforms can then be configured to integrate, parse/normalize, standardize, validate and enrich the data based on the implementation of each data quality rule outlined in the DQA report. EIM technology is also implemented to standardize data at the point of capture as data is entered into the system through on-line forms. For example, valid postal address candidates are suggested for the user to pick from as a user types in their address in an address data capture form. EIM Data Governance technologies and processes enable a business steward to review, correct and re-process records that failed automated processing or were not processed with a sufficient level of confidence. Standardization helps eliminate duplications and data-entry issues while enabling synchronization of data across the enterprise.

EIM Data Enrichment processes enhance an organization’s data by adding additional detail to make it a more valuable asset. Data enrichment can be based on spatial data, marketing data, or data from other sources that you wish to use to add additional detail to your data. Location is often used to relate and join disparate data sources that share a spatial relationship. For example, addresses can be geocoded to determine the latitude/longitude coordinates of each address and store those coordinates as part of each record. The geographic coordinate data could then be used to perform a variety of spatial calculations, such as finding the bank branch nearest the customer. The most common method of enrichment involves joining a data source to be enriched with a dataset with additional detail on their common attributes. Fuzzy and exact matching on similar attributes between a data source to be enriched and a dataset with additional detail is another methodology used.

Transliteration and Translation capabilities are used in EIM Data Quality technologies to help many organizations standardize global communication and information in different languages. Transliteration capabilities change texts from one script to another, such as Chinese Han to Latin, without translating the underlying words. Personal names from many countries, such as China, Taiwan and Hong Kong (e.g. Han script), Japan (e.g. Kanji script, Katakana script and Hiragana script), Korea (e.g. Hangul script) and Russia (Cyrillic script), can be transliterated in batch from non-Latin scripts to Latin script using Transliteration technology. Customer addresses in different languages and scripts can also be transliterated, parsed, standardized and validated in batch according to postal authority standards using Global Address Validation technologies. Information such as organization names can be effectively translated in batch from non-Latin scripts to English from many countries using translation technologies. This language and script-based standardization not only improves data quality but also improves the effectiveness of entity (person, place, object and/or thing) consolidation to determine which records represent the same entity. There is no need to maintain different non-consolidated versions of the same entity with varying information in different languages and scripts.

Data cleaning and enrichment not only improve the data quality of the source data but also improve the effectiveness of master data consolidation in Stage 4 to determine which records represent the same entity. Effective data quality and data governance enable confident analytics to better monitor the business and decide future lines of growth.
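The spatial enrichment example in this stage, finding the bank branch nearest a geocoded customer, can be sketched as follows. The sketch assumes that geocoding has already stored latitude/longitude on each record; the record layout (`lat`, `lon` keys) is hypothetical, and great-circle (haversine) distance is used here as one reasonable choice, since the paper does not specify a distance measure.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, a common approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_branch(customer, branches):
    """Return the branch record closest to the customer's geocoded address."""
    return min(
        branches,
        key=lambda b: haversine_km(customer["lat"], customer["lon"], b["lat"], b["lon"]),
    )
```

A linear scan like this is adequate for a handful of branches; for large reference datasets a spatial index would normally replace the `min` over all candidates.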

Stage 4: Master data consolidation

In this stage, organizations create and maintain a consistent consolidated master view of their core data assets across the enterprise with accurate and complete information. Data duplications and discrepancies are resolved both within and between existing systems. A holistic and consistent representation of an enterprise’s core data assets is created and managed. Some of the key types of master data or master entity types are:

- Customer
- Product
- Vendor
- Supplier
- Organization (e.g. business unit)
- Employee
- Geography (region/country)
- Location
- Service
- Fixed asset (e.g. building)

EIM Data Quality technologies are used to automate regular matching and consolidation processes to master regular updates to core data assets once they are standardized and normalized. Here, companies automate data matching routines to fix data consolidation and duplication issues. In this data matching routine, customizable exact and fuzzy matching rules can be used to compare and link a wide variety of effective identifiers, such as standardized versions of product name, description, and id in product records from various internal systems. This advanced fuzzy matching process maximizes matches and reduces false positives by using appropriate types of fuzzy matching algorithms and by computing a weight for each identifier based on its estimated ability to correctly identify a match or a non-match. These weights are then used to calculate the probability that two given records refer to the same master entity. Records that represent the same entity and are confidently matched and linked together can then be more easily consolidated to create a single master record with enriched information. A master record can be created from all matching entity records by evaluating attributes (e.g. name) from each record according to completeness, accuracy and/or timeliness to determine which attributes from which record should make up the master record. EIM Data Governance technologies and processes enable a business steward to review, correct and re-process records that did not match with a sufficient level of confidence. This master data is then updated in the appropriate downstream systems. This consolidated view is the starting point for effectively analyzing information to make decisions about future products, markets, and customers.
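The weighted matching described in this stage can be sketched with log-likelihood agreement weights in the style of Fellegi-Sunter record linkage. The paper does not name a specific algorithm, so this is an assumption, as are the identifier parameters below: `m` is the assumed probability that an identifier agrees for true matches, `u` the assumed probability that it agrees for non-matches, and `threshold` the fuzzy similarity needed to count as agreement. Python's standard `difflib.SequenceMatcher` stands in for whichever fuzzy matching algorithm a real EIM tool would use.

```python
import math
from difflib import SequenceMatcher

# Hypothetical parameters; real values would be estimated from profiled data.
IDENTIFIERS = {
    "product_id": {"m": 0.95, "u": 0.001, "threshold": 1.0},   # exact match only
    "name": {"m": 0.90, "u": 0.05, "threshold": 0.85},         # fuzzy match
    "description": {"m": 0.80, "u": 0.10, "threshold": 0.75},  # fuzzy match
}


def similarity(a, b):
    """Case-insensitive similarity in [0, 1] between two strings."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def match_weight(rec_a, rec_b):
    """Sum per-identifier weights: positive on agreement, negative otherwise."""
    total = 0.0
    for field, p in IDENTIFIERS.items():
        if similarity(rec_a[field], rec_b[field]) >= p["threshold"]:
            total += math.log2(p["m"] / p["u"])
        else:
            total += math.log2((1 - p["m"]) / (1 - p["u"]))
    return total


def match_probability(rec_a, rec_b, prior=0.01):
    """Turn the total weight into a probability, given an assumed prior match rate."""
    odds = prior / (1 - prior) * 2 ** match_weight(rec_a, rec_b)
    return odds / (1 + odds)
```

Under this scheme, pairs with a probability above an upper cutoff could be linked automatically, while pairs falling between a lower and upper cutoff would be the ones routed to a business steward for review.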

Stage 5: Single view of the enterprise

In this stage, organizations create and maintain a consistent, single master view of their core data assets across the enterprise with accurate and complete information. Master representations of the same data assets/entities are no longer held in multiple systems. Master Data is loaded and managed in a Master Data repository.

Some businesses are using Graph database technologies to store, manage, view, search, and analyze master data and their complex relationships to more effectively uncover important relationships and trends. Using Graph technologies also provides the opportunity to view, search, and analyze big data that is physically stored in a non-graph repository such as a big data non-relational data management platform (e.g. Hadoop platform). Banks do this with billions of transactions. Storing the transaction data in a Hadoop repository provides speed and scalability at low cost, while master customer and account data can be stored and maintained in a graph database. Data Federation/Virtualization integration, data consolidation, and graph database based technologies can be used to link, visualize, search and analyze master data stored in the graph database with the related information stored in the original source systems. This single consolidated view can provide insights into clusters of an organization’s assets (e.g. customers, products, fixed assets, etc.) and influential connections within and between those clusters.

Stage 6: Getting results: data analytics to improve decision making

In this stage, organizations are using analytic solutions to get meaning from the large volumes of data to help improve decision making. Companies have the processes, management, and technology to apply sophisticated data models to improve marketing effectiveness, lower operating costs, increase revenue, optimize their supply chain, and improve their product mix and customer service. A comprehensive analytic environment, powered by technologies such as Artificial intelligence (AI), Machine Learning, Business Intelligence, Location Intelligence, and Graph database technologies, is employed for a business to have a holistic view of the market and how a company competes effectively within that market. This includes:

- Descriptive Analytics helps determine what happened in the past using methodologies such as data aggregation and data mining
- Predictive Analytics helps determine what is likely to happen in the future using statistical models and forecasting
- Prescriptive Analytics helps determine how to achieve the best outcome and identify risks to make better decisions using optimization and simulation algorithms.

Artificial intelligence (AI) technologies make it possible for machines to learn from experience, adjust to new inputs and perform human-like tasks. Machine Learning, a branch of AI, is a method of data analysis that automates analytical model building. Machine learning algorithms build a mathematical model based on sample data, known as “training data”, to make predictions or decisions without being explicitly programmed to perform the task. For example, Machine Learning statistical models are used for profit-impacting customer behavior and propensity analysis by identifying and grouping all sales transactions by customer in order to predict customer behaviors and propensities.

Location intelligence (LI) technologies make it possible to derive meaningful insight from geospatial data relationships to solve a particular problem. Location is often used to relate and join disparate data sources that share a spatial relationship. Location intelligence visualization can help identify patterns and trends by seeing and analyzing data in a map view with spatial analysis tools such as thematic maps and spatial statistics. Location intelligence can help find “data needles in a data haystack” by using spatial relationships to filter relevant data. Location intelligence technologies can provide data processing, visualization, and analysis tools for both the time and geographic dimensions of data, which helps expose important insights and provide actionable information.

Graph database technologies are being used to store, view, search, and analyze master data and related entities (e.g. transactions) and their complex relationships to uncover important relationships and trends. Many businesses we interact with every day, such as Google, Facebook, LinkedIn, and Amazon, use graph databases and analysis. Graphs come in many shapes and sizes, suitable for an extremely wide variety of business problems, such as Google Web Site Ranking Graphs, Amazon Market Basket Graphs, and Facebook and LinkedIn Social Network Graphs. Analysis of social networks, such as Facebook and LinkedIn, can provide insights into clusters of people or organizations and influential connections within and between those clusters. The importance of technologies suited to dealing with complex relationships has increased with the growth of Big Data. When properly designed and executed, graphs can be one of the most intuitive ways to analyze information. Having each subject represented only once with all of its relationships, in the context of all of the other subjects and their relationships, makes it possible to see how everything is related at the big-picture level. Centrality algorithms and influence analysis can be applied to measure the importance and significance of individual entities and relationships.
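As a small illustration of the centrality idea mentioned above, the sketch below computes degree centrality, the simplest centrality measure, over an undirected graph given as a list of edges. It is a plain-Python stand-in for what a graph database or graph library would provide; the paper does not say which centrality algorithm is used, and the function name and edge-list input format are assumptions made for this example.

```python
from collections import defaultdict


def degree_centrality(edges):
    """Fraction of the other nodes each node is directly connected to.

    `edges` is an iterable of (node_a, node_b) pairs in an undirected graph.
    Self-loops are ignored and duplicate edges are counted once.
    """
    neighbors = defaultdict(set)
    for a, b in edges:
        if a == b:
            continue
        neighbors[a].add(b)
        neighbors[b].add(a)

    node_count = len(neighbors)
    if node_count < 2:
        return {node: 0.0 for node in neighbors}
    return {node: len(adjacent) / (node_count - 1) for node, adjacent in neighbors.items()}
```

For a master customer linked to several accounts, the customer node would score highest, which is the kind of "influential connection" signal the text describes. Measures such as betweenness or PageRank capture influence beyond direct neighbors and would usually come from a dedicated graph engine.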

Conclusion

Data Maturity considers where data lives, how it is managed, data quality and the type of questions being answered with data. As organizations advance through the stages of Data Maturity, they can achieve a comprehensive analytics environment for effectively analyzing information to make decisions about future products, markets, and customers. A comprehensive analytics environment often includes an integrated, accurate, consistent, consolidated and enriched view of core data assets across the entire enterprise. This data environment can be provided by automating effective data integration, data cleaning, data enrichment, consolidation/entity resolution, and Master Data Management, as well as descriptive, predictive and prescriptive data analysis. For many organizations, advanced Data Maturity can significantly improve marketing effectiveness, lower operating costs, increase revenue, optimize their supply chain, and improve their product mix and customer service.

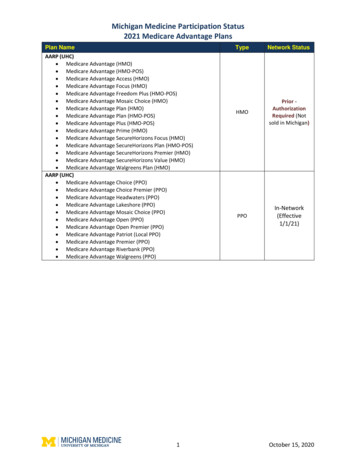

Data maturity matrix

Columns: Stage 1: Manual data collection and management; Stage 2: Automated data collection, integration and management; Stage 3: Data quality. Entries under each dimension are listed in column order.

People/organization vision & strategy

- Some management awareness, but no enterprise-wide buy-in
- Org makes strategic decisions without adequate information
- Dependent on a few skilled individuals
- Growing executive awareness of the value of data assets in some business areas
- Power of information is understood but strategy is still project oriented
- Value of information realized & shared on cross-functional projects

Data governance

- Little governance and accountability
- A Data Governance program is beginning to be established, including documenting the risks related to uncontrolled information assets
- Org formalizes objectives for information sharing to achieve operational efficiency
- Data Governance technologies enable a business steward to review, correct and re-process records that were not processed with a sufficient level of confidence

Data architecture

- No formal information architecture contains the principles, requirements and models to guide teams on how to share enterprise information
- Information architecture framework exists, but does not extend to new data sources or advanced analytic capabilities
- Best practice information architectural patterns for big data and analytics are defined and have been applied in certain areas

Metadata

- Little or no business metadata
- No policy or organizational strategy
- IT organization has become aware of metadata but does not manage it strategically
- EIM Data Discovery tools are used to scan an organization’s data resources to get a complete inventory of their data
- Inconsistent asset tagging
- Department-level common repositories and policies

Data integration & interoperability

- No integration strategy
- Minimal data integration
- Each integration is manual, custom, typically point-to-point, and done on a one-off basis
- Organization is beginning to recognize integration as a challenge
- EIM Data Integration tools are beginning to be used to integrate multiple different data sources
- APIs published (e.g. REST, SOAP), or Web-Based Synchronization of APIs
- A few, limited cloud connectors and services are offered
- API documentation and a developer portal may be available

Data quality

- Significant data quality issues
- Data quality activity is reactive
- No capability for identifying data quality expectations
- Limited anticipation of certain data issues
- Data quality expectations start to be articulated
- Simple errors are identified and reported
- Dimensions of data quality are identified and documented
- Organizations begin to ensure the accuracy, timeliness, completeness, and consistency of their data so that the data is fit for use
- Data Quality Assessments (DQA) are prepared, using the data profiling results, to outline the data quality issues and the data quality rules to address each issue
- EIM Data Quality transforms are used to integrate, parse/normalize, standardize, validate and enrich data

Reference & master data

- Manually maintain trusted sources
- Siloed structure with limited integration
- IT organization takes steps toward cross-department data sharing, such as Master Data Management (MDM)
- Tactical MDM implementations that are limited in scope and target a specific division

Data analytics/measurement

- Reporting is limited to tasks that are critical for business operations and spreadsheets are used as a primary means of reporting
- Full benefits of analytics poorly understood, siloed and ad hoc activities, yet reasonable results
- Analytics are used to inform decision makers why something in the business has happened

Data security

- There is no information security for key information assets
- Planning, development and execution of security policies and procedures for information assets is established
- More advanced use of security technologies and adoption of new tools for incident detection and security analytics

Columns: Stage 4: Master data consolidation; Stage 5: Single view of the enterprise; Stage 6: Getting results: data analytics to improve decision making. Entries under each dimension are listed in column order.

People/organization vision & strategy

- Senior Mgt. recognizes enterprise information sharing as critical for improved business performance and, therefore, moves from project-level information management to EIM
- Senior management embraces EIM then markets and communicates it
- Organization has implemented significant portions of EIM, including a consistent information infrastructure
- Senior management sees information as a competitive advantage and exploits it to create value and increase efficiency
- IT organization strives to make information management transparent to users

Data governance

- Governance councils and a formal data quality program, with assigned data stewards, help manage information as an asset
- Data Governance technologies enable a business steward to review, correct and re-process records that did not consolidate with a sufficient level of confidence
- Governance council and steering committees resolve problems pertaining to cross-functional information
- Monitoring and enforcement of information governance is automated throughout the enterprise

Data architecture

- Information architecture and associated standards are well defined and cover most of the volume, velocity, variety and veracity capabilities
- Enterprise information architecture (EIA) acts as a guide for the EIM program, ensuring that information is exchanged across the organization to support the enterprise business strategy
- Organization sets standards for information management technologies
- Information Architecture fully underpins business strategies to enable competitive advantage

Metadata

- Organization manages metadata and resolves semantic inconsistencies to support reuse and transparency
- The organization manages metadata and resolves semantic inconsistencies to support reuse and transparency
- The organization has achieved metadata management and semantic reconciliation

Data integration & interoperability

- An API integration strategy has been implemented, exposing APIs
- A single integration strategy group is responsible for putting pre-packaged reusable workflows in place
- Sophisticated integration user interface, orchestration layer and abstraction layer exist
- Many pre-packaged connections are offered along with the ability to integrate to things and distribute endpoints into leading platforms and marketplaces
- Organization has achieved seamless information flows and data integration across the IT portfolio

Data quality

- Organizations create and maintain a consistent consolidated master view of their core data assets across the enterprise with accurate and complete information
- Data duplications and discrepancies are resolved both within and between existing systems on a cyclic schedule
- Data quality benchmarks defined
- Observance of data quality expectations tied to individual performance targets
- Industry proficiency levels are used for anticipating and setting improvement goals
- Data quality maturity governance framework is in place such that enterprise-wide performance measurements can be used for identifying opportunities for improved systemic data quality

Reference & master data

- Enterprise business solution provides a single source of the truth with closed-loop data quality capabilities
- Master data is updated in the appropriate downstream systems
- MDM provides a single version of the truth, with closed-loop data quality capabilities
- Master Data is loaded and managed in a SINGLE Master Data repository
- Master representations of the same data assets/entities are no longer held in multiple systems
- Organization has achieved integrated master data domains and unified content

Data analytics/measurement

- Analytical Insight is used to predict the likelihood of what will happen to some current business activity
- Predictive Analytics is used to help optimize an organization’s decision making so that the best actions are taken to maximize business value
- Descriptive, predictive and prescriptive analytical insight optimizes business processes and is automated where possible
- A comprehensive analytic environment, powered by technologies such as Artificial intelligence (AI), Machine Learning, Business Intelligence, Location Intelligence and Graph database technologies, is employed in order for a business to have a holistic view of the market and how a company competes effectively within that market

Data security

- Security policie
