
Intro to Incast, Head of Line Blocking, and Congestion Management
Live Webcast
June 18, 2019, 10:00 am PT

Today's Presenters
Tim Lustig, Mellanox
J Metz, SNIA Board of Directors, Cisco
Sathish Gnanasekaran, Brocade/Broadcom
John Kim, SNIA NSF Chair, Mellanox
© 2019 Storage Networking Industry Association. All Rights Reserved.


SNIA Legal Notice
The material contained in this presentation is copyrighted by the SNIA unless otherwise noted.
Member companies and individual members may use this material in presentations and literature under the following conditions:
- Any slide or slides used must be reproduced in their entirety without modification.
- The SNIA must be acknowledged as the source of any material used in the body of any document containing material from these presentations.
This presentation is a project of the SNIA.
Neither the author nor the presenter is an attorney and nothing in this presentation is intended to be, or should be construed as legal advice or an opinion of counsel. If you need legal advice or a legal opinion please contact your attorney.
The information presented herein represents the author's personal opinion and current understanding of the relevant issues involved. The author, the presenter, and the SNIA do not assume any responsibility or liability for damages arising out of any reliance on or use of this information.
NO WARRANTIES, EXPRESS OR IMPLIED. USE AT YOUR OWN RISK.

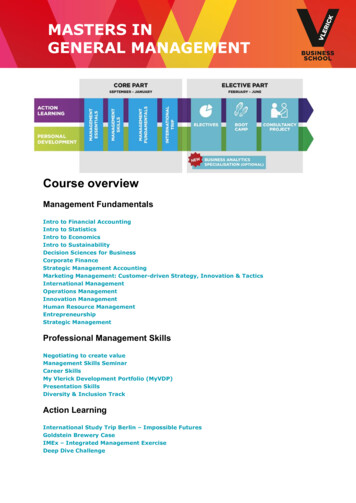

Agenda
- Why This Presentation?
- Ethernet
- Fibre Channel
- InfiniBand
- Q&A

Why This Presentation?
- All networks are susceptible to congestion
- Advances in storage technology are placing unusual burdens on the network
- Higher speeds increase the likelihood of congestion
- Planning becomes more important than ever

Fixing the Wrong Assumptions
Things that people get wrong about the network*:
- The network is reliable
- Latency is zero
- Bandwidth is infinite
- The network is secure
- Topology doesn't change
- There is one administrator
- Transport cost is zero
- The network is homogeneous
*Gosling, James. 1997. The 8 fallacies of distributed computing.

Ethernet
J Metz

Application-Optimized Networks
- You do not need to, and should not, design a network that requires a lot of buffering
- Capacity and over-subscription are not a function of the protocol (NVMe, NAS, FC, iSCSI, CEPH) but of the application I/O requirements
[Figure: 5 millisecond view of link traffic; congestion threshold exceeded]
Data Centre Design Goal: Optimizing the balance of end-to-end fabric latency with the ability to absorb traffic peaks and prevent any associated traffic loss
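Because over-subscription depends on the application's I/O pattern rather than the protocol, it can help to compare a switch's static over-subscription ratio with the workload's actual peak demand. The following is a minimal Python sketch of that arithmetic; the port counts, port speeds, and per-host peak demand are hypothetical values chosen only for illustration.

```python
def oversubscription_ratio(downlinks_gbps, uplinks_gbps):
    """Total host-facing bandwidth divided by total uplink bandwidth."""
    return sum(downlinks_gbps) / sum(uplinks_gbps)


def uplink_utilization(peak_demand_gbps, uplinks_gbps):
    """Fraction of uplink capacity consumed by the application's peak demand."""
    return peak_demand_gbps / sum(uplinks_gbps)


# Hypothetical leaf switch: 48 x 25 Gb/s host ports, 6 x 100 Gb/s uplinks.
downlinks = [25] * 48
uplinks = [100] * 6
print(f"Static ratio: {oversubscription_ratio(downlinks, uplinks):.1f}:1")  # 2.0:1

# Hypothetical workload: all 48 hosts peak at 10 Gb/s at the same moment.
print(f"Peak uplink utilization: {uplink_utilization(48 * 10, uplinks):.0%}")  # 80%
```

In this made-up case a 2:1 design is adequate because the application never asks for more than the uplinks can carry; a workload whose hosts all burst at line rate would not fit.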

Variability in Packet Flows
- Small Flows/Messaging (Heart-beats, Keep-alive, delay-sensitive application messaging)
- Small to Medium Incast (Hadoop Shuffle, Scatter-Gather, Distributed Storage)
- Large Flows (HDFS Insert, File Copy)
- Large Incast (Hadoop Replication, Distributed Storage)

Understanding Incast
- Synchronized TCP sessions arrive at a common congestion point (all sessions starting at the same time)
- Each TCP session will grow its window until it detects an indication of congestion (packet loss in a normal TCP configuration)
- All TCP sessions back off at the same time
[Figure: synchronized flows converging on a switch buffer, causing buffer overflow]
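The synchronized behavior described above can be reproduced with a toy round-based model. This Python sketch assumes identical senders that start together, grow their windows by one packet per round, and share a single tail-drop switch buffer; as a simplification, every sender is assumed to see loss in any round in which the buffer overflows, so they all halve together. The buffer size, drain rate, and sender count are hypothetical.

```python
def simulate_incast(num_senders=8, buffer_pkts=64, drain_per_round=32, rounds=16):
    """Toy model of synchronized TCP senders sharing one switch buffer.

    Returns a list of (round, arrivals, queue_after, dropped) tuples.
    """
    cwnds = [1] * num_senders  # every session starts at the same time
    queue = 0
    trace = []
    for r in range(rounds):
        arrivals = sum(cwnds)
        # Queue grows by what arrives minus what the egress port drains.
        queue = max(queue + arrivals - drain_per_round, 0)
        dropped = max(queue - buffer_pkts, 0)  # tail drop beyond the buffer size
        queue -= dropped
        if dropped:
            # Simplification: every sender sees loss and halves at once.
            cwnds = [max(1, c // 2) for c in cwnds]
        else:
            # Additive increase: one more packet per round per sender.
            cwnds = [c + 1 for c in cwnds]
        trace.append((r, arrivals, queue, dropped))
    return trace


for r, arrivals, queue, dropped in simulate_incast():
    flag = "  <- overflow, all senders back off" if dropped else ""
    print(f"round {r:2d}: arrivals={arrivals:3d} queue={queue:3d} dropped={dropped:2d}{flag}")
```

Loss arrives in bursts every few rounds, and each burst cuts the aggregate offered load in half at once, which is the synchronized back-off that makes incast hard to absorb.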

Incast Collapse
- Incast collapse is a very specialized case, not a permanent condition
- It would need every flow to arrive at exactly the same time
- The more common problem is that the buffer fills up because of elephant flows
- Historically, buffers handle every flow the same
- Could potentially be solved with bigger buffers, particularly with short frames
- One solution is to have switch buffers larger than the incast burst (avoiding overflow altogether), but this adds latency

Buffering the Data Center
- Large "elephant flows" can overrun available buffers
- Methods of solving this problem:
  - Increase buffer sizes in the switches
  - Notify the sender to slow down before TCP packets get dropped

Option 1: Increase Buffers
- Leads to "buffer bloat"
- Consequence: as bandwidth requirements get larger, buffer sizes grow and grow
- What happens behind the scenes:
  - Large TCP flows occupy most of the buffer
  - Feedback signals are sent only when buffer occupancy is high
  - Large buffer occupancy can't increase link speed, but it does cause long latency
  - A healthy dose of drops is necessary for TCP congestion control
  - Removing drops (or ECN marks) is like turning off TCP congestion control
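The point that a fuller buffer adds delay without adding bandwidth can be made concrete: a packet that joins the back of a queue waits roughly the time the egress link needs to serialize everything ahead of it. This Python sketch uses that simple model (it ignores propagation and processing delay), and the 12 MB buffer size is a hypothetical figure.

```python
def queueing_delay_ms(queued_bytes, link_gbps):
    """Time for the egress link to drain `queued_bytes`, in milliseconds."""
    bits = queued_bytes * 8
    return bits / (link_gbps * 1e9) * 1e3


BUFFER_BYTES = 12_000_000  # hypothetical deep-buffer switch port, fully occupied

for speed in (10, 25, 100):
    delay = queueing_delay_ms(BUFFER_BYTES, speed)
    print(f"{speed:>3} Gb/s link: full buffer adds ~{delay:.2f} ms of latency")
```

The link still transmits at line rate in every case; the deep buffer only changes how long packets wait, which is why large occupancy raises latency without raising throughput.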

Option 2: Telling the Sender to Slow Down
- Instead of waiting for TCP to drop packets and then adjust the flow rate, why not simply tell the sender to slow down before the packets get dropped?
- Technologies such as Data Center TCP (DCTCP) use Explicit Congestion Notification (ECN) to instruct the sender to do just this
- In standard TCP, dropped packets are the signal to modify the flow of packets being sent in a congested network

Explicit Congestion Notification (ECN)
- IP Explicit Congestion Notification (ECN) is used for congestion notification
- ECN enables end-to-end congestion notification between two endpoints on an IP network
- In case of congestion, ECN gets the transmitting device to reduce its transmission rate until congestion clears, without pausing traffic
[Figure: initialization, data, and congestion-experienced exchange between two endpoints]
ECN field values (the two low-order bits of the IP TOS/Traffic Class byte, next to the DiffServ field):
- 00: Not ECN-Capable Transport (Not-ECT)
- 10: ECN-Capable Transport, ECT(0)
- 01: ECN-Capable Transport, ECT(1)
- 11: Congestion Experienced (CE)
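Per RFC 3168, the ECN field occupies the two least-significant bits of the byte that also carries the 6-bit DSCP. The Python sketch below decodes and sets those bits on a raw TOS/Traffic Class byte. It only shows the bit manipulation, not how a real router or host stack applies it, and the example DSCP value is arbitrary.

```python
from enum import IntEnum


class ECN(IntEnum):
    """ECN codepoints (RFC 3168), the low two bits of the TOS/Traffic Class byte."""
    NOT_ECT = 0b00
    ECT1 = 0b01
    ECT0 = 0b10
    CE = 0b11


def dscp_of(tos: int) -> int:
    """Upper six bits: the DiffServ codepoint."""
    return tos >> 2


def ecn_of(tos: int) -> ECN:
    """Lower two bits: the ECN codepoint."""
    return ECN(tos & 0b11)


def mark_congestion(tos: int) -> int:
    """Set CE on an ECN-capable packet; leave Not-ECT packets unchanged.

    A congested router cannot mark a Not-ECT packet; it would drop it instead.
    """
    if ecn_of(tos) == ECN.NOT_ECT:
        return tos
    return tos | 0b11


tos = (46 << 2) | 0b10  # DSCP 46 with ECT(0): the sender is ECN-capable
marked = mark_congestion(tos)
print(dscp_of(marked), ecn_of(marked).name)  # 46 CE
```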

Data Center TCP (DCTCP)
- Congestion is indicated quantitatively (reduce load prior to packet loss)
- React in proportion to the extent of congestion, not merely its presence
- Reduces variance in sending rates, lowering queuing requirements

| ECN marks | TCP | DCTCP |
|---|---|---|
| 1011110111 | Cut window by 50% | Cut window by 40% |
| 0000000001 | Cut window by 50% | Cut window by 5% |

- Mark based on instantaneous queue length
- Fast feedback to better deal with bursts
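The 40% and 5% figures in the table follow from the DCTCP rule in RFC 8257: the sender keeps a moving estimate, alpha, of the fraction of its packets that were ECN-marked and, on congestion, sets cwnd = cwnd * (1 - alpha / 2), whereas standard TCP halves the window on any congestion signal. The Python sketch below reproduces the table under the assumption that alpha has already converged to the marked fraction of the window shown; the gain g = 1/16 and the starting alpha of 1.0 are illustrative choices.

```python
def marked_fraction(marks: str) -> float:
    """Fraction of packets in one window that carried an ECN mark ('1')."""
    return marks.count("1") / len(marks)


def update_alpha(alpha: float, fraction: float, g: float = 1 / 16) -> float:
    """DCTCP's moving average of the marked fraction, updated once per window."""
    return (1 - g) * alpha + g * fraction


def tcp_cut(marks: str) -> float:
    """Standard TCP: any congestion signal halves the window."""
    return 0.5 if "1" in marks else 0.0


def dctcp_cut(alpha: float) -> float:
    """DCTCP: cwnd = cwnd * (1 - alpha / 2), i.e. a cut of alpha / 2."""
    return alpha / 2


for marks in ("1011110111", "0000000001"):
    alpha = marked_fraction(marks)  # assume alpha has converged to this window
    print(f"{marks}: TCP cuts {tcp_cut(marks):.0%}, DCTCP cuts {dctcp_cut(alpha):.0%}")

# The moving average converges toward the observed marking rate.
alpha = 1.0
for _ in range(100):
    alpha = update_alpha(alpha, 0.1)
print(f"alpha after 100 windows at 10% marking: {alpha:.3f}")
```

Reacting to the fraction of marks, rather than to their mere presence, is what lets DCTCP trim its rate gently under light congestion while still backing off hard when most packets are marked.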

DCTCP and Incast Collapse
- DCTCP will prevent incast collapse for long-lived flows
- Notification of congestion via ECN arrives prior to packet loss
- The sender is informed that congestion is happening and can slow down traffic
- Without ECN, the packet could have been dropped due to congestion, and the sender would notice this via TCP timeout
[Figure: DCTCP-enabled IP stacks at both endpoints, connected through an ECN-enabled switch]
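ECN can warn the sender before loss because the switch marks at a threshold well below its buffer limit. This Python sketch feeds a burst into one queue that sets CE when the instantaneous occupancy exceeds a threshold K (the DCTCP marking rule) and tail-drops only when the buffer is full. It ignores draining during the burst, and the burst size, buffer size, and K are hypothetical.

```python
def enqueue_burst(burst, capacity, k):
    """Return (marked, dropped, first_mark, first_drop) for a back-to-back burst."""
    queue = 0
    marked = dropped = 0
    first_mark = first_drop = None
    for i in range(burst):
        if queue >= capacity:
            dropped += 1  # buffer full: tail drop
            if first_drop is None:
                first_drop = i
            continue
        if queue > k:
            marked += 1  # occupancy above K: set CE, still enqueue the packet
            if first_mark is None:
                first_mark = i
        queue += 1
    return marked, dropped, first_mark, first_drop


marked, dropped, first_mark, first_drop = enqueue_burst(burst=100, capacity=80, k=20)
print(f"first CE mark on packet {first_mark}, first drop on packet {first_drop}")
print(f"{marked} packets marked, {dropped} dropped")
```

In a real switch the queue also drains, and DCTCP senders start slowing down once the first marks reach them, so the drops shown here are what a sender that ignored the marks would eventually hit.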

Fibre Channel
Sathish Gnanasekaran

Congestion
- Offered traffic load is greater than the drain rate
  - The receive port does not have memory to receive more frames
- Non-lossless networks
  - Receiver drops packets, end-points retry
  - Retries cause significant performance impact
- Lossless networks
  - Receiver paces transmitter; transmitter sends only when allowed
  - Seamlessly handles bursty traffic
  - Sustained congestion spreads to upstream ports, causing significant impact

Fibre Channel Credit Accounting
- When a link comes up, each side tells the other how much frame memory it has
  - Known as "buffer credits"
- Transmitters use buffer credits to track the available receiver resources
  - Frames are sent up to the number of available buffer credits
- Receivers tell transmitters when frame memory becomes available
  - Available buffer credit is signaled by the receiver ("receiver ready", R_RDY)
  - Another frame can be sent
[Figure: two Fibre Channel ports, each with TX, RX, and frame memory; a Fibre Channel frame flows one way and R_RDY flows back]
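The credit exchange above amounts to a counter on the transmitter that goes down by one per frame sent and up by one per R_RDY received. This Python sketch models one direction of a link; the class and method names are hypothetical, and login-time credit negotiation is reduced to a constructor argument.

```python
from collections import deque


class CreditedLink:
    """One direction of a Fibre Channel link with buffer-to-buffer credit."""

    def __init__(self, bb_credit: int):
        self.credits = bb_credit  # advertised by the receiver when the link comes up
        self.rx_frame_memory = deque()  # frames sitting in the receiver's buffers

    def send(self, frame) -> bool:
        """Transmit only if a credit is available; otherwise the sender must wait."""
        if self.credits == 0:
            return False
        self.credits -= 1
        self.rx_frame_memory.append(frame)
        return True

    def receiver_processes_one(self) -> bool:
        """Receiver frees one buffer and returns an R_RDY, restoring one credit."""
        if not self.rx_frame_memory:
            return False
        self.rx_frame_memory.popleft()
        self.credits += 1  # R_RDY received by the transmitter
        return True


link = CreditedLink(bb_credit=3)
results = [link.send(f"frame-{i}") for i in range(5)]
print(results)  # [True, True, True, False, False]: frames 4 and 5 wait for credit
link.receiver_processes_one()
print(link.send("frame-3"))  # True: one R_RDY came back, so one more frame can go
```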

Fibre Channel
- Fibre Channel is a credit-based, lossless network
  - Not immune to congestion
- Fibre Channel network congestion has three causes
  - Lost Credit occurs when the link experiences errors
  - Credit Stall occurs when frame processing slows or stops
  - Oversubscription occurs when the throughput demand exceeds link speed

Congestion Cause: Lost Credit
Lost Credit: makes us slow down
- Lost credit due to link errors
  - Frames are not received
  - Credits are not returned
- Credit tracking out of sync
  - Transmitter sees fewer credits
  - Receiver sees fewer frames
- Slows transmission rate
  - Transmitter waits longer for credit on average
- A link reset is required to recover
[Figure: frames and R_RDYs exchanged across a link with errors]
Lost credit causes congestion by reducing the frame transmission rate!
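Why a few lost credits slow a link can be estimated with a simple model: each credit lets one frame be outstanding per credit cycle, which is the time to serialize a frame plus the round trip for the frame to reach the receiver and its R_RDY to come back. The Python sketch below assumes the transmitter always has frames queued and that the receiver returns R_RDY immediately; the timing values are hypothetical.

```python
def max_link_utilization(credits: int, frame_time_us: float, round_trip_us: float) -> float:
    """Upper bound on link utilization for a given number of usable credits.

    Each credit is tied up for one frame time plus one round trip before
    its R_RDY returns, so at most `credits` frames can be sent per cycle.
    """
    if credits <= 0:
        return 0.0
    cycle_us = frame_time_us + round_trip_us
    return min(1.0, credits * frame_time_us / cycle_us)


# Hypothetical link: 1 us to serialize a frame, 9 us round trip.
healthy = max_link_utilization(credits=8, frame_time_us=1.0, round_trip_us=9.0)
after_loss = max_link_utilization(credits=8 - 4, frame_time_us=1.0, round_trip_us=9.0)
print(f"8 credits: {healthy:.0%} of line rate; after losing 4: {after_loss:.0%}")
```

Because nothing returns the lost credits on its own, the lower rate in this model persists until something restores the credit count, such as a link reset.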

Congestion Cause: Device Credit Stall
Credit Stalled Device: makes us wait
- Credit Stall occurs due to receive (RX) device misbehavior
  - Frames not processed
  - Buffers not freed, credits not returned
- Prevents frame flow
  - Frames for the device stack up in the fabric waiting for credit
  - Fabric resources are held by …
- Frame flow slows for other devices
  - Frames for other devices can't move
Credit Stalled Devices cause congestion by not sending "I'm Ready!"

Congestion Cause: Port Oversubscription
Oversubscribed Device: asks for too much
- Oversubscription occurs due to resource mismatches
  - Device asks for more data than the link speed allows
- Congestion slows upstream ports
  - Throughput on upstream ports is reduced by fan-in to the congested port
  - Upstream port congestion is worse
- Slows frame flow
  - Frame flow slowed due to lower drain rate
  - Affects the congestion source as well as unrelated flows
[Figure: a server (HBA) repeatedly signals "I'm Ready" while frames converge on an oversubscribed link]
Oversubscribed Devices cause congestion by asking for more frames than the interface on the path can handle

Congestion Impact
- Congestion results in sub-optimal flow performance
- Sustained congestion radiates to upstream ports
  - Congestion spreads from the receiver to all upstream ports
  - Affects not only the congestion source but also unrelated flows
  - Can affect a significant number of flows
- Mild to moderate congestion results in sluggish application performance
- Severe congestion results in application failure

Fibre Channel Congestion Mitigation Solutions
- Detection
  - Alerts notify SAN administrators of link and device errors
- Credit Recovery
  - Link reset resets the credits on a link
  - Credit recovery automatically detects when a credit has been lost and restores it
- Isolation
  - Port fencing isolates misbehaving links and/or devices
  - Virtual channels allow "victim" flows to bypass congested flows
  - Virtual fabrics allow SAN administrators to group and isolate application flows efficiently
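Automatic credit recovery needs a way to notice that an R_RDY went missing without resetting the link. Fibre Channel standardizes its own BB_Credit recovery mechanism, which this Python sketch does not reproduce; it only illustrates the general checkpoint idea under simplified assumptions: the receiver periodically reports how many R_RDYs it has sent since the last checkpoint, the link delivers everything in order, and the transmitter restores any credits it never saw come back. All class and method names are hypothetical, and only the lost-R_RDY case is handled.

```python
class CheckpointedTransmitter:
    """Transmitter side of a simplified, hypothetical credit-recovery scheme.

    Handles only the case where an R_RDY is lost on the way back.
    """

    def __init__(self, bb_credit: int):
        self.credits = bb_credit
        self.r_rdy_since_checkpoint = 0

    def on_frame_sent(self):
        self.credits -= 1

    def on_r_rdy(self):
        self.credits += 1
        self.r_rdy_since_checkpoint += 1

    def on_checkpoint(self, r_rdy_sent_by_receiver: int) -> int:
        """Receiver reports how many R_RDYs it sent; restore any that never arrived."""
        lost = r_rdy_sent_by_receiver - self.r_rdy_since_checkpoint
        self.credits += lost
        self.r_rdy_since_checkpoint = 0
        return lost


tx = CheckpointedTransmitter(bb_credit=8)
for _ in range(4):
    tx.on_frame_sent()  # 4 credits in use
for _ in range(3):
    tx.on_r_rdy()  # receiver sent 4 R_RDYs, but one was corrupted in transit
print(tx.credits)  # 7: one credit silently lost
restored = tx.on_checkpoint(r_rdy_sent_by_receiver=4)
print(restored, tx.credits)  # 1 8: credit restored without a link reset
```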

InfiniBand
John Kim

InfiniBand: The Basics
- InfiniBand is a credit-based, lossless network
  - Lossless because the transmitter cannot send unless the receiver has resources
  - Credit-based because credits are used to track those resources
  - Low latency with RDMA and hardware offloads
- InfiniBand network congestion has one main cause
  - Oversubscription occurs when the I/O demand exceeds the available resources
  - Can be caused by incast
  - Can also come from hardware failure

InfiniBand: Congestion
- If one destination is congested, the sender waits
  - Since the network is lossless, senders pause rather than drop packets
  - If the pause lasts too long, it causes timeout problems
- If one switch is congested too long, congestion can spread
  - Flows to other destinations can be affected if they share a switch
  - Sometimes called "Head of Line Blocking"
  - Large "elephant" flows can victimize small "mice" flows
  - Not only in InfiniBand: true for other lossless networks
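Head-of-line blocking can be shown with a toy switch port. In this Python sketch, packets for a congested destination D (which accepts a packet only every fourth tick) are interleaved with packets for an idle destination E. With one shared FIFO, an E packet stuck behind a D packet must wait; with a separate queue per destination, loosely analogous to the per-lane or per-channel isolation discussed in this webcast, it does not. The tick model, the period of 4, and the traffic mix are all hypothetical.

```python
from collections import deque


def deliver(packets, per_destination_queues, ticks, d_period=4):
    """Count packets forwarded to each destination within `ticks` time steps."""
    if per_destination_queues:
        queues = [deque(p for p in packets if p == dest) for dest in ("D", "E")]
    else:
        queues = [deque(packets)]  # one shared FIFO
    delivered = {"D": 0, "E": 0}
    for t in range(ticks):
        # D is congested and only accepts a packet every `d_period` ticks.
        ready = {"D": t % d_period == 0, "E": True}
        for q in queues:
            # Only the packet at the head of a queue can be forwarded.
            if q and ready[q[0]]:
                delivered[q.popleft()] += 1
    return delivered


traffic = ["D", "E"] * 4
print("shared FIFO:    ", deliver(traffic, per_destination_queues=False, ticks=8))
print("per-destination:", deliver(traffic, per_destination_queues=True, ticks=8))
```

The victim flow to E gets twice the throughput once it no longer shares a queue with the congested flow, while the flow to D is unchanged.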

InfiniBand: Congestion
Congestion Example
- Gray flows from Nodes A and B to Node D cause congestion at Switch 2
  - Node D is overwhelmed and pauses traffic periodically
  - Switch 2's buffers fill up with incoming traffic
- Purple traffic flow is not affected at this point
[Figure: Nodes A through E connected through Switches 1, 2, and 3, with initial congestion at Switch 2. Node D: "I'm busy, wait!" Switch 2: "Buffers getting full, I'm congested!" Other nodes: "I'm happy!"]

InfiniBand: Congestion
If Congestion Lasts Long Enough, It Can Spread
- Switch 2 asks Switch 1 to wait, and Switch 1's buffers start to fill up
- Purple traffic flow is now affected: it's a "victim" flow
  - Even though it's between non-congested Nodes C and E
[Figure: initial congestion at Switch 2 spreading to Switch 1. Node D: "I'm busy, wait!" Switch 1: "Now I'm busy too!" Node E: "Hey, where's my data?"]

InfiniBand Congestion Control Option
Congestion Control Throttles Traffic
- Switch alerts the destination about potential congestion
- Destination alerts senders to slow down temporarily
- Purple traffic flow is no longer victimized by gray flows
[Figure: no congestion. Switch to Node D: "Hey D: congestion coming!" Node D to Nodes A and B: "A and B: please slow down!" Senders: "Aye, half speed!" and "Sending at half-speed!" Uncongested nodes: "I'm happy!"]
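InfiniBand congestion control signals congestion with a forward explicit congestion notification (FECN) set by the switch and a backward notification (BECN) returned by the destination to the source, which then lowers its injection rate and raises it again over time. The Python sketch below is a simplified model of that loop: the rate steps, queue threshold, and class and function names are hypothetical, and real adapters use a configurable congestion control table rather than these fixed steps.

```python
# Hypothetical rate steps: fraction of line rate at each throttle level.
RATE_STEPS = [1.0, 0.5, 0.25, 0.125]


def switch_sets_fecn(queue_depth: int, threshold: int = 32) -> bool:
    """Switch marks packets heading into a queue that is over its threshold."""
    return queue_depth > threshold


class Sender:
    def __init__(self, name: str):
        self.name = name
        self.level = 0  # index into RATE_STEPS

    @property
    def rate(self) -> float:
        return RATE_STEPS[self.level]

    def on_becn(self):
        """Destination reported congestion: step the injection rate down."""
        self.level = min(self.level + 1, len(RATE_STEPS) - 1)

    def on_recovery_timer(self):
        """No recent congestion reports: step the rate back up."""
        self.level = max(self.level - 1, 0)


senders = [Sender("A"), Sender("B")]
if switch_sets_fecn(queue_depth=40):
    # Destination D sees FECN and returns BECN to every sender of marked packets.
    for s in senders:
        s.on_becn()
print([(s.name, s.rate) for s in senders])  # [('A', 0.5), ('B', 0.5)]: half speed
for s in senders:
    s.on_recovery_timer()
print([(s.name, s.rate) for s in senders])  # [('A', 1.0), ('B', 1.0)]: full speed
```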

InfiniBand: Solving the Congestion Problem
- Overprovisioning
  - More bandwidth reduces the chance of congestion
- Congestion Control
  - Similar to Ethernet ECN; hardware-accelerated notifications
- Adaptive Routing
  - Chooses the least-congested path, if multiple paths are available
- Virtual Lanes
  - Credit-based flow control per lane
  - Congestion in one traffic class does not affect other classes
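Adaptive routing picks among equally valid output ports based on current congestion. A minimal Python sketch, assuming the switch can read a queue depth for each candidate port; the port numbers and depths are hypothetical, and the sketch ignores the packet reordering that changing paths mid-flow can cause.

```python
def choose_port(candidate_ports, queue_depth):
    """Pick the candidate port with the shallowest queue (first one wins ties)."""
    return min(candidate_ports, key=lambda port: queue_depth[port])


# Hypothetical switch with three equal-cost paths toward the destination.
depths = {1: 40, 2: 5, 3: 12}
print(choose_port([1, 2, 3], depths))  # 2
depths[2] = 60  # port 2 becomes congested
print(choose_port([1, 2, 3], depths))  # 3
```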

Summary
- Advances in storage are impacting networks
- Network congestion differs by network type
  - But congestion can potentially affect all storage networks
- Issues and Symptoms
  - Incast collapse, elephant flows, mice flows
  - Lost credits, credit stall, oversubscription
  - Hardware failures, head of line blocking, victim flows
- Cures
  - Data Center TCP, Explicit Congestion Notification
  - Link reset, credit recovery, virtual channels, port fencing
  - Overprovisioning, adaptive routing, virtual lanes

After This Webcast
- Please rate this webcast and provide us with feedback
- This webcast and a PDF of the slides will be posted to the SNIA Networking Storage Forum (NSF) website and available on-demand at www.snia.org/forums/nsf/knowledge/webcasts
- A Q&A from this webcast, including answers to questions we couldn't get to today, will be posted to the SNIA-NSF blog: sniansfblog.org
- Follow us on Twitter @SNIANSF


Thank You

