Transcription

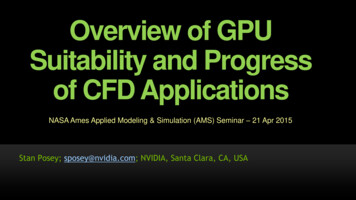

Overview of GPUSuitability and Progressof CFD ApplicationsNASA Ames Applied Modeling & Simulation (AMS) Seminar – 21 Apr 2015Stan Posey; sposey@nvidia.com; NVIDIA, Santa Clara, CA, USA

Agenda: GPU Suitability and Progress of CFDNVIDIA HPC IntroductionCFD Suitability for GPUsCFD Progress and Directions2

NVIDIA - Core Technologies and ProductsCompany Revenue of 5B USD; 8,800 Employees;HPC Growing 35% CAGRGPUMobileCloudGeForce Quadro , Tesla Tegra GRID3

GPUs Mainstream Across Diverse HPC DomainsFY14 SegmentsMedia &Entertain9%Finance4%CAE /MFG7%Consumer Web6%Med Image/Instru11%Supercomputing23%Oil & Gas12%Higher Ed /Research15%Defense/Federal13%World’s Top 3 Serversare GPU-Accelerated4

Tesla GPU Progression During Recent Years2012 (Fermi)2014 (Kepler)2014 (Kepler)2014 (Kepler)M2075K20XK40K80Peak SPPeak SGEMM1.03 TF3.93 TF2.95 TF4.29 TF3.22 TF8.74 TF4xPeak DPPeak DGEMM.515 TF1.31 TF1.22 TF1.43 TF1.33 TF2.90 TF3xMemory size6 GB6 GB12 GB24 GB (12 each)Mem BW (ECC off)150 GB/s250 GB/s288 GB/s480 GB/s (240 each)2x2x2.6 GHz3.0 GHz3.0 GHzMemory ClockPCIe GenGen 2Gen 2Gen 3Gen 3# of Cores448268828804992 (2496 each)Board Power235W235W235W300WKepler/ Fermi2x5x0% – 28%Note: Tesla K80 specifications are shown as aggregate of two GPUs on a single board5

GPU Motivation (II): Energy Efficient 33,862.7National Super Computer CentreGuangzhou14,389.82GSIC Center, Tokyo Tech KFC23,631.70Cambridge University217,590.0Oak Ridge National Lab #1 USA33,517.84University of Tsukuba317,173.2DOE, United States43,459.46SURFsara410,510.0RIKEN Advanced Institute forComputational Science53,185.91Swiss National Supercomputing(CSCS)58,586.6Argonne National Lab63,131.06ROMEO HPC Center66,271.0Swiss National Supercomputing#1 EuropeCentre (CSCS)73,019.72CSIRO75,168.1University of Texas82,951.95GSIC Center, Tokyo Tech 2.585,008.9Forschungszentrum Juelich92,813.14Eni102,629.10(Financial Institution)94,293.3DOE, United States162,495.12Mississippi State (top nonNVIDIA)Intel Phi591,226.60ICHEC (top X86 cluster)103,143.5Government6

GPU Motivation (III): Advanced CFD TrendsHigher fidelity models within manageable compute and energy costsRANSURANSLES?DNSIncrease in non-deterministic ensembles to manage/quantify uncertaintyNumber ofJobs 10xHOMs for improved resolution of transitional and vortex-heavy flowsAccelerator technology identified as a cost-effective andpractical approach to future computational challenges7

NVIDIA Strategy for GPU-Accelerated HPCStrategic AlliancesBusiness and technical alliances with COTS vendorsInvestment in long-term collaboration for solver-level librariesDevelopment of collaborations with academic research community:Examples in CFD: Imperial College—Vincent, Oxford—Giles, Wyoming—Mavriplis,GMU—Lohner, UFRJ—Coutinho, TiTech—Aoki, GWU—Barba, SU—Jameson, othersSoftware DevelopmentLibraries cuSPARSE, cuBLAS; OpenACC with PGI (acquisition) and CrayNVIDIA linear solver toolkit with emphasis on AMG for industry CFDApplications SupportApplication engineering support for COTS vendors and customersImplicit Schemes: Integration of libraries and solver toolkitExplicit Schemes: Stencil libraries, OpenACC for directives-based8

Agenda: GPU Suitability and Progress of CFDNVIDIA HPC IntroductionCFD Suitability for GPUsCFD Progress and Directions9

Programming Strategies for GPU vesProgrammingLanguagesProvides Fast“Drop-In”AccelerationGPU-acceleration inStandard Language(Fortran, C, C )Maximum Flexibilitywith GPU Architectureand Software FeaturesIncreasing Development Effort10

CFD Characteristics and GPU SuitabilityStructured Grid FVExplicitUnstructured FVUnstructured FENumerical operations on I,J,K stencil, no “solver”[Flat profiles: Typical GPU strategy is directives (OpenACC)]UsuallyCompressibleFinite VolumeImplicitUsuallyIncompressibleFinite Element:Sparse matrix linear algebra – iterative solvers[Hot spot 50%, few LoC: Typical GPU strategy is CUDA and libs]11

Select GPU Implementations for CFD PracticeStructured Grid FVUnstructured FVSD SJTU YDRAFlareUsuallyIncompressiblePyFRHyperfluxJENRE, PropelTACOMAFinite VolumeImplicitUnstructured FEFinite Element:ANSYS FluentCulises forAcuSolveMoldflowOpenFOAM12

What is Meant by “CFD Practice”These are not demonstrators, rather meaningful developmentstowards production use CFDProven performance on large-scale engineering simulationsLong-term maintenance and software engineering considerationsCo-design efforts between CFD scientists and computer scientistsIn most cases, contributions from NVIDIA devtech engineering13

Select GPU Implementations for CFD PracticeStructured Grid FVUnstructured FVSD SJTU YDRAFlareUsuallyIncompressibleHyperfluxFinite Element:ANSYS FluentStructured grid explicitgenerally best GPU fitPyFRJENRE, PropelTACOMAFinite VolumeImplicitUnstructured FECulises forAcuSolveMoldflowOpenFOAM14

Select GPU Implementations for CFD PracticeStructured Grid FVUnstructured FVSD SJTU YDRAFlareUsuallyIncompressibleHyperfluxFinite Element:ANSYS FluentUnstructured grid usuallywith renumbering/coloringPyFRJENRE, PropelTACOMAFinite VolumeImplicitUnstructured FECulises forAcuSolveMoldflowOpenFOAM15

Select GPU Implementations for CFD PracticeStructured Grid FVUnstructured FVSD SJTU YDRAFlareUsuallyIncompressibleHyperfluxFinite Element:ANSYS FluentCOTS CFD mostly applysolver library (AmgX)PyFRJENRE, PropelTACOMAFinite VolumeImplicitUnstructured FECulises forAcuSolveMoldflowOpenFOAM16

Select GPU Implementations (Summary)NRLOrganizationLocationSoftwareGPU ApproachCOMAC/SJTUChinaSJTU RANSFortran and CUDAU SouthhamptonUKHiPSTARFortran and OpenACCTurbostreamUKTurbostreamFortran, python templates s-to-s to CUDAGE GRCUSTACOMAFortran and OpenACCRolls RoyceUKHYDRAFortran, python DSL s-to-s to CUDA-FBAE SystemsUKFlareC and s-to-s templates to CUDAStanford UUSSD C and CUDAPyFRUKPyFRPython s-to-s to CUDA (C for CPU)CFMSUKHyperfluxPython s-to-s to CUDA (C for CPU)JENRE, PropelUSUSAC and Thrust templates for CUDAANSYS FluentUSImplicit FEAC and AmgX library, OpenACCFluiDynaDECulises/OpenFOAM C (OpenFOAM), AmgX library, CUDAAltairUSAcuSolveFortran and CUDAAutodeskUSMoldflowFortran and AmgX library17

Select GPU Developments at Various StagesOrganizationLocationSoftwareGPU ApproachU Wyoming /MavriplisUSNot specificCU object oriented templatesGMU / LohnerUSFEFLOPython Fortran-to-CUDA translatorSpaceXUSNot specificC and CUDACPFDUSBARRACUDAFortran and CUDAGWU / BarbaUSNot specificC , python, pyCUDAUTC ResearchUSCombustionFortran and CUDAConvergent Science / LLNLUSCONVERGEC and CUDA, cuSOLVE (NVIDIA)Craft TechUSCRAFT, CRUNCHFortran and CUDA, OpenACCBombardierCANot specificC and CUDADLRDETAUFortran and CUDAONERAFRelsAFortran and CUDAVratisPLSpeed-IT (OFOAM)C and CUDANUMECABEFine/TurboFortran and OpenACCPrometechJPParticleworksC and CUDATiTech / AokiJPNot specificC and CUDAJAXAJPUPACSFortran and OpenACCKISTI / ParkKRKFLOWFortran and CUDA, OpenACCVSSCINPARAS3DFortran and CUDA18

NVIDIA AmgX for Iterative Implicit MethodsScalable linear solver library for Ax b iterative methodsNo CUDA experience required, C API: links with Fortran, C, C Reads common matrix formats (CSR, COO, MM)Interoperates easily with MPI, OpenMP, and hybrid parallelSingle and double precision; Supported on Linux, Win64Multigrid; Krylov: GMRES, PCG, BiCGStab; Preconditioned variantsClassic Iterative: Block-Jacobi, Gauss-Seidel, ILU’s; Multi-coloringFlexibility: All methods as solvers, preconditioners, or smoothersDownload AmgX library: http://developer.nvidia.com/amgx19

NVIDIA AmgX Weak Scaling on Titan 512 GPUsUse of 512 nodes on ORNL TITAN SystemSolve Time / IterationTime (s)0.10AmgX 1.0 (AGG)0.080.060.040.020.0012481632Number of GPUs64128256512 Poisson matrix with 8.2B rows solved in under 13 sec (200e3 Poisson matrix per GPU) ORNL TITAN: NVIDIA K20X one per node; CPU 16 core AMD Opteron 6274 @2.2GHz20

Agenda: GPU Suitability and Progress of CFDNVIDIA HPC IntroductionCFD Suitability for GPUsCFD Progress and Directions21

Progress Summary for GPU-Parallel CFDGPU progress in CFD research continues to expandGrowth from arithmetic intensity in high-order methodsBreakthroughs with Hyper-Q feature (Kepler), GPUDirect, etc.Strong GPU investments by commercial (COTS) vendorsBreakthroughs with AmgX linear solvers and preconditionersPreservation of costly MPI investment: GPU 2nd-level parallelismSuccess in end-user developed CFD with OpenACCMost benefits currently with legacy Fortran, C emergingGPUs behind fast growth in particle-based commercial CFDNew commercial developments in LBM, SPH, DEM, etc.22

OpenACC Acceleration of TACOMA at GE GRC2.6x4 Nodes2.0x1.4xSource: https://cug.org/proceedings/cug2014 and-gtc.gputechconf.com/gtc-quicklink/e7FnYI23

OpenACC Acceleration of TACOMA at GE GRCSource: https://cug.org/proceedings/cug2014 and-gtc.gputechconf.com/gtc-quicklink/e7FnYI24

NASA FUN3D and 5-Point Stencil Kernel on PU: E5-2690 @ 3Ghz, 10 coresGPU: K40c, boost clocks, ECC off3022202.0xCase: DPW-Wing, 1M cells182.4x101 call of point solve5 over all colors11.53.7xNo data transfers in GPU results1 CPU core: 300ms10 CPU cores: 44ms (6.8x on 10)0CPUOpenACC1OpenACC2CUDA FortranOpenACC1 Unchanged code: 2.0xOpenACC2 Modified code : 2.4x (same modified code runs 50% slower on CPUs)CUDA Fortran Highly optimized CUDA version: 3.7xCompiler options (e.g. how memory is accessed) have huge influence on OpenACC resultsPossible compromise: CUDA for few hotspots, OpenACC for the restDemonstrated good interoperability: CUDA can use buffers managed with OpenACC data clauses25

ANSYS Fluent26

ANSYS Fluent Profile for Coupled PBNS SolverNon-linear iterationsAssemble Linear System of EquationsRuntime: 35% 65%Solve Linear System of Equations: Ax bAcceleratethis firstConverged ?NoStopYes27

ANSYS Fluent Convergence BehaviorCoupled vs segregated solverCoupledStable convergence for dragcoefficient at 550 iterationsTRUCK BODY MODEL(14 million cells)SegregatedOscillating behavior for drageven after 6000 iterations28

ANSYS Fluent 14.5 GPU Solver ConvergencePreview of ANSYS Fluent Convergence Behavior Matched CPU1.0000E 00NVAMG-ContNVAMG-X-momNVAMG-Y-mom1.0000E-01Error momFLUENT-Y-mom1.0000E-03FLUENT-Z-momNumerical ResultsMar 2012: Test forconvergence ateach iterationmatches preciseFluent behavior1.0000E-04Model FL5S1:- Incompressible- Flow in a Bend- 32K Hex Cells- Coupled 131415161718191101111121131141Iteration Number29

ANSYS Fluent and NVIDIA AmgX Solver LibraryANSYS Fluent SoftwareRead input, matrix Set-upAMG OperationsGPUAMG Solver 65%of Profile time,operations on GPUCPUDevelopment Strategy:- Integration of AmgXGlobal solution, write output 30

ANSYS Fluent 15 Performance for 111M CellsANSYS Fluent 15.0 Performance – Results by NVIDIA, Dec 2013144 CPU cores – Amg144 CPU cores48 GPUs – AmgX144 CPU cores 48 GPUs3680% AMG solver time292X2.7 XLowerisBetter1811AMG solver timeper iteration (secs)Truck Body Model Fluent solution timeper iteration (secs)111M mixed cellsExternal aerodynamicsSteady, k-e turbulenceDouble-precision solverCPU: Intel Xeon E5-2667;12 cores per nodeGPU: Tesla K40, 4 per nodeNOTE: AmgX is a linearsolver toolkit fromNVIDIA, used by ANSYS31

ANSYS Fluent 16 Performance for 14M CellsANSYS Fluent 16.0 Performance – Results by NVIDIA, Dec 2014Truck Body Model97928059ANSYS Fluent Time (Sec)3.0x5.4x LowerisBetter3229 1487CPU onlyCPU GPUAMG solver timeCPU onlyCPU GPUSolution timeSteady RANS modelExternal flow, 14M cellsCPU: Intel Xeon E52697v2 @ 2.7GHz;48 cores (2 nodes)GPU: 4 X Tesla K80 (2 pernode)NOTE: Time untilconvergence32

OpenFOAM33

Typical OpenFOAM Use: Parameter Optimization#1: Develop validated CFD model in ANSYS Fluentor other commercial CFD software in production#2: Develop CFD model in OpenFOAM,validate against commercial CFD model#3: Conduct parameter sweeps with OpenFOAM(procedure considered by many users to be costprohibitive using commercial CFD license models)34

Culises: CFD Solver Library for OpenFOAMCulises Easy-to-Use AMG-PCG Solver:#1. Download and license from http://www.FluiDyna.de#2. Automatic installation with FluiDyna-provided script#3. Activate Culises and GPUs with 2 edits to config-fileconfig-file CPU-onlyconfig-file CPU GPUwww.fluidyna.deFluiDyna: TU MunichSpin-Off from 2006Culises provides alinear solver libraryCulises requires onlytwo edits to controlfile of OpenFOAMMulti-GPU readyContact FluiDynafor license detailswww.fluidyna.de35

Culises (with AmgX) Coupling to OpenFOAMCulises Coupling is User-Transparent:www.fluidyna.de(AmgX)36

FluiDyna Culises: CFD Solver for OpenFOAMCulises: A Library for Accelerated CFD on Hybrid GPU-CPU SystemsDr. Bjoern Landmann, PU.pdfwww.fluidyna.deDrivAer: Joint Car Body Shape by BMW and tomotive/drivaerMesh Size - CPUsSolver speedup of 7xfor 2 CPU 4 GPU 36M Cells (mixed type) GAMG on CPU AMGPCG on GPU9M - 2 CPU 18M - 2 CPU36M - 2 CPUGPUs 1 GPU 2 GPUs 4 GPUsCulises2.5x4.2x6.9xOpenFOAM1.36x1.52x1.67x37

ANSYS Fluent Investigation of DrivAerSource: ANSYS AutomotiveSimulation World Congress,30 Oct 2012 – Detroit, MI“Overview ofTurbulence Modeling”By Dr. Paul Galpin, ANSYS, Inc.Available ANSYS models38

Particle-BasedCFD for GPUs39

Particle-Based Commercial CFD Software GrowingISVSoftwareApplicationMethodGPU StatusPowerFLOWLBultraXFlowProject micsLBMLBMLBMLBMEvaluationAvailable Multiphase/FSMultiphase/FSDiscrete phaseMPS ( SPH)MP-PICDEMAvailable v3.5In developmentIn developmentANSYS Fluent–DDPM Multiphase/FSSTAR-CCM Multiphase/FSDEMDEMIn developmentEvaluationAFEAESILSTCAltairSPHSPH, ALESPH, ALESPH, ALEAvailable v2.0In developmentEvaluationEvaluationHigh impactHigh impactHigh impactHigh impact40

FluiDyna Lattice Boltzmann Solver uidxwww.fluidyna.deSpin-Off in 2006from TU MunichCFD solver usingLattice Boltzmannmethod (LBM)Demonstrated 25xspeedup single GPUMulti-GPU readyContact FluiDynafor license details41

TiTech Aoki Lab LBM Solution of External FlowsA Peta-scale LES (Large-Eddy Simulation) for Turbulent FlowsBased on Lattice Boltzmann Method, Prof. Dr. Takayuki 8Is4ClCwww.sim.gsic.titech.ac.jpAoki CFD solver using LatticeBoltzmann method (LBM) withLarge Eddy Simulation (LES)42

Summary: GPU Suitability and Progress of CFDNVIDIA observes strong CFD community interest in GPU accelerationNew technologies in 2016: Pascal, NVLink, more CPU platform choicesNVIDIA business and engineering collaborations in many CFD projectsInvestments in OpenACC: PGI release of 15.3; Continued Cray collaborationsGPU progress for several CFD applications – we examined a few of theseNVIDIA AmgX linear solver library for iterative implicit solversOpenACC for legacy Fortran-based CFDNovel use of DSLs, templates, Thrust, source-to-source translationCheck for updates on continued collaboration with NASA (and SGI)Further developments for FUN3D undergoing review at NASA LaRCCollaborations with NASA GSFC ongoing with climate model and other43

Stan Posey; sposey@nvidia.com; NVIDIA, Santa Clara, CA, USA

Mem BW (ECC off) 150 GB/s 250 GB/s 288 GB/s 480 GB/s (240 each) 2x Memory Clock 2.6 GHz 3.0 GHz 3.0 GHz PCIe Gen Gen 2 Gen 2 Gen 3 Gen 3 2x # of Cores 448 2688 2880 4992 (2496 each) 5x Board Power 235W 235W 235W 300W 0% - 28% Tesla GPU Progression During Recent Years