Transcription

HSI 2009Catania, Italy, May 21-23, 2009Automated FAQ Answering withQuestion-Specific KnowledgeRepresentation for Web Self-ServiceEriks SneidersDept. of Computer and Systems Sciences, Stockholm University / KTH, Swedeneriks@dsv.su.seAbstract — Automated FAQ answering is a valuablecomplement to web self-service: while the vast majority ofsite searches fail, our FAQ answering solution for restricteddomains answers two thirds of the queries with accuracyover 90%. The paper is shaped as a best practice summary.The reader will find out how the shortcomings of site searchare overcome by FAQ answering, what kind of techniquesfor FAQ retrieval are available, why question-specificknowledge representation is arguably most appropriate forrestricted domains, and what peculiarities of running anFAQ answering service for a customer service may beexpected.Keywords — Automated question answering,answering, FAQ retrieval, web self-service.TFAQI. INTRODUCTIONHE benefits of web self-service are well known: costsper transaction saved 20-30 times [1] [2], availabilityaround the clock, shorter response time. These benefitshold, however, only if the users find what they are lookingfor. People using self-service expect quick solutions orabandon the site [3].Automated question answering (QA) is a step beyondsearch engines where the system not just retrievesdocuments but rather delivers answers to user questions.Most often QA stands for fact extraction from free textdocuments in open domain, or ontology and reasoningbased systems in restricted domain. Our research subject isa subclass of QA – automated FAQ (Frequently AskedQuestions) retrieval in a restricted domain. The system hasbeen in operation for a few years and has brought up agreat deal of positive experience. In the next section wediscuss shortcomings of site search engines that FAQretrieval is meant to overcome. Section III shows thereoccurring nature of user queries, while Section IV givesan insight into various techniques for FAQ retrieval.Section V presents the main points of our own FAQretrieval technique, its performance, and the core features.Sneiders, E. (2009) Automated FAQ Answering withQuestion-Specific Knowledge Representation for WebSelf-Service. Proceedings of the 2nd InternationalConference on Human System Interaction (HSI'09),May 21-23, Catania, Italy, IEEE, pp.298-305Section VI is titled “Lessons learned”. The advantages ofautomated FAQ answering for web self-service are listedin Section VII. Two final sections present the furtherresearch and conclusions.II. SEARCH IS NOT ENOUGHAlthough 82% of visitors use site search, as much as85% of searches do not return the results sought [4].Search technology is continuously developing by addingspelling correction, synonym vocabularies, processinginflectional forms of words, stemming, compoundsplitting, analysis of document structure and links betweendocuments. Still, site search has shortcomings caused notby bad technology but rather inherent to keyword-basedsearch phenomenon as such.1.2.3.4.Information not published. The task of a searchengine is to find documents that satisfy the query, asearch engine pulls information from a documentrepository. Unfortunately, there is no guarantee thatany relevant documents exist. Analysis of userqueries in our case study showed that 65% of thequeries considered for inclusion in the FAQdatabase did not have any correspondinginformation published. There is no way the mostperfect search technology would ever find it.Wrong keywords. Documents and user queries mayrefer to the same thing using different wording, andthere will be no match. Linguistic enhancementssuch as synonym dictionaries and stemming dohelp, yet they hit the ceiling when the keywordschange their meaning in different contexts.Documents vs. answers. A search engine findsdocuments written from information provider’spoint of view. On a website, people most oftensearch in order to find answers to their questionsrather than retrieve documents.Undetermined nature of “0 documents found”.This is a consequence of the above features fromthe user perspective. The fact that no documents arefound does not mean that the answer is notpossible. For example, a user is searching for aproduct on a company website and finds norelevant web pages. This is not an answer. In orderto find the answer, the user calls the customer298

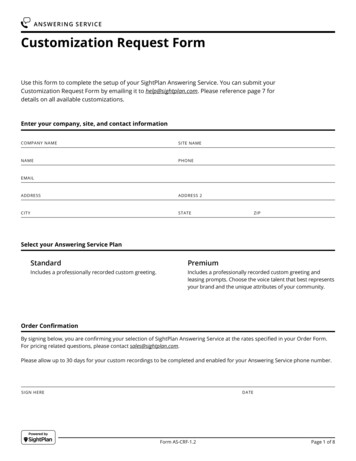

Hits per FAQ120100Top 60 FAQs1963 hits 87% of all hits80604020012039587796115FAQsFig. 1. Distribution of hits per FAQ.5.6.7.service and finds out that the company does notoffer the product. This is a definite answer whichcould not be delivered by search.Text only. Site search engines retrieve documents indifferent formats – HTML, plain text, PDF,Microsoft Word, etc. Structured databases andmultimedia objects are normally not considered.Search in heterogeneous sources is technicallypossible [5] and is provided by contentmanagement systems such as IBM OmniFind, butnot all companies have them or offer outside theirclosed intranets. Not the least because unrestrictedsearch beyond public web documents is a securitythreat.Statistical matching methods in a small collection.Search engines use statistical matching methods,they do not interpret user queries or documents. Ifthere is only one relevant document on a website, itis more likely to be buried in search results forcommon keywords.Short queries. Two studies in restricted domainsearch report the average query length 2.25 [6] and2.31 [7] words. It is too little to always retrieverelevant documents.III. REOCCURRENCE OF USER QUERIESAlready when FAQ lists were invented it was clear thatpeople who share the same interests tend to ask the samequestions over and over again. Lin [8] notices similaritybetween reoccurring questions to a QA system and Zipf’scurve on word ranks – the curve of question frequenciesand Zipf’s curve have similar shapes. Furthermore, thewhole paradigm of template-based QA relies onreoccurring questions [9].In order to make the discussion more specific, let usexamine the actual user queries recorded in the log files ofour FAQ retrieval system. We inspected 1996 consecutivequeries; the system answered 1432 queries by matchingthem to 127 FAQs. In total 2246 hits were generated. A hitis a match between a query and an FAQ. There are morehits than queries because one query can match more thanone FAQ and, therefore, generate more than one hit. Thedistribution of the hits per FAQ is shown in Fig. 1. Thetop 60 FAQs are responsible for 87% of all hits.Considering the fact that 72% of all queries had a hit(1432 of 1996), we can estimate that 60 FAQs matchedaround 63% of all queries.[6] and [10] show frequencies of word pairs fromqueries in site search, which gives us a hint of populartopics. In another study 25 most popular queries to a USlegislation digital library accounted for 17% of the queryflow [7].Our study shows that the majority of user queries fallinto a relatively few subjects that can be successfullycovered by FAQs. Furthermore, we can mount an FAQretrieval system on top of a site search engine and thusincrease our users’ experience: the search facility startsanswering questions, and there is no need to browse FAQlists.IV. APPROACHES TO FAQ RETRIEVALMost FAQ retrieval techniques fall into threecategories: (i) statistical techniques that learn answeringnew questions from a large amount of sample data, (ii)Natural Language Processing (NLP) and semantic textanalysis, and (iii) template-based techniques where eachFAQ has a cluster of templates that mimic expected userquestions.A. Statistical techniquesStatistical techniques are most useful when we deal witha large number of question-answer pairs created by a thirdparty, for instance those available on the web. The systemmatches a query to FAQ questions and possibly other partsof the FAQ file/records. Measuring semantic similarity,however, is not trivial because the query and the FAQsmay use different wording. For example, “How can Ireturn my merchandise?” and “What do we do in order toget our money back?” carry the same meaning without asingle word in common. Therefore the first problem to besolved is bridging lexical gaps, finding which words canbe perceived as synonyms in the given context.[11] tries to resolve lexical gaps by matching the query299

to different fields of an FAQ file – question text, answertext, FAQ page title – with and without stemming,removing stop-words, using n-grams. [12] categorizespreviously logged queries using original FAQs as the seeddata. The system matches a new query not only to theoriginal FAQs but also to the attached clusters of previousqueries, which ensures larger lexical diversity. [13] and[14] are inspired by machine translation techniques. [13]translates FAQs to foreign languages and then back toEnglish, which results in question pairs with the samemeaning but sometimes different wording. This way thesystem learns relationships between words.After semantic relationships between words are found,the systems calculate similarity between a query and FAQrecords using Information Retrieval (IR) methods such ascosine similarity, Okapi BM25, etc.B. NLP and ontologiesThe NLP approach involves word sense disambiguationand semantic analysis of user queries and FAQ texts, aftersyntactic, morphological and lexical analysis has beencompleted. NLP uses manually crafted lexicons andoptionally domain ontologies.The most prominent FAQ retrieval system of this kindis FAQFinder [15] which retrieves question-answer pairsfrom FAQ files on various topics. The system combinestwo text similarity measures. One is vector similarity withtf-idf term weights. The other one calculates the distancebetween words using WordNet. The system attempts todisambiguate nouns and verbs, creates WordNet synonymsets, and then counts the number of hypernym/hyponymlinks between these synonym sets. The synonym sets andhypernym/hyponym links are general; there is no domainknowledge involved.[16] uses domain dependent and independent lexicons.Semantic analysis takes the result of lexical analysis of thequery in order to detect its semantic category. For eachsemantic category there is template that computes arepresentative question for retrieval of the correct answerfrom the FAQ knowledge base. A concept hierarchydictionary helps to calculate distance between words. Thesystem learns semantic categories through user feedback.[17] uses a domain ontology in order to align theconcepts in the query with those in the FAQs, as well as todetect FAQs that contain keywords that semanticallyconflict with the query. Otherwise, probabilistic keywordmatching and user feedback are used to calculatesimilarity between the query and an FAQ entry.[18] uses a domain ontology in order to calculatesemantic distance between words when an FAQ is beingcategorized or matched to a user query. [19] constructsontological graphs – connected nodes representingsentences – for the query and FAQs, and compares thesegraphs.It should be noted that all FAQ retrieval techniques usebasic language processing which may be on,thesaurus, etc.C. Template-based techniquesThe most prominent template-based system is START,which makes use of knowledge annotations as “computeranalyzable collections of natural language sentences andphrases that describe the content of various informationsegments” [20]. An annotation mimics the structure“subject-relationship-object”. START matches a userquestion to the annotation entries on both the word level(using additional lexical information about synonyms, ISA trees, etc.) and the structure level (rules for paraphrasedarguments of verbs, nominalization, etc.). When the userquestion matches an annotation entry, START follows thepointer to the information segment tagged by theannotation and returns it as the answer.Our system, described in the next section, is anothertemplate-based retrieval system.V. OUR APPROACH TO FAQ ANSWERING FORWEB SELF-SERVICEOur system encompasses two modules – an FAQmodule and a site search engine. The goal of the FAQmodule is to capture reoccurring queries, also naturallanguage questions, and present semantically matchinganswers, while the search engine does its best applyingstatistical keyword matching to the documents on thewebsite.The system receives input from two places on thewebsite – “search the site” and “contact us”. In “contactus”, the users are encouraged to ask question to the systembefore phoning the customer service. There are three kindsof queries – (i) fully formulated questions, (ii) phrasessuch as “report the damage”, and (iii) single keywords.The FAQ module perceives single keywords as “What canyou tell / what should I know about ” type of questions.If the system passes the query to the site search engine, itremoves stop-words and too common domain words. Ifthe user has applied “quotation marks”, the query is notaltered.The output is following: If the FAQ module finds one matching FAQ and isconfident about the match, the FAQ and its answerare directly presented to the user. Furthermore, thesystem offers the user an option to submit a searchquery, derived form the original user query, to thesite search engine.If several, say 2-3, FAQs are retrieved or thesystem is not that confident about the match, theFAQ questions are listed and the user can retrievetheir answers one by one. The FAQ list iscomplemented by a site search result.If no matching FAQs are found, the systemperforms ordinary site search.The system does not maintain any dialogue the way chatbots do.300

A. Question templatesThe FAQ retrieval technique is described in detail in[21]; minor modifications have been added over the years.A brief description here will help the reader understandthe conclusions presented in the following sections.Unlike other systems that retrieve third party questionanswer pairs, our FAQ module maintains its own databasewith around 150 FAQs. As we see in Fig. 1, about 60 ofthem are somewhat often retrieved. Each FAQ has one orseveral manually crafted question templates (Fig. 2),slightly more than 300 in total. A question template holdslinguistic signatures that remotely resemble regularexpressions and mimic the language structures of expecteduser queries.FAQ .question templateFAQ 2question templateFAQ 1question templateFig 2. Question templates represent FAQsA linguistic signature contains synonym sets where asynonym is a word stem, number, or phrase. In a phrase,the order of and distance between synonym sets aredefined. A question template has three linguisticsignatures that define language structures that (i) must bepresent, (ii) may be present, and (iii) may not be present ina matching query. By matching these three linguisticsignatures to a query the system makes conclusions aboutthe semantic distance between the question template andthe query. Several question templates linked to one FAQcover the lexical diversity of matching queries.A linguistic signature is the result of syntactic (phrases),morphological (word stemming, compound wordsplitting), and lexical (synonym sets) analysis of expecteduser queries. Like all FAQ retrieval techniques do, we alsoperform linguistic text analysis. Unlike most techniques,we do this analysis before, not after user queries arereceived. When a query arrives, the system follows theinstructions stored in linguistic signatures and matcheslexical items to the query.The system does limited reasoning when it postprocesses retrieved FAQs. It may, for example, disregardsimple or low match-confidence FAQs if there are morecomplex or high match-confidence FAQs retrieved.B. Performance of FAQ retrievalPrecision and recall are common IR measures. Recallshows the share of retrieved relevant FAQs among all therelevant FAQs in the database, while precision shows theshare of relevant FAQs among all the retrieved FAQs. Wemeasured also rejection, a measure coined by [15].Rejection shows the share of correctly reported nilanswers when there is no matching FAQ in the database.That is, rejection measures the system’s ability not toretrieve garbage if there is no answer in the database.Precision and recall were measured according to the IRtraditions: the retrieved documents – FAQs – were eitherrelevant or not relevant. Such a binary relevance valuemakes sense because the FAQ database has beenoptimized for incoming queries over some time and acertain semantic distance between a query and theretrieved FAQs is being deliberately tolerated. We did notjudge how well the FAQs were shaped after each querybut rather how well the system managed to retrieverelevant and only relevant information choosing from theFAQs that existed in the database at the moment ofreceiving the query.We took 317 consecutive queries from the log file andstripped away 112 queries that had no correspondingFAQs in the database. This gave us 205 queries. Precisionand recall were measured for each query separately;precision only for the queries with non-zero recall. Theaggregated results are shown in Table 1.The rejection value is the number of correct nil-answersdivided by the total number of queries that should havehad nil-answers: 97/112 0.87.From the 16 zero-recall queries, 7 were a re-wording ofthe previous query from the same IP address within a fewseconds. Only 9 zero-recall queries were originalinformation needs.Our performance measurements show that more than 9of each 10 queries receive a relevant answer. Forcomparison, [22] estimates that 70% is the upper boundsof correctly answered queries in open domain free textQA. Restricted domain QA is supposed to do better thanthat by applying domain specific knowledge.In the literature, different performance measures forFAQ-based question answering are used. Furthermore, asystem may have several components each evaluatedTABLE 1: QUALITY OF FAQ RETRIEVAL.Precision10.500.670.330.25Total queriesAvg precisionNum queries17681311890.96Recall10.500Total queriesAvg recallNum queries186316Nil-answersCorrectGarbageNum queries97152050.91Total queriesRejection1120.87301

separately, and there may be a number of changingparameters that influence the measurement figures. Thismakes comparison of different systems tricky. In order toput our own performance measurements into context, weselected some related performance figures that werereasonably easy to interpret.[11] operates statistical techniques in open domain.Considering the top 20 retrieved FAQs, 36% of userqueries got adequate answers, and 56% of the queries gotrelevant information including adequate answers.Considering the top 10 retrieved FAQs, the figures were29% and 50% respectively.[13] also operates statistical techniques in open domain,in Korean. It has precision 0.78 at recall close to 0, andprecision 0.09 at recall 0.9. In between, some sampleprecision/recall values are 0.44/0.3 and 0.21/0.6.[12] is another Korean system, based on statisticaltechniques, that operates in a domain of several websites.Its performance was measured by Mean Reciprocal Rank(MRR), which is calculated using the position of the firstrelevant answer in the list of retrieved FAQs, and so for anumber of queries. In a nutshell, MRR value is 1 if allqueries get the right answer as the first item in the list ofretrieved texts, and 0.5 if the right answer is always thesecond retrieved text. The best MRR for the answerretrieval component of [12] was 0.7, given that all othercomponents of the system made no mistakes.FAQFinder [15] does shallow NLP in open domain. Itsquestion matching component reaches maximum recall0.68 at 0 rejection. As rejection rises, recall drops. Forexample, 0.4 rejection results in 0.53 recall, and 0.8rejection results in 0.25 recall.[18] is an ontology-based FAQ answering system inrestricted domain. It has 0.78 recall at 0.82 rejection.C. Question-specific representation of linguisticknowledgeThe main cause of our superior performance figures isgranular knowledge representation embodied in questiontemplates. While normally NLP and IR systems work in avague application-specific knowledge domain, our systemworks in a number of narrow well-defined FAQ-specificknowledge domains.application knowledge domainFig. 3 illustrates the difference. On side (b) we have alexicon and ontology that cover a part of the applicationknowledge domain. The lexicon has domain dependentand independent parts. The coverage of the knowledgedomain must be rather complete if we want to processarbitrary text.On side (a) we have a number of question templates aspieces of onto-lexical knowledge that patch the applicationknowledge domain. The coverage of the applicationknowledge domain is poor and focused only on the mostoften queried parts; we do not develop knowledgerepresentation that we don’t need. Several questiontemplates may share common parts of knowledgerepresentation and thus avoid redundancy.Question templates encompass the following kinds ofknowledge: Ontological. Although a question template hardly isan ontology in the sense of Artificial Intelligence, itdoes define concepts, their attributes, andrelationships in the context of the FAQ.Lexical. The ontological knowledge is expressed bywords and phrases stored in question templates.Context-dependent synonymy and synonymy basedon ontological relationships is respected. Slang,abbreviations, localizations, dialects are included.Morphological. Words are usually stemmed or theright morphological form is used. Compoundwords are split.Syntactic. Complex syntactic structures arerecorded as phrases.Although question templates all together do not covermuch of the application knowledge domain (only the mostoften queried parts), FAQ-specific linguistic knowledge ispretty well covered because the domain of one template isnarrow.The templates eliminate much of the word sensedisambiguation difficulty because synonyms and multiword expressions are defined in narrow context.Lexical gaps – queries may carry the same meaningwhile having different wording – are covered by a clusterof templates (see Fig. 2). Such a cluster is analogous queryexpansion.application knowledge gygenerallexiconqtqt(a)(b)Fig. 3. FAQ-specific vs. application domain-specific knowledge representation302

Missing words in user queries do not cause problemsbecause the templates assume them in narrow context. Forexample, “Is it more expensive under 25?” is interpretedas “Is a car insurance more expensive if the driver is under25 years of age?” in the context of the right FAQ. Thetemplates can deal with short queries such as searchphrases and keywords.Question templates are crafted manually analyzingprevious user queries, if such queries are available. In thissense our technique correlates with [12] (see SectionIV.A). Nonetheless, the data in a template is not statistical.A template encapsulates human decisions based on (i)statistical information about past queries and (ii) reasoningabout the future queries.Crafting question templates can be compared withSearch Engine Optimization where Google-enthusiaststarget popular search queries and optimize specific webpages for these queries. For popular queries on majorsearch engines, a web page stands not a chance to reachthe top of search results without being heavily optimized.The optimization effort is continuous because searchengines regularly modify their ranking criteria. In ourcase, instead of HTML documents we optimize questiontemplates to capture expected relevant queries and ignoreirrelevant ones. The syntax of our linguistic signatures andthe matching algorithm do not change.VI. LESSONS LEARNEDOur FAQ answering system has been in operation for afew years by now, and we have accumulated someexperience to be shared.A. Starting the FAQ answering serviceAs we started building the FAQ database from scratch,the first problem was finding the users’ information needs.Site search logs were not available because the searchservice was outsourced. Therefore we split the task intotwo sub-tasks. First we populated the FAQ database usingany information sources available. Then, after the systemwas put into operation, we analyzed the log files.From the web server logs we could find the most visitedinformation and product pages. Assuming that users mayask about the content of these pages, we created our firstFAQs linked to that information. It proved later that thecontent of the most visited pages did not dominate theinformation needs posed to the FAQ answering system.The customer service, whose work the system wassupposed to relieve, supplied its own list of FAQs. Someof these FAQs did indeed represent popular informationneeds, some didn’t.After the FAQ answering system had been in operationfor a while, we checked the source of the answers. 65% ofall answers we written specifically for our FAQ database.Only 35% of the answers were readily available on thecompany’s website. This illustrates the awareness of thecompany about its customers’ information needs. TheFAQ answering system improved this awarenessconsiderably.B. NeighborhoodThe largest restriction of our QA approach is the staticnature of FAQs – the answers do not react to details inuser queries. This limitation is lessened if the genericanswer describes the way to a user-tailored answer. Forexample, the user may need to log into the self-servicesystem and look for the exact answer there.It is, in fact, possible to make FAQs more querytailored by linking them to database data [23]. Still, thisposes integration difficulties between a QA system and thedatabase. There is no universal solution of how to connecta system to an arbitrary database in a manner similar tohow search engines index the web. “‘Integration’ is aword we don’t want to hear” was the message from thecompany using our system. Even more, the companyrequested that our software was kept outside its firewalls.The system is an outsourced isolated module that operatesisolated FAQs and smoothly fits into the self-servicewebsite by means of HTML/HTTP. We do questionanswering in a plug-n-play manner; no integration withother systems.There were three considerations that made the companycautious. The spirit of self-service implies reducing thework load on employees; the company was not willing toincrease this work load and costs by any kind of systemintegration. Furthermore, any new third party softwareinside the firewalls is a security threat. Finally, systemintegration would probably make the project drown in theinternal bureaucracy.C. Stable FAQ databaseThe initial period, a couple of months, of the system’soperation required regular log-mining in order to discoverusers’ information needs and unusual wording of thequeries. Log analysis allowed us improving the selectionof relevant FAQs and linguistic coverage of questiontemplates.Only a few new FAQs were added to the database afterthe initial period of active discovery of the users’information needs, mostly FAQs related to advertizingcampaigns and rare external events. The overall subjectdistribution of user queries is stable. Table 2 shows theshare of answered questions in March and October of twoconsecutive years. The number of submitted questionsslowly rises, but the share of answered ones remainsstable.TABLE 2: SHARE OF ANSWERED QUESTIONS OVER TIME.Q subm.Q answ.% .02Oct10536745070.71Adding new FAQs and question templates to thedatabase does not cause difficulties because of thegranular representation of onto-linguistic knowledge. Weshould be cautious, however, modifying the shared piecesof onto-linguistic knowledge used by several templates.303

VII. ADVANTAGES OF FAQ ANSWERING FORWEB SELF-SERVICEA. “Intelligent search”Let us see how our FAQ answering system overcomesthe shortcomings of site search listed in Section II.1.2.3.4.5.6.7.The FAQ database is populated according to users’information needs being discovered throughanalysis of user queries. The system pushesforward repeatedly requested answers, whichsatisfies more than two thirds of all queries.Any QA system is linguistically enhanced.Question templates easily handle synonyms andwording of ontological relationships.The system provides specific answers, documentsare included where needed.The system handles all reoccurring queries anddelivers definite answers even in cases where sitesearch would yield “0 documents found”.The answers may contain as much multimedia asthe medium holding them, in our case HTML andweb browsers, allows.Question templates are created by learning frompast queries, but they are optimized for futurequeries disregarding any statistical properties ofthe text on the website.The FAQ module interprets short queries asquestions and answers them accordingly.B. Business benefitsThe business benefits of automated FAQ answering aretwofold. The company that runs the FAQ answeringservice has acknowledged that its customer service doesnot receive trivial questions anymore and that operatorshave more time to deal with complex issues that do requirehuman involvement. A minimized number of repeatinginsignificant questions to the operators reduces stress andstress-related decrease of productivity. Furthermore,analysis of user queries is a source of business intelligencewhich helps to monitor customer interests, proactivelyreact to demand, receive feedback on advertizingcampaigns. The estimated return on investment was fourfive-fold.From the user’s point of view, automated FAQanswering yields the following benefits: (i) immediateanswer, if any, no telephone queues or waiting for an email answer, (ii) availability around the clock, no officehours, (iii) equal availability from around the globe, (iv)interaction in the user’s own pace, no one is waiting in theother end of the phone line, (v) comfort for shy people andpeople with language difficulties.VIII. FURTHER RESEARCHThere are several angles of the further research.

Abstract — Automated FAQ answering is a valuable complement to web self-service: while the vast majority of site searches fail, our FAQ answering solution for restricted domains answers two thirds of the queries with accuracy over 90%. The paper is shaped as a best practice summary. The reader will find out how the shortcomings of site search