Measuring Clinical Reasoning Competency Using a Virtual Patient Model

DANE M. CHAPMAN, MD, PhD¹,²,³
JUDITH G. CALHOUN, PhD, MBA¹
ADRIAN P. VAN MONDFRANS, PhD²
WAYNE K. DAVIS, PhD¹

Institutional Affiliations:
1. University of Michigan Medical School, Department of Medical Education, Ann Arbor, MI
2. Brigham Young University, McKay School of Education, Department of Instructional Psychology and Technology, Provo, UT
3. University of Missouri-Columbia, School of Medicine, Center for Clinical Reasoning and Procedural Competency, Department of Emergency Medicine, Columbia, MO

Journal of Clinical Reasoning and Procedural Competency, March 2013, 1:1

Abstract

Background:
Physicians must be thorough yet efficient in data gathering and must use decision-making strategies that limit diagnostic studies and costs, but still promote maximal diagnostic proficiency. These clinical reasoning skills are neither adequately taught nor measured in medical schools and residencies.

Objective:
To define clinical reasoning constructs a priori and develop clinical reasoning indices to be used with a virtual patient simulation model for teaching and assessing clinical reasoning competency.

Methods:
We used an experimental, pretest-posttest design to assess expected gains in clinical reasoning competency after three hours of virtual patient simulation practice. Computer transcripts (N = 486) were generated by 81 medical students with complete data who solved one pretest, three practice, and two posttest simulations.

Results:
Four clinical reasoning constructs were identified a priori (proficiency, efficiency, thoroughness, and strategy), and nineteen clinical reasoning indices were defined. Multivariate ANOVA and correlational analyses revealed significant pretest-posttest differences for posttest 1 (13/19 indices) and posttest 2 (14/19 indices), supporting the instructional effectiveness of virtual patient simulation practice and the construct validity of the four clinical reasoning constructs and their corresponding nineteen clinical reasoning performance indices. Reliability (stability) and concurrent validity of indices varied with case content.

Conclusions:
Instructional effectiveness, validity and stability of four constructs and nineteen corresponding clinical reasoning indices were established for a computer-based, free-inquiry virtual patient simulation model.

Keywords:
virtual patient, simulation, clinical reasoning, clinical decision-making, competency, assessment

Introduction

High-fidelity, virtual-reality training simulations are increasingly being used for procedural training until proficiency is reached, and before allowing trainees to perform certain high-risk procedures on patients.¹⁻⁴ The Food and Drug Administration (FDA) endorsement of procedure-based simulation training is expected to cause a ripple effect throughout all of medicine.¹ Traditional methods of procedural training, including practicing upon patients, will no longer be acceptable as currently performed. While it is doubtful that the use of patients for training will ever be completely replaced by simulations, physicians
will be held to higher standards of training and remediation to reduce medical errors, just as pilots are required to train on flight simulators.²⁻⁶

Despite the popularity and rapid advance of procedure-based simulations in medicine, the application of cognitive-based virtual patient simulations has been noted by some experts to be stuck in time.³⁻⁵ The “marvelous medical education machine,” a complete simulator for medical education as described by Friedman,³ has yet to be built. As its potential impact upon medical education and patient care quality is every bit as powerful as the impact of the flight simulator upon aviation, the marvelous computer will likely be built, though probably not all at once.³⁻⁶ The ultimate virtual patient simulator will be high-fidelity, meaning it will faithfully simulate the actual physician-patient encounter. It will also be free-inquiry, meaning users can access data freely without menus or other branching limitations and without cues. Rather than text or verbal descriptions of physical exam and diagnostic test findings, actual visual and auditory responses will be provided, such as visual cues for skin rashes, cardiac and respiratory sounds, and digital images for electrocardiograms (EKGs) and radiographs. While the USMLE Step 3 computer-based case simulation exam has made notable strides in this regard, it is not the ultimate virtual patient simulator and it still has branching and cueing limitations.⁷⁻¹⁰

Our aims in this study were to implement a high-fidelity, free-inquiry virtual patient simulation (VPS) model into the medical school curriculum to teach clinical reasoning (CR) skills, and then develop a scoring rubric using the VPS model as an assessment tool for measuring data-gathering and decision-making CR competencies. Specifically, we hypothesized that: (1) three hours of VPS practice with feedback would significantly impact CR competency as measured by VPS assessments; (2) CR learning constructs could be identified, and a corresponding scoring rubric of CR indices developed to detect expected gains in CR competency; (3) certain CR construct(s) would be case-content dependent and represent “medical knowledge,” and other CR constructs would be independent of any VPS case content effect, representing underlying CR “process skills”; (4) stability of CR constructs (and their corresponding CR indices) across VPS cases of varying content could be taken as a measure of reliability; (5) construct validity of CR indices would be supported if indices detected expected pretest-posttest gains (construct validity here refers to whether an index correlates with the theorized learning construct, such as “clinical reasoning proficiency,” that it purports to measure); and (6) concurrent validity of CR indices would be supported if indices from the same CR construct correlated more highly than indices from different CR constructs, and the two measures were taken at the same time.¹¹

Methods

Study Design:
We used an experimental pretest-posttest control group design to assess expected gains in CR competency after three hours of VPS practice. To address the effects of medical information (content) upon clinical reasoning (process), pretest-posttest and practice-posttest cases of similar and dissimilar content domains were utilized as controls.

Study Setting and Population:
The study qualified for institutional review board (IRB) exemption as a curriculum innovation project and was conducted at the Taubman Health Sciences Library Learning Resource Center of the University of Michigan Medical School. Ninety-seven of 191 post-second-year medical students volunteered without compensation to participate in a computer simulation (CS) elective during a required, four-week problem-based learning curriculum (PBLC). The PBLC occurred between the preclinical and clerkship years, with 23-25 CS participants randomly assigned to each PBLC week from May 7 to June 1 after their second year.

Computer Simulation Elective:
The 6.5-hour CS elective included two sessions (3.0 and 3.5 hours) on Monday-Wednesday, Tuesday-Thursday or Wednesday-Friday mornings during which students worked through six VPSs: one 60-minute pretest (cardiology), three 60-minute practice simulations with corrective feedback (pediatric endocrinology, infectious disease and pulmonary), and two 45-minute posttests (pulmonary and cardiology). No corrective feedback was provided for pretest or posttest assessment simulations. Students were randomly assigned to work in groups of three or individually during practice simulations only. All students completed their pretest and posttest simulations as individuals.

Virtual Patient Simulations:
The multi-problem, network-based VPSs used in the study simulated the actual physician-patient encounter with high fidelity and free inquiry and included 21 patient problems among the six cases.¹² Following an “opening scene,” users assumed the role of physicians and moved to and from history, physical examination, diagnostic study, diagnosis and treatment sections without menu-driven cueing or branching limitations.¹³ The VPSs were not the ultimate virtual patient, however, as artificial intelligence responses to all history, physical exam and diagnostic test inquiries were provided as text, and not as virtual touch, sound or images.

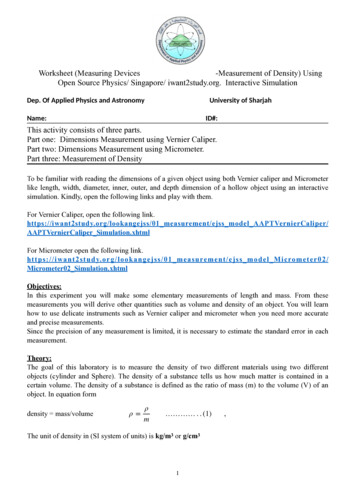

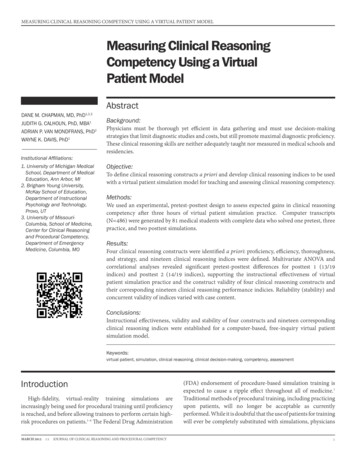

Assessments and Procedure:
Computer transcripts (N = 486; 81 students × 6 simulations) were generated by 81 medical students with complete data, and documented student-computer interactions for 243 hours of medical student practice and 202 hours of assessment. Outcome performance scores on nineteen predetermined CR indices (dependent variables) were derived from 243 hard-copy computer assessment transcripts (one pretest and two posttests per student). To standardize transcript scoring, coding regulations were developed using sample transcripts. Case-specific VPS scoring protocols provided a summary of those expert-recommended critical inquiries that had been made. Diagnosis sections were independently scored by two individuals using case-specific coding regulations that identified acceptable synonyms for diagnoses. Transcripts were scored by at least one rater who was blinded to pretest-posttest classification, and inter-rater agreement was consistently high (r .90). While therapeutic and management plans were also computer-scored, these were ignored for the purposes of this study.

Development of Scoring Rubric:
Nineteen CR competency indices were defined a priori based upon a review of the medical problem-solving literature and were classified into one of four clinical reasoning constructs: proficiency, efficiency, thoroughness, and strategy (see Table 1).

Clinical reasoning proficiency referred to how effectively critical data were gathered and correct diagnoses made. The CR proficiency indices were: percent of critical data-gathering inquiries obtained for history (history proficiency), physical examination (physical examination proficiency), and diagnostic tests (diagnostic test proficiency); percent of correct diagnoses made (diagnosis proficiency); Problem Solving Index (PSI), an average of data-gathering and decision-making proficiencies; and Proficiency Index (PI), the percent of data-gathering critical information obtained.

Clinical reasoning efficiency was defined as the percentage of data-gathering inquiries that were critical in making the diagnosis of a patient’s problem(s). Higher scores represented greater efficiency in making medical inquiries. Clinical reasoning efficiency indices included history, physical examination and diagnostic test efficiencies.

Clinical reasoning thoroughness reflected the frequency of data-gathering inquiries made or diagnoses indicated. Clinical reasoning thoroughness indices included: total number of history inquiries (history thoroughness), physical examination inquiries (physical examination thoroughness), and diagnostic test inquiries (diagnostic test thoroughness); total number of history, physical examination and diagnostic test inquiries combined (total data-gathering thoroughness); and total number of diagnoses hypothesized at the completion of each simulated case (diagnosis thoroughness).

Clinical reasoning strategy referred to the cognitive strategies used to arrive at correct diagnoses. It reflected individual preference for certain data-gathering techniques (e.g., a focused inquiry approach versus a “shotgun” or haphazard approach). CR strategy indices included: percent of total data-gathering inquiries that relate to history taking (history strategy), physical examination (physical examination strategy), or diagnostic tests (diagnostic test strategy); Focused Strategy Index, the ratio of data-gathering inquiry transitions of similar type (e.g., history to history) to all other combinations of possible inquiry transitions from one type of inquiry to another (e.g., history to physical examination, diagnostic test to history, etc.), where high scores reflect a more focused and systematic data-gathering

TABLE 1: Mathematical Descriptions of Nineteen Clinical Reasoning Performance Indices Derived for Use in Multi-Problem Virtual Patient Simulations

| Construct | Index | Symbol | Mathematical Description |
|---|---|---|---|
| Proficiency | History Taking | HTP | (Obtained CHT / Total CHT) × 100 |
| Proficiency | Physical Examination | PEP | (Obtained CPE / Total CPE) × 100 |
| Proficiency | Diagnostic Tests | DTP | (Obtained CDT / Total CDT) × 100 |
| Proficiency | Correct Diagnoses | DP | (Obtained CD / Total CD) × 100 |
| Proficiency | Problem Solving Index | PSI | (HTP + PEP + DTP + DP) / 4 |
| Proficiency | Proficiency Index | PI | (Obtained CHT + CPE + CDT) × 100 / (Total CHT + CPE + CDT) |
| Efficiency | History Taking | HTE | (CHT Obtained / HTT) × 100 |
| Efficiency | Physical Examination | PEE | (CPE Obtained / PET) × 100 |
| Efficiency | Diagnostic Tests | DTE | (CDT Obtained / DTT) × 100 |
| Thoroughness | History Taking | HTT | Total HT |
| Thoroughness | Physical Examination | PET | Total PE |
| Thoroughness | Diagnostic Tests | DTT | Total DT |
| Thoroughness | Total Data-Gathering | TDG | (HTT + PET + DTT) |
| Thoroughness | Diagnosis | DT | Total D |
| Strategy | History Taking | HTS | [HTT / (HTT + PET + DTT)] × 100 |
| Strategy | Physical Examination | PES | [PET / (HTT + PET + DTT)] × 100 |
| Strategy | Diagnostic Tests | DTS | [DTT / (HTT + PET + DTT)] × 100 |
| Strategy | Focused Strategy Index | FSI | (HH + PP + DD + 1) / (HP + HD + PH + PD + DH + DP + 1) |
| Strategy | Invasiveness/Cost Index | ICI | [DTT / (HTT + PET)] × 100 |

Symbol Key: HT = history taking inquiries, PE = physical examination inquiries, DT = diagnostic test inquiries, D = diagnoses indicated, C = critical inquiry or diagnosis (e.g., CHT = critical history taking inquiries), HH = history to history transition, PP = physical exam to physical exam transition, DD = diagnostic test to diagnostic test, HP = history to physical exam, HD = history to diagnostic test, PH = physical exam to history, PD = physical exam to diagnostic test, DH = diagnostic test to history, and DP = diagnostic test to physical exam transition.
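
To make the Table 1 definitions concrete, the following is a minimal Python sketch of how the nineteen indices might be computed from a single transcript. It is an illustration only and not the scoring software used in the study. The transcript encoding (an ordered list of `(section, is_critical)` pairs), the function and parameter names, and the choice to return 0.0 when a denominator is zero are all assumptions introduced here. The sketch also assumes each critical inquiry appears at most once in a transcript, and it reads the combined terms in Table 1 as sums, in line with the prose definitions (for example, PSI as an average of four proficiencies).

```python
from collections import Counter

SECTIONS = ("H", "P", "D")  # history, physical examination, diagnostic test


def pct(numerator, denominator):
    """Return a percentage; 0.0 when the denominator is zero (an assumption)."""
    return 100.0 * numerator / denominator if denominator else 0.0


def cr_indices(inquiries, total_critical, diagnoses_made, correct_made, total_correct):
    """Compute the nineteen Table 1 indices for one transcript (hypothetical encoding).

    inquiries      -- ordered list of (section, is_critical) pairs, section in SECTIONS
    total_critical -- dict mapping section -> critical inquiries in the case key
    diagnoses_made -- number of diagnoses the student indicated (Total D)
    correct_made   -- number of correct diagnoses the student made (Obtained CD)
    total_correct  -- number of correct diagnoses in the case key (Total CD)
    """
    totals = Counter(section for section, _ in inquiries)
    obtained = Counter(section for section, critical in inquiries if critical)
    htt, pet, dtt = (totals[s] for s in SECTIONS)
    tdg = htt + pet + dtt

    # Count transitions between consecutive inquiries: same type vs. different type.
    sections = [section for section, _ in inquiries]
    same = sum(1 for a, b in zip(sections, sections[1:]) if a == b)
    different = max(len(sections) - 1, 0) - same

    idx = {
        # Proficiency
        "HTP": pct(obtained["H"], total_critical["H"]),
        "PEP": pct(obtained["P"], total_critical["P"]),
        "DTP": pct(obtained["D"], total_critical["D"]),
        "DP": pct(correct_made, total_correct),
        "PI": pct(sum(obtained[s] for s in SECTIONS),
                  sum(total_critical[s] for s in SECTIONS)),
        # Efficiency
        "HTE": pct(obtained["H"], htt),
        "PEE": pct(obtained["P"], pet),
        "DTE": pct(obtained["D"], dtt),
        # Thoroughness
        "HTT": htt, "PET": pet, "DTT": dtt, "TDG": tdg,
        "DT": diagnoses_made,
        # Strategy
        "HTS": pct(htt, tdg),
        "PES": pct(pet, tdg),
        "DTS": pct(dtt, tdg),
        "FSI": (same + 1) / (different + 1),
        "ICI": pct(dtt, htt + pet),
    }
    idx["PSI"] = (idx["HTP"] + idx["PEP"] + idx["DTP"] + idx["DP"]) / 4
    return idx


# Made-up transcript: 4 history, 2 physical exam, 2 diagnostic test inquiries.
transcript = [("H", True), ("H", False), ("H", True), ("P", True),
              ("P", False), ("D", True), ("H", False), ("D", False)]
scores = cr_indices(transcript, {"H": 4, "P": 2, "D": 2},
                    diagnoses_made=3, correct_made=1, total_correct=2)
print(scores["PSI"], scores["FSI"], round(scores["ICI"], 1))  # 50.0 0.8 33.3
```

In this made-up example the inquiry sequence H, H, H, P, P, D, H, D contains three same-type transitions and four cross-type transitions, so FSI = (3 + 1) / (4 + 1) = 0.8. The "+ 1" in both numerator and denominator keeps the ratio defined even when a transcript has no cross-type transitions.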
