
Civic Online Reasoning: Curriculum Evaluation

The threat to democracy from a digitally credulous citizenry is nothing less than an issue of national defense. Facing this challenge will require a renewed educational commitment.

Executive Summary
Background
A New Approach
MediaWise Partnership
Curriculum Development
Sample Lesson
Assessments
Phases of Implementation
Pilot Study
Field Experiment
Changes in Reasoning
Student Interviews
Conclusion
Methodological Appendix
Pilot Test
Field Experiment

How to cite this report: Sam Wineburg, Joel Breakstone, Mark Smith, Sarah McGrew, and Teresa Ortega. “Civic Online Reasoning: Curriculum Evaluation” (working paper 2019-A2, Stanford History Education Group, Stanford University, Stanford, 2019).

Executive Summary

Compared with students in regular classrooms, students in Civic Online Reasoning classrooms improved significantly in their ability to evaluate online sources.

Background

In 2018 and 2019, our team administered an assessment of online reasoning to 3,446 high school students across the country and found they were vexed by even basic evaluations of digital sources.1 How can we help students become smarter consumers of digital information? To develop a roadmap, we observed fact checkers at the nation’s leading news outlets and distilled their strategies into an instructional approach we call Civic Online Reasoning (COR): the ability to search for, evaluate, and verify social and political information.

MediaWise

In 2018, the Stanford History Education Group (SHEG) partnered with the Poynter Institute and the Local Media Association to create MediaWise, an initiative supported by Google.org. We worked alongside classroom teachers to develop curriculum for teaching the skills of Civic Online Reasoning.

Research

A pilot study in a Northern California school district showed modest but promising gains. We fine-tuned our approach and then conducted a full-scale experiment with students in a large Midwestern school district. Compared with students in regular classrooms, students in COR classrooms improved significantly in their ability to evaluate online sources.

COR Curriculum

The COR curriculum is available for free at cor.stanford.edu. The suite of lessons can be implemented as a full curriculum or taught as a series of stand-alone lessons. Our materials are accompanied by instructional videos introducing the approach, easy-to-use assessments for tracking students’ progress, and a ten-episode video series, Navigating Digital Information, by noted author and YouTube star John Green.

What's at Stake

The threat to democracy from a digitally credulous citizenry is nothing less than an issue of national defense. Facing this challenge will require a renewed educational commitment that acknowledges the depth of the problem and meets it with vigor and resolve. We offer this curriculum as a start in that direction.

National PortraitBackgroundPrior research found that . . .In November 2016, the Stanford History Education Group (SHEG)released a report showing that students struggled when askedto evaluate digital sources. Middle school students confusedadvertisements with news stories. High school students accepted postson social media at face value. College students fell flat trying to identifythe organization behind a website.252%of students took aRussian video asevidence of USvoter fraud23of studentscouldn’t distinguishbetween ads & news96%of students did notask who was behinda partisan siteThe 2016 presidential election prompted national soul searching aboutthe corrosive effects of online misinformation. A raft of initiatives,including legislative actions in 18 states, sought to address digitalilliteracy. What have these efforts wrought? Three years after ouroriginal study, we set out to find answers.From June 2018 to May 2019, we surveyed 3,446 high school students in14 states, a sample that matched the demographic profile of high schoolstudents in the United States. The picture that emerged was troubling.Fifty-two percent believed that a Facebook video allegedly showingballot stuffing provided “strong evidence” of voter fraud during the2016 Democratic primary elections. (The video was actually shot inRussia—a fact easily established with a basic search.) Two-thirds couldnot tell the difference between ads marked “Sponsored Content” andnews stories on the homepage of a news site. Ninety-six percent couldnot identify connections between a climate change denial website andthe fossil fuel industry.3A New ApproachThe most popular approaches to teaching digital literacy don’t seem tobe working. 
“Checklist” methods (best known by the acronyms ABCand CRAAP) provide students with long lists of questions to ask beforedeeming a site credible.4 However, research shows that this is theopposite of what experts do when landing on an unfamiliar site.We know because we observed fact checkers from the nation’s leadingnews outlets. Rather than wasting time on an unfamiliar site, factcheckers almost immediately opened up new tabs in their browsersto search for information about the site. We refer to this strategy aslateral reading—the act of turning to the broader web to get a fix onone of its nodes.5Source:Students’ Civic OnlineReasoning: A NationalPortrait, StanfordHistory EducationGroup, 2019.Fact checkers asked three questions of a digital source: (1) Who’sbehind it? (2) What’s the evidence for its claim? and (3) What do othersources say? These questions are the cornerstones of Civic OnlineReasoning—the ability to search for, evaluate, and verify social andpolitical information online. We distilled the fact checkers’ strategiesinto an instructional approach and taught it to high school and college5

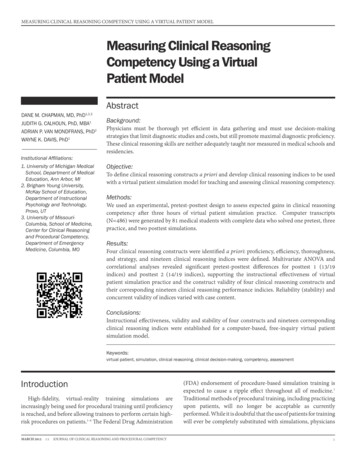

students in a series of “proof of concept” studies.6Even brief interventions—in one case only 150minutes—helped students improve.MediaWise PartnershipIn 2018, SHEG joined MediaWise, a collaborationwith the Poynter Institute and the Local MediaAssociation supported by Google.org. MediaWiseset out to help students become more discerningconsumers of online information. Based on ourresearch with fact checkers, we developed andpilot-tested instructional materials. In addition, wepartnered with John Green and Crash Course onthe development of Navigating Digital Information,a 10-episode YouTube series. Since its launch inJanuary 2019, the series has logged more than amillion views.Curriculum DevelopmentCurriculum development followed a multi-step cycle.We identified key aspects of Civic Online Reasoningand drafted lessons built around actual sources fromthe web. Teachers across the country piloted theselessons with their students and gave us feedbackabout what did and didn’t work. Each lesson wentthrough an iterative process of prototyping, piloting,revising, and re-piloting.Sample LessonAll of our lessons provide students withopportunities to evaluate online sources. Forexample, a lesson focused on “Who’s behind theinformation?” gives students explicit instructionin lateral reading. Students first go online tojudge the reliability of a site about minimum wagepolicy. Based on pilot testing with thousands ofstudents, we know that most will focus on surfacelevel features of the site: Is it a .com or a .org? Isit free of banner ads? Are there links to reputablesources? Is there a contact listed? Rather thanfocus on these aspects (each of which is easy tomanipulate), the teacher models lateral readingby opening new browser tabs, searching for theCivic Online Reasoning: Curriculum EvaluationOur curriculumopens a widecorridor. It canbe taught as afull package orimplementedas stand-alonelessons.organization, and seeing what other, reputablesources have to say. 
In this case, the original site is run by a Washington, D.C.-based public relations firm supported by corporate interests. After watching their teacher model the search process, students practice lateral reading in small groups using a different example.

Assessments

We use short assessments to frequently monitor students’ progress. One of our assessments gauges whether students question the reliability of a photograph posted by an unknown user on Twitter (see Figure 1). The tweet includes a picture of a child, purportedly in Syria, lying between two graves. (The image was actually taken in Saudi Arabia as part of an art project. The “graves” are piles of rocks.7) Students are asked to determine whether the tweet provides “strong evidence” about conditions for children in Syria.

A three-level rubric accompanies each assessment. The simplicity is by design: easy-to-use rubrics allow teachers to quickly identify students who have mastered a concept, those who are on the right track, and those who need more help. Figure 1 displays one of our assessments and a rubric with sample student responses.

Figure 1: Assessment & Rubric

Assessment

Gautam Trivedi @Gotham3
Meanwhile in Syria, a child sleeps between his parents
11:48 PM 16 Jan 2014

The civil war in Syria began in 2011 and continues through the present. This image was posted on Twitter, a social media platform, in January 2014.

Does the post provide strong evidence about conditions for children in Syria? Explain your reasoning.

Rubric & Example Student Responses

Beginning: Student argues that the post provides strong evidence or uses incorrect or incoherent reasoning.
Example Student Response: “It shows that some of the children have no parents and he or she is very sad to have no parents.”

Emerging: Student raises questions about the post but does not consider its source or the origin of the photograph.
Example Student Response: “There is no evidence to prove that those are the child’s parents.”

Mastery: Student argues the post does not provide strong evidence and questions the source of the post (e.g., we don’t know anything about the author of the post) and/or the source of the photograph (e.g., we don’t know where the photo was taken).
Example Student Response: “How do I know this picture is real? I don’t know who posted it, how they obtained this photo, or if it was even shot in Syria. I just can’t rely on this image alone.”

Phases of Implementation

We evaluated the COR curriculum in two phases: (a) a pilot study in Fall 2018; and (b) a field experiment in Spring 2019. (See the Methodological Appendix for full details on both.)

Planning: Summer 2018
Pilot Study: Fall 2018
Revision: Winter 2019
Field Experiment: Spring 2019
Data Analysis: Summer 2019

Pilot Study

We ran the pilot in an urban public school district on the West Coast that enrolls over 15,000 students from 7th to 12th grade. Over a quarter of the district’s students were classified as English Learners, and another 48% were “Fluent English Proficient” (English was not their primary language, but they had achieved English fluency). Nearly three-quarters of the students were Latino, and 70% were eligible for free or reduced-price lunch.

At the start of the school year, SHEG staff provided teachers with a one-day professional development workshop, and teachers participated in follow-up meetings during curriculum implementation. Students who participated in the pilot study took a pretest at the start of the school year and a posttest at the end of the fall semester. The pre- and posttest measured the same COR skills but used different digital sources.

Students showed small but promising gains (see Methodological Appendix). Teachers felt that the curriculum was too wide-ranging and that students needed more opportunities to practice the COR skills. In short, we had tried to pack too many topics into too short a time.

Field Experiment

We conducted a field experiment in an urban public school district in the Midwest with over 40,000 students. Nearly half of the students in the district qualified for free or reduced-price lunch. Over one-third were non-white, and about 7% were English learners. Based on what we learned from the pilot, we revised our approach and set out to teach fewer skills more deeply.

Every second-semester government/civics class in the district participated in the study.
The final sample included 464 juniors and seniors at six high schools. Two hundred sixty-five students from three high schools completed COR lessons, and 199 students from the other three high schools served as the control group. To ensure that the students in each group were similar, we matched schools based on demographic characteristics and assigned the schools in each matched pair to opposite conditions.

Teachers implementing the COR curriculum attended a one-day professional development workshop at the start of the spring semester. We introduced the teachers to Civic Online Reasoning and provided them with six lessons that had been revised based on feedback from the pilot. Over the course of the spring semester, teachers integrated these COR lessons into their classes. SHEG staff met with the teachers during the semester to provide additional training and support. Teachers in the control classes taught their normal curriculum.

Students took a pretest assessment at the beginning of the semester and a posttest at the end. Each assessment consisted of seven exercises requiring the evaluation of online sources. Students had a live internet connection and could search anywhere online.

Figure 2: Pretest and Posttest Scores for Students in the COR and Control Classrooms. Students in the COR classrooms showed greater improvement than students in the control classrooms.

Analysis of pre/posttest data revealed that students in the COR classrooms showed greater improvement over the course of the semester than students in the control classrooms. Figure 2 shows the change in mean scores from pretest to posttest for treatment and control. On average, students’ scores in COR classrooms improved by over two points from pre- to posttest (out of 14 possible points), while scores in the regular classrooms improved by just over half a point (see Methodologi
