Transcription

Intro to Apache Spark
Paco Nathan, @pacoid
http://databricks.com/
download slides: http://cdn.liber118.com/spark/dbc bids.pdf
Licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License

Lecture Outline:
- login and get started with Apache Spark on Databricks Cloud
- understand theory of operation in a cluster
- a brief historical context of Spark, where it fits with other Big Data frameworks
- coding exercises: ETL, WordCount, Join, Workflow
- tour of the Spark API
- follow-up: certification, events, community resources, etc.

Getting Started

Getting Started:
Everyone will receive a username/password for one of the Databricks Cloud shards: 02.cloud.databricks.com/
Run notebooks on your account at any time throughout the duration of the course. The accounts will be kept open afterwards, long enough to save/export your work.

Getting Started:
Workspace/databricks-guide/01 Quick Start
Open in a browser window, then follow the discussion of the notebook key features:

Getting Started:
Workspace/databricks-guide/01 Quick Start
Key Features:
- Workspace, Folder, Notebook, Export
- Code Cells, run/edit/move
- Markdown
- Tables

Getting Started: Initial coding exercise
Workspace/training-paco/00.log example
Open in one browser window, then rebuild a new notebook to run the code shown:

Spark Deconstructed

Spark Deconstructed: Log Mining Example
Workspace/training-paco/01.log example
Open in one browser window, then rebuild a new notebook by copying its code cells:

Spark Deconstructed: Log Mining Example

    # load error messages from a log into memory
    # then interactively search for patterns

    # base RDD
    lines = sqlContext.table("error_log")

    # transformed RDDs
    errors = lines.filter(lambda x: x[0] == "ERROR")
    messages = errors.map(lambda x: x[1])

    # persistence
    messages.cache()

    # action 1
    messages.filter(lambda x: x.find("mysql") > -1).count()

    # action 2
    messages.filter(lambda x: x.find("php") > -1).count()

Spark Deconstructed: Log Mining Example
We start with Spark running on a cluster, submitting code to be evaluated on it:
[diagram: a Driver and three Workers]

Spark Deconstructed: Log Mining Example
[code as above; action 2 is labelled "discussing the other part"]

Spark Deconstructed: Log Mining Example
At this point, we can look at the transformed RDD operator graph:

    messages.toDebugString

    res5: String =
    MappedRDD[4] at map at <console>:16 (3 partitions)
    MappedRDD[3] at map at <console>:16 (3 partitions)
    FilteredRDD[2] at filter at <console>:14 (3 partitions)
    MappedRDD[1] at textFile at <console>:12 (3 partitions)
    HadoopRDD[0] at textFile at <console>:12 (3 partitions)

Spark Deconstructed: Log Mining Example
[code as above, repeated while a diagram steps through its execution on the cluster]

Action 1 (action 2 is labelled "discussing the other part"):
[diagram: a Driver and three Workers]
[diagram: each Worker holds one block of the data: block 1, block 2, block 3]
[diagram: each Worker reads its HDFS block]
[diagram: each Worker processes its block and caches the data: cache 1, cache 2, cache 3]
[diagram: each Worker now holds a cache next to its block]

Action 2 (the earlier part of the code is now labelled "discussing the other part"):
[diagram: each Worker processes from its cache]
[diagram: each Worker still holds its cache and its block]

Spark Deconstructed: Log Mining Example
Looking at the RDD transformations and actions from another perspective
[code as above]
[diagram: a chain of RDDs linked by transformations, followed by an action that produces a value]

Spark Deconstructed: Log Mining Example
[diagram: RDD]

    # base RDD
    lines = sqlContext.table("error_log")

Spark Deconstructed: Log Mining Example
[diagram: RDDs linked by transformations]

    # transformed RDDs
    errors = lines.filter(lambda x: x[0] == "ERROR")
    messages = errors.map(lambda x: x[1])

    # persistence
    messages.cache()

Spark Deconstructed: Log Mining Example
[diagram: RDDs linked by transformations, followed by an action that produces a value]

    # action 1
    messages.filter(lambda x: x.find("mysql") > -1).count()
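The filter / map / count steps of the log mining example can be tried without a cluster. The following is a minimal sketch in plain Python, not the Spark API: the sample rows are invented for illustration, and the lists are evaluated eagerly, whereas Spark defers the work until an action runs.

```python
# Plain-Python stand-in for the log mining example (no Spark required).
# The sample rows are hypothetical; each row is (level, message).
rows = [
    ("ERROR", "mysql connection refused"),
    ("INFO", "server started"),
    ("ERROR", "php fatal error in index.php"),
    ("ERROR", "mysql timeout"),
    ("WARN", "disk usage is high"),
]

# "transformed" data: keep ERROR rows, then keep only the message field
errors = [row for row in rows if row[0] == "ERROR"]
messages = [row[1] for row in errors]

# "actions": count the messages that mention each keyword
mysql_count = sum(1 for m in messages if m.find("mysql") > -1)
php_count = sum(1 for m in messages if m.find("php") > -1)

print(mysql_count, php_count)
```

Both counts reuse the same `messages` list, which is the role that `messages.cache()` plays in the Spark version.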

A Brief History

A Brief History:
- 2002: MapReduce @ Google
- 2004: MapReduce paper
- 2006: Hadoop @ Yahoo!
- 2008: Hadoop Summit
- 2010: Spark paper
- 2014: Apache Spark top-level

A Brief History: MapReduce

circa 1979 – Stanford, MIT, CMU, etc.
set/list operations in LISP, Prolog, etc., for parallel /lisp.htm

circa 2004 – Google
MapReduce: Simplified Data Processing on Large Clusters
Jeffrey Dean and Sanjay Ghemawat

circa 2006 – Apache
Hadoop, originating from the Nutch Project
Doug Cutting
research.yahoo.com/files/cutting.pdf

circa 2008 – Yahoo
web scale search indexing
Hadoop Summit, HUG, etc.
developer.yahoo.com/hadoop/

circa 2009 – Amazon AWS
Elastic MapReduce
Hadoop modified for EC2/S3, plus support for Hive, Pig, Cascading, etc.
aws.amazon.com/elasticmapreduce/

A Brief History: MapReduce
Open Discussion:
Enumerate several changes in data center technologies since 2002

A Brief History: MapReduce
Rich Freitas, IBM ility-gap/
meanwhile, spinny disks haven’t changed all that much
d-technology-trends-ibm/

A Brief History: MapReduce
MapReduce use cases showed two major limitations:
1. difficulty of programming directly in MR
2. performance bottlenecks, or batch not fitting the use cases
In short, MR doesn’t compose well for large applications.
Therefore, people built specialized systems as workarounds.

A Brief History:
[diagram: General Batch Processing alongside Specialized Systems (iterative, interactive, streaming, graph, etc.); surviving labels: phLab, Storm, Tez, S4]
The State of Spark, and Where We're Going Next
Matei Zaharia
Spark Summit (2013)
youtu.be/nU6vO2EJAb4

A Brief History: Spark
Developed in 2009 at UC Berkeley AMPLab, then open sourced in 2010, Spark has since become one of the largest OSS communities in big data, with over 200 contributors in 50 organizations

“Organizations that are looking at big data challenges – including collection, ETL, storage, exploration and analytics – should consider Spark for its in-memory performance and the breadth of its model. It supports advanced analytics solutions on Hadoop clusters, including the iterative model required for machine learning and graph analysis.”
Gartner, Advanced Analytics and Data Science (2014)

spark.apache.org

A Brief History: Spark
[timeline as above, 2002 to 2014]

Spark: Cluster Computing with Working Sets
Matei Zaharia, Mosharaf Chowdhury, Michael J. Franklin, Scott Shenker, Ion Stoica
USENIX HotCloud oud spark.pdf

Resilient Distributed Datasets: A Fault-Tolerant Abstraction for In-Memory Cluster Computing
Matei Zaharia, Mosharaf Chowdhury, Tathagata Das, Ankur Dave, Justin Ma, Murphy McCauley, Michael J. Franklin, Scott Shenker, Ion Stoica
NSDI di12-final138.pdf

A Brief History: Spark
Unlike the various specialized systems, Spark’s goal was to generalize MapReduce to support new apps within the same engine
Two reasonably small additions are enough to express the previous models:
- fast data sharing
- general DAGs
This allows for an approach which is more efficient for the engine, and much simpler for the end users

A Brief History: Spark

A Brief History: Spark
used as libs, instead of specialized systems

A Brief History: Spark
Some key points about Spark:
- handles batch, interactive, and real-time within a single framework
- native integration with Java, Python, Scala
- programming at a higher level of abstraction
- more general: map/reduce is just one set of supported constructs

A Brief History: Key distinctions for Spark vs. MapReduce
- generalized patterns
  unified engine for many use cases
- lazy evaluation of the lineage graph
  reduces wait states, better pipelining
- generational differences in hardware
  off-heap use of large memory spaces
- functional programming / ease of use
  reduction in cost to maintain large apps
- lower overhead for starting jobs
- less expensive shuffles

TL;DR: Smashing The Previous Petabyte Sort
llysets-a-new-record-in-large-scale-sorting.html

TL;DR: Sustained Exponential Growth
Spark is one of the most active Apache projects
ohloh.net/orgs/apache

TL;DR: Spark Expertise Tops Median Salaries within Big
survey.csp

Coding Exercises

Coding Exercises: WordCount
Definition: count how often each word appears in a collection of text documents

This simple program provides a good test case for parallel processing, since it:
- requires a minimal amount of code
- demonstrates use of both symbolic and numeric values
- isn’t many steps away from search indexing
- serves as a “Hello World” for Big Data apps

A distributed computing framework that can run WordCount efficiently in parallel at scale can likely handle much larger and more interesting compute problems

    void map (String doc_id, String text):
      for each word w in segment(text):
        emit(w, "1");

    void reduce (String word, Iterator group):
      int count = 0;
      for each pc in group:
        count += Int(pc);
      emit(word, String(count));
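The map / reduce pseudocode above can be made runnable in a few lines. This is a minimal single-process sketch in plain Python, not Spark or Hadoop code: `map_fn`, `reduce_fn`, and `word_count` are illustrative names, `str.split()` stands in for `segment(text)`, and integer counts replace the string values the pseudocode emits.

```python
from collections import defaultdict

def map_fn(doc_id, text):
    """Emit a (word, 1) pair for every word in the text."""
    for word in text.split():
        yield (word, 1)

def reduce_fn(word, group):
    """Sum the partial counts collected for one word."""
    return (word, sum(group))

def word_count(docs):
    """Run map, group the emitted values by key (the shuffle), then reduce."""
    groups = defaultdict(list)
    for doc_id, text in docs.items():
        for word, one in map_fn(doc_id, text):
            groups[word].append(one)
    return dict(reduce_fn(word, group) for word, group in groups.items())

# hypothetical input documents
docs = {"d1": "to be or not to be", "d2": "to do"}
counts = word_count(docs)
print(counts)
```

The grouping step in `word_count` is the part a distributed framework performs across machines; the two user-supplied functions stay as small as they are here.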

Coding Exercises: WordCount
WordCount in 3 lines of Spark
WordCount in 50 lines of Java MR

Coding Exercises: WordCount
Workspace/training-paco/02.wc example
Open in one browser window, then rebuild a new notebook by copying its code cells:

Coding Exercises: Join
Workspace/training-paco/03.join example
Open in one browser window, then rebuild a new notebook by copying its code cells:

Coding Exercises: Join – Operator Graph
[operator graph diagram; labels: RDDs A through E, stage 1, stage 2, stage 3, map() and join() operators, cached partition]

Coding Exercises: Workflow assignment
How to “think” in terms of leveraging notebooks, based on Computational Thinking:
1. create a new notebook
2. copy the assignment description as markdown
3. split it into separate code cells
4. for each step, write your code under the markdown
5. run each step and verify your results

Coding Exercises: Workflow assignment
Let’s assemble the pieces of the previous few code examples. Using the readme and change log tables:
1. create RDDs to filter each line for the keyword Spark
2. perform a WordCount on each, i.e., so the results are (K,V) pairs of (keyword, count)
3. join the two RDDs
4. how many instances of “Spark” are there?

Spark Essentials

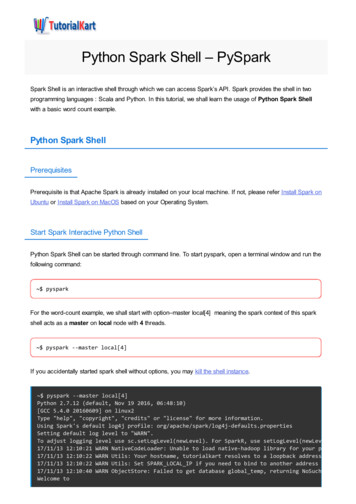

Spark Essentials:
Intro apps, showing examples in both Scala and Python
Let’s start with the basic concepts uide.html
using, respectively:

    ./bin/spark-shell
    ./bin/pyspark

Spark Essentials: SparkContext
The first thing a Spark program does is create a SparkContext object, which tells Spark how to access a cluster
In the shell for either Scala or Python, this is the sc variable, which is created automatically
Other programs must use a constructor to instantiate a new SparkContext
The SparkContext is then used in turn to create other variables

Spark Essentials: SparkContext
Scala:

    scala> sc
    res: spark.SparkContext = spark.SparkContext@470d1f30

Python:

    >>> sc
    <pyspark.context.SparkContext object at 0x7f7570783350>

Spark Essentials: Master
The master parameter for a SparkContext determines which cluster to use

| master | description |
|---|---|
| local | run Spark locally with one worker thread (no parallelism) |
| local[K] | run Spark locally with K worker threads (ideally set to # cores) |
| spark://HOST:PORT | connect to a Spark standalone cluster; PORT depends on config (7077 by default) |
| mesos://HOST:PORT | connect to a Mesos cluster; PORT depends on config (5050 by default) |

Spark Essentials: .html
[diagram: a Driver Program (SparkContext) connected through a Cluster Manager to Worker Nodes, each running an Executor with a cache and tasks]

Spark Essentials: Clusters
1. master connects to a cluster manager to allocate resources across applications
2. acquires executors on cluster nodes – processes run compute tasks, cache data
3. sends app code to the executors
4. sends tasks for the executors to run
[diagram: a Driver Program (SparkContext) connected through a Cluster Manager to Worker Nodes, each running an Executor with a cache and tasks]

Spark Essentials: RDD
Resilient Distributed Datasets (RDD) are the primary abstraction in Spark – a fault-tolerant collection of elements that can be operated on in parallel
There are currently two types:
- parallelized collections – take an existing Scala collection and run functions on it in parallel
- Hadoop datasets – run functions on each record of a file in Hadoop distributed file system or any other storage system supported by Hadoop

Spark Essentials: RDD
- two types of operations on RDDs: transformations and actions
- transformations are lazy (not computed immediately)
- the transformed RDD gets recomputed when an action is run on it (default)
- however, an RDD can be persisted into storage in memory or disk
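The points above (lazy transformations, recomputation on each action, optional persistence) can be illustrated with a toy class. This is a minimal sketch in plain Python under simplifying assumptions: `LazyDataset` is a hypothetical stand-in, not a Spark class, and it keeps everything in one process with no partitions.

```python
class LazyDataset:
    """Toy stand-in for an RDD: transformations are recorded, actions run them."""

    def __init__(self, compute):
        self._compute = compute   # zero-argument function returning a list
        self._persist = False
        self._cached = None

    @classmethod
    def from_list(cls, data):
        return cls(lambda: list(data))

    def map(self, func):
        # transformation: returns a new dataset, computes nothing yet
        return LazyDataset(lambda: [func(x) for x in self._materialize()])

    def filter(self, pred):
        # transformation: returns a new dataset, computes nothing yet
        return LazyDataset(lambda: [x for x in self._materialize() if pred(x)])

    def cache(self):
        self._persist = True
        return self

    def _materialize(self):
        if self._persist and self._cached is not None:
            return self._cached
        result = self._compute()
        if self._persist:
            self._cached = result
        return result

    def count(self):
        # action: forces evaluation
        return len(self._materialize())

calls = []  # records every invocation of the mapped function

def square(x):
    calls.append(x)
    return x * x

squares = LazyDataset.from_list([1, 2, 3, 4]).map(square)
calls_after_transform = len(calls)   # nothing has run yet

squares.count()
squares.count()
calls_without_cache = len(calls)     # recomputed for each action

calls.clear()
cached = LazyDataset.from_list([1, 2, 3, 4]).map(square).cache()
cached.count()
cached.count()
calls_with_cache = len(calls)        # computed once, then reused

print(calls_after_transform, calls_without_cache, calls_with_cache)
```

Counting the calls to `square` makes the difference visible: no calls until an action runs, one full pass per action by default, and a single pass once the dataset is cached.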

Spark Essentials: RDD
Scala:

    scala> val data = Array(1, 2, 3, 4, 5)
    data: Array[Int] = Array(1, 2, 3, 4, 5)

    scala> val distData = sc.parallelize(data)
    distData: spark.RDD[Int] = spark.ParallelCollection@10d13e3e

Python:

    >>> data = [1, 2, 3, 4, 5]
    >>> data
    [1, 2, 3, 4, 5]

    >>> distData = sc.parallelize(data)
    >>> distData
    ParallelCollectionRDD[0] at parallelize at PythonRDD.scala:229

Spark Essentials: RDD
Spark can create RDDs from any file stored in HDFS or other storage systems supported by Hadoop, e.g., local file system, Amazon S3, Hypertable, HBase, etc.
Spark supports text files, SequenceFiles, and any other Hadoop InputFormat, and can also take a directory or a glob

Spark Essentials: RDD
Scala:

    scala> val distFile = sc.textFile("README.md")
    distFile: spark.RDD[String] = spark.HadoopRDD@1d4cee08

Python:

    >>> distFile = sc.textFile("README.md")
    14/04/19 23:42:40 INFO storage.MemoryStore: ensureFreeSpace(36827) called with curMem=0, maxMem=318111744
    14/04/19 23:42:40 INFO storage.MemoryStore: Block broadcast_0 stored as values to memory (estimated size 36.0 KB, free 303.3 MB)
    >>> distFile
    MappedRDD[2] at textFile at NativeMethodAccessorImpl.java:-2

Spark Essentials: Transformations
Transformations create a new dataset from an existing one
All transformations in Spark are lazy: they do not compute their results right away – instead they remember the transformations applied to some base dataset
- optimize the required calculations
- recover from lost data partitions

Spark Essentials: Transformations

| transformation | description |
|---|---|
| map(func) | return a new distributed dataset formed by passing each element of the source through a function func |
| filter(func) | return a new dataset formed by selecting those elements of the source on which func returns true |
| flatMap(func) | similar to map, but each input item can be mapped to 0 or more output items (so func should return a Seq rather than a single item) |
| sample(withReplacement, fraction, seed) | sample a fraction fraction of the data, with or without replacement, using a given random number generator seed |
| union(otherDataset) | return a new dataset that contains the union of the elements in the source dataset and the argument |
| distinct([numTasks]) | return a new dataset that contains the distinct elements of the source dataset |

Spark Essentials: Transformations

| transformation | description |
|---|---|
| groupByKey([numTasks]) | when called on a dataset of (K, V) pairs, returns a dataset of (K, Seq[V]) pairs |
| reduceByKey(func, [numTasks]) | when called on a dataset of (K, V) pairs, returns a dataset of (K, V) pairs where the values for each key are aggregated using the given reduce function |
| sortByKey([ascending], [numTasks]) | when called on a dataset of (K, V) pairs where K implements Ordered, returns a dataset of (K, V) pairs sorted by keys in ascending or descending order, as specified in the boolean ascending argument |
| join(otherDataset, [numTasks]) | when called on datasets of type (K, V) and (K, W), returns a dataset of (K, (V, W)) pairs with all pairs of elements for each key |
| cogroup(otherDataset, [numTasks]) | when called on datasets of type (K, V) and (K, W), returns a dataset of (K, Seq[V], Seq[W]) tuples – also called groupWith |
| cartesian(otherDataset) | when called on datasets of types T and U, returns a dataset of (T, U) pairs (all pairs of elements) |
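The semantics of the key-based transformations in the table can be checked on small lists of pairs. The following is a sketch in plain Python, not the Spark API: `reduce_by_key`, `join`, and `cogroup` are hypothetical helper names that mimic the described results on in-memory lists, with output sorted by key only to keep the results easy to read.

```python
from collections import defaultdict

def reduce_by_key(pairs, func):
    """Aggregate the values of each key with func, as reduceByKey is described."""
    out = {}
    for k, v in pairs:
        out[k] = func(out[k], v) if k in out else v
    return sorted(out.items())

def join(left, right):
    """Inner join: every (v, w) combination for keys present on both sides."""
    by_key = defaultdict(list)
    for k, w in right:
        by_key[k].append(w)
    return [(k, (v, w)) for k, v in left for w in by_key.get(k, [])]

def cogroup(left, right):
    """Group both sides by key: (k, ([v, ...], [w, ...])) for every key seen."""
    keys = sorted({k for k, _ in left} | {k for k, _ in right})
    return [(k, ([v for kk, v in left if kk == k],
                 [w for kk, w in right if kk == k])) for k in keys]

# hypothetical sample data
a = [("x", 1), ("y", 2), ("x", 3)]
b = [("x", "p"), ("z", "q")]

summed = reduce_by_key(a, lambda u, v: u + v)
joined = join(a, b)
grouped = cogroup(a, b)

print(summed)
print(joined)
print(grouped)
```

Note how `join` drops keys that appear on only one side, while `cogroup` keeps them with an empty list for the missing side.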

Spark Essentials: Transformations
Scala:

    val distFile = sc.textFile("README.md")
    distFile.map(l => l.split(" ")).collect()
    distFile.flatMap(l => l.split(" ")).collect()

distFile is a collection of lines

Python:

    distFile = sc.textFile("README.md")
    distFile.map(lambda x: x.split(' ')).collect()
    distFile.flatMap(lambda x: x.split(' ')).collect()

Spark Essentials: Transformations
The functions passed to map() and flatMap() are closures

Scala:

    val distFile = sc.textFile("README.md")
    distFile.map(l => l.split(" ")).collect()
    distFile.flatMap(l => l.split(" ")).collect()

Python:

    distFile = sc.textFile("README.md")
    distFile.map(lambda x: x.split(' ')).collect()
    distFile.flatMap(lambda x: x.split(' ')).collect()

Looking at the output, how would you compare results for map() vs. flatMap()?
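One way to explore the map() vs. flatMap() question without a cluster is to mimic both on a small list. This is a sketch in plain Python, not the Spark API; the two sample lines are invented, a list comprehension stands in for map(), and `itertools.chain.from_iterable` stands in for the flattening that flatMap() performs.

```python
from itertools import chain

# hypothetical stand-in for the lines of README.md
lines = ["hello big data", "hello spark"]

# map: one output element per input line, so the result is a list of lists
mapped = [line.split(" ") for line in lines]

# flatMap: each line yields zero or more words, flattened into one list
flat_mapped = list(chain.from_iterable(line.split(" ") for line in lines))

print(mapped)
print(flat_mapped)
```

The mapped result has as many elements as there are lines, while the flattened result has as many elements as there are words.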

Spark Essentials: Actions

| action | description |
|---|---|
| reduce(func) | aggregate the elements of the dataset using a function func (which takes two arguments and returns one), and should also be commutative and associative so that it can be computed correctly in parallel |
| collect() | return all the elements of the dataset as an array at the driver program – usually useful after a filter or other operation that returns a sufficiently small subset of the data |
| count() | return the number of elements in the dataset |
| first() | return the first element of the dataset – similar to take(1) |
| take(n) | return an array with the first n elements of the dataset – currently not executed in parallel, instead the driver program computes all the elements |
| takeSample(withReplacement, num, seed) | return an array with a random sample of num elements of the dataset, with or without replacement, using the given random number generator seed |
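The requirement that the function given to reduce() be commutative and associative can be seen by reducing the same data under different partitionings. This is a minimal sketch in plain Python, not Spark: `parallel_reduce` is a hypothetical helper that reduces each partition separately and then combines the partial results, which is the general shape of a parallel reduction.

```python
from functools import reduce
from operator import add, sub

def parallel_reduce(data, func, num_partitions):
    """Reduce each partition on its own, then reduce the partial results."""
    size = -(-len(data) // num_partitions)  # ceiling division
    partitions = [data[i:i + size] for i in range(0, len(data), size)]
    partials = [reduce(func, part) for part in partitions]
    return reduce(func, partials)

data = [1, 2, 3, 4, 5, 6]

# addition is commutative and associative: the partitioning does not matter
sum_2 = parallel_reduce(data, add, 2)
sum_3 = parallel_reduce(data, add, 3)

# subtraction is neither: the answer depends on how the data was split
diff_2 = parallel_reduce(data, sub, 2)
diff_3 = parallel_reduce(data, sub, 3)
diff_sequential = reduce(sub, data)

print(sum_2, sum_3)
print(diff_2, diff_3, diff_sequential)
```

With subtraction, each partitioning gives a different answer and none matches the sequential result, which is why such a function is unsafe to pass to a parallel reduce.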

Spark Essentials: Actions

| action | description |
|---|---|
| saveAsTextFile(path) | write the elements of the dataset as a text file (or set of text files) in a given directory in the local filesystem, HDFS or any other Hadoop-supported file system. Spark will call toString on each element to convert it to a line of text in the file |
| saveAsSequenceFile(path) | write the elements of the dataset as a Hadoop SequenceFile in a given path in the local filesystem, HDFS or any other Hadoop-supported file system. Only available on RDDs of key-value pairs that either implement Hadoop's Writable interface or are implicitly convertible to Writable (Spark includes conversions for basic types like Int, Double, String, etc). |
| countByKey() | only available on RDDs of type (K, V). Returns a Map of (K, Int) pairs with the count of each key |
| foreach(func) | run a function func on each element of the dataset – usually done for side effects such as updating an accumulator variable or interacting with external storage systems |

Spark Essentials: Actions
Scala:

    val f = sc.textFile("README.md")
    val words = f.flatMap(l => l.split(" ")).map(word => (word, 1))
    words.reduceByKey(_ + _).collect.foreach(println)

Python:

    from operator import add
    f = sc.textFile("README.md")
    words = f.flatMap(lambda x: x.split(' ')).map(lambda x: (x, 1))
    words.reduceByKey(add).collect()

Spark Essentials: Persistence
Spark can persist (or cache) a dataset in memory across operations
Each node stores in memory any slices of it that it computes and reuses them in other actions on that dataset – often making future actions more than 10x faster
The cache is fault-tolerant: if any partition of an RDD is lost, it will automatically be recomputed using the transformations that originally created it

Spark Essentials: Persistence

| storage level | description |
|---|---|
| MEMORY_ONLY | Store RDD as deserialized Java objects in the JVM. If the RDD does not fit in memory, some partitions will not be cached and will be recomputed on the fly each time they're needed. This is the default level. |
| MEMORY_AND_DISK | Store RDD as deserialized Java objects in the JVM. If the RDD does not fit in memory, store the partitions that don't fit on disk, and read them from there when they're needed. |
| MEMORY_ONLY_SER | Store RDD as serialized Java objects (one byte array per partition). This is generally more space-efficient than deserialized objects, especially when using a fast serializer, but more CPU-intensive to read. |
| MEMORY_AND_DISK_SER | Similar to MEMORY_ONLY_SER, but spill partitions that don't fit in memory to disk instead of recomputing them on the fly each time they're needed. |
| DISK_ONLY | Store the RDD partitions only on disk. |
| MEMORY_ONLY_2, MEMORY_AND_DISK_2, etc | Same as the levels above, but replicate each partition on two cluster nodes. |

Spark Essentials: Persistence
Scala:

    val f = sc.textFile("README.md")
    val w = f.flatMap(l => l.split(" ")).map(word => (word, 1)).cache()
    w.reduceByKey(_ + _).collect.foreach(println)

Python:

    from operator import add
    f = sc.textFile("README.md")
    w = f.flatMap(lambda x: x.split(' ')).map(lambda x: (x, 1)).cache()
    w.reduceByKey(add).collect()

Spark Essentials: Broadcast Variables
Broadcast variables let the programmer keep a read-only variable cached on each machine rather than shipping a copy of it with tasks
For example, to give every node a copy of a large input dataset efficiently
Spark also attempts to distribute broadcast variables using efficient broadcast algorithms to reduce communication cost

Spark Essentials: Broadcast Variables
Scala:

    val broadcastVar = sc.broadcast(Array(1, 2, 3))
    broadcastVar.value

Python:

    broadcastVar = sc.broadcast(list(range(1, 4)))
    broadcastVar.value

Spark Essentials: Accumulators
Accumulators are variables that can only be “added” to through an associative operation
Used to implement counters and sums, efficiently in parallel
Spark natively supports accumulators of numeric value types and standard mutable collections, and programmers can extend for new types
Only the driver program can read an accumulator’s value, not the tasks

Spark Essentials: Accumulators
Scala:

    val accum = sc.accumulator(0)
    sc.parallelize(Array(1, 2, 3, 4)).foreach(x => accum += x)

    accum.value

Python:

    accum = sc.accumulator(0)
    rdd = sc.parallelize([1, 2, 3, 4])
    def f(x):
        global accum
        accum += x

    rdd.foreach(f)

    accum.value

Spark Essentials: Accumulators
[code as above; the final accum.value is labelled "driver-side"]
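The add-only, driver-reads pattern of accumulators can be sketched without Spark. The following plain-Python toy makes several assumptions: `Accumulator` and `run_task` are hypothetical names rather than the PySpark classes, the tasks run sequentially in one process, and the merge step is a simplified picture of per-task partial results being combined on the driver.

```python
class Accumulator:
    """Toy accumulator: tasks may only add; the driver reads the total."""

    def __init__(self, initial=0):
        self._value = initial

    def add(self, amount):
        self._value += amount

    @property
    def value(self):
        return self._value

def run_task(partition):
    """Simulate one task: add up its partition locally, return the partial."""
    local = Accumulator(0)
    for x in partition:
        local.add(x)
    return local.value

# driver side: create the accumulator, run one task per partition
accum = Accumulator(0)
partitions = [[1, 2], [3, 4]]
for partial in map(run_task, partitions):
    accum.add(partial)   # merge each task's partial result

total = accum.value      # read on the driver only
print(total)
```

Because addition is associative, the order in which partial results are merged does not change the total, which is what lets the updates be combined efficiently in parallel.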

Spark Essentials: API Details
For more details about the Scala/Java API:
tml#org.apache.spark.package
For more details about the Python API:
spark.apache.org/docs/latest/api/python/

Follow-Up

certification:
Apache Spark developer certificate program
- http://oreilly.com/go/sparkcert
- defined by Spark experts @Databricks
- assessed by O’Reilly Media
- establishes the bar for Spark expertise

MOOCs:
Anthony Joseph
UC Berkeley
begins cs100-1xintroduction-big-6181

Ameet Talwalkar
UCLA
begins -cs190-1xscalable-machine-6066

community:
spark.apache.org/community.html
events worldwide: goo.gl/2YqJZK
video + preso archives: spark-summit.org
resources: databricks.com/spark-training-resources
workshops: databricks.com/spark-training

books:
Learning Spark
Holden Karau, Andy Konwinski, Matei Zaharia
O’Reilly (2015*)
p.oreilly.com/product/0636920028512.do

Fast Data Processing with Spark
Holden Karau
Packt

Spark in Action
Chris Fregly
Manning (2015*)
sparkinaction.com/
