Transcription

THE AMD gem5 APU SIMULATOR:MODELING GPUS USING THE MACHINE ISATONY GUTIERREZ, SOORAJ PUTHOOR, TUAN TA*, MATT SINCLAIR,AND BRAD BECKMANNAMD RESEARCH, *CORNELLJUNE 2, 2018

OBJECTIVES AND SCOPE Objectives‒ Introduce the Radeon Open Compute Platform (ROCm)‒ AMD’s Graphics Core Next (GCN) architecture and GCN3 ISA‒ Describe the gem5-based APU simulator Scope‒ Emphasis on the GPU side of the simulatorModelingAPUsystems‒ APU (CPU GPU) model, not discrete GPU‒ Covers GPU arch, GCN3 ISA, and HW-SW interfaces Why are we releasing our code?‒ Encourage AMD-relevant research‒ Modeling ISA and real system stack is important [1]‒ Enhance academic collaborations‒ Enable intern candidates to get experience before arriving‒ Enable interns to take their experience back to school Acknowledgement‒ AMD Research’s gem5 team[1] Gutierrez et al. Lost in Abstraction: Pitfalls of Analyzing GPUs at the Intermediate Language Level. HPCA, 2018.2 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

QUICK SURVEY Who is in our audience?‒‒‒‒Graduate studentsFaculty membersWorking for government research labsWorking for industry Have you written an GPU program?‒ CUDA, OpenCLTM, HIP, HC, C AMP, other languages Have you used these simulators?‒ GPGPU-Sim‒ Multi2Sim‒ gem5‒ Our HSAIL-based APU model Are you familiar with our HPCA 2018 paper?‒ Lost in Abstraction: Pitfalls of Analyzing GPUs at the Intermediate Language Level3 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

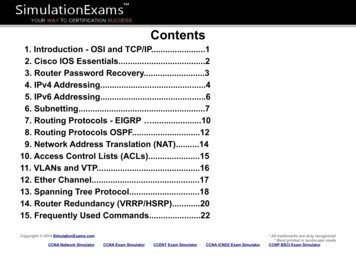

OUTLINETopicPresenterTimeBackgroundTony8:00 – 8:15ROCm, GCN3 ISA, and GPU ArchTony8:15 – 9:15HSA Implementation in gem5Sooraj9:15 – 10:00Break10:00 – 10:30Ruby and GPU Protocol TesterTuan10:30 – 11:15Demo and WorkloadsMatt11:15 – 11:50Summary and QuestionsAll11:50 – 12:004 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

BACKGROUND Overview of gem5‒ Source tree GPU terminology and system overview HSA standard and building blocks‒‒‒‒Coherent shared virtual memoryUser-level queuesSignalsetc.5 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

OVERVIEW OF gem5 Open-source modular platform for system architecture research‒ Integration of M5 (Univ. of Michigan) and GEMS (Univ. of Wisconsin)‒ Actively used in academia and industry Discrete-event simulation platform with numerous models‒ CPU models at various performance/accuracy trade-off points‒ Multiple ISAs: x86, ARM, Alpha, Power, SPARC, MIPS‒ Two memory system models: Ruby and “classic” (M5)‒ Including caches, DRAM controllers, interconnect, coherence protocols, etc.‒ I/O devices: disk, Ethernet, video, etc.‒ Full system or app-only (system-call emulation) Cycle-level modeling (not “cycle accurate”)‒ Accurate enough to capture first-order performance effects‒ Flexible enough to allow prototyping new ideas reasonably quickly See http://www.gem5.org More information available from Jason Lowe-Power’s tutorial‒ http://learning.gem5.org/tutorial/6 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

APU SIMULATOR CODE ORGANIZATION Gem5 top-level directory‒ src/‒ gpu-compute/ GPU core modelgem5‒ mem/protocol/ APU memory modelFor more information about theconfiguration system, see JasonLowe-Power’s tutorial.‒ mem/ruby/ APU memory model‒ dev/hsa/ HSA device modelssrc‒ configs/configs‒ example/ apu se.py sample script‒ ruby/ APU protocol configsgpucomputemem/protocolmem/rubydev/hsaFor the remainder of this talk, files without a directoryprefix are located in src/gpu-compute/7 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

GPU TERMINOLOGYSQC: Sequencer Cache (shared L1 instruction)CU: Compute Unit (SM in NVIDIA terminology)GPU I-CacheScalar CacheSQCGPUCoreGPUCoreGPU GPUCore CoreL1DL1DL1DL2L1DScalar CacheCUTCPCUCUTCPTCPCUTCPTCP: Texture Cache per Pipe(private L1 data)TCCAMD terminologyTCC: Texture Cache per Channel(shared L2)Not shown (per GPU core):LDS: Local Data Share (Shared memory in NVIDIAterminology, sometimes called “scratch pad”)8 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

EXAMPLE APU SYSTEMGPU CPU CORE-PAIR WITH A SHARED DIRECTORYCPUGPUScalar CacheCPU ectory9 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL L2MemoryControllerMemory

AMD TERMINOLOGY IN A NUTSHELL Heterogeneous Systems Architecture (HSA) programming abstraction‒ Standard for heterogeneous compute – supported by AMD hardware‒ Light abstractions of parallel physical hardware‒ Captures basic HSA and OpenCL constructs, plus much moreWork-itemGPU ArchitectureGPUGPU CoreGridThread blockin CUDAGPU CoreGridWorkgroupGrid in CUDAWorkgroupWorkgroupThread inCUDA Grid: N-Dimensional (N 1, 2, or 3) index space‒ Partitioned into workgroups, wavefronts, and work-items10 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL HSA ModelWork-item (WI)Wavefront (WF)Warp in CUDA

SPECIFICATION BUILDING BLOCKSHSA Hardware Building BlocksHSA Software Building Blocks Shared Virtual Memory‒‒‒‒‒Single address spaceCoherentPageableFast access from all componentsCan share pointers Architected User-Level Queues HSA RuntimeOpenSourceHSA Platform System ArchSpecification Signals Platform Atomics Defined Memory Model Context SwitchingIndustry standard, architected requirements forhow devices share memory and communicatewith each other11 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL ‒‒‒‒Implemented by the ROCm runtimeCreate queuesAllocate memoryDevice discoveryOpenSourceHSA System RuntimeSpecification Multiple high-level compilers‒ CLANG/LLVM‒ C , HIP, OpenMP, OpenACC, Python GCN3 Instruction Set ArchitectureOpenSourceGCN3 ISASpecification‒ Kernel state‒ ISA encodings‒ Program flow controlIndustry specifications to enableexisting programming languages totarget the undation

APU SIMULATION SUPPORTHSA Hardware Building BlocksHSA Software Building Blocks Shared virtual memory‒ Single address space‒ Coherent‒ Fast access from all components‒ Can share pointers‒ Pageable Radeon Open Compute platform (ROCm)‒ AMD’s implementation of HSA principles‒ Create queues‒ Device discovery‒ AQL support‒ Allocate memory Architected user-level queues‒ Via architected queuing language (AQL) Machine ISA‒ GCN3 Signals Heterogeneous Compute Compiler (HCC)‒ CLANG/LLVM – direct to GCN3 ISA‒ C , C AMP, HIP, OpenMP, OpenACC, Python Platform atomics Defined memory model‒ Basicacquireand releaseoperationsAcquireand releasesemanticsas implemented by the compiler‒ Merging functional and timing models Context switching12 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL LegendIncluded in this releaseWork-in-progress / may be releasedLonger term work

OUTLINETopicPresenterTimeBackgroundTony8:00 – 8:15ROCm, GCN3 ISA, and GPU ArchTony8:15 – 9:15HSA Implementation in gem5Sooraj9:15 – 10:00Break10:00 – 10:30Ruby and GPU Protocol TesterTuan10:30 – 11:15Demo and WorkloadsMatt11:15 – 11:50Summary and QuestionsAll11:50 – 12:0013 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

HW-SW INTERFACES ROCm – high-level SW stack HW-SW interfaces Kernel launch flow GCN3 ISA overview14 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

ARE YOU READY TO ROCm?SW STACK AND HIGH-LEVEL SIMULATION FLOWApplicationsource HCCHCCGCN3 ELF Code metadata‒ Clang front end and LLVM-based backend‒ Direct to ISA‒ Multi-ISA binary (x86 GCN3)HCCLibraries ROCm Stack‒‒‒‒HCC librariesRuntime layer – ROCrThunk (user space driver) – ROCtKernel fusion driver (KFD) – ROCkUser spaceROCrROCtRuntime loader loadsGCN3 ELF into memory GPU is a HW-SW co-designed machineOS kernel spaceROCk‒ Command processor (CP) HW aids in implementingHSA standard‒ Rich application binary interface (ABI) shader.[hh cc] GPU directly executes GCN3 ISA‒ Runtime ELF loaders for GCN3 binaryHardwareMEMcompute unit.[hh cc]gpu command processor.[hh cc]See https://rocm.github.io for documentation, source, and more.15 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL x86 ELFCommandProcessorCUGPU

DETAILED VIEW OF KERNEL LAUNCHGPU FRONTEND AND HW-SW INTERFACE User space SW talks to GPU via ioctl()User Space SWgpu compute driver.[hh cc]‒ HCC/ROCr/ROCt are off-the-shelf ROCm‒ ROCk is emulated in gem5‒ Handles ioctl commands CP frontendioctl()ROCkdev/hsa/hsa packet processor.[hh cc]MEMdev/hsa/hw scheduler.[hh cc]‒ Two primary components:‒ HSA packet processor (HSAPP)‒ Workgroup dispatcherkernelsDispatcherHSAPP Runtime creates soft HSA queues‒ HSAPP maps them to hardware queues‒ HSAPP schedules active queues Runtime creates and enqueues AQL packetsCPHW QueueSchedulerHW queueHead ptrTail ptrKernel resource requirementsKernel sizeKernel code object pointerMore hsa packet.hhhsa queue.hhHSA software queue16 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL GPUHW Model Components‒ Packets include:‒‒‒‒workCUgroups

DETAILED VIEW OF KERNEL LAUNCHDISPATCHER WORKGROUP ASSIGNMENTdispatcher.[hh cc] Kernel dispatch is resource limitedhsa queue entry.hh‒ WGs are scheduled to CUs Dispatcher tracks status of in-flight/pendingkernels‒ If a WG from a kernel cannot be scheduled, it isenqueued until resources become available‒ When all WGs from a task have completed, thedispatcher frees CU resources and notifies the hostShaderGPU DispatcherCUCUCUID0123AQL PktAQL PktAQL PktHSA Queue Entry(AQL kernel)-Gridwg(0, 0, 0) wg(1, 0, 0)wg(0, 1, 0) wg(1, 1, 0)17 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL 1) Try to dispatch WGs on every cycle2) Pick oldest AQL pkt in queue; if it hasunexecuted WGs, try to schedule them on a CU3) Dispatch WG to CU if there are enough WFslots, enough GPRs, and enough LDS space

DETAILED VIEW OF KERNEL LAUNCHSystem softwareGPU ABI INITIALIZATIONABI ABI‒ Interface between the application binary (ELF) andother components (e.g., OS, ISA, and HW)‒ Function call convention, location of special values,etc. ABI state primarily maintained in register file‒ Some state maintained in the WF context forsimplicityBinaryHW/ISAkernel code.hhAMDKernelCodeRegister File0x7fff CP Kernel argumentsbase address CP responsible for initializing register file state‒ Extracts metadata from code object (GPU ELF binary)‒ Initializes both scalar and vector registers‒ Kernel argument base address, vector condition codes(VCC), etc.VCC HIVCC LOGCN3 ISA application loading a kernel argument# Load 2nd 64b kernel arg into s[2:3]s load dwordx2 s[2:3], s[0:1], 0x0818 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

GPU MICROARCHITECTURE High-level gem5 and Ruby core models gem5 ISA/HW separation‒ Object oriented design‒ Modular, extensible ‒ Possible to support multiple-ISAs GCN microarchitecture gem5’s conceptual pipeline and timing flow GCN3 ISA description gem5 compute unit implementation‒ Pipeline breakdown19 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

GPU CORE MODULESGPU CORE MODULES VS. RUBY MODULESHardware building blocks GPU core is the compute unit‒ Resources inside GPU Core‒ Instruction buffering, Registers, Vector ALUsSQCScalarCacheCUCUCUCU‒ Resources outside GPU Core‒ TCP, TCC, SQC (Ruby based) Shader: object containing all GPU coremodels‒ The top-level view of the GPU in gem5‒ Contains multiple CUs‒ With other miscellaneous components20 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL TCPTCPTCPTCPGPU CoreModulesRubyModulesAPUSimulatorTCCSimulator software modules

ISA DESCRIPTION/MICROARCHITECTURE SEPARATION GPUStaticInst & GPUDynInst‒ Architecture-specific code src/arch/‒ Base instruction classes‒ ISA decoder‒ ISA state, etc.‒ Define API for instruction execution‒ e.g., execute() – perform instruction executionWavefront related interfacesStatic instruction objectsHW BlocksGPU coremodelcomponentsISA specificinstructionclasses andmethods‒ Implemented by ISA-specific instruction classes GPUExecContext & GPUISA‒ Define API for accessing ISA stateShaderCompute UnitsWF ContextGPUStaticInstGPUDynInstGPU Core/ISA API definitionGPUExecContextGCN3 StaticInstGCN3 DecoderOperandsISA StateISA RegistersISA-specific state Object-oriented design‒ Base classes define the API‒ Model uses base class pointers‒ Configuration system instantiates ISA-specificobjectsGPU core stateInstruction and ISA specific classesDynamic instruction informationand interfacesRelevant source:gpu exec context.[hh cc]gpu dyn inst.[hh cc]src/arch/gcn3/gpu static inst.hh cc]src/arch/gcn3/gpu h21 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL src/arch/gcn3/registers.[hh cc]

GPU CORE BASED ON GCN ARCHITECTUREscalar register file.[hh cc]vector register file.[hh cc]Compute UnitSIMD 1PC & IB10 WFsSIMD 2PC & IB10 WFsExecuteMessage &Branch UnitInstruction ArbitrationSQCInstruction FetchSIMD 0PC & IB10 WFsSIMD 3PC & IB10 WFsExport/GDSDecodeVector RFTCCSIMDsVALUVALUVALUVALULDS Decodewavefront.[hh cc]arch/gcn3/gpu decoder.hharch/gcn3/decoder.cc22 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL ScalarUnitScalarCacheLocal DataShare Memlds state.[hh cc]src/mem/protocol/GPU VIPER-TCP.smsrc/mem/protocol/GPU VIPER-TCC.smsrc/mem/protocol/GPU VIPER-SQC.smMore details available here: GCN Architecture Whitepaper www.amd.com/Documents/GCN Architecture whitepaper.pdf

GPU CORE TIMINGCONCEPTUAL TIMING STAGESFetched WFs Ready WFsFetchScoreboardExecuting WFsScheduleExecuteMemorypipelineLocal memory (LDS)Global memory (TCP)Scalar memory Execute-in-execute philosophy Pipeline stages‒‒‒‒‒Fetch: fetch for dispatched WFs - fetch stage.[hh cc] and fetch unit.[hh cc]Scoreboard: Check which WFs are ready - scoreboard check stage.[hh cc]Schedule: Select a WF from the ready pool - schedule stage.[hh cc]Execute: Run WF on execution resource - exec stage.[hh cc]Memory pipeline: Execute LDS/global memory operation‒ local memory pipeline.[hh cc]‒ global memory pipeline.[hh cc]‒ scalar memory pipeline.[hh cc]23 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

GCN3 GPU ISAarch/gcn3/insts/op encodings.[hh cc]arch/gcn3/insts/instructions.[hh cc] Vector and scalar instructionsOp Type‒ Single instruction stream‒ Not really “SIMT”‒ Divergence handled by scalar unit‒ Can directly modify execution mask‒ Jump over basic blocks when EXEC 0 Instructions broken down by OP type‒ Op types map to different functional units inCU‒ The CU can issue one instruction to eachunit in the same cycle‒ Export/GDS not supported in gem5Functional UnitUsageSOPPBranch/MessageUnitBranching, NOPs, Barriers, waitcnts,messagingSOPC/SOPK/SOP1/SOP2Scalar ALUGeneral scalar computation/divergencehandlingSMEMScalar MemoryScalar memory access, cache maintenanceVOPC/VOP1/VOP2/VOP3SIMD UnitGeneral SIMD computationDSLDSPrivate scratch pad memoryMUBUFVector MemoryAccessing vector memory, cachemaintenanceFLATLDS/Vector MemoryAccessing vector memory, may resolve toLDS or system memoryVINTRP/MTBUF/MIMG/EXPVariesPrimarily used by graphics. Not currentlymodeled in gem5; however, theinfrastructure to do so is present.Full GCN3 spec available at: rchitecture-manual/24 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

GPU CORE MODULE INTERNALS4-CU-shared SQCSHARED VS. PRIVATE STRUCTURES Compute unitSQCScalarCache‒ Four 16-wide SIMD units‒ SIMD hosts WFs‒ Private resources to each SIMD‒ Instruction buffering‒ Registers‒ Vector ALUsInstruction FetchCUCUCUCUTCPTCPTCPTCPWF 0-9ContextsTCCWF 10-19ContextsWF 20-29ContextsWF 30-39ContextsInstruction Decode/ArbitrationSIMD 0SIMD 1SIMD 2SIMD 3Scalar Unit‒ Shared resourcesVGPRsVGPRsVGPRsVGPRsSGPRs‒ Fetch and decode‒ TCP‒ ranchUnitLDSTCP25 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

fetch stage.[hh cc]FETCH AND WAVEFRONT CONTEXTSFetch buffer(per WF)fetch unit.[hh cc]4-CU-shared SQC SQC shared by 4 CUs‒ Number of SQCs and CUs are configurable Fetch‒ Shared and arbitrated between SIMDs in a CU‒ Fetch to each SIMD unit‒ Buffers fetched cache lines per WF WF ContextsInstruction FetchWF 0-9ContextsWF 10-19ContextsWF 20-29ContextsWF 30-39ContextsPC0PC1Instruction DataInstruction DataInstruction Decode/ArbitrationSIMD 0SIMD 1SIMD 2SIMD 3Scalar torALUVectorALUIntegerALU‒ 10 WFs per SIMD, 40 per CU‒ PC and decoded instruction buffers (IB)‒ Register file and LDS allocationBranchUnitLDS‒ ¼ of WF executes each cycle‒ 4 cycles needed to fully execute single SIMDinstructionTCPwavefront.[hh cc]WF 1WF 0PCPCIB26 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL WF 9PCIBIB

DECODE AND ISSUEscoreboard check stage.[hh cc]WF ContextsScoreboardIB4-CU-shared SQCInstruction data decodedinto instruction bufferReady List Per Execution UnitLDSSIMDScalarGlobal MemFetch buffer(per WF)Instruction FetchWF 0-9ContextsWF 10-19ContextsWF 20-29ContextsWF 30-39ContextsInstruction Decode/Arbitrationschedule stage.[hh cc]Read OperandsWF ArbitrationExecution UnitsLDSSIMDScalarSIMD 0SIMD 1SIMD 2SIMD 3Scalar torALUVectorALUIntegerALUGlobal MemLDS Instructions are decoded out of fetch buffers Instruction arbitrationscheduler.[hh cc]‒ Can issue to each functional unit each cyclescheduling policy.hh‒ Finds ready WFs‒ Scheduling policy dictates which WFs have priority‒ Oldest first, easy to add others27 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL BranchUnitTCP

vector register file.[hh cc]REGISTER FILESscalar register file.[hh cc]simple pool manager.[hh cc]static register manager policy.[hh cc]4-CU-shared SQC General Purpose Registers (GPRs)Instruction Fetch‒ Vector registers (VGPR) partitioned per SIMDSIMD 0‒ Configurable size‒ Because each SIMD executes independent WFV0WF 0-9ContextsWF0 – V0, N ‒ 32-bit wide‒ Combine adjacent VGPRs for 64-bit or 128-bit data‒ Each WF also has scalar general purpose registers(SGPRs) Register Allocation Done by a Simple Pool Manager‒ Only allows one WG at a time‒ Statically mapped virtual physical register indexWF 10-19ContextsWF 20-29ContextsInstruction Decode/ArbitrationVN-1VNVN 1VN 2SIMD 0SIMD 1SIMD 2SIMD 3Scalar torALUVectorALUIntegerALUBranchUnitLDS‒ base, limit pair of registers specify GPR allocation‒ Modular design – more advanced pool managers can beswapped into the VRF seamlessly‒ Simple timing model with constant delayTCPThis is an area where gem5 user contributions would be extremely valuable.28 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL WF 30-39Contexts

VECTOR ALUs4-CU-shared SQC 16-lane vector pipeline per SIMDInstruction Fetch‒ Each lane has a set of functional units‒ One work-item per laneWF 0-9Contexts 4 cycles to execute a WF for all 64 work-itemsWF 10-19ContextsWF 20-29ContextsWF 30-39ContextsInstruction Decode/Arbitration‒ In gem5, 64 work-items are executed in one tick and ticks aremultiplied by 4 SIMD execution may take longer if work-items in WF havedissimilar behaviorsSIMD 0SIMD 1SIMD 2SIMD 3Scalar torALUVectorALUIntegerALU‒ Example 1: Branch (or spatial) divergence‒ Branches executed through predication‒ When control flow diverges, all lanes take all pathsBranchUnitLDS‒ Example 2: Memory (or temporal) divergence‒ Longer access latency by one work-item stalls entire WFTCPcompute unit.[hh cc]Vector ALU in SIMDLane 029 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL Lane 1Lane 15

GPU CORE TIMINGgpu dyn inst.[hh cc]HANDLING MEMORY INSTRUCTIONSarch/gcn3/instructions.[hh cc]GPU dynamic memory instructionarch/gcn3/insts/op encodings[hh cc] Memory instructions generate memory requests‒ Part of GPU instruction definition (ISA-specific) Three phases‒ execute()‒ Read operands, calculate address, increment wait count,and issue to appropriate memory pipe‒ initiateAcc()‒ Issue request to memory system‒ completeAcc()‒ For loads write back data. Stores do nothing. New machine ISAs can use this capability tosupport their own memory instructions Individual stages contribute to the memoryinstruction timing‒ Additionally memory end timing handled by Ruby andmemory technology parameters Memory dependencies are preserved usingwaitcnts30 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL cal memory pipeline.[hh cc]WritebackExample GCN3 code:flat load dword v4, v[4:5]flat load dword v16, v[8:9]flat load dword v23, v[14:15]flat load dword v10, v[10:11]global memory pipeline.[hh cc]scalar memory pipeline.[hh cc]2s waitcntcnt(3)v ashrrevv5, 31, v431.flat load writes v42.Waitcnt specifies wait count value must be 33.Arithmetic shift later reads from v44.Waitcnt waits until at least #1 is finished

AddressWrite data In gem5:TCPTagCoalescerTo shared TCCVECTOR MEMORY EXECUTIONDataRead data‒ Address calculation: arch/gcn3/insts/op encodings.hh‒ Address .[hh cc]mem/ruby/system/VIPERCoalescer.[hh cc]mem/protocol/GPU VIPER-TCP.smmem/protocol/GPU VIPER-TCC.sm LDS‒‒‒‒‒User-managed address spaceScratchpad for WFs in workgroupUsed for data sharing and synchronization within workgroupCleared when workgroup completesIn gem5, functional model with a pointer per workgroup4-CU-shared SQCInstruction FetchWF 0-9ContextsWF 10-19ContextsWF 20-29ContextsInstruction Decode/ArbitrationSIMD 0SIMD 1SIMD 2SIMD 3Scalar hUnitLDSTCP31 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL WF 30-39ContextsTCC

CONTROL FLOW DIVERGENCESource code:SIMT VS. VECTOR EXECUTION MODELif (i 31) {*x 84;} else if (i 16) {HSAIL*x 90;}BB0cmp lt c0, s0, 32Execute taken pathfirst & flush IBcbr c0, @BB2BB1BB2st 84, [ d0]cmp gt c0, s0, 15br @BB4cbr c0, @BB4Branch overBB2 & BB3,flush IBBB3Fall through to BB3st 90, [d0]BB4retReconvergence point reached, HWinitiated jump to divergent path32 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL Instruction buffercmpretstcbrbrstretretbr

CONTROL FLOW DIVERGENCESource code:SIMT VS. VECTOR EXECUTION MODELif (i 31) {GCN3BB0cmp le vcc, 32, v0*x 84;s load s[0:1], s[6:7], 0x0s and saveexec s[2:3], vcc} else if (i 16) {HSAILv mov v0, 0x00000054*x 90;s cbranch execz @BB2}BB0cmp lt c0, s0, 32BB1Instruction buffers waitcnt lgkmcnt(0)cbr c0, @BB2cmps loads and saveexecv movv mov v[1:2], s[0:1]BB1BB2st 84, [ d0]cmp gt c0, s0, 15br @BB4cbr c0, @BB4flat store v[1:2], v0BB2s andn2 exec, s[2:3], execs cbranch execz @BB5BB3st 90, [d0]BB3BB4v cmp ge vcc, 15, v0rets and saveexec s[4:5], vccBranches are optimizations forcase when EXEC 0 for a BBv mov v0, 0x0000005as cbranch execz @BB5BB4s waitcnt lgkmcnt(0)v mov v[1:2], s[0:1]flat store v[1:2], v0BB5s endpgm33 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

OUTLINETopicPresenterTimeBackgroundTony8:00 – 8:15ROCm, GCN3 ISA, and GPU ArchTony8:15 – 9:15HSA Implementation in gem5Sooraj9:15 – 10:00Break10:00 – 10:30Ruby and GPU Protocol TesterTuan10:30 – 11:15Demo and WorkloadsMatt11:15 – 11:50Summary and QuestionsAll11:50 – 12:0034 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

WHAT IS HSA?Heterogeneous System ArchitectureProcessor design that makes it easy to harness the entire computingpower of GPUs for faster and more power-efficient devices, includingpersonal computers, tablets, smartphones, and serversBringing GPU performance to a wide variety of applications35 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

KEY FEATURES OF HSAhUMAhCommhQ36 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL Heterogeneous Unified Memory ArchitectureHeterogeneous Communication via Signals and AtomicsHeterogeneous Queuing

TRADITIONAL DISCRETE GPU Separate memory Separate addr spaceCPU CPUCPU12 NCU1CU2CUCU3 M Explicit data copyingPCIe Coherent SystemMemory‒ No pointer-baseddata structures‒ High latency‒ Low bandwidthGPU Memory Need lots ofcompute on GPU toamortize copyoverhead Very limited GPUmemory capacity37 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

hUMA UNIFIED MEMORY Unified address spaceCPU CPUCPU CU12 N1CU2CUCU3 M‒ GPU uses user virtual addresses‒ Fully coherent No explicit copying‒ Data movement on demandUnified Coherent Memory Pointer-based data structuresshared across CPU & GPU Pageable virtual addresses‒ No GPU capacity constraints38 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

HSA ATOMICS Atomic memory updates fundamental to efficient thread synchronization‒ Implement primitives like mutexes, semaphores, histograms, , previously only implemented on CPU HSA supports 32bit or 64bit values for atomic ops‒ CAS, SWAP, add, increment, sub, decrement, ‒ and other common arithmetic and logic atomic ops On PCI-Express system, atomics map to PCI-E atomics HSA specifies a well-defined “SC for HRF” memory model‒ A variant of “Release Consistency” model‒ Acquire: pull latest data (to me)‒ Release: push latest data (to others)‒ Compatible with C 11, Java, OpenCL, and .NET memory models‒ Details: “HSA Platform System Arch Specification”, http://hsafoundation.com39 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

THE HSA SIGNALS INFRASTRUCTURE Hardware-assisted signaling and synchronization primitives‒ Memory semantics, equivalent to atomics‒ e.g., 32bit or 64bit value, content updated atomically‒ Threads can wait on a value‒ Power-efficient synchronization between CPU and GPU threads Allows one-to-one, many-to-one, and one-to-many signaling‒ Used by system software, runtime, and application SW‒ Infrastructure to build higher-level synchronization primitives like mutexes, semaphores, etc. Updating the value of a signal is equivalent to sending the signal‒ Release semantics: push data to others Waiting on a signal is also permitted‒ Via a wait instruction or via a runtime API‒ Acquire semantics: pull data from others40 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL

TRADITIONAL COMMAND AND DISPATCH FLOWApp BApp BApp CDirect3DUserModeDriverDirect3DUserModeDriver41 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL CGPUATaskQueue

hQ COMMAND AND DISPATCH FLOWAAAApp AABApp B User-mode application talks directlyto the hardwareA‒ HSA Architected Queuing Language (AQL)defines vendor-independent format‒ No system call‒ No kernel driver involvementBBGPUCCCApp C42 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL Hardware scheduling Greatly reduced dispatch overhead less overhead to amortize profitable to offload smaller tasks Device enqueue: GPU kernels canself-enqueue additional tasks(dynamic parallelism)

NATIVE SUPPORT FOR DATA-DEPENDENT TASKSEXPOSE DIRECTED ACYCLIC GRAPHS (DAG) TO HARDWARETask ABarrier PacketTask BCompletion Signal ACompletion Signal BTask CBAHSA Queues43 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL CBP

COMMAND QUEUE OVERSUBSCRIPTION CHALLENGEHSAQueues(QID 0)HSAQueues(QID 1)Task: BTask: ATask: DBarrier PktHSAQueues(QID 2)HSAQueues(QID 3)Task: CBarrier PktBACDHWQueues44 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL Sooraj Puthoor, Xulong Tang, Joseph Gross, and Bradford M. Beckmann. 2018. Oversubscribed CommandQueues in GPUs. In Proceedings of the 11th Workshop on General Purpose GPUs (GPGPU-11). February 2018.

QUEUE SCHEDULING HARDWARECPUioctl()User Space SWROCkgem5 maintains active listinside HW queue schedulerMemoryComponentdriver*Q2*Q1*Q0HSA software HSA softwarequeue #0queue #1Active listCPHW QueueSchedulerDispatcherRegisteredQ0 Q2listHW queueHSAPPworkCUgroupsHW Model Components45 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL GPUSource filegpu-compute/gpu compute driver.ccdev/hsa/kfd ioctl.hhardwareschedulerdev/hsa/hw scheduler.[hh cc]packet processordev/hsa/hsa packet processor.[hh cc]dispatchergpu-compute/dispatcher.[hh cc]

DOORBELL PAGES AND EVENT PAGESCPUioctl()1.HSADriver::mmap(mmap args) runtime calls mmap on the driver mmap offset distinguishes event page vs doorbell page mmapUser Space SWHead ptr2. driver allocates doorbell page and returns the page address For doorbells - Driver maps the page address to PIO address in thePT For events - Event pages are user pages3. runtime allocates doorbell address for each queue based onqueue ID. Event pages are currently unused; model relies on functionalinterfaces for event notification.ROCkTail ptrHSA software queueCPHW QueueDispatcherSchedulerHW queueHSAPPMemoryworkCUgroupsHW Model Components46 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL GPU

HSA QUEUE CREATIONCPUioctl()User Space SWROCk1. hsa queue create(some args)2. GPUComputeDriver::ioctl(tc,AMDKFD IOC CREATE QUEUE)Head ptrTail ptr3. HWScheduler::registerNewQueue(some args)HSA software queueCPHW QueueDispatcherSchedulerHW queueHSAPP47 THE AMD gem5 APU SIMULATOR JUNE 2, 2018 ISCA 2018 TUTORIAL MemoryworkCUgroupsHW Model ComponentsGPU

QUEUE SWAPPING LOGICCPUioctl()active list (Hardware scheduler)registered list (Packet processor)// Wakeup every wakeupDelay ticks1. HWScheduler::wakeup()2. HWScheduler::contextSwitchQ ()User Space SWROCkHead ptrTail ptrHSA software queueCPHW QueueSchedulerHW queueDispatcherHSAPPworkCUgroupsHW Model Compone

‒mem/ruby/ APU memory model ‒dev/hsa/ HSA device models ‒configs/ ‒example/ apu_se.pysample script ‒ruby/ APU protocol configs For more information about the configuration system, see Jason Lowe-Power’stutorial. For the remainder of this talk, files w