Transcription

“Train-by-weight (TBW): Accelerated Deep Learning by Data Dimensionality Reduction”
Michael Jo and Xingheng Lin – Rose-Hulman Institute of Technology
April 27, 2021

Michael Jo
Michael Jo received his Ph.D. in Electrical and Computer Engineering in 2018 from the University of Illinois at Urbana-Champaign. He is currently an assistant professor at Rose-Hulman Institute of Technology in the Department of Electrical and Computer Engineering. His current research interests are accelerated embedded machine learning, computer vision, and the integration of artificial intelligence and nanotechnology.

Xingheng Lin
Xingheng Lin was born in Jiangxi Province, China, in 2000. He is currently pursuing the B.S. degree in computer engineering at Rose-Hulman Institute of Technology. His primary research interests are Principal Component Analysis (PCA)-based machine learning and deep learning acceleration. Besides his primary research project, Xingheng is currently working on pattern recognition of rapid saliva COVID-19 test responses, a collaboration with 12-15 Molecular Diagnostics.

Trained-by-weight (TBW): Accelerated Deep Learning by Data Dimensionality Reduction
April 27th, 2021
Xingheng Lin and Michael Jo
Electrical and Computer Engineering
Rose-Hulman Institute of Technology

Agenda
- Introduction and Motivation
- Dimensionality Reduction by Linear Classifiers
- Proposed Idea: Combination of Linear and Non-linear Classifiers
- Applications and Experiment Results
- Discussion and Future Work
- Conclusion

Image Revolution
[Figure: image sizes grow from 24x24 to 224x224 (x 87 more pixels), 1300x780 (x 1,760!) and 3840x2160 (x 14,400!!!).]
Sources: J. Bernhard, “Deep Learning With PyTorch,” Medium, 13-Jul-2018. [Online]. Available: https://medium.com/@josh 2774/deep-learning-withpytorch-9574e74d17ad. [Accessed: 21-Nov-2020]. “wallpaperix.com”, Popular Cat and Dog Wallpaper. “imgur.com”, Beautiful macaw. Brownlee, “How to Develop a CNN for MNIST Handwritten Digit Classification,” Machine Learning Mastery, 24-Aug-2020.
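The multipliers on this slide are pixel counts relative to the 24x24 image. The quick check below recomputes them. The helper name `pixel_ratio` is ours and is only for illustration.

```python
# Pixel counts of the slide's image sizes, relative to a 24x24 image.
BASE_PIXELS = 24 * 24  # 576

def pixel_ratio(width: int, height: int, base: int = BASE_PIXELS) -> float:
    """How many times more pixels a width x height image has than the base image."""
    return (width * height) / base

for w, h in [(224, 224), (1300, 780), (3840, 2160)]:
    print(f"{w}x{h}: {pixel_ratio(w, h):,.0f}x")
```

This prints 87x, 1,760x and 14,400x, which matches the slide.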

Background of image classification: Artificial Neural Network (ANN)
- Each image pixel is an input node
- Suitable for small inputs
- Trained by back propagation
Image source: n-of-the-fruits360-image-3c56affa4491
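An ANN is suitable mainly for small inputs because it has one input node per pixel. The size of its first layer therefore grows linearly with the pixel count. The sketch below assumes a grayscale image and a single fully connected hidden layer of 14 nodes, the hidden size used on the TBW-ANN slide. `first_layer_params` is a hypothetical helper.

```python
def first_layer_params(height: int, width: int, hidden: int = 14, channels: int = 1) -> int:
    """Weights plus biases of a fully connected layer fed with a flattened image."""
    inputs = height * width * channels  # one input node per pixel value
    return inputs * hidden + hidden     # weight matrix + one bias per hidden node

for h, w in [(10, 10), (32, 32), (224, 224)]:
    print(f"{h}x{w} input: {first_layer_params(h, w):,} parameters")
```

With 14 hidden nodes, the first layer grows from 1,414 parameters (10x10) to 14,350 (32x32) and 702,478 (224x224).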

Convolutional Neural Network (CNN)
- CNNs have become deeper and deeper.
Sources: B. R. (J. Ng), “Using Artificial Neural Network for Image Classification,” Medium, 02-May-2020. S.-H. Tsang, “Review: GoogLeNet (Inception v1) - Winner of ILSVRC 2014 (Image Classification),” Medium, 18-Oct-2020.

Training data and …
[Figure: panels labeled a, b, c.]
Source: El Shawi et al., “DLBench: a comprehensive experimental evaluation of deep learning frameworks,” Cluster Computing (2021), 1-22, doi:10.1007/s10586-021-03240-4.

Re-training data and time
[Cartoon: a trained model says “It’s a ‘Dog’” (Dog, 0.98) and “It’s a ‘Cat’” (Cat, 0.99). New dog images get low scores (Dog, 0.53; Dog, 0.24; Dog, 0.33). Told “These are also ‘dog’s,” the model replies: “Um. I am not trained for this, but I will train myself again for these new ‘dog’s.”]
Images: .ca, https://www.pinterest.com

Re-training data and time
[Cartoon: the model recognizes Dog (0.98) and Cat (0.99), but an image of a new class scores only Dog, 0.12 and Cat, 0.23. The model says: “Um. I am not trained for this. Please train me for this class.” Caption: x 1M images and another day for training.]
Image: https://www.petbacker.com

tinyML: Internet of Things / Cyber-Physical Systems
“The global 5G IoT market size is projected to grow from USD 2.6 billion in 2021 to USD 40.2 billion by 2026.” (Research and Markets, March …)
Source: crocontrollers-for-machine-learning-and-ai/

tinyML: Internet of Things / Cyber-Physical Systems
Challenges from limited hardware compared to laptops, desktops, clusters, servers, etc.

| Model | Google Coral Dev Board | NVIDIA Jetson Nano Dev Kit | Raspberry Pi 4 Computer Model B 4GB | ROCK Pi 4 Model B 4GB |
|---|---|---|---|---|
| Core / speed | NXP i.MX 8M, quad-core Arm A53 @ 1.5 GHz | Quad-core ARM A57 @ 1.43 GHz | Broadcom BCM2711, Cortex-A72 ARM @ 1.5 GHz | Dual Cortex-A72, frequency 1.8 GHz |
| GPU | Integrated GC7000 Lite Graphics | 128-core NVIDIA Maxwell GPU | Broadcom VideoCore VI | Mali T860MP4 GPU |
| Memory | 1 GB LPDDR4 | 4 GB 64-bit LPDDR4, 25.6 GB/s | 1 GB, 2 GB or 4 GB LPDDR4 | 64-bit dual-channel LPDDR4 @ 3200 Mb/s, 4 GB, 2 GB or … |

Source: microcontrollers-for-machine-learning-and-ai/

Motivation
- Accelerate the time-consuming model training process to support tinyML.
- Reduce the dependence on expensive computational devices.

Linear classifier: Principal Component Analysis (PCA)
Source: Powell and L. Lehe, “Principal Component Analysis explained visually”
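PCA finds the directions along which the data vary most. This can be reproduced on a 2-D toy example like the visual explanation cited above. This is a sketch on synthetic points. `first_principal_component` is a hypothetical helper that takes the top eigenvector of the sample covariance matrix.

```python
import numpy as np

def first_principal_component(points):
    """Unit vector along which the (row-wise) samples in `points` vary the most."""
    centered = points - points.mean(axis=0)
    cov = np.cov(centered, rowvar=False)       # features are the columns
    eigvals, eigvecs = np.linalg.eigh(cov)     # eigh returns ascending eigenvalues
    return eigvecs[:, -1]                      # eigenvector of the largest eigenvalue

rng = np.random.default_rng(0)
t = rng.normal(size=500)
# 2-D points spread along the diagonal y = x, with a little perpendicular noise.
points = np.column_stack([t, t]) + 0.1 * rng.normal(size=(500, 2))
pc1 = first_principal_component(points)       # close to +/-(0.707, 0.707)
```

The sign of an eigenvector is arbitrary, so only the direction (the diagonal) is meaningful.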

Advantages of PCA
- Reduced input size for training
- The most essential information is captured by selecting the components that matter
Image source: esults-of-a-PCA-analysis
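A common way to decide which components matter is to keep the smallest number whose cumulative explained variance reaches a threshold. The sketch below assumes a 95% threshold and uses the singular values of the centered data. Both the threshold and the name `components_for_variance` are illustrative choices, not values from the talk.

```python
import numpy as np

def components_for_variance(X, target=0.95):
    """Smallest k whose top-k principal components explain >= target of the variance.

    X has one sample per row. Returns (k, cumulative_ratio).
    """
    Xc = X - X.mean(axis=0)
    s = np.linalg.svd(Xc, compute_uv=False)     # singular values, descending
    var = s ** 2                                # proportional to component variances
    cumulative = np.cumsum(var) / var.sum()
    k = int(np.searchsorted(cumulative, target)) + 1
    return min(k, len(cumulative)), cumulative

rng = np.random.default_rng(0)
X = 0.01 * rng.normal(size=(500, 10))                     # weak noise in all 10 features
X[:, :3] += rng.normal(size=(500, 3)) * [10.0, 5.0, 4.0]  # three strong directions
k, cumulative = components_for_variance(X)                # k == 3 here
```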

Dimensionality Reduction
Source: MIT-CBCL Database: recognition-database.html

Dimensionality Reduction

Proposed Idea
- The input image data set is reshaped into one input matrix.
- Each column represents one sample.
- The feature matrix is extracted by decorrelating the input matrix.
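The three steps of the proposed idea can be sketched with NumPy. This is a minimal illustration under our own assumptions:

- The images are grayscale.
- PCA is computed through the SVD of the centered matrix.
- `build_input_matrix` and `decorrelate` are hypothetical helper names.
- The random images only stand in for the face data set.

```python
import numpy as np

def build_input_matrix(images):
    """Reshape a stack of images (n, h, w) into a (h*w, n) matrix, one column per sample."""
    n = images.shape[0]
    return images.reshape(n, -1).T

def decorrelate(X, k):
    """PCA on a column-sample matrix X (d x n).

    Returns W (d x k, top-k principal directions), the mean column (d x 1),
    and the weighted data Z = W.T @ (X - mean) (k x n), whose rows are uncorrelated.
    """
    mean = X.mean(axis=1, keepdims=True)
    Xc = X - mean
    # Left singular vectors of Xc are the eigenvectors of the covariance Xc @ Xc.T / (n - 1),
    # already sorted by decreasing singular value.
    U, _, _ = np.linalg.svd(Xc, full_matrices=False)
    W = U[:, :k]
    return W, mean, W.T @ Xc

rng = np.random.default_rng(0)
images = rng.random((200, 32, 32))              # stand-in for 200 grayscale 32x32 images
X = build_input_matrix(images)                  # shape (1024, 200)
W, mean, Z = decorrelate(X, k=100)              # Z has shape (100, 200)
first_weighted_image = Z[:, 0].reshape(10, 10)  # one 10x10 "weighted image"
```

Using the SVD avoids forming the 1024 x 1024 covariance matrix explicitly. It gives the same principal directions as an eigendecomposition of that matrix.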

Weighted Input Matrix after PCA Dimensionality Reduction
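The weighted input matrix Z can be written in matrix form. This assumes standard PCA on the column-sample matrix from the previous slide, and the notation is ours, not the authors':

$$
\begin{aligned}
X &= [\,x_1, \dots, x_n\,] \in \mathbb{R}^{d \times n}, \qquad
\mu = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad
\bar{X} = X - \mu\,\mathbf{1}^{\top}, \\
C &= \frac{1}{n-1}\,\bar{X}\bar{X}^{\top} = V \Lambda V^{\top}, \qquad
\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_d, \\
W &= [\,v_1, \dots, v_k\,] \in \mathbb{R}^{d \times k}, \qquad
Z = W^{\top}\bar{X} \in \mathbb{R}^{k \times n}, \\
\frac{1}{n-1}\,Z Z^{\top} &= W^{\top} C\, W = \operatorname{diag}(\lambda_1, \dots, \lambda_k).
\end{aligned}
$$

For the face data, d = 32 x 32 = 1024 and k = 100, so each column of Z reshapes into one 10x10 weighted image. The last line shows why the rows of Z are decorrelated: W keeps only eigenvectors of C, so the projected covariance is diagonal.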

Proposed Idea
- Combine a linear classifier and a non-linear classifier.

Application: TBW-ANN (Artificial Neural Network)
- The reduced input is used as the training data of the ANN.
- Back propagation takes less time.
[Figure: original 32x32 face images are reduced to 10x10 weighted images. Z (weighted data) is a matrix of features by data samples, grouped into classes 1 to n. Each sample is reshaped to 100x1 and fed to an input layer of 100 nodes, a hidden layer of 14 nodes, and an output layer.]
Image source: t-are-they
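The TBW-ANN stage can be sketched in NumPy. The sketch assumes the weighted data Z (100 x n, one column per image) has already been produced by PCA. The 100-14-n layer sizes follow the slide. Everything else is our assumption: the tanh hidden activation, softmax output, cross-entropy loss, learning rate, and full-batch gradient descent. `train_ann` and `predict` are hypothetical names, and the random Z only stands in for real weighted face data.

```python
import numpy as np

def train_ann(Z, y, n_classes, hidden=14, lr=0.5, iters=500, seed=0):
    """Train a one-hidden-layer network (tanh hidden units, softmax outputs) by gradient descent.

    Z: (k, n) weighted data, one column per sample (k = 100 for 10x10 images).
    y: (n,) integer labels in [0, n_classes).
    Returns (W1, b1, W2, b2).
    """
    rng = np.random.default_rng(seed)
    k, n = Z.shape
    W1 = rng.normal(0.0, 1.0 / np.sqrt(k), (hidden, k))
    b1 = np.zeros((hidden, 1))
    W2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), (n_classes, hidden))
    b2 = np.zeros((n_classes, 1))
    Y = np.eye(n_classes)[:, y]                      # one-hot targets, (n_classes, n)
    for _ in range(iters):
        # Forward pass: tanh hidden layer, softmax output.
        H = np.tanh(W1 @ Z + b1)
        logits = W2 @ H + b2
        P = np.exp(logits - logits.max(axis=0))      # subtract max for stability
        P /= P.sum(axis=0)
        # Back propagation of the mean cross-entropy loss.
        d_logits = (P - Y) / n
        dW2, db2 = d_logits @ H.T, d_logits.sum(axis=1, keepdims=True)
        d_hidden = (W2.T @ d_logits) * (1.0 - H ** 2)  # derivative of tanh
        dW1, db1 = d_hidden @ Z.T, d_hidden.sum(axis=1, keepdims=True)
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return W1, b1, W2, b2

def predict(params, Z):
    """Class index with the highest output score for each column of Z."""
    W1, b1, W2, b2 = params
    return np.argmax(W2 @ np.tanh(W1 @ Z + b1) + b2, axis=0)

# Synthetic stand-in for PCA-weighted data: 100 features x 200 samples, 2 classes.
rng = np.random.default_rng(1)
y = rng.integers(0, 2, size=200)
Z = rng.normal(size=(100, 200))
Z[0] += 3.0 * (2 * y - 1)                            # first feature separates the classes
params = train_ann(Z, y, n_classes=2)
train_accuracy = np.mean(predict(params, Z) == y)
```

With 100 inputs the first weight matrix is 14 x 100. For raw 32x32 pixels it would be 14 x 1024, so each forward and backward pass through that layer needs roughly ten times fewer multiply-accumulates.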

Experiment Result for Face Dataset using ANN
- Original ANN, 500 iterations: elapsed time 78.54 s
- Train by Weight (PCA) - ANN, 500 iterations: elapsed time 27.81 s (2.8x speedup)

Application: TBW-CNN (Convolutional Neural Network)

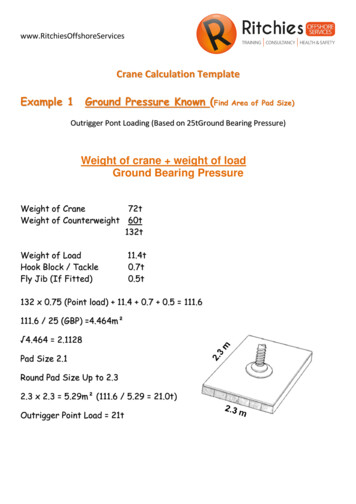
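A smaller input speeds up a CNN, which can be illustrated by counting the multiply-accumulate (MAC) operations of one convolution filter. The sketch assumes a single-channel 5x5 kernel with "valid" padding. The 5x5 kernel size appears on the Future Works slide, but the full TBW-CNN architecture is not given here. `conv2d_valid` and `conv_macs` are hypothetical helpers.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Single-channel 'valid' 2-D cross-correlation, as computed by a CNN layer."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def conv_macs(h, w, k):
    """Multiply-accumulates for one k x k filter over an h x w input (valid padding)."""
    return (h - k + 1) * (w - k + 1) * k * k

rng = np.random.default_rng(0)
kernel = rng.random((5, 5))
image_32 = rng.random((32, 32))                   # original input size
image_10 = rng.random((10, 10))                   # PCA-reduced "weighted image"
full = conv2d_valid(image_32, kernel)             # shape (28, 28)
reduced = conv2d_valid(image_10, kernel)          # shape (6, 6)
mac_ratio = conv_macs(32, 32, 5) / conv_macs(10, 10, 5)  # 19600 / 900
```

For this one layer, the reduction in MACs is about 22x. Measured training time also depends on the other layers, the number of iterations to converge, and framework overhead, so this ratio is not a prediction of the timings below.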

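Results: TBW (PCA) - CNN
Achieved an 18x speedup in time to converge, with about 1% accuracy loss (test error 4.4% vs. 3%).

| Model | Test error | Time for 100 iter. | Time for converging | Percentage of CNN 32x32 converging time |
|---|---|---|---|---|
| PCA-CNN 10x10 | 4.4% | 120.7 s | 32.8 s | 5.45 % |
| PCA-CNN 12x12 | 4.67% | 193.6 s | 83.2 s | 13.82 % |
| PCA-CNN 14x14 | 5.33% | 247.3 s | 117.9 s | 19.58 % |
| PCA-CNN 16x16 | 5.78% | 289.7 s | 170.1 s | 28.25 % |
| CNN 32x32 | 3% | 946.6 s | 602.2 s | 100 % |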
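The speedups and percentages quoted on the results slides follow directly from the reported timings. This short check recomputes them. The helper names are ours.

```python
def speedup(baseline_s: float, reduced_s: float) -> float:
    """How many times faster the reduced-input run is than the baseline."""
    return baseline_s / reduced_s

def percent_of(reduced_s: float, baseline_s: float) -> float:
    """Reduced-input time as a percentage of the baseline time."""
    return 100.0 * reduced_s / baseline_s

# Face dataset, ANN, 500 iterations (seconds).
print(f"TBW-ANN: {speedup(78.54, 27.81):.1f}x")

# Time to converge (seconds); the baseline is the CNN on 32x32 inputs.
CNN_32x32 = 602.2
for size, t in [("10x10", 32.8), ("12x12", 83.2), ("14x14", 117.9), ("16x16", 170.1)]:
    print(f"PCA-CNN {size}: {speedup(CNN_32x32, t):.1f}x, {percent_of(t, CNN_32x32):.2f}%")
```

The output reproduces the 2.8x ANN speedup and the percentage column of the table. For PCA-CNN 10x10 it gives 18.4x, which the slide rounds to 18x.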

Discussion: Machine learning assisting humans
- Interpretable machine learning
Source: Pirracchio et al., “Big data and targeted machine learning in action to assist medical decision in the ICU,” Anaesthesia Critical Care & Pain Medicine, Volume 38, Issue 4, August 2019.

Discussion: Interpretability
[Figure panels: features from PCA; 1st convolution layers; 1st convolution layer with PCA features.]
Sources: MIT-CBCL Database: recognition-database.html; S. Theodoridis and K. Koutroumbas, Pattern Recognition (4th edition); Matthias Scholz, dissertation, 2006.

Discussion: Interpretability
- Weighted images are hard to interpret.
[Figure: CBCL face images and CBCL not-a-face images, each shown with their 20 x 20 and 10 x 10 weighted images.]
Sources: MIT-CBCL Database: recognition-database.html; S. Theodoridis and K. Koutroumbas, Pattern Recognition (4th edition); Matthias Scholz, dissertation, 2006.

Future Work: Subsampling before the PCA stage
[Figure: proposed pipeline with a down-sampling stage, a PCA sampling stage, and a training and back-propagation stage. Labels: deep CNN, 1st hidden layer, 5x5 kernel, convolution, subsampling; PCA reduces the feature number to 1024; reshape to 32x32; reduced 10x10 weighted image; convolution.]
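A subsampling step can be placed in front of the PCA stage, as sketched below under several assumptions:

- Plain 2x2 average pooling stands in for the slide's convolution-and-subsampling stage.
- The inputs are hypothetical 64x64 images, so pooling yields 32x32 = 1024 features before PCA keeps 100 (one 10x10 weighted image).
- The function names are ours.

```python
import numpy as np

def avg_pool_2x2(images):
    """2x2 average pooling of a batch (n, h, w); h and w must be even."""
    n, h, w = images.shape
    return images.reshape(n, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def subsample_then_pca(images, k):
    """Pool each image, stack them as columns, and keep the top-k PCA coefficients."""
    pooled = avg_pool_2x2(images)                  # (n, h/2, w/2)
    X = pooled.reshape(len(pooled), -1).T          # (h*w/4, n), one column per sample
    Xc = X - X.mean(axis=1, keepdims=True)         # center each feature
    U, _, _ = np.linalg.svd(Xc, full_matrices=False)
    return U[:, :k].T @ Xc                         # (k, n) weighted data

rng = np.random.default_rng(0)
images = rng.random((150, 64, 64))                 # hypothetical 64x64 inputs
Z = subsample_then_pca(images, k=100)              # 64x64 -> 32x32 (1024) -> 100 features
```

Pooling first shrinks the matrix that the SVD has to factor by a factor of four in this example, which is where the extra saving would come from.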

Future Work: Embedded Machine Learning
- Collaboration with 12-15 Molecular Diagnostics
- Rapid saliva COVID-19 test device: completes in 20 minutes
Source: https://www.12-15mds.com/veralize

Future Work: Embedded Machine Learning
- We want to develop a model for the test channel, a composite of carbon nanotubes and nano-graphites,
- and perform pattern recognition for positive results.
Source: https://www.12-15mds.com/veralize

Future Work: Embedded Machine Learning
- This pipeline can be used for fast machine learning applications on reduced data sets, which is essential for rapid saliva COVID-19 tests.
Source: https://www.12-15mds.com/veralize

Conclusion
- We proposed Training by Weight (TBW), an algorithmic approach to accelerating machine learning by combining a linear and a non-linear classifier.
- This simple idea accelerated the training of existing machine learning and deep learning applications by up to 18 times (time to converge, PCA-CNN 10x10 vs. CNN 32x32).

Acknowledgement
- This project was initiated with the generous support of R-SURF (Rose-Hulman Summer Undergraduate Research Fellowships) and continued as an independent study during the academic year.
- Collaboration with 12-15 Molecular Diagnostics.

Thank you! Any questions?
Image courtesy of Rose-Hulman Admissions
Michael K. Jo, PhD, Assistant Professor: jo@rose-hulman.edu
Xingheng Lin, Senior ECE student: linx2@rose-hulman.edu

Copyright Notice
This presentation in this publication was presented as a tinyML Talks webcast. The content reflects the opinion of the author(s) and their respective companies. The inclusion of presentations in this publication does not constitute an endorsement by tinyML Foundation or the sponsors. There is no copyright protection claimed by this publication. However, each presentation is the work of the authors and their respective companies and may contain copyrighted material. As such, it is strongly encouraged that any use reflect proper acknowledgement to the appropriate source. Any questions regarding the use of any materials presented should be directed to the author(s) or their companies. tinyML is a registered trademark of the tinyML Foundation.
www.tinyML.org
