
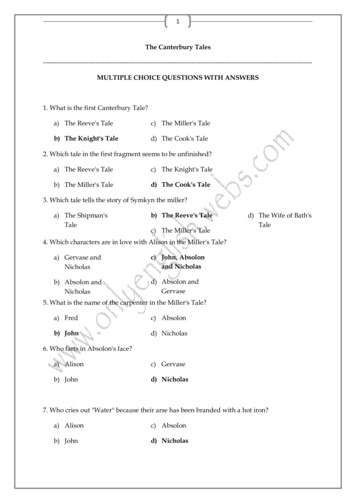

A Tale of Two Systems: Using Containers to Deploy HPC Applications on Supercomputers and Clouds

Andrew J. Younge, Kevin Pedretti, Ryan E. Grant, Ron Brightwell

Center for Computing Research, Sandia National Laboratories*

Email: {ajyoung, ktpedre, regrant, rbbrigh}@sandia.gov

Abstract: Containerization, or OS-level virtualization, has taken root within the computing industry. However, container utilization and its impact on performance and functionality within High Performance Computing (HPC) is still relatively undefined. This paper investigates the use of containers with advanced supercomputing and HPC system software. With this, we define a model for parallel MPI application DevOps and deployment using containers to enhance development effort and provide container portability from laptop to clouds or supercomputers. In this endeavor, we extend the use of Singularity containers to a Cray XC-series supercomputer. We use the HPCG and IMB benchmarks to investigate potential points of overhead and scalability with containers on a Cray XC30 testbed system. Furthermore, we also deploy the same containers with Docker on Amazon’s Elastic Compute Cloud (EC2), and compare against our Cray supercomputer testbed. Our results indicate that Singularity containers operate at native performance when dynamically linking Cray’s MPI libraries on a Cray supercomputer testbed, and that while Amazon EC2 may be useful for initial DevOps and testing, scaling HPC applications better fits supercomputing resources like a Cray.

I. INTRODUCTION

Containerization has emerged as a new paradigm for software management within distributed systems.
This is due in part to the ability of containers to reduce developer effort and involvement in deploying custom user-defined software ecosystems on a wide array of computing infrastructure.

Originating with a number of important additions to the UNIX chroot directive, recent container solutions like Docker [1] provide a new methodology for software development, management, and operations that many of the Internet’s most predominant systems use today [2]. Containers enable developers to specify the exact way to construct a working software environment for a given code. This includes the base operating system type, system libraries, environment variables, third party libraries, and even how to compile their application at hand. Within the HPC community, there exists a steady diversification of different hardware and system architectures, coupled with a number of novel software tools for parallel computing as part of the U.S. Department of Energy’s Exascale Computing Project [3]. However, system software for such capabilities on extreme-scale supercomputing resources needs to be enhanced to more appropriately support these types of emerging software ecosystems.

*Sandia National Laboratories is a multimission laboratory managed and operated by National Technology and Engineering Solutions of Sandia, LLC, a wholly owned subsidiary of Honeywell International, Inc., for the U.S. Department of Energy’s National Nuclear Security Administration under contract DE-NA0003525.

Within core algorithms and applications development efforts at Sandia, there is an increased reliance on testing, debugging, and performance portability for mission HPC applications. This is first due to the heterogeneity that has flourished across multiple diverse HPC platforms, from many-core architectures to GPUs. This heterogeneity has led to increased complexity when deploying new software on large-scale HPC platforms, whereby the path from code development to large-scale deployment has grown considerably.
Furthermore, development efforts for codes that target extreme-scale supercomputing facilities, such as the Trinity supercomputer [4], are often delayed by long queue wait times that preclude small-scale testing and debugging. This leaves developers looking for small-scale testbeds or external resources for HPC development & operations (DevOps).

Most containerization today is based on Docker and the Dockerfile manifests used to construct container images. However, deploying container technologies like Docker directly for use in an HPC environment is neither trivial nor practical. Running Docker on an HPC system brings forth a myriad of security and usability challenges that are unlikely to be solved by minor changes to the Docker system. While any container solution will add a useful layer of abstraction within the software stack, such abstraction can also introduce overhead. This is a natural trade-off within systems software, and a careful and exacting performance evaluation is needed to discover and quantify these trade-offs with the use of new container abstractions.

This manuscript describes a multi-faceted effort to address the use of containerization for HPC workloads, and makes a number of key contributions in this regard. First, we investigate container technologies and the potential advantages and drawbacks thereof within an HPC environment, with a review of current options. Next, we explain our selection of Singularity, an HPC container solution, and provide a model for DevOps for Sandia’s emerging HPC applications, as well as describe the experiences of deploying a custom system software stack based on Trilinos [5] within a container. We then provide the first known published effort demonstrating Singularity on a Cray XC-series supercomputer. Given this implementation, we conduct an evaluation of the performance and potential overhead of utilizing Singularity containers on a Sandia Cray testbed system, finding that when vendor MPI libraries are dynamically linked, containers run at native performance. Given the new DevOps model and the ability to deploy parallel computing codes on a range of resources, this is the first paper that makes use of containers to directly compare a Cray XC-series testbed system against running on the Amazon Elastic Compute Cloud (EC2) service to explore each computing resource and the potential limits of containerization.

II. BACKGROUND

Throughout the history of computing, supporting user-defined environments has often been a desired feature set on multi-user computing systems. The ability to abstract hardware or software as well as upper-level APIs has had profound effects on the computing industry. As far back as the 1970s, IBM pioneered the use of virtualization on the IBM System 370 [6]; however, there have been many iterations since. Beginning last decade, the use of binary translation and specialized instructions on x86 CPUs has enabled a new wave of host ISA virtualization whereby users could run specialized virtual machines (VMs) on demand. This effort has spawned what is now cloud computing, with some of the largest computational systems today running VMs.

While VMs have seen a meteoric rise in the past decade, they are not without limitations. Early virtualization efforts suffered from poor performance and limited hardware flexibility [7], which dampened their use within HPC initially. In 2010, Jackson et al. compared HPC resources to Amazon EC2 instances [8] to illustrate limitations of HPC on public clouds. However, many of these limitations have been mitigated [9] and the performance issues have diminished with several virtualization technologies, including Palacios [10] and KVM [11].
Concurrently, there has also emerged the opportunity for OS-level virtualization, or containerization, within the HPC system software spectrum.

Containerization, or OS-level virtualization, introduces a notion whereby all user-level software, code, settings, and environment variables are packaged in a container and can be transported to different endpoints. This approach creates a method of providing user-defined software stacks in a manner that is similar to, yet different from, virtual machines. With containers, there is no kernel, no device drivers, and no abstracted hardware included. Instead, containers are isolated process, user, and filesystem namespaces that exist on top of a single OS kernel, most commonly Linux. Containers, like VM usage in cloud computing, can multiplex underlying hardware using Linux cgroups to implement resource limits.

Containers rely heavily on the underlying host OS kernel for resource isolation, provisioning, control, security policies, and user interaction. While this approach has caveats, including reduced OS flexibility (only one OS kernel can run) and an increased risk of security exploits, it also allows for a more nimble, high-performing environment. This is because there is no boot sequence for containers, but rather just a simple process clone and system configuration. Furthermore, containers generally operate at near-native speeds when there is only one container on a host [12]. Due to similar user perspectives, there are a number of studies that compare the performance and feature sets of containers and virtual machines [13], [14], [12], [15], often with containers exhibiting near-native performance.

The use of containers has seen a recent ascent in popularity within industry, much like VMs and cloud computing a few years prior.
Containers have been found to be useful for packaging and deploying small, lightweight, loosely-coupled services. Often, each container runs as a single process micro-service [16], instead of running entire software suites in a conventional server architecture. For instance, deployed environments often run special web service containers, which are separated from database containers, or logging containers and separate data stores. While these containers are separate, they are often scheduled in a way whereby resources are packed together in dense configurations for collective system efficiency by relying upon Linux cgroups for resource sharing and brokering. The ability of these containers to be created using reusable and extensible configuration manifests, such as Dockerfiles, along with their integration into Git source and version control management, further adds to the appeal of using containers for advanced DevOps [17].

III. CONTAINERS FOR HPC

As containers gain popularity within industry, their applicability towards supercomputing resources is also considered. A careful examination is necessary to evaluate the fit of such system software to determine the components of containers that can have the largest impact on HPC systems in the near future. Current commodity containers could provide the following positive attributes:

- BYOE (Bring-Your-Own-Environment): Developers define the operating environment and system libraries in which their application runs.
- Composability: Developers explicitly define how their software environment is composed of modular components as container images, enabling reproducible environments that can potentially span different architectures [18].
- Portability: Containers can be rebuilt, layered, or shared across multiple different computing systems, potentially from laptops to clouds to advanced supercomputing resources.
- Version Control Integration: Containers integrate with revision control systems like Git, including not only build manifests but also complete container images using container registries like Docker Hub.

While utilizing industry standard open-source container technologies within HPC could be feasible, there are a number of potential caveats that are either incompatible or unnecessary on HPC resources. These include the following attributes:

- Overhead: While some minor overhead is acceptable through new levels of abstraction, HPC applications generally cannot incur significant overhead from the deployment or runtime aspects of containers.
- Micro-Services: Micro-services container methodology does not apply to HPC workloads. Instead, one application area per node with multiple processes or threads per container is a better fit for HPC usage models.
- On-node Partitioning: On-node resource partitioning with cgroups is not yet necessary for most parallel HPC applications. While in-situ workload coupling is possible using containers, it is beyond the scope of this work.
- Root Operation: Containers often allow root-level access control to users; however, in supercomputers this is unnecessary and a significant security risk for facilities.
- Commodity Networking: Containers and their network control mechanisms are built around commodity networking (TCP/IP). Most supercomputers utilize custom interconnects that use interfaces that bypass the OS kernel for network operations.

Effectively, these two attribute lists act as selection criteria for investigating containers within an HPC ecosystem. Container solutions that can operate within the realm of HPC while incorporating as many positive attributes and simultaneously avoiding as many negative attributes as possible are likely to be successful technologies for supporting future HPC ecosystems. Due to the popularity of containers and the growing demand for more advanced use cases of HPC resources, we can use these attributes to investigate several potential container solutions for HPC that have been recently created.

1) Docker: It is impossible to discuss containers without first mentioning Docker [1]. Docker is the industry-leading method for packaging, creating, and deploying containers on commodity infrastructure. Originally developed atop Linux Containers (LXC) [12], Docker now has its own libcontainer package that provides namespaces and cgroups isolation. However, between security concerns, root user operation, and the lack of distributed storage integration, Docker is not appropriate for use on HPC resources in its current form.
Yet Docker is still useful for individual container development on laptops and workstations whereby images can be ported to other systems, allowing for root system software construction but specialized deployment elsewhere.

2) Shifter: Shifter is one of the first major projects to bring containerization to an advanced supercomputing architecture, specifically on Cray systems [19]. Shifter, developed by NERSC, enables Docker images to be converted and deployed to Cray XC series compute nodes, specifically the Edison and Cori systems [20]. Shifter provides an image gateway service to store converted container images, which can be provisioned via read-only loopback bind mounts backed by a Lustre filesystem. Shifter takes advantage of chroot to provide filesystem isolation while avoiding some of the other potentially convoluted Docker features such as cgroups. Shifter also can inject a statically linked ssh server into the container to minimize the potential for security vulnerabilities and reduce conflicts with Cray’s existing management tools. Shifter recently included support for GPUs [21], and has been used to deploy Apache Spark big data workloads [22]. While Shifter is an open-source project, the project to date has largely focused on deployment on Cray supercomputer platforms and incorporates implementation-specific characteristics that make porting to separate architectures more difficult.

3) Charliecloud: Charliecloud [23] is an open source implementation of containers developed for the Los Alamos National Laboratory’s production HPC clusters. It allows for the conversion of Docker containers to run on HPC clusters. Charliecloud utilizes user namespaces to avoid Docker’s mode of running root-level services, and the code base is reportedly very small and concise at under 1000 lines of code. However, long-term stability and portability of the Linux kernel namespaces that are used in Charliecloud is undetermined, largely due to the lack of support in various distributions such as Red Hat.
Nevertheless, Charliecloud does look to provide HPC containers built from Docker with ease, and its small footprint leads to minimally invasive deployments.

4) Singularity: Singularity [24] offers another compelling use of containers specifically crafted for the HPC ecosystem. Singularity was originally developed by Lawrence Berkeley National Laboratory, but is now under SingularityWare, LLC. Like many of the other solutions, Singularity allows custom Docker images to be run on HPC compute nodes on demand. Singularity leverages chroot and bind mounts (optionally also OverlayFS) to mount container images and directories, as well as Linux namespaces and mapping of users to any given container without root access. While Singularity’s capabilities initially may look similar to other container mechanisms, it also has a number of additional advantages. First, Singularity supports custom image manifest generation through the definition of Singularity containers, as well as the import of Docker containers on demand. Perhaps more importantly, Singularity wraps all images in a single file that provides easy management and sharing of containers, not only by a given user, but potentially also across different resources. This approach alleviates the need for additional image management tools or gateway services, greatly simplifying container deployment. Furthermore, Singularity is a single-package installation and provides a straightforward configuration system.

With the range of options for providing containerization within HPC environments, we chose to use Singularity containers for a number of reasons. Singularity’s interoperability enables container portability across a wide range of architectures. Users can build dedicated Singularity containers on Linux workstations and utilize the new SingularityHub service. This approach provides relative independence from Docker, while simultaneously still supporting importing Docker containers directly.
This balanced approach allows for additional flexibility with less compromise from a user feature-set standpoint. Singularity’s implementation also solves a number of issues when operating with HPC resources. These include the omission of cgroups and root-level operation, the ability to bind or OverlayFS mount system libraries or distributed filesystems within containers, and support for user namespaces that integrate with existing HPC user control mechanisms.

IV. CONTAINER BUILD, DEPLOYMENT, & DEVOPS

Building Docker containers with Sandia mission applications on individual laptops, workstations, or servers can enable rapid development, testing, and deployment times. However, many HPC applications implement bulk synchronous parallel algorithms, which necessitate a multi-node deployment for effective development and testing. This situation is complicated by the growing heterogeneity of emerging HPC systems architectures, including GPUs, interconnects, and new CPU architectures. As such, there is an increased demand on performance portability within algorithms, although DevOps models have yet to catch up.

A. Container Deployment and DevOps

It is often impractical for applications to immediately move to large-scale supercomputing resources for DevOps and/or testing. Due to their high demand, HPC resources often have long batch queues of job requests, which can delay development time. For instance, developers may just want to ensure the latest build passes some acceptance tests or evaluate a portion of the code to test completeness. Current solutions include the deployment of smaller testbed systems that are a miniature version of large-scale supercomputers, but dedicated to application development. Another solution is to leverage cloud infrastructure, potentially both public and private IaaS resources or clusters, to do initial DevOps, including nightly build testing or architecture validation. As such, our container deployment and DevOps model, described below, enables increased usability for all of these potential solutions.

Fig. 1: Deploying Docker containers across multiple HPC systems, including Amazon EC2, a commodity cluster, and a Cray supercomputer

This paper provides a containerized toolchain and deployment process, as illustrated in Figure 1, which architects a methodology for deploying custom containers across a range of computing resources. The deployment initially includes local resources such as a laptop or workstation, often using commodity Docker containers. First, the container Dockerfile and related build files are saved into a Git code project. Due to security concerns surrounding some applications, this container model utilizes an internal GitLab repository system at Sandia. GitLab provides an internal repo for code version control, similar in functionality to GitHub, except that all code is isolated in internal networks. With GitLab, we can also push entire Docker images into the GitLab container registry service. While this approach avoids many potential pitfalls when exporting code, this process can also be replicated through the use of GitHub and Docker Hub for open source projects. Here, developers focus on code changes and package them into a local container build using Docker on their laptop, knowing their software can be distributed to HPC resources.

Once a developer has uploaded a container image to the registry, it can then be imported from a number of platforms. While the remainder of this paper focuses on two deployment methods, a Cray XC supercomputer with Singularity and an EC2 Docker deployment, we can also include a standard Linux HPC cluster through Singularity. Initial performance evaluations of Singularity on the SDSC Comet cluster are promising [25]. We expect more Linux clusters to include Singularity, especially given the rise of the OpenHPC software stack that now includes the Singularity container service. As of Singularity 2.3, importing containers from Docker can be done without elevated privileges, which removes the requirement for an intermediary workstation to generate Singularity images. Therefore, Docker containers can be imported or pulled from a repository on demand and deployed on a wide array of Singularity deployments, or even left as Docker containers and deployed on commodity systems.

B. Container Builds

Under this DevOps model, users first work with container instances from their own laptops and workstations. Here, commodity container solutions such as Docker can be used, which runs on multiple OSs, allowing developers to build code sets targeting Linux on machines not natively running Linux. As Mac or PC workstations are commonplace, the current best practice for localized container development includes the use of Docker, as Singularity is dependent on Linux.

```dockerfile
FROM ajyounge/dev-tpl

# Load MPICH from TPL image
RUN module load mpi
WORKDIR /opt/trilinos

# Download Trilinos
COPY do-configure /opt/trilinos/
RUN wget -nv ce.tar.gz \
    -O /opt/trilinos/trilinos.tar.gz

# Extract Trilinos source file
RUN tar xf /opt/trilinos/trilinos.tar.gz
RUN mv trilinos
RUN mkdir /opt/trilinos/trilinos-build

# Compile Trilinos
RUN /opt/trilinos/do-configure
RUN cd /opt/trilinos/trilinos-build && make -j 3

# Link in Muelu tutorial
RUN ln -s orial
WORKDIR /opt/muelu-tutorial
```

An example of a container development effort of interest is introduced by Trilinos [5]. Above is an excerpt that describes an example Trilinos container build. For our purposes, it serves as an educational tutorial for various Trilinos packages, such as MueLu [26]. The Dockerfile above first describes how we inherit from another container, dev-tpl, which contains Trilinos’ necessary third party libraries, such as NetCDF, and compiler installations. This Dockerfile specifies how to pull the latest Trilinos source code and compile Trilinos using a predetermined configure script. While the example above is simply an abbreviated version, the full version enables developers to specify a particular Trilinos version to build as well as a different configure script to build certain features as desired. As such, we maintain the potential portability within the Dockerfile manifest itself, rather than the built image.

While the Trilinos example serves as a real-world container Dockerfile of interest to Sandia, the model itself is more general. To demonstrate this, we will use a specialized container instance capable of detailed system evaluation throughout the rest of the manuscript. This new container includes the HPCG benchmark application [27] to evaluate the efficiency of running the parallel conjugate gradient algorithm, along with the Intel MPI Benchmark suite (IMB) [28] to evaluate interconnect performance. This container image was based on a standard CentOS 7 install, and both benchmarks were built using the Intel 2017 Parallel Studio, which includes the latest Intel compilers and Intel MPI library. Furthermore, an SSH server is installed and set as the container’s default executable. While SSH is not strictly necessary in cases where Process Management Interface (PMI) compatible batch schedulers are used, it is useful for bootstrapping MPI across a commodity cluster or EC2. As the benchmarks used are open and public, the container has been uploaded to Docker Hub and can be accessed via docker pull ajyounge/hpcg-container.

V. SINGULARITY ON A CRAY

While Singularity addresses a number of issues regarding security, interoperability, and environment portability in an HPC environment, the fact that it is not supported on all supercomputing platforms is a major challenge. Specifically, the Cray XC-series supercomputing platform does not natively support Singularity.
These machines represent the extreme scale of HPC systems, as demonstrated by their prevalence at the highest ranks of the Top500 list [20]. However, Cray systems are substantially different from the commodity clusters for which Singularity was developed. From a system software perspective, there are several complexities with the Cray Compute Node Linux (CNL) environment [29], which is a highly tuned and optimized Linux OS designed to reduce noise and jitter when running parallel bulk synchronous workloads, such as MPI applications. The Cray environment also includes highly tuned and optimized scientific and distributed memory libraries specific to HPC applications. On the Cray XC30, this software stack includes a 3.0.101 Linux kernel, an older Linux kernel that lacks numerous features found on more modern Linux distributions, including support for virtualization and extended commodity filesystems.

In order to use Singularity on a Cray supercomputer, a few modifications were necessary to the CNL OS. Specifically, support for loopback devices and the EXT3 filesystem was added into a modified 3.0.101 kernel. A new Cray OS image is uploaded to the boot system and distributed to compute nodes on the machine. These modifications are only possible with root access on all compute and service nodes, as well as a similar kernel source. This situation means that, for others to use Singularity on a Cray, they too need to make these modifications, or obtain an officially supported CNL OS with the necessary modifications from Cray.

Once Singularity’s packages are built and installed in a shared location, configuration is still necessary to produce an optimal runtime environment. Specifically, we configure Singularity to mount /opt/cray, as well as /var/opt/cray, for each container instance. While the system currently performs all mount operations with bind mounts, the use of OverlayFS in the future would enable a much more seamless transition and likely improve usability.
Otherwise, base directories may be mounted that obscure portions of the existing container filesystem, or special mount points must be created. These bind mounts enable Cray’s custom software stack to be included directly in running container instances, which can have drastic impacts on overall performance for HPC applications.

As all of Sandia’s applications of interest leverage MPI for inter-process communication, utilizing an optimal MPI implementation within containers is a key aspect of a successful deployment. Because containers rely on the same host kernel, the Cray Aries interconnect can be used directly within a container. However, Intel MPI and standard MPICH configurations available at compile time do not support using the Aries interconnect at runtime. In order to leverage the Aries interconnect as well as advanced shared memory intra-node communication mechanisms, we dynamically link Cray’s MPI and associated libraries provided in /opt/cray directly within the container. To accomplish this, a number of Cray libraries need to be added to the link library path within the container. This set includes Cray’s uGNI messaging interface, the XPMEM shared memory subsystem, Cray PMI runtime libraries, the uDREG registration cache, the application placement scheduler (ALPS), and the configured workload manager. Furthermore, some Intel Parallel Studio libraries are also linked in for the necessary dynamic libraries that were used to build the application. While dynamic linking is handled in Cray’s system through aprun and Cray’s special environment variables, these variables need to be explicitly set within a Singularity container. While this can be done at container runtime or via bashrc by setting LD_LIBRARY_PATH, we hope to develop a method for setting environment variables automatically for any and all containers within Singularity, rather than burdening the users with this requirement. Unsurprisingly, the dynamic linking of Cray libraries is also the approach that Shifter takes
Unsurprisingly, the dynamiclinking of Cray libraries is also the approach that Shifter takesautomatically during the creation of Shifter container images.This overlay of the Cray MPI environment into containersis possible due to the application binary interface (ABI)compatibility between Cray, MPICH, and Intel MPI implementations. These MPI libraries can be dynamically linkedand swapped without impact on the application’s functionality.It is worth noting that this is not true with all MPI libraries orother libraries. For instance, OpenMPI uses different internalinterfaces and as such is not compatible with Cray’s MPI.Future research and standardization efforts are needed tomore explicitly define and provide ABI compatibility acrosssystems, as binary compatibility is a key aspect to ensuringperformance portability within containers.

VI. RESULTS

A. Two Systems: A Cray Supercomputer and Amazon EC2

linked to leverage vendor-tuned libraries, such as Cray’s MPI and XPMEM for ABI compatibility. As such, we run the IMB be
